Mastering database management requires a solid understanding of key principles, tools, and techniques. Whether you’re preparing for a certification or enhancing your skills, you need a firm grasp of both the fundamental concepts and the advanced methods used in modern data systems.

Practicing real-world scenarios is an effective way to build confidence and improve problem-solving abilities. By engaging with a variety of tasks and challenges, you can ensure you are well-prepared for any test that assesses your database expertise. Focusing on a range of topics, from basic queries to more complex optimization strategies, will help solidify your command of the material.

In this guide, you will encounter different types of exercises designed to sharpen your abilities and broaden your knowledge. These exercises cover the core areas necessary for success, providing detailed explanations to ensure a deeper understanding of each topic. Whether you’re looking to reinforce your current knowledge or learn new techniques, this material will serve as a valuable resource.

Essential Database Concepts to Master

To excel in database-related assessments, it’s crucial to have a solid foundation in various fundamental topics. Mastering the core areas ensures that you’re able to approach challenges with confidence and precision. These key concepts are frequently tested and form the backbone of practical database work, from designing efficient structures to optimizing performance.

Understanding the structure and relationships within data is essential. Be sure to grasp the principles of data modeling, including normalization, entity relationships, and how data is organized across tables. Additionally, practicing how to write effective queries, including filtering, sorting, and joining tables, will prepare you for more complex tasks.

Another important aspect is the ability to troubleshoot and optimize. Knowing how to identify performance bottlenecks, implement indexing, and ensure the integrity of your data can make a significant difference. Familiarity with data manipulation commands and the proper use of constraints also plays a key role in achieving success.

Understanding Database Fundamentals

To build a strong foundation in data management, it’s essential to first understand the basic principles that govern how databases are structured and used. A clear grasp of these fundamentals will enable you to work more efficiently with data, design more effective systems, and optimize performance. These concepts form the bedrock for all advanced database operations.

Data Organization and Structure

Data in a relational system is stored in tables, each made up of rows and columns. These tables can be related to one another through keys, allowing data to be retrieved and managed effectively. Knowing how to design tables that are logically organized and properly normalized will help reduce redundancy and improve data integrity.

Key Database Operations

Key operations such as inserting, updating, and deleting data are fundamental. Alongside these basic manipulations, understanding how to retrieve data using queries is vital. By learning how to construct SELECT statements, you can filter, sort, and aggregate data to meet specific needs.

| Operation | Purpose |
|---|---|
| INSERT | Adding new data into a table |
| UPDATE | Modifying existing records |
| DELETE | Removing records from a table |
| SELECT | Retrieving specific data from a table |

Having a thorough understanding of these basic operations and the relationships between different data elements is crucial for developing efficient, reliable database systems. By focusing on these core concepts, you can create robust structures that meet various business needs and perform optimally under different conditions.
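The four operations can be tried end to end with a small script. The sketch below is illustrative only: it uses Python's built-in `sqlite3` module with an in-memory database, and the `employees` table and its rows are invented for the example.

```python
import sqlite3

# In-memory SQLite database; the "employees" table is a made-up example.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE employees ("
    "id INTEGER PRIMARY KEY, name TEXT NOT NULL, salary INTEGER NOT NULL)"
)

# INSERT: add new rows.
conn.executemany(
    "INSERT INTO employees (name, salary) VALUES (?, ?)",
    [("Ana", 5000), ("Ben", 4200), ("Cleo", 6100)],
)

# UPDATE: modify an existing row.
conn.execute("UPDATE employees SET salary = 4500 WHERE name = 'Ben'")

# DELETE: remove a row.
conn.execute("DELETE FROM employees WHERE name = 'Cleo'")

# SELECT: filter with WHERE and sort with ORDER BY.
rows = conn.execute(
    "SELECT name, salary FROM employees WHERE salary >= 4000 ORDER BY salary DESC"
).fetchall()

# SELECT with an aggregate function.
average_salary = conn.execute("SELECT AVG(salary) FROM employees").fetchone()[0]

print(rows, average_salary)
```

The same statements should carry over to other relational systems with little or no change, since they use only basic SQL.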

Key Database Concepts for Success

To achieve success in any data management assessment, it’s essential to focus on mastering the core concepts that are frequently tested. A deep understanding of these foundational ideas not only enhances your ability to solve complex problems but also helps in applying the knowledge practically in real-world scenarios. These concepts are the building blocks of efficient database management systems.

One crucial area to focus on is the use of relationships between data elements. Grasping how tables are linked through keys, such as primary and foreign keys, allows for better data organization and retrieval. Additionally, understanding the principles of normalization ensures that the data structure remains efficient and avoids redundancy.

Another vital concept is query optimization. Knowing how to write efficient queries and leverage indexing can drastically improve the performance of data retrieval operations. Techniques such as joining tables and using aggregate functions are frequently tested and vital to master for efficient data handling.

Top Questions on Data Types

Understanding the different types of data used in a relational system is essential for effective database design and manipulation. Data types determine how data is stored and the operations that can be performed on it. Mastering these concepts ensures that data is both accurate and efficient to process.

Common Numeric Data Types

Numeric types are among the most fundamental. They store whole numbers or floating-point values, depending on the precision needed. INT holds exact whole numbers, while FLOAT and DOUBLE hold approximate values at single and double precision, so the three differ in memory usage, range, and accuracy.
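The trade-off between these types can be seen without a database. The sketch below assumes a 4-byte INT and IEEE 754 single and double precision for FLOAT and DOUBLE, which is how MySQL defines them; other systems may use different sizes. The `round_trip` helper is a name introduced here for illustration.

```python
import struct

# Byte widths of the encodings assumed for each SQL type (see lead-in).
int_bytes = struct.calcsize("<i")     # 32-bit signed integer
float_bytes = struct.calcsize("<f")   # IEEE 754 single precision
double_bytes = struct.calcsize("<d")  # IEEE 754 double precision

# Largest value a signed integer of that width can hold.
int_max = 2 ** (8 * int_bytes - 1) - 1


def round_trip(value, fmt):
    """Store a Python float in the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


as_float = round_trip(0.1, "<f")    # loses precision in 4 bytes
as_double = round_trip(0.1, "<d")   # Python floats are already doubles

print(int_bytes, float_bytes, double_bytes, int_max)
print(as_float == 0.1, as_double == 0.1)
```

The single-precision round trip no longer compares equal to `0.1`, which is why approximate types are a poor fit for values such as currency amounts.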

String and Date-Time Types

String data types store text-based information, from short codes to long free-form content. CHAR stores fixed-length strings, VARCHAR stores variable-length strings up to a declared maximum, and TEXT is intended for long text; choosing among them is key to managing storage effectively. Similarly, DATE stores a calendar date only, while DATETIME stores a date together with a time of day, so pick the one that matches the precision your data needs.

Optimizing Queries for Better Performance

Optimizing database queries is essential for ensuring fast data retrieval, especially when working with large datasets. A well-optimized query can significantly improve the performance of your system, reducing both response time and resource consumption. In many assessments, being able to optimize queries effectively is a critical skill that demonstrates your understanding of efficient data handling.

Techniques for Query Optimization

There are several strategies to improve the speed of your queries. One of the most important techniques is using indexes on frequently queried columns. Indexing helps speed up data retrieval by allowing the database engine to locate data faster without scanning entire tables. Additionally, restructuring your queries to avoid unnecessary subqueries and joins can enhance performance, especially when dealing with large amounts of data.

Common Pitfalls to Avoid

It’s also crucial to avoid common mistakes that can negatively impact performance. For instance, using SELECT * retrieves all columns from a table, even if only a few are needed, which can lead to unnecessary overhead. Another pitfall is failing to limit the number of rows returned by the query, especially in cases where only a subset of data is required.

| Optimization Technique | Benefit |
|---|---|
| Using Indexes | Improves search speed by narrowing the search space |
| Avoiding Unnecessary Subqueries | Can reduce execution time by simplifying query structure |
| Selecting Specific Columns | Minimizes data retrieval overhead |
| Limiting Row Results | Reduces memory usage and speeds up processing |

By understanding and applying these optimization strategies, you can greatly improve the performance of your queries and demonstrate a strong grasp of efficient data management in assessments and real-world scenarios.
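One way to check whether an index is actually used is to ask the database for its query plan. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module; the `orders` table, the index name `idx_orders_customer`, and the `plan` helper are assumptions made for the example, and the plan text differs between database systems and versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL, note TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total, note) VALUES (?, ?, ?)",
    [(i % 100, i * 1.5, "n/a") for i in range(1000)],
)

# Select only the needed columns and cap the number of rows returned.
query = "SELECT id, total FROM orders WHERE customer_id = ? ORDER BY id LIMIT 5"


def plan(sql):
    """Return SQLite's plan description (last column of each plan row)."""
    cursor = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,))
    return " | ".join(row[-1] for row in cursor)


plan_before = plan(query)  # no index yet: the table is scanned
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan(query)   # the index is used to find matching rows

rows = conn.execute(query, (42,)).fetchall()
print(plan_before)
print(plan_after)
print(rows)
```

Comparing the two plan strings shows the change from a full scan to an index search for the same query text.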

Practice Joins for Better Results

Mastering the art of combining data from multiple tables is a critical skill for anyone working with relational databases. Joins allow you to retrieve related information from different sources, enhancing the power and flexibility of your queries. By practicing various join techniques, you can unlock the full potential of your database and make your data retrieval process more efficient.

Types of Joins to Focus On

There are several types of joins, each serving a unique purpose depending on the data relationships. The most common types include INNER JOIN, which returns only the matching records between tables, LEFT JOIN, which includes all records from the left table and matching ones from the right, and RIGHT JOIN, which does the opposite. Understanding when and how to use these joins is essential for crafting effective queries.

Best Practices for Using Joins

When working with joins, it’s important to consider performance. Joining too many tables or using inefficient join conditions can lead to slow queries. To optimize, always make sure the join columns are indexed and avoid unnecessary joins by filtering early in your query. Additionally, using aliasing for tables and columns can improve query readability and reduce complexity.
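The difference between INNER JOIN and LEFT JOIN is easiest to see on a tiny data set. This is a minimal sketch using Python's `sqlite3` module; the `customers` and `orders` tables and their contents are invented for illustration, and table aliases (`c`, `o`) are used as suggested above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total INTEGER);
INSERT INTO customers VALUES (1, 'Ana'), (2, 'Ben'), (3, 'Cleo');
INSERT INTO orders VALUES (10, 1, 50), (11, 1, 20), (12, 2, 70);
""")

# INNER JOIN: only customers that have at least one matching order.
inner_rows = conn.execute("""
    SELECT c.name, o.total
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name, o.id
""").fetchall()

# LEFT JOIN: every customer; order columns are NULL when nothing matches.
left_rows = conn.execute("""
    SELECT c.name, o.total
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.name, o.id
""").fetchall()

print(inner_rows)
print(left_rows)
```

Cleo has no orders, so she appears only in the LEFT JOIN result, with `None` (SQL NULL) in place of an order total.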

Common Functions You Should Know

Understanding the essential functions available in a relational database system can significantly enhance your ability to manipulate and retrieve data efficiently. These functions allow you to perform calculations, format data, and handle text and date values with ease. Familiarity with the most commonly used functions is key to mastering data management tasks and improving your querying skills.

String Functions

String manipulation is a vital aspect of working with textual data. Functions such as CONCAT() to combine strings, LENGTH() to measure the length of a string, and SUBSTRING() to extract parts of a string are essential for many common tasks. Additionally, the UPPER() and LOWER() functions allow you to standardize text formatting, which is particularly useful for comparisons.

Date and Time Functions

Handling dates and times is another critical area, especially for applications that involve scheduling or logging events. In MySQL, NOW() returns the current date and time, DATE_FORMAT() changes the display format, and DATEDIFF() calculates the difference between two dates. Other database systems provide equivalent functions, often under different names.
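Function names differ between database systems, so the sketch below should be read as an approximation. It runs on SQLite through Python's `sqlite3` module and swaps in SQLite equivalents where needed: the `||` operator stands in for CONCAT(), SUBSTR() for SUBSTRING(), `datetime('now')` for NOW(), `strftime()` for DATE_FORMAT(), and a `julianday()` subtraction for DATEDIFF(). The literal values are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# String functions (|| is SQLite's concatenation operator).
full_name, name_length, prefix, shouted, lowered = conn.execute("""
    SELECT 'Ada' || ' ' || 'Lovelace',
           LENGTH('Lovelace'),
           SUBSTR('Lovelace', 1, 4),
           UPPER('ada'),
           LOWER('ADA')
""").fetchone()

# Date and time functions, using SQLite's names.
current_timestamp, formatted, days_between = conn.execute("""
    SELECT datetime('now'),
           strftime('%d/%m/%Y', '2024-03-10'),
           CAST(julianday('2024-03-10') - julianday('2024-03-01') AS INTEGER)
""").fetchone()

print(full_name, name_length, prefix, shouted, lowered)
print(current_timestamp, formatted, days_between)
```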

Database Normalization Explained

Database normalization is the process of organizing data in a way that reduces redundancy and removes undesirable dependencies between columns. By structuring data into multiple related tables, normalization helps to improve data integrity and makes the data easier to maintain. This process is essential for designing efficient and scalable databases, ensuring that data is stored in a logical and systematic manner.

Key Benefits of Normalization

- Reduces Data Redundancy: By breaking data into smaller, related tables, it prevents unnecessary repetition of information.

- Improves Data Integrity: Ensures that updates, deletions, and insertions are consistent across the entire database.

- Can Improve Performance: Less duplicated data means smaller tables and cheaper updates, although read queries may need additional joins to reassemble the data.

Normalization Stages (Normal Forms)

Normalization typically involves several stages, each aimed at removing specific types of redundancy or dependency. The most commonly used normal forms are:

- First Normal Form (1NF): Eliminates repeating groups of columns and ensures that each column contains atomic (indivisible) values.

- Second Normal Form (2NF): Removes partial dependencies, where non-key columns depend only on part of a composite primary key.

- Third Normal Form (3NF): Removes transitive dependencies, where non-key columns depend on other non-key columns.

- Boyce-Codd Normal Form (BCNF): A stricter version of 3NF that eliminates remaining anomalies by requiring that every determinant is a candidate key.

By applying these normalization techniques, you ensure that the database design is efficient, easy to maintain, and free from unnecessary complexities that could impact performance and data consistency.
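As a rough illustration of removing a transitive dependency (the 3NF step), the sketch below splits a flat order table into `customers` and `orders`. All table and column names are hypothetical, and the example assumes that a customer's name identifies the customer, which would not hold in real data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Before: the customer's city is repeated on every order row.
CREATE TABLE orders_flat (
    order_id INTEGER PRIMARY KEY,
    customer_name TEXT,
    customer_city TEXT,
    total INTEGER
);
INSERT INTO orders_flat VALUES
    (1, 'Ana', 'Lisbon', 50), (2, 'Ana', 'Lisbon', 20), (3, 'Ben', 'Oslo', 70);

-- After: customer facts live in one place and orders refer to them by key.
CREATE TABLE customers (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    city TEXT NOT NULL
);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total INTEGER NOT NULL
);
INSERT INTO customers (name, city)
    SELECT DISTINCT customer_name, customer_city FROM orders_flat;
INSERT INTO orders (id, customer_id, total)
    SELECT f.order_id, c.id, f.total
    FROM orders_flat AS f
    JOIN customers AS c ON c.name = f.customer_name;
""")

# Changing a city touches every matching order row in the flat design...
flat_changed = conn.execute(
    "UPDATE orders_flat SET customer_city = 'Porto' WHERE customer_name = 'Ana'"
).rowcount

# ...but exactly one row in the normalized design.
normalized_changed = conn.execute(
    "UPDATE customers SET city = 'Porto' WHERE name = 'Ana'"
).rowcount

# A join reassembles the original view of the data.
joined = conn.execute("""
    SELECT o.id, c.name, c.city
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    ORDER BY o.id
""").fetchall()

print(flat_changed, normalized_changed, joined)
```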

Handling Errors in Database Environments

When working with databases, encountering errors is inevitable. Knowing how to quickly identify, troubleshoot, and resolve them matters most when time is limited. Whether it’s a syntax error, a connection issue, or a constraint violation, having a clear strategy for managing errors is essential for effective data management.

Common Types of Errors

- Syntax Errors: These occur when the query does not conform to the required structure. They are usually easy to spot, as the database will typically provide a description of the problem.

- Connection Issues: Errors that arise when the database cannot be reached, often due to network problems or incorrect configuration settings.

- Constraint Violations: Occur when data entered violates rules defined in the database schema, such as unique constraints, foreign key constraints, or check constraints.

- Timeout Errors: Happen when a query takes longer to execute than the allowed time limit, often due to inefficient query design or a heavily loaded server.

Approaches to Handling Errors

Being proactive in error handling can save valuable time and reduce frustration during critical tasks. Below are some steps to take when facing errors:

- Read the Error Message: Most database systems provide error messages that describe the issue in detail. Understanding these messages is the first step in troubleshooting.

- Check the Query Syntax: Double-check for typos, missing commas, or incorrect keywords. Online documentation or syntax-checking tools can be invaluable in these situations.

- Verify Database Connections: Ensure that the database server is running, and that you have the correct credentials and network access to connect.

- Review Constraints: If data is being rejected, check if the values meet the requirements set by the database schema, such as correct data types, or adherence to foreign key relationships.

By understanding common errors and having effective strategies for resolving them, you can ensure a smoother experience when interacting with databases, especially under pressure.
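In application code, the first two steps often amount to catching the driver's exception and reading its message. The sketch below shows one way to do this with Python's `sqlite3` module, where malformed SQL raises `sqlite3.OperationalError` and constraint violations raise `sqlite3.IntegrityError`. The `users` table and the `run` helper are hypothetical, and other drivers use different exception classes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE)")
conn.execute("INSERT INTO users (email) VALUES ('ana@example.com')")


def run(sql):
    """Run one statement and classify the outcome instead of crashing."""
    try:
        conn.execute(sql)
        return "ok"
    except sqlite3.IntegrityError as exc:
        # Raised for UNIQUE, NOT NULL, CHECK and FOREIGN KEY violations.
        return f"constraint violation: {exc}"
    except sqlite3.OperationalError as exc:
        # Raised for malformed SQL, among other operational problems.
        return f"statement error: {exc}"


results = [
    run("SELEC * FROM users"),                                    # typo in keyword
    run("INSERT INTO users (email) VALUES ('ana@example.com')"),  # duplicate email
    run("INSERT INTO users (email) VALUES ('ben@example.com')"),  # valid insert
]

for line in results:
    print(line)
```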

Advanced Queries for Experts

As you progress in working with relational databases, mastering complex queries becomes essential. These advanced queries enable you to extract, manipulate, and aggregate data in powerful ways, often across multiple tables and conditions. Developing expertise in crafting intricate statements can drastically improve performance and efficiency in data retrieval tasks.

Complex Joins and Subqueries

One of the key skills for experts is the ability to work with joins and subqueries. Using INNER JOIN, LEFT JOIN, and RIGHT JOIN effectively allows you to combine data from various tables while maintaining the integrity of the query. Additionally, subqueries are useful for performing operations that require nested queries, helping you to handle more complex filtering and aggregation tasks.

Subqueries can be used in various ways:

- SELECT subqueries: Placed in the SELECT list to compute a single value for each row of the outer query.

- WHERE subqueries: Used to filter results in the WHERE clause based on the values returned by another query.

- FROM and JOIN subqueries: Act as a temporary (derived) table that the outer query can select from or join against.

Advanced Data Aggregation and Window Functions

When working with large datasets, advanced aggregation and window functions allow for more precise analysis. GROUP_CONCAT() (available in MySQL and SQLite; other systems offer equivalents such as STRING_AGG()) aggregates values from several rows into a single string, while HAVING filters groups after aggregation. Additionally, window functions such as ROW_NUMBER(), RANK(), and LEAD() perform calculations across a set of related rows without collapsing them, enabling detailed analytics and complex reporting.

Commonly used functions:

- Aggregate Functions: SUM(), AVG(), COUNT(), MIN(), MAX() for summarizing data.

- Window Functions: ROW_NUMBER(), NTILE(), CUME_DIST() for ranking and partitioning data.

These techniques provide the ability to solve complex data problems with precision and efficiency, helping to unlock deep insights from large datasets.
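The sketch below combines grouping with HAVING and a few window functions on a small invented `sales` table. It runs on SQLite through Python's `sqlite3` module and assumes SQLite 3.25 or newer, the first version with window function support.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, rep TEXT, amount INTEGER);
INSERT INTO sales (region, rep, amount) VALUES
    ('North', 'Ana', 300), ('North', 'Ben', 500), ('North', 'Cleo', 500),
    ('South', 'Dev', 200), ('South', 'Eli', 100);
""")

# Aggregation: one row per region, keeping only regions above a threshold.
totals = conn.execute("""
    SELECT region, COUNT(*), SUM(amount), GROUP_CONCAT(rep, ', ')
    FROM sales
    GROUP BY region
    HAVING SUM(amount) > 500
    ORDER BY region
""").fetchall()

# Window functions: every row is kept and annotated relative to its region.
ranked = conn.execute("""
    SELECT region, rep, amount,
           ROW_NUMBER() OVER (PARTITION BY region ORDER BY amount DESC, rep) AS row_num,
           RANK()       OVER (PARTITION BY region ORDER BY amount DESC)      AS amount_rank,
           LEAD(amount) OVER (PARTITION BY region ORDER BY amount DESC, rep) AS next_amount
    FROM sales
    ORDER BY region, row_num
""").fetchall()

print(totals)
for row in ranked:
    print(row)
```

Ben and Cleo tie on amount, so RANK() gives both the same rank and skips the next one, while ROW_NUMBER() still numbers them consecutively.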

Indexing Techniques for Performance

Indexing plays a crucial role in optimizing the performance of database queries. By creating an efficient structure that allows quick access to data, indexing significantly reduces the amount of time required for searching, sorting, and filtering records. Understanding the different types of indexing techniques and knowing when to apply them can vastly improve the performance of your queries, especially when working with large datasets.

Types of Indexes

There are several types of indexes that can be created depending on the requirements of the query and the data structure:

- Single Column Indexes: These indexes are created on a single column and are most effective when queries often filter or sort by that column.

- Composite Indexes: Also known as multi-column indexes, these are created on multiple columns and can improve performance when queries use multiple columns in their filters or sorting clauses.

- Unique Indexes: These indexes ensure that no two rows in the table have the same value for the indexed columns, which is useful for maintaining data integrity.

- Full-Text Indexes: These indexes are used for searching large text fields and are especially useful for text-based queries where traditional indexes might not be effective.

- Spatial Indexes: These are used for handling geometric data, such as points, lines, and polygons, making them ideal for applications that require spatial queries.

Best Practices for Indexing

While indexes can greatly improve performance, they should be used wisely to avoid overhead in terms of storage and write operations. Here are some best practices to follow:

- Choose the Right Columns: Index columns that are frequently used in WHERE, JOIN, ORDER BY, and GROUP BY clauses to speed up query performance.

- Avoid Over-indexing: Creating too many indexes can slow down data insertion, updates, and deletions, as each change requires the indexes to be updated.

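- Use Composite Indexes Wisely: While composite indexes can optimize complex queries, they should only include columns that are often queried together. Column order matters: the index helps queries that filter on its leading column or columns, not queries that filter only on a later column.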

- Monitor and Optimize: Regularly check the performance of your queries and indexes. Use tools to analyze query execution plans and adjust indexes based on query patterns.

By applying these indexing techniques, you can significantly enhance the performance of your database and ensure efficient querying even with large volumes of data.
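A short experiment can show how unique and composite indexes behave. The sketch below uses SQLite through Python's `sqlite3` module; the `people` table, the index names, and the `plan` helper are all assumptions for the example, and query planners in other systems may choose differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE people ("
    "id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT, email TEXT, bio TEXT)"
)
# Unique index: doubles as an integrity rule on email.
conn.execute("CREATE UNIQUE INDEX idx_people_email ON people (email)")
# Composite index: last_name is the leading column.
conn.execute("CREATE INDEX idx_people_name ON people (last_name, first_name)")

insert_sql = (
    "INSERT INTO people (first_name, last_name, email) "
    "VALUES ('Ana', 'Silva', 'ana@example.com')"
)
conn.execute(insert_sql)

try:
    conn.execute(insert_sql)  # same email again
    duplicate_rejected = False
except sqlite3.IntegrityError:
    duplicate_rejected = True


def plan(sql, params):
    """Return SQLite's plan description for a query."""
    cursor = conn.execute("EXPLAIN QUERY PLAN " + sql, params)
    return " | ".join(row[-1] for row in cursor)


# Filters on both columns, or on the leading column, can use the index.
plan_full = plan(
    "SELECT bio FROM people WHERE last_name = ? AND first_name = ?", ("Silva", "Ana")
)
plan_prefix = plan("SELECT bio FROM people WHERE last_name = ?", ("Silva",))
# A filter on the second column alone cannot seek into the index.
plan_no_prefix = plan("SELECT bio FROM people WHERE first_name = ?", ("Ana",))

print(duplicate_rejected)
print(plan_full)
print(plan_prefix)
print(plan_no_prefix)
```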

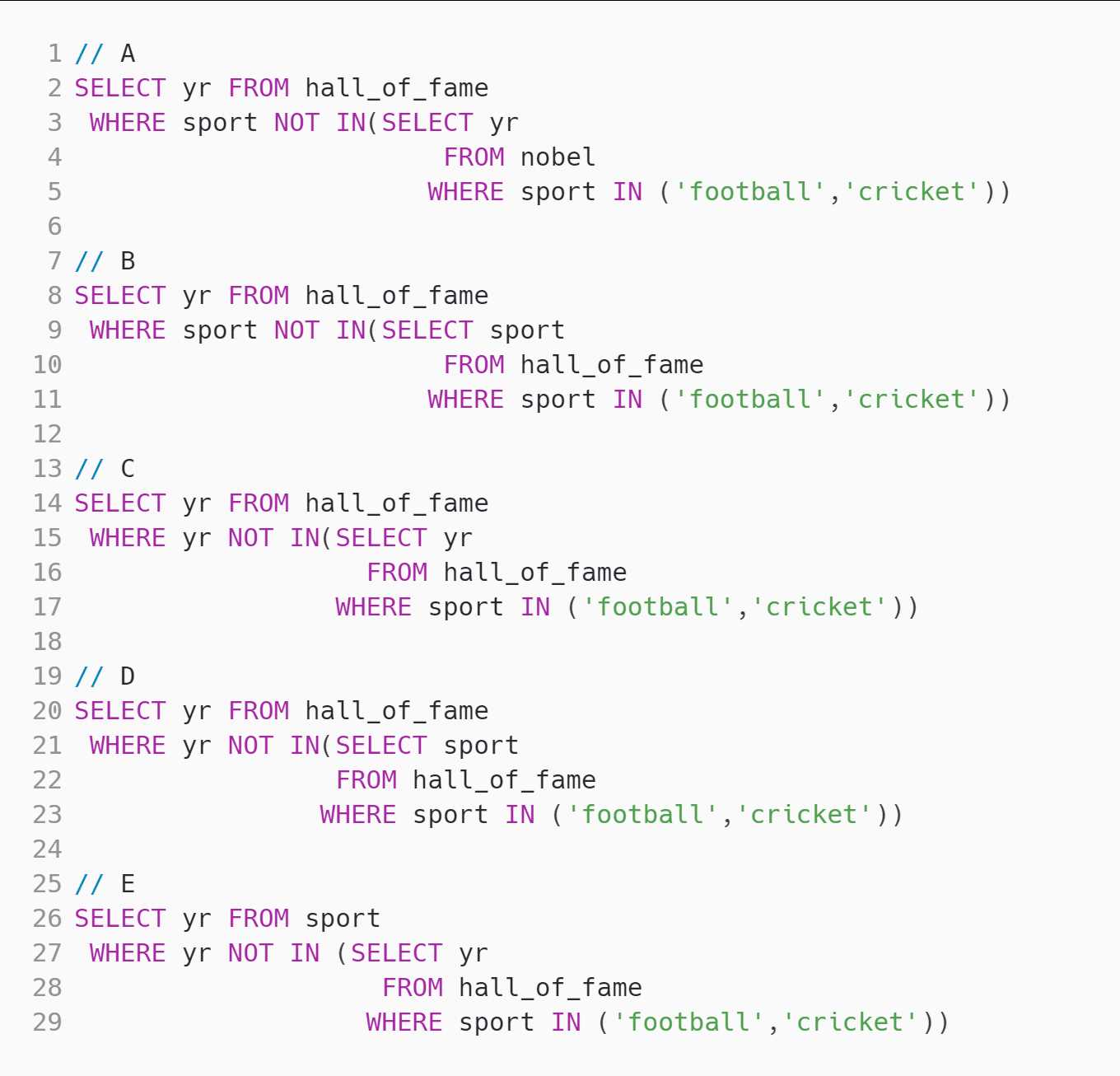

Working with Subqueries in Tests

Subqueries are powerful tools for querying data within another query, allowing complex operations that would be difficult or inefficient in a single statement. By using nested queries, you can break down complex tasks into simpler, manageable components. Understanding how to implement subqueries effectively can greatly enhance your ability to tackle advanced database tasks and solve problems that require multiple steps or conditions.

Types of Subqueries

Subqueries can be used in various places within a query, each serving a different purpose. There are three main types of subqueries:

- Scalar Subqueries: These return a single value and can be used in places where an expression is expected, such as in the SELECT list or WHERE clause.

- Row Subqueries: These return a single row containing one or more columns and are typically compared against a row of values using operators such as = or <>.

- Table Subqueries: These return a set of rows and are typically used in FROM clauses, providing a temporary table for further manipulation or joining.

Common Use Cases for Subqueries

Subqueries can be applied in various contexts to simplify complex operations or create more readable queries:

- Filtering Data: Subqueries are frequently used to filter results based on a set of values returned by another query, helping to refine the results further.

- Aggregating Data: You can use subqueries to calculate aggregations like averages or sums, and then filter or compare the results in the main query.

- Joining Data: A subquery can also act as a temporary table in a JOIN operation, allowing more flexible combinations of data from different sources.

Mastering the use of subqueries will enable you to write more efficient and powerful queries, solving complex data problems with ease.
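The three use cases can be sketched against one small table. The example below uses Python's `sqlite3` module with an invented `employees` table: a scalar subquery compares each salary with the overall average, an IN subquery filters by a set of departments, and a subquery in the FROM clause supplies per-department averages to a join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (id INTEGER PRIMARY KEY, name TEXT, dept TEXT, salary INTEGER);
INSERT INTO employees (name, dept, salary) VALUES
    ('Ana', 'Eng', 6000), ('Ben', 'Eng', 4000),
    ('Cleo', 'Ops', 5000), ('Dev', 'Ops', 3000);
""")

# Scalar subquery: a single value (the overall average) used in WHERE.
above_average = conn.execute("""
    SELECT name FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY name
""").fetchall()

# Subquery returning a set of values, used with IN.
in_top_depts = conn.execute("""
    SELECT name FROM employees
    WHERE dept IN (SELECT dept FROM employees WHERE salary >= 6000)
    ORDER BY name
""").fetchall()

# Table subquery in FROM/JOIN: per-department averages as a derived table.
dept_gap = conn.execute("""
    SELECT e.name, e.salary - d.avg_salary
    FROM employees AS e
    JOIN (SELECT dept, AVG(salary) AS avg_salary
          FROM employees GROUP BY dept) AS d ON d.dept = e.dept
    ORDER BY e.name
""").fetchall()

print(above_average)
print(in_top_depts)
print(dept_gap)
```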

Essentials of Transactions for Exams

Transactions are essential for ensuring data integrity and consistency in a database. They allow multiple operations to be grouped together as a single unit, ensuring that either all operations succeed or none are applied. Mastering the core concepts of transactions is crucial for handling complex scenarios where data consistency and reliability are critical, especially when working with concurrent operations.

Key Concepts of Transactions

To fully understand transactions, it is important to grasp the following concepts:

- ACID Properties: Transactions are governed by the ACID principles (Atomicity, Consistency, Isolation, and Durability), which ensure that database operations are processed reliably.

- Commit: The commit operation permanently saves the changes made during a transaction to the database, ensuring that the results are final and visible to others.

- Rollback: The rollback operation undoes all changes made during the transaction, typically after an error occurs, so that the database is left in a consistent state.

- Savepoints: Savepoints allow you to set intermediate points within a transaction, so you can roll back to a specific point without undoing the entire transaction.

Transaction Control Statements

There are several key SQL statements used to manage transactions:

| Statement | Description |
|---|---|
| BEGIN | Marks the start of a transaction. After this statement, all operations are part of the same transaction until a commit or rollback is issued. |
| COMMIT | Saves all changes made during the transaction and makes them permanent in the database. |
| ROLLBACK | Reverses all changes made during the transaction, restoring the database to its state before the transaction began. |
| SAVEPOINT | Creates a point within the transaction to which you can roll back, without affecting the entire transaction. |

Understanding how to use transactions effectively ensures that data integrity is maintained in even the most complex scenarios, where multiple operations need to be executed as a cohesive unit. Proper knowledge of transaction control is essential for handling various database challenges efficiently.
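The statements in the table can be exercised with a small transfer scenario. This is a sketch under stated assumptions: it uses SQLite through Python's `sqlite3` module, opens the connection with `isolation_level=None` so that BEGIN, COMMIT, and ROLLBACK are issued explicitly, and relies on an invented `accounts` table whose CHECK constraint forbids negative balances.

```python
import sqlite3

# isolation_level=None puts the connection in autocommit mode, so the
# transaction control statements below are sent exactly as written.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.execute("INSERT INTO accounts VALUES ('alice', 100), ('bob', 50)")


def balances():
    """Return the current balances as a dictionary."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))


# 1. Atomicity: the second update fails, so the first one is undone too.
conn.execute("BEGIN")
try:
    conn.execute("UPDATE accounts SET balance = balance + 500 WHERE name = 'bob'")
    conn.execute("UPDATE accounts SET balance = balance - 500 WHERE name = 'alice'")
    conn.execute("COMMIT")
except sqlite3.IntegrityError:
    conn.execute("ROLLBACK")
after_rollback = balances()

# 2. Savepoint: undo only the work done after the savepoint, then commit.
conn.execute("BEGIN")
conn.execute("UPDATE accounts SET balance = balance - 30 WHERE name = 'alice'")
conn.execute("SAVEPOINT before_bonus")
conn.execute("UPDATE accounts SET balance = balance + 999 WHERE name = 'bob'")
conn.execute("ROLLBACK TO SAVEPOINT before_bonus")
conn.execute("COMMIT")
after_commit = balances()

print(after_rollback)
print(after_commit)
```

After the first block both balances are unchanged; after the second, only the update made before the savepoint survives.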

Examining Database Constraints and Keys

Understanding the mechanisms that ensure data integrity and maintain relationships between tables is vital in database management. Constraints and keys serve as foundational tools for enforcing rules and preventing errors in stored data. By mastering these concepts, one can ensure that data remains consistent, valid, and logically connected across various operations.

Types of Constraints

Constraints are rules that are applied to columns in a table to ensure data integrity. These constraints help in maintaining the quality and accuracy of the data entered into the database. Some common types include:

- NOT NULL: Ensures that a column cannot have a NULL value. This constraint guarantees that a specific field always contains data.

- UNIQUE: Ensures that all values in a column are distinct. No two rows can have the same value for a column with a UNIQUE constraint.

- CHECK: Ensures that values in a column satisfy a specific condition. For example, a constraint may require a column to only contain positive numbers.

- DEFAULT: Assigns a default value to a column if no value is provided during data insertion.

- FOREIGN KEY: Enforces referential integrity between two tables by ensuring that a value in one table corresponds to a valid value in another table.

Types of Keys

Keys identify records within a table and link records across tables. They are essential for establishing relationships between tables and ensuring that the data is accessed and manipulated efficiently. The key types include:

- PRIMARY KEY: A column or set of columns that uniquely identifies each row in a table. Each table can have only one primary key.

- FOREIGN KEY: A column or set of columns that establishes a link between two tables by referring to the primary key (or another unique key) of another table. This ensures referential integrity.

- UNIQUE KEY: Ensures that all values in a column are unique, similar to a primary key, but can allow NULL values.

- COMPOSITE KEY: A combination of two or more columns that together uniquely identify a row. This is often used when a single column is insufficient.

By understanding and applying constraints and keys, one can effectively manage data integrity, optimize queries, and maintain reliable relationships between different entities within a database. Mastering these concepts is essential for working with complex data structures and ensuring data consistency.
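The sketch below declares each constraint and key type on hypothetical `departments`, `employees`, and `project_members` tables and then checks that invalid rows are rejected. It uses SQLite through Python's `sqlite3` module; note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`, which is a SQLite-specific detail. The `rejected` helper is a name introduced for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite-specific: enable FK enforcement
conn.executescript("""
CREATE TABLE departments (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE employees (
    id INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE,
    salary INTEGER NOT NULL CHECK (salary > 0),
    status TEXT NOT NULL DEFAULT 'active',
    department_id INTEGER NOT NULL REFERENCES departments(id)
);
CREATE TABLE project_members (
    project_id INTEGER NOT NULL,
    employee_id INTEGER NOT NULL REFERENCES employees(id),
    PRIMARY KEY (project_id, employee_id)   -- composite key
);
INSERT INTO departments (id, name) VALUES (1, 'Eng');
INSERT INTO employees (id, email, salary, department_id)
    VALUES (1, 'ana@example.com', 5000, 1);
INSERT INTO project_members VALUES (7, 1);
""")


def rejected(sql):
    """Return True if the statement violates a constraint."""
    try:
        conn.execute(sql)
        return False
    except sqlite3.IntegrityError:
        return True


insert_employee = "INSERT INTO employees (email, salary, department_id) VALUES "
violations = {
    "NOT NULL": rejected(insert_employee + "(NULL, 10, 1)"),
    "UNIQUE": rejected(insert_employee + "('ana@example.com', 10, 1)"),
    "CHECK": rejected(insert_employee + "('ben@example.com', -5, 1)"),
    "FOREIGN KEY": rejected(insert_employee + "('cleo@example.com', 10, 99)"),
    "COMPOSITE KEY": rejected("INSERT INTO project_members VALUES (7, 1)"),
}

# DEFAULT filled in the status column that the insert left out.
default_status = conn.execute("SELECT status FROM employees WHERE id = 1").fetchone()[0]

print(violations, default_status)
```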

Understanding Backup and Recovery

Effective data management requires robust strategies for protecting data against unexpected losses and ensuring its availability in case of failure. Backup and recovery are essential practices for safeguarding database content. These processes enable you to restore your data to a consistent state after any system disruption or hardware failure, ensuring minimal downtime and data loss.

Backup Strategies

Backups can be categorized into different types, each serving specific needs based on frequency, size of data, and recovery speed. Here are the primary backup methods:

- Full Backup: Involves copying all data in the database, ensuring that a complete replica of the system is saved. While it requires more storage, it simplifies the recovery process.

- Incremental Backup: Captures only the changes made since the most recent backup of any type, whether full or incremental. This method is the most efficient in terms of storage space but may take longer to restore because the full backup and every subsequent incremental backup must be applied in order.

- Differential Backup: Backs up all data that has changed since the last full backup. Restoring requires only the full backup plus the latest differential, which strikes a balance between the storage efficiency of incremental backups and the speed of recovery.

Recovery Methods

Recovery methods are designed to restore data to its original or a consistent state after failure. The choice of recovery method depends on the type of backup used, the nature of the data loss, and the desired recovery point:

- Point-in-Time Recovery: Allows you to restore the database to a specific moment, typically by restoring a backup and then replaying logged changes up to that moment. This is essential for recovering from errors like accidental data deletion or corruption.

- Restore from Full Backup: The simplest method, where a full backup is restored and the system is brought back to its state at the time that backup was taken. This is often used when no incremental or differential backups are available.

- Restore from Incremental or Differential Backup: Involves applying the full backup first, followed by either every incremental backup in order or the latest differential backup, to bring the database to its most recent backed-up state.

Understanding these backup and recovery techniques is crucial for anyone working with databases, as they are foundational to maintaining data integrity and ensuring business continuity. Regular backups and well-planned recovery procedures will help avoid catastrophic data loss and ensure the resilience of your database system.
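A full backup and restore can be sketched with the `Connection.backup()` method of Python's `sqlite3` module (available since Python 3.7). This covers only the full-backup case; the `notes` table is invented, and in-memory databases stand in for real database files and backup storage.

```python
import sqlite3

# The "production" database.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
source.executemany("INSERT INTO notes (body) VALUES (?)", [("first",), ("second",)])
source.commit()

# Full backup: copy the entire database into another connection.
backup = sqlite3.connect(":memory:")
source.backup(backup)

# Simulate data loss after the backup was taken.
source.execute("DELETE FROM notes")
source.commit()
remaining = len(source.execute("SELECT id FROM notes").fetchall())

# Recovery: restore the backup into a fresh database.
restored_db = sqlite3.connect(":memory:")
backup.backup(restored_db)
restored = [row[0] for row in restored_db.execute("SELECT body FROM notes ORDER BY id")]

print(remaining, restored)
```

The restored database reflects the moment the backup was taken, so any changes made after that point would be missing.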

Security Features in Database Systems for Assessment Scenarios

In any database management system, securing data against unauthorized access and potential threats is a top priority. Security mechanisms also come up regularly in assessments, so you should understand both what each mechanism protects and how to apply it. The ability to implement strong security practices is a crucial skill for database administrators and developers alike.

Core Security Mechanisms

Several core security features play a key role in protecting data and ensuring that only authorized users can access and manipulate it. Here are the primary techniques employed:

- Authentication: Ensures that only valid users can access the system. This often involves verifying usernames and passwords, but more advanced systems may use multi-factor authentication for added security.

- Authorization: Once authenticated, users are granted specific permissions based on their roles. This determines what actions they can perform, such as reading, writing, or modifying data.

- Encryption: Protects data by converting it into a format that can only be read with the correct decryption key. Both data-at-rest and data-in-transit can be encrypted to secure sensitive information.

- Auditing: Keeps a detailed record of user actions and system changes. Auditing logs are critical for monitoring suspicious activity and tracking unauthorized access attempts.
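To make authorization and auditing more concrete, the sketch below models them in plain Python rather than in any particular database product. The role names, the permission sets, and the `is_allowed` and `perform` helpers are all hypothetical; real systems express the same idea through their own role and privilege features.

```python
# Hypothetical role-to-permission mapping (authorization rules).
ROLE_PERMISSIONS = {
    "reader": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "grant"},
}

# In-memory audit trail; a real system would persist this securely.
audit_log = []


def is_allowed(role, action):
    """Return True if the role grants the action; unknown roles get nothing."""
    return action in ROLE_PERMISSIONS.get(role, set())


def perform(user, role, action):
    """Check authorization and record the attempt, allowed or not."""
    allowed = is_allowed(role, action)
    audit_log.append((user, action, "allowed" if allowed else "denied"))
    return allowed


perform("ana", "editor", "write")   # permitted by the editor role
perform("ben", "reader", "write")   # denied: readers may only read
perform("eve", "guest", "read")     # denied: role is not defined

for entry in audit_log:
    print(entry)
```

Denied attempts are logged as well as successful ones, since failed access attempts are often the most useful entries when reviewing suspicious activity.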

Best Practices for Database Security

To ensure the security of a database system, a number of best practices should be followed:

- Use Strong Passwords: Passwords should be long, complex, and difficult to guess. Implementing password policies that enforce these practices can significantly reduce the risk of unauthorized access.

- Limit User Privileges: Adopting the principle of least privilege ensures that users only have access to the data and functions necessary for their tasks, minimizing the potential impact of a security breach.

- Regular Security Updates: Keeping the database software up to date is essential for protecting against known vulnerabilities. Patch management should be performed regularly to address emerging threats.

- Secure Backup Practices: Backups should be encrypted, stored securely, and regularly tested to ensure data can be recovered in the event of a breach or system failure.

Understanding these security features and best practices is essential for anyone involved in managing or developing database systems, especially when working in environments where data integrity and confidentiality are critical. Whether in theoretical scenarios or practical applications, mastering these concepts is fundamental for ensuring data safety.