Preparing for a comprehensive assessment in programming requires a clear understanding of core principles and practical techniques. The right strategies can significantly improve performance and ensure that the most essential concepts are mastered. Success depends on a well-rounded approach, combining theoretical knowledge with hands-on experience.

Effective preparation includes familiarizing oneself with key tools and methods used in computational problem-solving. From manipulating and analyzing large volumes of information to creating robust models, a variety of techniques are required to tackle complex tasks. Proficiency in these areas is crucial for performing well in any technical evaluation.

Whether it’s refining your coding practices or understanding the underlying mathematical concepts, this guide will provide insights into the most critical topics to focus on. With a combination of practical exercises and theoretical knowledge, anyone can improve their readiness for a challenging assessment.

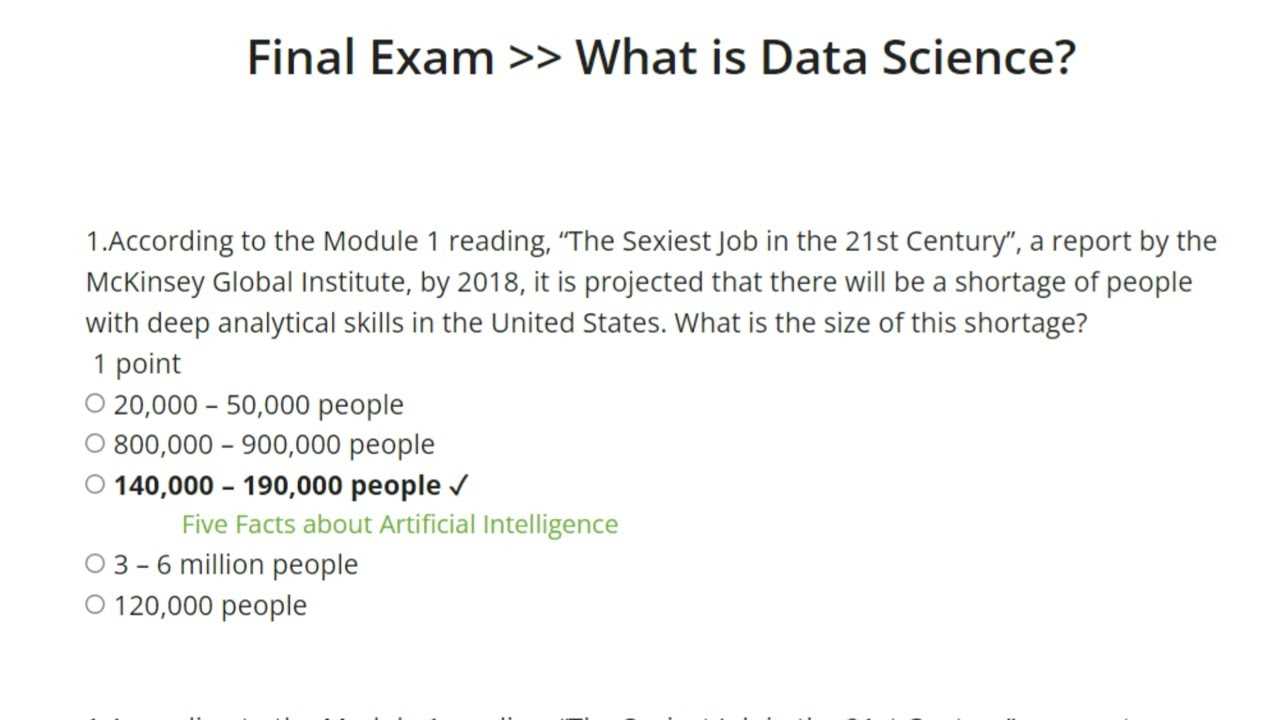

Python for Data Science Final Exam Answers

In this section, we will explore the essential techniques and tools necessary to excel in programming assessments focused on computational problem-solving. Whether tackling questions on algorithms, data manipulation, or model building, mastering the foundational methods is crucial for success. The following concepts are vital in demonstrating a strong grasp of the subject matter and applying the appropriate approaches to complex tasks.

Understanding key libraries and functions is the first step in developing the required skills. These tools are integral for handling and analyzing large sets of information, enabling efficient processing and insightful results. Familiarity with libraries such as NumPy, pandas, and others will make it easier to navigate challenges effectively.

Common pitfalls often arise when students fail to understand the best practices for writing clean, efficient code. This section covers how to identify and avoid these issues, providing examples of well-structured code that highlights best practices in terms of readability and efficiency. Additionally, we focus on debugging strategies to ensure that solutions are both correct and optimized.

Lastly, applying theoretical knowledge through practical exercises solidifies understanding and builds confidence. Solving problems in real-world scenarios helps reinforce key concepts and prepares individuals for similar challenges in assessments. This combination of theory and practice is the foundation for achieving success in this subject area.

Overview of Data Science Python Exam

This section provides a general understanding of the core topics and skills assessed during a comprehensive programming evaluation. The focus is on how to approach complex problem-solving tasks, with an emphasis on using advanced tools and techniques to analyze and process information effectively. The assessment often challenges individuals to apply their knowledge in real-world situations, requiring both theoretical understanding and practical application.

The evaluation typically covers a broad range of areas, including algorithm development, data manipulation, and statistical modeling. Candidates are expected to demonstrate their ability to write efficient, scalable code while adhering to best practices. This ensures that solutions are not only accurate but also optimized for performance, especially when dealing with large datasets.

Preparing for such an assessment involves familiarizing oneself with the most commonly used tools, libraries, and methods. From handling arrays and matrices to building machine learning models, the ability to tackle a variety of challenges is key to success. Proper preparation will help candidates develop the confidence needed to navigate the questions effectively and provide solutions with clarity and precision.

Key Python Concepts to Master

Mastering the essential principles of programming is crucial when preparing for any assessment in computational problem-solving. A solid understanding of the fundamental building blocks allows individuals to confidently tackle a wide range of challenges. These key concepts form the backbone of many tasks and help ensure that solutions are both accurate and efficient.

One of the core areas to focus on is control flow, which involves managing the order in which statements are executed. Understanding loops, conditional statements, and how to structure logic is essential for writing functional code. Additionally, being proficient with functions and methods allows for modular and reusable code, which is critical for solving complex problems in an organized manner.
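As a minimal sketch of these ideas, the function below combines a loop, a conditional, and a reusable function. The name `classify_scores` and the passing threshold of 60 are hypothetical, chosen only for illustration.

```python
def classify_scores(scores, passing=60):
    """Split scores into passed/failed lists using a loop and a conditional."""
    passed, failed = [], []
    for score in scores:
        if score >= passing:
            passed.append(score)
        else:
            failed.append(score)
    return passed, failed


passed, failed = classify_scores([45, 72, 60, 38, 91])
print(passed)  # [72, 60, 91]
print(failed)  # [45, 38]
```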

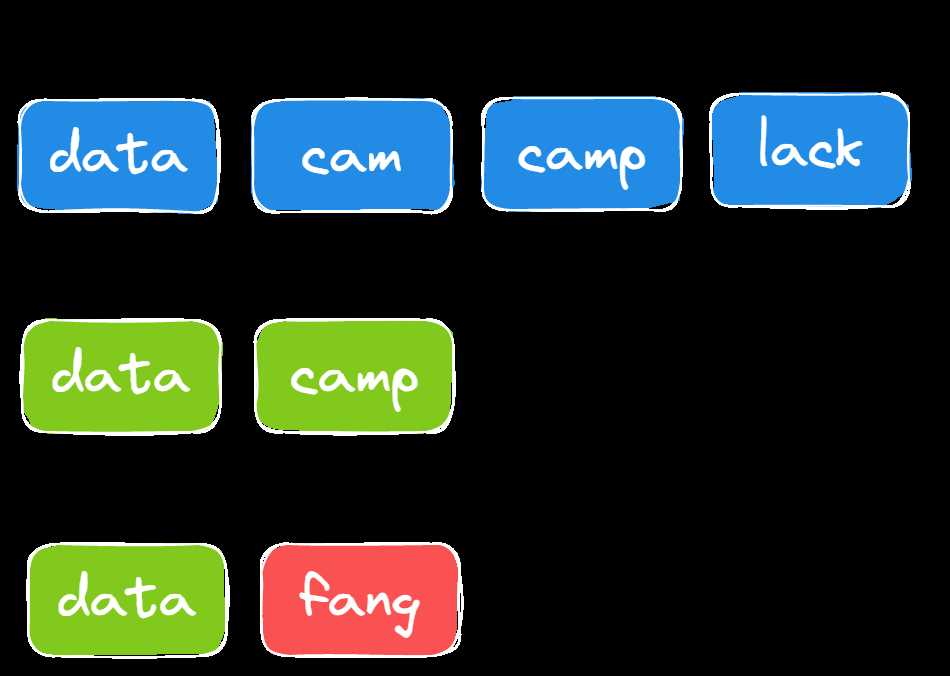

Another vital concept is data structures. Proficiency with lists, dictionaries, tuples, and sets enables programmers to store and manipulate information efficiently. These structures are fundamental when working with large amounts of information, as they allow for quick access and easy modification of elements.
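The four structures can be compared side by side in a short sketch. All values here are made up for illustration.

```python
# List: ordered and mutable
temperatures = [21.5, 19.0, 23.1]
temperatures.append(20.4)

# Tuple: ordered and immutable, handy for fixed records
point = (3, 4)

# Dictionary: key-to-value lookup, here used to count words
word_counts = {}
for word in ["spam", "egg", "spam"]:
    word_counts[word] = word_counts.get(word, 0) + 1

# Set: unique elements with fast membership tests
unique_words = set(["spam", "egg", "spam"])

print(len(temperatures))     # 4
print(word_counts["spam"])   # 2
print(sorted(unique_words))  # ['egg', 'spam']
```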

Finally, familiarity with object-oriented programming (OOP) is necessary for creating scalable solutions. By organizing code into classes and objects, individuals can design flexible and maintainable systems. This approach also supports the creation of more advanced algorithms and models, which are often part of more complex assessments.
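One way to picture classes and inheritance is the sketch below. `Dataset` and `NormalizedDataset` are hypothetical names invented for this example, not part of any library.

```python
class Dataset:
    """Hypothetical container that bundles values with summary behavior."""

    def __init__(self, name, values):
        self.name = name
        self.values = list(values)

    def mean(self):
        return sum(self.values) / len(self.values)

    def __repr__(self):
        return f"Dataset(name={self.name!r}, n={len(self.values)})"


class NormalizedDataset(Dataset):
    """Subclass that reuses Dataset and adds min-max scaling."""

    def scaled(self):
        low, high = min(self.values), max(self.values)
        return [(v - low) / (high - low) for v in self.values]


heights = NormalizedDataset("heights", [150, 160, 170])
print(heights)           # Dataset(name='heights', n=3)
print(heights.mean())    # 160.0
print(heights.scaled())  # [0.0, 0.5, 1.0]
```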

Essential Libraries for Data Science

When preparing for technical assessments, familiarity with key libraries is essential for efficiently solving complex problems. These powerful tools offer pre-built functions and methods, enabling quick manipulation, analysis, and visualization of large datasets. Mastery of these libraries not only saves time but also improves the quality and performance of solutions.

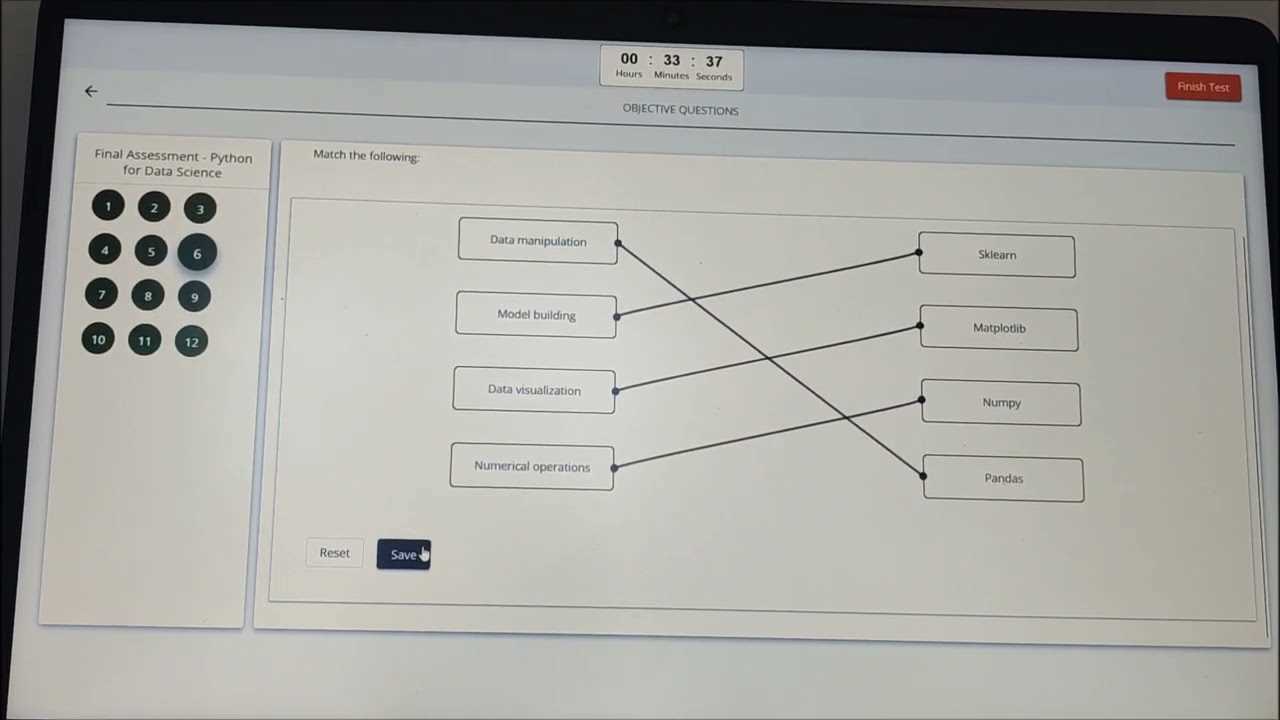

One of the most commonly used libraries is NumPy, which provides support for large, multi-dimensional arrays and matrices, as well as a collection of high-level mathematical functions. It is essential for performing numerical operations and is often used as the foundation for other more specialized libraries.
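A small sketch of typical array operations follows, using a made-up 2×3 matrix. It shows element-wise arithmetic, reductions along an axis, and a matrix product.

```python
import numpy as np

matrix = np.array([[1, 2, 3],
                   [4, 5, 6]])

print(matrix.shape)         # (2, 3)
print(matrix * 2)           # element-wise multiplication
print(matrix.sum(axis=0))   # column sums
print(matrix.mean(axis=1))  # row means
print(matrix @ matrix.T)    # matrix product with the transpose, shape (2, 2)
```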

Another key library is pandas, which simplifies the process of working with structured data. It offers robust tools for manipulating data, including handling missing values, filtering data, and merging datasets. This library is invaluable for data cleaning and transformation tasks.

For visualizing information, Matplotlib and Seaborn are widely utilized. Matplotlib supports static, animated, and interactive plots, and Seaborn builds on it with a higher-level interface for statistical graphics. Mastering them is crucial for effectively communicating insights and trends in any analytical work.

Additionally, libraries like Scikit-learn play a vital role in machine learning tasks. This toolkit provides a variety of algorithms for classification, regression, clustering, and dimensionality reduction. Proficiency in these libraries allows for the implementation of efficient and scalable machine learning models.

How to Work with DataFrames in Python

Handling structured information efficiently is a critical skill when tackling computational problems. DataFrames provide a powerful way to store, manipulate, and analyze tabular data, offering numerous built-in methods for various operations. Understanding how to work with these structures is essential for anyone looking to process large datasets or conduct detailed analyses.

The first step in working with DataFrames is learning how to import and create them. Typically, this involves reading data from external sources such as CSV files, Excel sheets, or databases. Using tools like pandas, you can easily load this information into a DataFrame for further processing. Once the data is loaded, it is essential to explore its structure, checking for missing values, data types, and basic statistics.
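The loading and exploration steps might look like the following sketch. The CSV content is made up and read from an in-memory string so the example is self-contained; in practice a file path would be passed to `pd.read_csv`.

```python
import io
import pandas as pd

# Hypothetical CSV content standing in for a file on disk.
csv_text = """name,age,city
Ana,34,Lisbon
Ben,,Oslo
Chloe,29,Lisbon
"""

df = pd.read_csv(io.StringIO(csv_text))

print(df.shape)         # (3, 3)
print(df.dtypes)        # age is parsed as float64 because one value is missing
print(df.isna().sum())  # count of missing values per column
print(df.describe())    # basic statistics for numeric columns
```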

Next, mastering basic operations is crucial. You can filter rows, select specific columns, and apply conditions to subset the data. The ability to sort, group, and aggregate information helps summarize key insights from large sets. Additionally, learning how to handle missing or inconsistent data is a vital step in ensuring clean and accurate results.
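As an illustration of these basic operations, the sketch below filters, sorts, and aggregates a small made-up sales table. The column names are assumptions for the example.

```python
import pandas as pd

sales = pd.DataFrame({
    "region": ["North", "South", "North", "East", "South"],
    "units": [10, 4, 7, 12, 9],
    "price": [2.5, 3.0, 2.5, 4.0, 3.0],
})

# Filter rows with a boolean condition and keep two columns
large_orders = sales.loc[sales["units"] >= 9, ["region", "units"]]

# Sort by a column, largest first
ranked = sales.sort_values("units", ascending=False)

# Group by region and aggregate
units_by_region = sales.groupby("region")["units"].sum()

print(large_orders)
print(ranked.head(3))
print(units_by_region)
```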

Advanced tasks include merging and joining multiple DataFrames, which is useful when dealing with data from different sources or tables. Understanding how to reshape and pivot data enables users to reformat datasets into more usable forms. These operations, combined with the ability to visualize trends, make DataFrames an indispensable tool for handling structured information.
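A merge followed by a pivot can be sketched as below. Both tables and their column names are invented for illustration.

```python
import pandas as pd

orders = pd.DataFrame({
    "customer_id": [1, 2, 1, 3],
    "month": ["Jan", "Jan", "Feb", "Feb"],
    "amount": [100, 250, 80, 40],
})
customers = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "name": ["Ana", "Ben", "Chloe"],
})

# Join the two tables on their shared key
merged = orders.merge(customers, on="customer_id", how="left")

# Reshape: one row per customer, one column per month
pivoted = merged.pivot_table(index="name", columns="month",
                             values="amount", aggfunc="sum", fill_value=0)
print(pivoted)
```

A left join keeps every order even if a customer record were missing, which is often the safer default when the left table is the one being analyzed.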

Data Cleaning Methods in Python

Ensuring that your dataset is accurate and free of errors is a critical step before any analysis or modeling. Cleaning the information involves identifying and handling inconsistencies, missing values, and outliers. By applying the right techniques, you can transform raw data into a reliable resource for insightful conclusions.

One of the first steps in the cleaning process is removing duplicates. Redundant entries can skew analysis and lead to incorrect conclusions. This can be done easily by checking for duplicate rows and removing them using specific commands.
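With pandas, one possible way to do this is `duplicated()` to count repeated rows and `drop_duplicates()` to remove them. The data below is made up.

```python
import pandas as pd

records = pd.DataFrame({
    "id": [1, 2, 2, 3],
    "score": [88, 92, 92, 75],
})

print(records.duplicated().sum())  # 1 fully duplicated row
deduplicated = records.drop_duplicates().reset_index(drop=True)
print(len(deduplicated))           # 3
```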

Another important method is handling missing values. There are several approaches to deal with this issue:

- Removing rows with missing data, if they are not significant to the analysis.

- Filling missing values with a default value, such as the mean or median of the column.

- Using interpolation or other advanced techniques to estimate missing values.
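The three approaches above can be sketched with pandas as follows. The column and its values are made up, and the median is used as the fill value only as one reasonable choice.

```python
import numpy as np
import pandas as pd

readings = pd.DataFrame({"value": [1.0, np.nan, 3.0, np.nan, 5.0]})

dropped = readings.dropna()                           # remove incomplete rows
filled = readings.fillna(readings["value"].median())  # fill with the median
interpolated = readings.interpolate()                 # linear interpolation

print(len(dropped))                    # 3
print(filled["value"].tolist())        # [1.0, 3.0, 3.0, 3.0, 5.0]
print(interpolated["value"].tolist())  # [1.0, 2.0, 3.0, 4.0, 5.0]
```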

Standardizing formats is another key method. Inconsistent formats, such as dates written in different styles or inconsistent capitalization, can cause issues in analysis. Ensuring uniformity helps simplify the dataset and reduces potential errors in processing.
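As a rough sketch, inconsistent capitalization and date styles might be normalized like this. The data is invented, and parsing each date string individually is just one tolerant approach; a known, fixed format is usually better specified explicitly.

```python
import pandas as pd

raw = pd.DataFrame({
    "city": [" lisbon", "LISBON ", "Oslo"],
    "joined": ["2023-01-15", "2023/02/20", "March 3, 2023"],
})

# Trim whitespace and normalize capitalization
raw["city"] = raw["city"].str.strip().str.title()

# Parse each date string on its own so differing styles are tolerated
raw["joined"] = raw["joined"].apply(pd.to_datetime)

print(raw)
```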

Outlier detection is a vital step when cleaning. Outliers can distort statistical measures and affect machine learning models. Methods like z-scores, IQR (Interquartile Range), or visual techniques such as box plots can help identify and handle extreme values appropriately.
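The IQR method can be sketched in a few lines. The 1.5 multiplier is a common convention rather than a fixed rule, and the values are made up.

```python
import pandas as pd

values = pd.Series([10, 12, 11, 13, 12, 11, 95])

q1, q3 = values.quantile(0.25), values.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

# Anything outside [lower, upper] is flagged as an outlier
outliers = values[(values < lower) | (values > upper)]
print(outliers.tolist())  # [95]
```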

Finally, transforming the data into the desired format for further analysis is essential. This could include converting categorical variables into numerical representations, normalizing numerical values, or reshaping the dataset for use in specific models.
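Two of these transformations, one-hot encoding and min-max normalization, are sketched below on made-up data.

```python
import pandas as pd

people = pd.DataFrame({
    "color": ["red", "blue", "red"],
    "income": [30000, 50000, 40000],
})

# One-hot encode the categorical column
encoded = pd.get_dummies(people, columns=["color"])

# Min-max normalize the numeric column to the range [0, 1]
income = encoded["income"]
encoded["income"] = (income - income.min()) / (income.max() - income.min())

print(encoded.columns.tolist())    # ['income', 'color_blue', 'color_red']
print(encoded["income"].tolist())  # [0.0, 1.0, 0.5]
```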

Exploring Data Visualization Tools

Data visualization is a crucial aspect of understanding and presenting complex information. By transforming raw numbers and trends into visual formats, it becomes easier to identify patterns, detect outliers, and communicate findings effectively. Various tools and libraries offer powerful capabilities for creating a wide range of visual representations, from simple charts to interactive plots.

One of the most widely used tools is Matplotlib, a library that provides comprehensive functionality for creating static plots. Whether it’s line charts, bar graphs, histograms, or scatter plots, this tool gives users control over the appearance and layout of their visuals, making it a go-to solution for quick and customizable plotting.
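A minimal Matplotlib sketch with two chart types side by side is shown below. The revenue figures are made up, and the non-interactive `Agg` backend is selected only so the script runs without a display.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend; no window is opened
import matplotlib.pyplot as plt

months = ["Jan", "Feb", "Mar", "Apr"]
revenue = [12, 15, 11, 18]  # made-up values for illustration

fig, (ax_line, ax_bar) = plt.subplots(1, 2, figsize=(8, 3))
ax_line.plot(months, revenue, marker="o")
ax_line.set_title("Line chart")
ax_line.set_ylabel("Revenue")
ax_bar.bar(months, revenue, color="tab:orange")
ax_bar.set_title("Bar chart")
fig.tight_layout()

# Render to an in-memory buffer; pass a filename such as "revenue.png"
# instead to write the image to disk.
buffer = io.BytesIO()
fig.savefig(buffer, format="png")
```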

Seaborn is built on top of Matplotlib and simplifies the process of creating attractive, informative graphics. It integrates well with structured datasets and is especially useful for generating more sophisticated plots such as heatmaps, pair plots, and violin plots. Seaborn’s aesthetic features make it an excellent choice for producing high-quality visualizations with minimal effort.

For more interactive and dynamic visualizations, Plotly is an excellent option. It allows for the creation of interactive graphs that can be easily embedded in web applications or dashboards. Plotly supports a wide range of chart types, including 3D plots and geographic maps, enabling users to explore data from different perspectives.

Additionally, Altair offers a declarative approach to visualization, which allows users to build plots by specifying the relationships between data elements. This tool is particularly useful when working with complex datasets and is known for producing clean, responsive visualizations that can be customized with ease.

Each of these tools has its strengths and can be chosen based on the specific needs of the project. Whether aiming for quick, static plots or detailed, interactive visualizations, these libraries offer the flexibility and functionality necessary for presenting data in a compelling and informative way.

Common Python Functions for Data Analysis

When working with complex datasets, having a set of versatile functions at your disposal can significantly improve the efficiency of your analysis. Python's built-in functions, together with a few from the standard library, allow users to perform common tasks like aggregation, transformation, and computation with minimal effort. Mastering these essential functions is key to simplifying repetitive tasks and accelerating the analysis process.

Here are some of the most commonly used functions for handling and analyzing structured information:

| Function | Description |
|---|---|
| len() | Returns the number of items in an object, such as a list or string. |
| sum() | Calculates the sum of all the elements in an iterable, like a list or array. |
| max() | Finds the highest value in an iterable. |
| min() | Finds the lowest value in an iterable. |
| statistics.mean() | Calculates the average of numerical values. Part of the standard-library statistics module, not a built-in. |
| statistics.median() | Finds the middle value of a dataset. Part of the standard-library statistics module, not a built-in. |
| map() | Applies a function to all items in an iterable and returns an iterator over the results. |
| filter() | Keeps the elements of an iterable for which a function returns True. |
| sorted() | Returns a new sorted list from the elements of any iterable. |
| zip() | Combines multiple iterables element-wise into a single iterable of tuples. |

These functions can be combined in various ways to perform more complex analyses, enabling quick calculations and efficient processing of large volumes of information. Using them effectively can save time and ensure more accurate, streamlined results during analysis.
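The sketch below combines several of these functions on made-up scores. Note that `mean` and `median` are imported from the standard-library `statistics` module, since they are not built-ins.

```python
from statistics import mean, median

names = ["Ana", "Ben", "Chloe", "Dev"]
scores = [82, 67, 91, 74]

print(len(scores), sum(scores), max(scores), min(scores))  # 4 314 91 67
print(mean(scores), median(scores))                        # 78.5 78.0

curved = list(map(lambda s: s + 5, scores))         # [87, 72, 96, 79]
passing = list(filter(lambda s: s >= 75, scores))   # [82, 91]
ranking = sorted(zip(scores, names), reverse=True)  # highest score first
print(ranking[0])  # (91, 'Chloe')
```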

Statistical Methods for Data Science

Statistical methods are crucial tools for analyzing and interpreting large sets of numerical information. These techniques help uncover trends, relationships, and insights that might otherwise remain hidden. By applying various statistical principles, analysts can make informed decisions, predict outcomes, and validate hypotheses based on the available data.

Descriptive Statistics

Descriptive statistics involves summarizing or describing the key features of a dataset. It helps in understanding the overall structure and distribution of the information. Common methods include:

- Mean: The average of all the values in the dataset, which provides a central measure.

- Median: The middle value, useful for datasets with skewed distributions.

- Mode: The most frequent value, which helps identify common occurrences.

- Standard Deviation: A measure of how spread out the values are, indicating variability.

- Range: The difference between the maximum and minimum values, showing the spread of the data.
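These measures can be computed with the standard-library `statistics` module, as in the sketch below. The data is made up, and the population standard deviation (`pstdev`) is used here; `stdev` would give the sample version.

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]

mean_value = statistics.mean(data)      # central value: 5
median_value = statistics.median(data)  # middle value: 4.5
mode_value = statistics.mode(data)      # most frequent value: 4
spread = statistics.pstdev(data)        # population standard deviation: 2.0
value_range = max(data) - min(data)     # range: 7

print(mean_value, median_value, mode_value, spread, value_range)
```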

Inferential Statistics

Inferential statistics involves using sample data to make generalizations or predictions about a larger population. Key techniques include:

- Hypothesis Testing: A method for testing assumptions and determining if results are statistically significant.

- Confidence Intervals: A range of values that is likely to contain the population parameter, offering a measure of uncertainty.

- Regression Analysis: A tool for modeling relationships between variables, predicting outcomes based on historical data.

- Correlation: Measures the strength and direction of the relationship between two variables.
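A few of these techniques are sketched below with NumPy on made-up study data. The confidence interval uses the normal critical value 1.96 as a simplifying assumption; for a sample this small, a t-distribution critical value would give a wider and more appropriate interval.

```python
import math
import statistics

import numpy as np

hours = np.array([1, 2, 3, 4, 5, 6])
scores = np.array([52, 55, 61, 64, 70, 73])

# Pearson correlation between the two variables
r = np.corrcoef(hours, scores)[0, 1]

# Simple linear regression: score is modeled as slope * hours + intercept
slope, intercept = np.polyfit(hours, scores, deg=1)

# Approximate 95% confidence interval for the mean score
# (assumption: normal critical value 1.96, see the note above)
sample = scores.tolist()
sample_mean = statistics.mean(sample)
sem = statistics.stdev(sample) / math.sqrt(len(sample))
ci = (sample_mean - 1.96 * sem, sample_mean + 1.96 * sem)

print(round(r, 3), round(slope, 3), ci)
```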

By mastering these statistical methods, analysts can draw meaningful conclusions, identify patterns, and provide insights that guide decision-making. These techniques form the foundation of any robust analytical process and are essential for working with large-scale, complex information.

Machine Learning Algorithms Overview

Machine learning techniques are essential for developing systems that can learn from experience and improve their performance over time. These algorithms form the core of many modern applications, from recommendation engines to predictive analytics. By recognizing patterns in data, machine learning models can make decisions, classify information, and forecast future outcomes based on past trends.

Supervised Learning

Supervised learning algorithms are trained using labeled data, where the output for each input is already known. The goal is for the model to learn the mapping between inputs and outputs, and then use that knowledge to predict the output for new, unseen data. Some commonly used supervised learning techniques include:

- Linear Regression: Used for predicting continuous values based on a linear relationship between the input and output variables.

- Logistic Regression: A classification algorithm used for binary outcomes, such as yes/no or true/false scenarios.

- Decision Trees: These models split the data into subsets based on the most significant features, and use these subsets to make predictions.

- Support Vector Machines (SVM): A powerful classifier that separates data points into different classes using a hyperplane in high-dimensional space.
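Two of these techniques can be sketched with scikit-learn, assuming it is installed. The data is made up: the regression targets follow y = 2x + 1 exactly, and the classification labels are cleanly separated.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression

# Regression: made-up data that follows y = 2x + 1 exactly
X = np.array([[1], [2], [3], [4], [5]])
y = np.array([3, 5, 7, 9, 11])
reg = LinearRegression().fit(X, y)
prediction = reg.predict([[6]])
print(prediction)  # close to 13

# Classification: the label is 1 when the single feature is large
X_cls = np.array([[0.5], [1.0], [1.5], [4.0], [4.5], [5.0]])
y_cls = np.array([0, 0, 0, 1, 1, 1])
clf = LogisticRegression().fit(X_cls, y_cls)
classes = clf.predict([[0.8], [4.8]])
print(classes)
```

Real assessments typically also expect a train/test split and an evaluation metric, which are omitted here to keep the sketch short.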

Unsupervised Learning

Unsupervised learning algorithms, unlike their supervised counterparts, work with data that does not have predefined labels. These methods aim to find hidden patterns or intrinsic structures in the data. Some popular unsupervised learning techniques include:

- K-Means Clustering: This algorithm groups similar data points into clusters based on distance metrics, allowing for segmentation of the data.

- Hierarchical Clustering: Builds a hierarchy of clusters, either agglomerative (merging smaller clusters) or divisive (splitting larger clusters).

- Principal Component Analysis (PCA): A dimensionality reduction technique that transforms data into a smaller number of variables while retaining most of the variance.
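As an illustration, K-Means and PCA can be applied to a handful of made-up 2D points with scikit-learn, assuming it is installed. The choice of two clusters and one component is specific to this toy data.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

# Two visibly separate groups of made-up points
points = np.array([[1.0, 1.1], [0.9, 1.0], [1.2, 0.8],
                   [8.0, 8.2], [8.1, 7.9], [7.8, 8.0]])

# Cluster into two groups; random_state fixes the initialization
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(points)
labels = kmeans.labels_
print(labels)

# Reduce from two features to one principal component
pca = PCA(n_components=1)
reduced = pca.fit_transform(points)
print(reduced.shape)                  # (6, 1)
print(pca.explained_variance_ratio_)  # share of variance kept by the component
```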

Each algorithm serves different purposes depending on the type of problem at hand and the structure of the data. By understanding the strengths and applications of each method, practitioners can choose the best approach to achieve their desired outcomes and enhance model performance.

Python Code Snippets for Exam Preparation

In order to prepare effectively for assessments that involve coding, having a collection of reusable code snippets can be invaluable. These small blocks of code help streamline the process of solving common problems, saving time and reducing errors. By practicing with these snippets, you can familiarize yourself with key concepts and improve your ability to write clean, efficient solutions during the test.

Basic String Manipulation

String operations are fundamental and often appear in many coding challenges. Here are some useful examples:

- Reversing a String: This simple snippet reverses the order of characters in a string.

      text = "example"
      reversed_text = text[::-1]
      print(reversed_text)

- Finding a Substring: This snippet returns the index where a substring first appears, or -1 if it is absent.

      text = "Hello, world!"
      index = text.find("world")
      print(index)

- Converting a String to a List: This snippet splits a string into a list of its characters.

      text = "hello"
      char_list = list(text)
      print(char_list)

Working with Lists and Loops

Lists and loops are often essential for processing and iterating over data. Here are some common operations:

- List Comprehension: A compact way to create a list by applying an expression to each item in an iterable.

      numbers = [1, 2, 3, 4]
      squared = [x**2 for x in numbers]
      print(squared)

- Summing a List: The built-in sum() adds up all elements without an explicit loop.

      numbers = [1, 2, 3, 4]
      total = sum(numbers)
      print(total)

- Finding the Maximum Value: The built-in max() returns the largest element.

      numbers = [10, 25, 30, 40]
      max_value = max(numbers)
      print(max_value)

By practicing these essential snippets, you can develop a more intuitive understanding of the language and boost your problem-solving skills. These examples are just a few of the many patterns that will prepare you for coding challenges in assessments.

Best Practices for Python Programming

Writing clean, efficient, and readable code is essential for building maintainable software. Adhering to coding conventions and following best practices can improve both the quality of your code and your productivity. By focusing on principles such as clarity, efficiency, and reusability, developers can ensure that their work is easily understood and maintained by others. Below are several key practices to follow when writing code.

1. Code Readability and Structure

Readability is critical, as code is often written once but read many times. Following certain conventions can make your code more understandable to others and easier to debug.

- Use Descriptive Variable Names: Choose meaningful names that describe the content or purpose of the variable. Avoid using vague names like `temp` or `data1`.

- Keep Functions Short: Functions should focus on one task. If a function is doing too much, break it into smaller, more manageable parts.

- Indent Consistently: Consistent indentation makes your code easier to follow. Stick to one style (usually 4 spaces per indent level) and avoid mixing tabs and spaces.

2. Efficiency and Performance

While writing clean code is important, performance also matters. Optimizing algorithms and understanding how to manage resources effectively can lead to faster and more responsive programs.

- Minimize Loops: Avoid unnecessary loops. Instead, try to use built-in functions and methods that are optimized for performance.

- Use List Comprehensions: List comprehensions are often faster and more concise than traditional loops for creating new lists.

- Leverage Built-in Libraries: Python offers a rich set of standard libraries. Using built-in functions can significantly improve performance by avoiding the need to reinvent common functionality.

3. Error Handling and Debugging

Proper error handling and debugging techniques can save you time and effort, especially when working on large projects. Handling exceptions correctly prevents unexpected crashes and makes your code more robust.

- Use Try-Except Blocks: When performing operations that might fail (like file handling or network requests), wrap them in a `try-except` block to handle potential exceptions gracefully.

- Log Errors: Implement logging to track and store error messages. This can help identify issues and monitor your program’s behavior over time.

- Test Your Code: Write unit tests to check if your code functions as expected. Automate testing to catch potential issues early in the development process.
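The first two points can be sketched together as follows. The function `read_number` and the file name are hypothetical; the example catches only the specific exceptions it expects and logs them rather than failing silently.

```python
import logging

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def read_number(path):
    """Return the first line of a file as a float, or None on failure."""
    try:
        with open(path, encoding="utf-8") as handle:
            return float(handle.readline())
    except FileNotFoundError:
        logger.error("File not found: %s", path)
    except ValueError:
        # logger.exception also records the traceback
        logger.exception("First line of %s is not a number", path)
    return None


result = read_number("no_such_file_for_demo.txt")  # hypothetical missing file
print(result)  # None
```

Catching narrow exception types keeps unexpected errors visible instead of hiding them behind a bare `except`.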

By adhering to these best practices, developers can write code that is more efficient, easier to maintain, and less prone to errors. Following these guidelines helps you build better programs that can be shared, understood, and improved by others over time.

Handling Large Datasets in Python

Working with large datasets can be challenging due to memory limitations and computational constraints. Efficient techniques for handling these datasets are essential for both processing speed and resource management. By employing strategies such as chunking, parallel processing, and using optimized libraries, it is possible to process vast amounts of information without overwhelming system resources.

Efficient Memory Management

When dealing with large amounts of information, memory usage can become a significant bottleneck. Optimizing how data is loaded, processed, and stored can help avoid crashes and slowdowns.

- Load Data in Chunks: Instead of loading the entire dataset into memory, split the data into smaller chunks that can be processed sequentially. Libraries such as `pandas` and `dask` support chunking for efficient memory usage.

- Use Data Compression: Storing data in compressed formats like `parquet` or `csv.gz` can drastically reduce the storage footprint, and a columnar format such as Parquet can also speed up input/output operations.

- Use Appropriate Data Types: Choose the smallest data type that can hold each column’s values (e.g., `int8` instead of `int64`) to save memory when working with large tabular data.
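Chunked loading and type downcasting might look like the sketch below. A ten-row in-memory CSV stands in for a large file, and the chunk size of 4 is arbitrary.

```python
import io
import pandas as pd

# Stand-in for a large file: 10 rows of made-up data held in memory.
csv_text = "id,value\n" + "\n".join(f"{i},{i * 2}" for i in range(10))

# Process the data chunk by chunk; only one chunk is in memory at a time
total = 0
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=4):
    total += chunk["value"].sum()
print(total)  # 90

# Downcast a column whose values fit in a smaller integer type
df = pd.read_csv(io.StringIO(csv_text))
before = df["value"].memory_usage(index=False)
df["value"] = df["value"].astype("int8")
after = df["value"].memory_usage(index=False)
print(before, after)  # the int8 column uses fewer bytes
```

Downcasting is only safe when every value fits the smaller type; `int8` holds -128 to 127, so checking the column's minimum and maximum first is a sensible precaution.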

Parallel and Distributed Processing

For more complex tasks, parallel and distributed computing techniques can speed up processing by taking advantage of multiple cores or even multiple machines.

- Parallelize Computations: Utilize multi-threading or multi-processing to divide tasks among different CPU cores. Libraries like `concurrent.futures` or `joblib` make it easier to parallelize operations. In standard CPython, CPU-bound work usually benefits more from multi-processing, because the global interpreter lock keeps threads from running Python bytecode in parallel.

- Use Distributed Computing Frameworks: Leverage frameworks like `Apache Spark` or `dask` that allow you to distribute the processing load across multiple machines, enabling the handling of much larger datasets.

- Out-of-Core Computation: For extremely large datasets, some libraries allow computations to be performed without loading the entire dataset into memory by processing data in smaller segments.
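A minimal sketch of splitting work across workers with `concurrent.futures` follows. The task `summarize` and the chunks are hypothetical. A thread pool is used so the example runs anywhere; `ProcessPoolExecutor` offers the same `map` interface and is usually the better fit for CPU-bound work.

```python
from concurrent.futures import ThreadPoolExecutor


def summarize(chunk):
    """Hypothetical per-chunk task: return the sum of the numbers."""
    return sum(chunk)


chunks = [range(0, 100), range(100, 200), range(200, 300)]

with ThreadPoolExecutor(max_workers=3) as pool:
    # map() returns results in the same order as the inputs
    partial_sums = list(pool.map(summarize, chunks))

print(partial_sums)       # [4950, 14950, 24950]
print(sum(partial_sums))  # 44850
```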

By incorporating these methods, you can efficiently manage and process large datasets while minimizing the impact on system resources, ensuring faster and more effective analysis even with enormous volumes of information.

Testing and Debugging Python Code

Ensuring the reliability and functionality of your code is a critical aspect of software development. Testing and debugging help identify issues early, streamline the development process, and ensure that the code performs as expected. By adopting structured methods for detecting errors and verifying that the code behaves correctly, developers can produce robust applications that are easier to maintain and extend.

Common Debugging Techniques

Debugging involves identifying and fixing errors in the code. Whether the problem is syntax-based or logic-related, there are several strategies that can help isolate the issue.

- Print Statements: Adding print statements throughout the code is a quick way to observe variable values and the flow of execution. This can help pinpoint the exact location where errors are occurring.

- Interactive Debugging: Use tools like the `pdb` debugger to step through the code, inspect variables, and control execution at runtime. This is especially helpful for tracing complex issues.

- Error Logs: Review the error logs generated by the interpreter to understand the cause of the failure. Often, the stack trace will provide valuable clues regarding the problem’s source.

Testing Strategies

Testing involves writing specific code to verify that the application behaves correctly under various conditions. A comprehensive testing strategy can catch errors before deployment.

- Unit Testing: This type of testing focuses on individual functions or components. The `unittest` module allows you to define test cases that ensure each part of your code performs as expected.

- Integration Testing: After unit tests are conducted, integration tests check if different parts of the system interact correctly. Tools like `pytest` help automate this process and can be used for more complex scenarios.

- Mocking: When testing functions that depend on external services or databases, mocking allows you to simulate responses from these services without actually calling them, thus speeding up the testing process.
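Unit testing and mocking can be sketched together with the standard library. The functions `average` and `fetch_average` and the `client` object are hypothetical. The suite is run explicitly here so the example is self-contained; in a project, `python -m unittest` or `pytest` would normally discover and run the tests.

```python
import unittest
from unittest.mock import Mock


def average(values):
    return sum(values) / len(values)


def fetch_average(client):
    """Hypothetical function that depends on an external service client."""
    return average(client.get_values())


class AverageTests(unittest.TestCase):
    def test_average(self):
        self.assertEqual(average([2, 4, 6]), 4)

    def test_empty_input_raises(self):
        with self.assertRaises(ZeroDivisionError):
            average([])

    def test_fetch_average_with_mock(self):
        # The mock stands in for the external service
        client = Mock()
        client.get_values.return_value = [10, 20]
        self.assertEqual(fetch_average(client), 15)
        client.get_values.assert_called_once()


suite = unittest.defaultTestLoader.loadTestsFromTestCase(AverageTests)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())  # True
```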

Key Debugging and Testing Tools

| Tool | Description |
|---|---|
| pdb | Python’s built-in debugger for interactive debugging and code inspection. |
| unittest | Built-in module for writing and running unit tests to verify code correctness. |
| pytest | Popular testing framework that supports unit, integration, and functional tests. |
| mock | Library to replace parts of the system under test with mock objects during testing. |

By consistently using debugging and testing methods, you can ensure that your software is both reliable and maintainable, reducing the risk of errors and improving the overall development process.

Time Management Tips for the Exam

Effective time management is crucial when preparing for and taking any assessment. The ability to allocate time wisely ensures that you cover all necessary topics, reduce stress, and improve your overall performance. By following a few key strategies, you can ensure that you make the most of the time available during preparation and the actual test.

During Preparation

Proper planning during your study phase can help you stay organized and reduce last-minute panic. Prioritize important topics and break your study sessions into manageable chunks.

- Create a Study Schedule: Set aside specific times each day for studying and stick to the plan. Having a structured timetable helps ensure you cover all the material while avoiding procrastination.

- Use Active Learning Techniques: Instead of passively reading, engage with the material by solving problems, writing summaries, and teaching concepts to others. This approach helps reinforce knowledge and boosts retention.

- Take Breaks: Avoid burnout by scheduling short breaks between study sessions. The Pomodoro technique, which suggests a 25-minute study period followed by a 5-minute break, is a popular method for staying focused.

During the Test

Managing your time during the actual test is just as important as the preparation. Proper time allocation ensures that you complete all tasks to the best of your ability.

- Read the Instructions Carefully: Before you start, spend a few minutes reading the instructions and reviewing the entire test. This helps you understand what is expected and gives you a clear idea of how to allocate your time.

- Allocate Time to Each Section: Divide your time based on the number of sections and the complexity of each. If a section is more difficult or contains more points, give it more time, but don’t spend too long on any one question.

- Don’t Get Stuck: If you find a question difficult, move on to the next one and return to it later. It’s better to complete the easier tasks first and come back to the tougher ones when you have time.

- Review Your Work: If time permits, review your answers at the end. Double-check for errors or missed details that may lower your score.

By practicing these time management techniques, you can reduce anxiety and approach the test with a clearer, more focused mindset. Remember, the key is to stay organized and use your time wisely to maximize performance.

Common Mistakes to Avoid During the Exam

When facing a challenging assessment, it’s easy to make mistakes under pressure. Many of these errors can be avoided with a bit of foresight and careful attention. By recognizing and preventing common pitfalls, you can improve your chances of success and avoid losing valuable points due to simple oversights.

Pre-Test Mistakes

Before you even start answering questions, there are some common missteps that can impact your readiness and confidence. Being aware of these will help you approach the test with a clearer mind.

- Procrastination: Leaving preparation to the last minute can lead to rushed study sessions and incomplete understanding. Consistent study habits spread over time help retain more information.

- Neglecting Rest: Underestimating the importance of rest before a test can result in fatigue and poor concentration. Aim for a good night’s sleep to refresh your mind.

- Skipping Instructions: Not carefully reading instructions before diving into the questions can lead to confusion. Always take a moment to understand the requirements before starting.

In-Test Mistakes

During the assessment itself, there are several common actions that can lead to errors, often due to nervousness or rushing. Avoiding these can make a significant difference in your performance.

- Mismanaging Time: Spending too much time on one section or question can leave you with insufficient time for others. Time management is crucial to ensure all parts of the test are addressed.

- Overlooking Simple Errors: While focusing on complex questions, don’t forget to check for small mistakes, such as miscalculations or overlooked details, which can easily reduce your score.

- Misinterpreting Questions: Rushing through questions without fully understanding them can lead to incorrect answers. Read each question carefully to ensure you know exactly what is being asked before responding.

- Leaving Questions Blank: If unsure about an answer, don’t leave it blank unless the test penalizes wrong answers. Partial answers can often earn points, so attempt all questions to maximize your potential score.

Being aware of these common mistakes and taking steps to avoid them will help you remain calm, focused, and confident, ensuring that you can perform at your best during the test.

Where to Find Additional Resources for Practice

Expanding your understanding and sharpening your skills requires consistent practice. Fortunately, there are numerous online platforms and materials available to enhance your learning journey. Exploring a variety of resources will help reinforce your knowledge and provide you with the necessary experience to tackle any challenge.

Online Platforms and Communities

Several websites and forums offer opportunities for hands-on practice, ranging from interactive tutorials to community-driven challenges. They provide tools to hone your abilities, and many give immediate feedback on your solutions.

- Interactive Coding Platforms: Websites like Codecademy, LeetCode, and HackerRank offer interactive coding exercises that allow you to practice real-world problems in a guided environment.

- Online Learning Platforms: Courses on platforms such as Coursera, edX, and Udemy provide in-depth lessons and assignments to help you solidify your knowledge.

- Community Forums: Engaging with communities like Stack Overflow or Reddit can expose you to real-life challenges and solutions, while also enabling peer support.

Books and E-books

In addition to online resources, traditional learning materials like textbooks and e-books can be invaluable for mastering concepts at your own pace. Many books provide a structured approach and include exercises to practice key topics.

- Textbooks: Books such as “Automate the Boring Stuff with Python” or “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” offer deep dives into various aspects of the subject, with exercises and projects to help reinforce what you’ve learned.

- E-books and PDFs: Many free and paid e-books are available online for different skill levels, ranging from beginner to advanced. GitHub hosts many open-source educational materials, and Project Gutenberg offers public-domain books.

Practice Datasets

Working with real-world datasets is an excellent way to apply your knowledge and solve practical problems. Websites offering public datasets allow you to practice cleaning, analyzing, and visualizing data in real-world contexts.

| Resource | Description |
|---|---|
| Kaggle | An online platform with competitions and datasets across a wide range of fields, from business to healthcare. |
| UCI Machine Learning Repository | A collection of datasets available for machine learning research and practice. |
| Google Dataset Search | A tool for discovering datasets from across the web, including publicly available data from universities, governments, and organizations. |
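
As a rough illustration of what practicing on a dataset can look like, here is a minimal sketch of the cleaning and analysis steps using only Python's standard library. The sample data, column names, and helper functions (`load_rows`, `clean_rows`, `column_mean`) are hypothetical and stand in for a file you might download from one of the sources above. In practice, a library such as pandas would usually handle these steps.

```python
import csv
import io
import statistics

# Hypothetical sample standing in for a downloaded CSV file.
RAW_CSV = """patient_id,age,blood_pressure
1,34,120
2,,135
3,51,
4,47,128
2,,135
"""


def load_rows(text):
    """Parse CSV text into a list of dictionaries, one per data row."""
    return list(csv.DictReader(io.StringIO(text)))


def clean_rows(rows):
    """Drop exact duplicate rows and rows that have any empty field."""
    seen = set()
    cleaned = []
    for row in rows:
        key = tuple(row.items())
        if key in seen:
            continue  # skip a row we have already seen
        seen.add(key)
        if all(value.strip() for value in row.values()):
            cleaned.append(row)
    return cleaned


def column_mean(rows, column):
    """Return the mean of a numeric column across the given rows."""
    return statistics.mean(float(row[column]) for row in rows)


rows = load_rows(RAW_CSV)
cleaned = clean_rows(rows)
print(f"{len(rows)} rows loaded, {len(cleaned)} kept after cleaning")
print("Mean age:", column_mean(cleaned, "age"))
```

Dropping incomplete rows is only one possible choice. Depending on the dataset, filling in missing values may be more appropriate, and deciding between the two is part of what this kind of practice teaches.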

By incorporating these resources into your study routine, you can strengthen your practical skills and be better prepared to handle any challenges that arise. Regular practice with different materials will ensure that you’re equipped with the knowledge and experience necessary to succeed.