The study of advanced systems principles encompasses a variety of critical topics essential for understanding the inner workings of modern computing systems. This area focuses on the design and functionality of key components that drive performance, efficiency, and reliability in machines.

Mastering these concepts requires a strong grasp of both theoretical frameworks and practical applications. By delving into areas such as processing units, memory organization, and data flow, students can prepare to tackle complex challenges. This guide covers essential topics, offering insights and helping reinforce knowledge through practical examples and common scenarios encountered in assessments.

Preparing effectively involves a clear understanding of the core elements that influence system behavior. Exploring the interactions between various subsystems allows for a deeper comprehension of how machines process and manage information. With the right preparation, success in any evaluation related to these subjects is within reach.

Computer Systems Review for Assessments

As you prepare for assessments in advanced systems design, it’s crucial to understand both foundational concepts and specific details that are often evaluated. This section highlights the key topics that frequently appear in evaluations, helping you focus on essential areas for review. The primary goal is to offer clarity on complex principles and their real-world applications, guiding your study process efficiently.

Key Areas to Focus On

In this section, we cover the main topics that are often tested, providing you with a structured approach to mastering each concept. From memory management techniques to processing unit functions, these subjects form the backbone of system-level evaluations. Each area is critical for understanding how different components of a machine interact to perform various tasks.

| Topic | Description | Key Concepts |
|---|---|---|
| Processing Unit Functions | Understanding the role of processing units in managing tasks. | Instruction cycle, data paths, control signals |
| Memory Systems | Exploring different types of memory and their interaction with the processor. | Cache, RAM, virtual memory |
| Data Flow Management | Examining how data moves through a system during computation. | Bus systems, pipeline architecture, data hazards |
| Advanced Processors | Delving into the differences between complex processors and simpler ones. | RISC vs CISC, pipelining, parallelism |

Effective Preparation Techniques

To excel in these assessments, it’s important to not only review theoretical concepts but also apply them through practice. Working through past problems, understanding the underlying principles, and being able to explain the function of each component are key strategies. Consistent problem-solving practice will enhance your ability to approach new challenges with confidence.

Key Topics for Computer Architecture Exams

To effectively prepare for evaluations in advanced systems design, it’s essential to focus on several core areas that form the foundation of this field. These topics not only encompass the theoretical principles but also emphasize their practical implications. Understanding how components interact and how systems are optimized is crucial for success in any related assessment.

- Processing Unit Design: The heart of any system, responsible for executing instructions and managing tasks.

- Memory Management: Understanding different memory types, their hierarchies, and how data is stored and retrieved.

- Data Flow and Control: The mechanisms that govern how data moves through a system and the role of control units in managing that flow.

- Parallelism and Pipelining: Techniques that increase system performance by allowing multiple tasks to be processed simultaneously.

- Instruction Sets: A detailed look at the sets of commands that processors understand and how they are executed.

- Performance Metrics: Key indicators such as clock speed, throughput, and efficiency that determine system performance.

Familiarity with these topics ensures a deeper understanding of how modern systems operate. By focusing on the interplay between components like processing units, memory, and data pathways, you can effectively address various challenges typically presented in assessments.

Be sure to also review:

- Cache memory design and its optimization strategies.

- The impact of I/O systems on overall system performance.

- Comparative analysis of RISC and CISC processor architectures.

- Advanced data storage solutions and their integration with system design.

Understanding Processor Design and Functions

At the core of any system lies the processing unit, which plays a vital role in executing tasks and controlling the flow of operations. The design of these units dictates how efficiently data is processed, how instructions are executed, and how resources are managed. A solid understanding of how processing units are structured and function is essential for grasping the overall performance of a system.

Processing units are responsible for carrying out instructions that make a system operational. They fetch, decode, and execute commands, interacting with memory and other subsystems to complete operations. The design of these units directly impacts factors such as speed, efficiency, and the ability to handle multiple tasks simultaneously.

Key elements of processor design include:

- Control Unit (CU): Directs the operation of the processor by interpreting instructions and coordinating the actions of other components.

- Arithmetic Logic Unit (ALU): Performs mathematical and logical operations essential for executing commands.

- Registers: Small, fast storage locations used to temporarily hold data during processing.

- Clock Speed: Sets the number of cycles the processor completes per second. Together with the number of cycles each instruction needs, it determines how quickly instructions execute.

Efficient processor design aims to optimize these components to reduce bottlenecks and maximize throughput. Techniques such as pipelining, where multiple stages of instruction processing overlap, are often employed to increase efficiency and processing speed.
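The fetch, decode, and execute steps described above can be sketched as a small loop. This is a minimal illustration only: the instruction names (`LOADI`, `ADD`, `SUB`, `HALT`), the register names, and the `run` function are invented for this example and do not correspond to any real processor.

```python
def run(program, registers=None):
    """Execute a list of (opcode, *operands) tuples and return the registers."""
    regs = dict(registers or {})
    pc = 0  # program counter: index of the next instruction
    while pc < len(program):
        instruction = program[pc]          # fetch
        opcode, *operands = instruction    # decode
        pc += 1
        if opcode == "LOADI":              # execute: load an immediate value
            dest, value = operands
            regs[dest] = value
        elif opcode == "ADD":              # execute: ALU addition
            dest, a, b = operands
            regs[dest] = regs[a] + regs[b]
        elif opcode == "SUB":              # execute: ALU subtraction
            dest, a, b = operands
            regs[dest] = regs[a] - regs[b]
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return regs


program = [
    ("LOADI", "r1", 5),
    ("LOADI", "r2", 7),
    ("ADD", "r3", "r1", "r2"),
    ("HALT",),
]
print(run(program)["r3"])  # prints 12
```

In this sketch the `if`/`elif` chain plays the role of the control unit, the arithmetic expressions stand in for the ALU, and the `regs` dictionary stands in for the register file.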

Memory Systems in Computer Architecture

Memory systems are a fundamental aspect of any machine, as they store and retrieve data required for processing tasks. A well-designed memory system allows for quick access to critical information, optimizing performance and efficiency. These systems are composed of various types of storage that differ in speed, capacity, and cost. Understanding how they interact is essential for comprehending the overall functioning of a system.

Types of Memory

Different memory types serve distinct roles within a system. Each memory type is characterized by specific attributes that influence its interaction with processing units. Some memories offer rapid access times but limited capacity, while others offer larger storage but slower access. A balanced memory hierarchy is key to achieving optimal performance.

| Memory Type | Characteristics | Use Case |
|---|---|---|
| Cache Memory | Small, high-speed storage that stores frequently accessed data. | Improving data retrieval speed for the processor. |
| RAM | Volatile memory used to store active data and programs. | Storing data currently in use for quick access. |
| ROM | Non-volatile memory that stores essential instructions. | Storing firmware and system boot instructions. |
| Hard Drive/SSD | Large, non-volatile storage with slower access times. | Storing long-term data and files. |

Memory Hierarchy and Optimization

To achieve high performance, systems employ a memory hierarchy that includes fast, small memories and slower, larger ones. Data is moved between different levels of memory depending on the current need, with frequently accessed data stored in faster types of memory. Optimizing this hierarchy is crucial for reducing latency and ensuring efficient operation.
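One common way to quantify the effect of a hierarchy is the average memory access time (AMAT): the hit time of a level plus its miss rate times the cost of going to the next level. The sketch below assumes that standard formula; the function names and all latencies and miss rates are illustrative values, not measurements of any real system.

```python
def amat(hit_time, miss_rate, miss_penalty):
    """Average memory access time for one level, in the same unit as hit_time."""
    return hit_time + miss_rate * miss_penalty


def two_level_amat(l1_hit, l1_miss_rate, l2_hit, l2_miss_rate, memory_time):
    """AMAT when an L1 miss is served by L2 and an L2 miss by main memory."""
    l2_time = amat(l2_hit, l2_miss_rate, memory_time)
    return amat(l1_hit, l1_miss_rate, l2_time)


# Illustrative numbers, in processor cycles.
single = amat(hit_time=1, miss_rate=0.05, miss_penalty=100)
layered = two_level_amat(1, 0.05, 10, 0.2, 100)
print(f"one cache level: {single:.2f} cycles")
print(f"two cache levels: {layered:.2f} cycles")
```

With these assumed numbers, adding a second cache level lowers the average access time because most L1 misses no longer pay the full main-memory penalty.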

Instruction Set Architectures Explained

The foundation of any processing unit lies in its ability to interpret and execute instructions. The set of commands a processor can understand and perform is referred to as its instruction set. This collection of instructions defines the machine’s functionality and determines how it interacts with data and memory. Understanding the structure and types of these instruction sets is essential for grasping how different systems operate and perform tasks.

Types of Instruction Sets

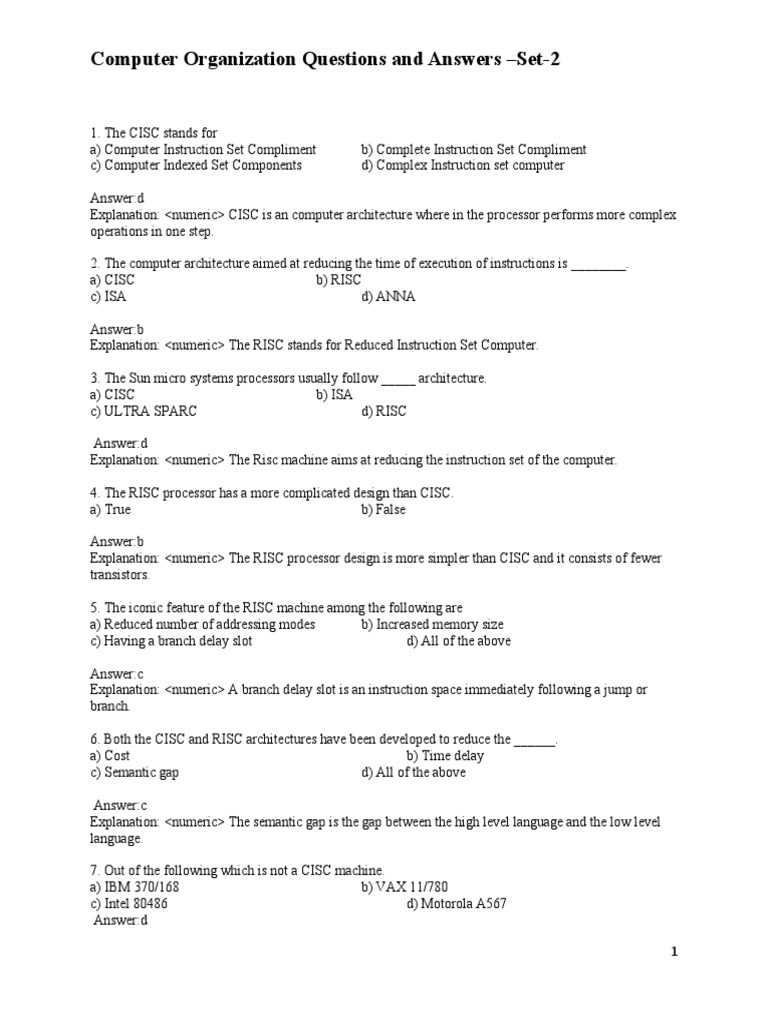

Instruction sets can vary significantly in terms of complexity and efficiency. Two main categories commonly discussed are Reduced Instruction Set Computer (RISC) and Complex Instruction Set Computer (CISC) designs. Each type offers distinct advantages depending on the design goals of the system. RISC systems aim for simplicity and speed by using a smaller number of simpler instructions, while CISC systems use a larger set of more complex commands to do more work per instruction.

| Instruction Set Type | Characteristics | Example Systems |
|---|---|---|
| RISC | Simpler, fewer instructions designed for fast execution. | ARM, MIPS |
| CISC | More complex, capable of performing multiple operations per instruction. | Intel x86 |

Key Elements of Instruction Sets

Each instruction set consists of various elements that work together to carry out commands. These include the opcodes (which define the operation), operands (the data or addresses on which the operation will act), and addressing modes (which specify how to access the operands). Understanding these components is crucial for programming and optimizing the performance of any processing system.
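To make opcodes and operands concrete, the sketch below packs and unpacks a made-up 16-bit instruction word: 4 bits of opcode, 4 bits naming a destination register, and an 8-bit immediate operand. The layout and the opcode numbers are assumptions chosen for illustration; real instruction sets define their own formats.

```python
# Hypothetical opcode numbering for this example only.
OPCODES = {0x1: "LOADI", 0x2: "ADDI"}


def encode(opcode, register, immediate):
    """Pack the three fields into one 16-bit instruction word."""
    return ((opcode & 0xF) << 12) | ((register & 0xF) << 8) | (immediate & 0xFF)


def decode(word):
    """Split a 16-bit word back into (opcode, register, immediate)."""
    opcode = (word >> 12) & 0xF
    register = (word >> 8) & 0xF
    immediate = word & 0xFF
    return opcode, register, immediate


word = encode(0x1, 3, 42)
print(hex(word))                      # prints 0x132a
opcode, register, immediate = decode(word)
print(OPCODES[opcode], register, immediate)  # prints LOADI 3 42
```

Here the immediate field is an example of immediate addressing, where the operand value is stored in the instruction itself rather than fetched from a register or memory.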

Evaluating Cache Memory and Its Importance

Cache memory plays a critical role in modern systems by bridging the gap between fast processing units and slower main storage. It is designed to hold frequently accessed data, allowing for quicker retrieval and reducing delays. Its efficiency significantly impacts overall system performance, making it a key factor in optimizing task execution and minimizing wait times.

Cache Types and Functionality

There are several types of cache memory, each serving a specific purpose in a memory hierarchy. The primary types include level 1 (L1), level 2 (L2), and level 3 (L3) caches. L1 cache is the fastest and closest to the processor, while L2 and L3 caches offer larger storage capacities but with slightly slower access times. These different levels work together to maximize the speed of data access and minimize bottlenecks.

Performance Benefits of Cache Memory

The presence of a well-designed cache system can greatly enhance system responsiveness. By storing frequently used data close to the processor, cache memory reduces the time required to fetch information from slower storage devices. The ability to access data rapidly ensures that processing tasks are completed more efficiently, leading to faster overall system performance.
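The benefit of keeping recently used data close to the processor can be seen in a small simulation. The sketch below assumes a direct-mapped cache, one of several possible organizations, with a hypothetical 4 lines of 16 bytes each; it only counts hits and misses and does not store any data.

```python
def simulate_direct_mapped(addresses, num_lines=4, block_size=16):
    """Return (hits, misses) for a sequence of byte addresses."""
    lines = [None] * num_lines  # tag currently held by each cache line
    hits = 0
    for address in addresses:
        block = address // block_size   # which memory block the byte is in
        index = block % num_lines       # the one line this block may occupy
        tag = block // num_lines        # identifies the block within that line
        if lines[index] == tag:
            hits += 1
        else:
            lines[index] = tag          # miss: fetch the block, replacing the old one
    return hits, len(addresses) - hits


# Addresses 0, 4, and 8 share one block; address 64 maps to the same line
# and evicts it, so the next access to address 0 misses again.
print(simulate_direct_mapped([0, 4, 8, 64, 0, 4]))  # prints (3, 3)
```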

Exploring Virtual Memory Mechanisms

Virtual memory is an essential concept in modern systems, enabling efficient management of memory resources by using a combination of physical memory and disk storage. This mechanism creates an illusion for programs, making them believe they have access to a large, contiguous block of memory, even though the actual physical memory may be limited. By using virtual memory, systems can run more applications simultaneously, improving multitasking and resource utilization.

Paging and Segmentation

The two main techniques for implementing virtual memory are paging and segmentation. Paging divides memory into fixed-size blocks, known as pages, while segmentation splits memory into variable-sized sections based on logical divisions of a program. Both methods allow the system to efficiently manage memory, ensuring that processes can access the data they need without running out of space.

Page Tables and Address Translation

Page tables are used to map virtual addresses to physical addresses. When a process accesses memory, the hardware splits the virtual address into a page number and an offset, looks up the page number in the page table, and combines the resulting physical frame number with the offset. If the page is currently in physical RAM, the access proceeds; if it has been swapped out to disk, a page fault occurs and the operating system loads the page into memory before the access is retried. The efficiency of this process is crucial for maintaining optimal system performance.
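Address translation with a single-level page table can be sketched in a few lines. The 4096-byte page size, the dictionary-based page table, and the `PageFault` exception are assumptions made for this example; real systems use hardware-defined table formats and usually several table levels.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when the requested page is not resident in physical memory."""


def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    """Map a virtual address to a physical address using a page table."""
    page_number, offset = divmod(virtual_address, page_size)
    frame_number = page_table.get(page_number)
    if frame_number is None:
        raise PageFault(f"page {page_number} is not in physical memory")
    return frame_number * page_size + offset


# Virtual page -> physical frame; page 2 is deliberately missing.
page_table = {0: 5, 1: 2}
print(translate(4100, page_table))  # page 1, offset 4 -> frame 2 -> prints 8196

try:
    translate(2 * PAGE_SIZE, page_table)
except PageFault as fault:
    print("page fault:", fault)
```

In a real system the operating system would handle the fault by loading the page from disk, updating the table, and retrying the access.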

Comprehending Data Hazards in Pipelines

In modern processing systems, pipelines are used to execute multiple instructions concurrently, improving throughput and overall system efficiency. However, when instructions depend on the results of previous ones, conflicts can arise, leading to delays or incorrect results. These conflicts, known as data hazards, can significantly impact performance if not properly managed. Understanding the different types of data hazards and how to resolve them is crucial for optimizing pipeline performance.

Types of Data Hazards

There are three main types of data hazards: read-after-write (RAW), write-after-read (WAR), and write-after-write (WAW). Each type represents a different ordering conflict between an earlier and a later instruction. RAW hazards, often referred to as true dependencies, occur when an instruction needs to read a value that an earlier instruction has not yet finished writing. WAR hazards (anti-dependencies) occur when a later instruction writes to a register before an earlier instruction has read its old value. WAW hazards (output dependencies) occur when two instructions write to the same register and the writes could complete out of program order, leaving the wrong final value. WAR and WAW hazards mainly arise in pipelines that allow out-of-order execution or completion.

Resolving Data Hazards

Several techniques are employed to handle data hazards effectively. One common method is data forwarding (also called bypassing), which passes the result of an earlier instruction directly to a dependent instruction without waiting for it to be written back to the register file. Another approach is pipeline stalling, where the pipeline temporarily holds certain stages until the necessary data becomes available. While stalling introduces delays, it ensures that instructions still execute correctly.
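Before a hazard can be resolved by forwarding or stalling, it has to be detected by comparing the registers two instructions read and write. The sketch below classifies the register dependencies between an earlier and a later instruction; the `Instr` record and the register names are hypothetical, and whether a dependency actually causes a hazard depends on the pipeline design.

```python
from collections import namedtuple

# dest: register written (or None); srcs: tuple of registers read.
Instr = namedtuple("Instr", ["dest", "srcs"])


def hazards(earlier, later):
    """Return the set of dependency types from `earlier` to `later`."""
    found = set()
    if earlier.dest is not None and earlier.dest in later.srcs:
        found.add("RAW")  # later reads what earlier writes
    if later.dest is not None and later.dest in earlier.srcs:
        found.add("WAR")  # later overwrites what earlier still has to read
    if earlier.dest is not None and earlier.dest == later.dest:
        found.add("WAW")  # both write the same register
    return found


add = Instr(dest="r1", srcs=("r2", "r3"))   # r1 = r2 + r3
sub = Instr(dest="r4", srcs=("r1", "r5"))   # r4 = r1 - r5
mov = Instr(dest="r2", srcs=("r6",))        # r2 = r6

print(hazards(add, sub))  # prints {'RAW'}
print(hazards(add, mov))  # prints {'WAR'}
```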

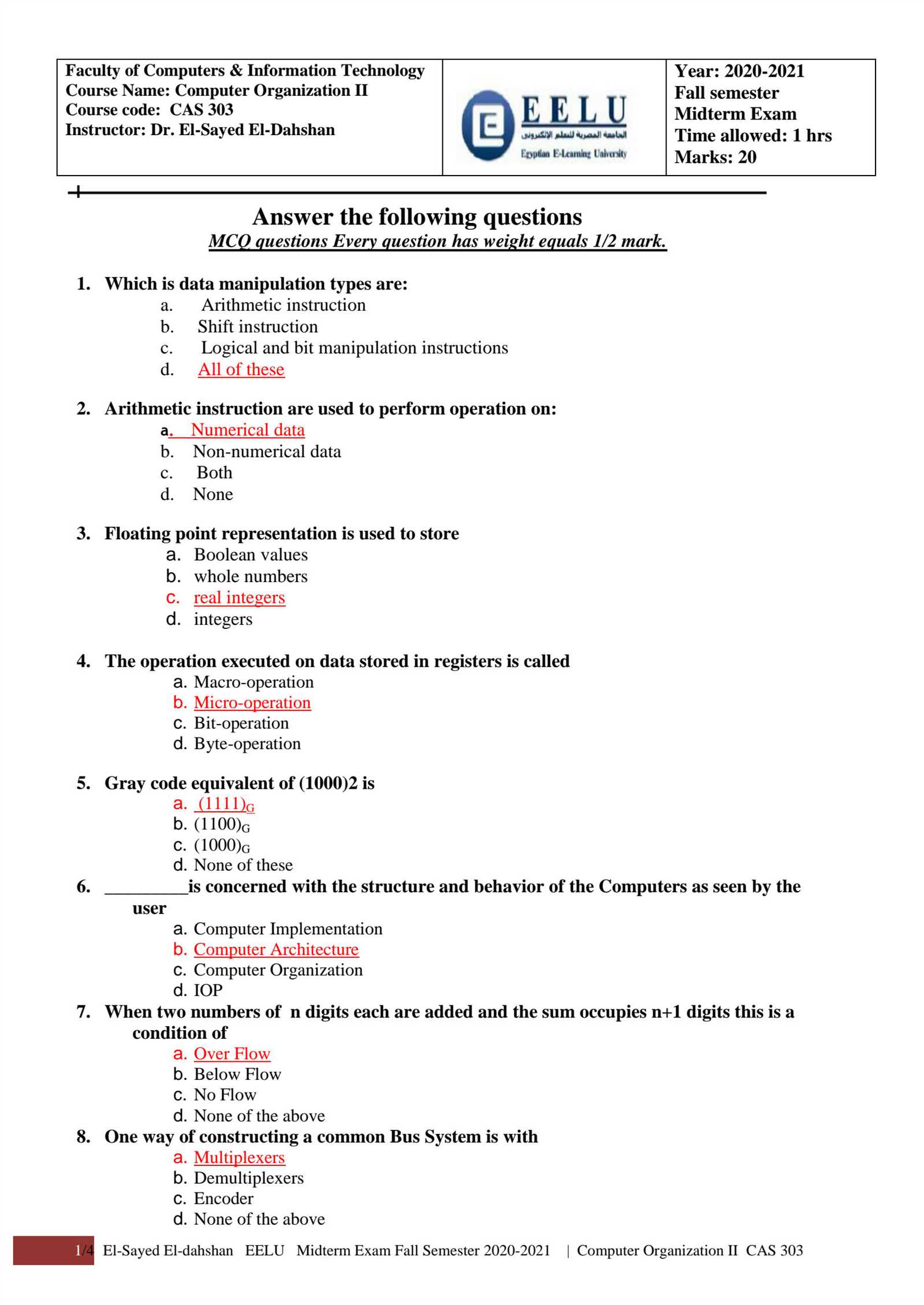

Arithmetic Logic Units and Their Role

The Arithmetic Logic Unit (ALU) is a fundamental component in any processing unit, responsible for carrying out various arithmetic and logical operations. It forms the core of the computational tasks performed by a system, ranging from simple calculations to more complex decision-making processes. By executing a series of operations, the ALU ensures that the system can perform a wide array of tasks, from adding numbers to performing bitwise logic.

Functions of the Arithmetic Logic Unit

The ALU is designed to handle both arithmetic operations, such as addition, subtraction, multiplication, and division, as well as logical operations like comparison, bitwise AND, OR, and NOT. These operations are crucial for the execution of instructions, enabling the system to manipulate data and make decisions based on conditional checks. The versatility of the ALU is key to its ability to support complex algorithms and applications.
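The operations listed above can be modelled as a single function that takes an operation name and operands and returns a result plus status flags. This is a sketch of an assumed 8-bit ALU; the operation names and the flag set are chosen for illustration and differ between real designs.

```python
MASK = 0xFF  # assumed 8-bit data width


def alu(op, a, b=0):
    """Return (result, flags) for an 8-bit operation on a and b."""
    a &= MASK
    b &= MASK
    if op == "ADD":
        raw = a + b
    elif op == "SUB":
        raw = a + ((~b) & MASK) + 1   # subtract by adding the two's complement
    elif op == "AND":
        raw = a & b
    elif op == "OR":
        raw = a | b
    elif op == "NOT":
        raw = ~a & MASK
    else:
        raise ValueError(f"unsupported operation: {op}")
    result = raw & MASK
    flags = {
        "zero": result == 0,                # used for equality comparisons
        "carry": raw > MASK,                # carry out of bit 7 (for SUB: no borrow)
        "negative": bool(result & 0x80),    # sign bit of the result
    }
    return result, flags


print(alu("ADD", 200, 100))  # result 44 with the carry flag set
print(alu("SUB", 5, 5))      # result 0 with the zero flag set
```

Comparison is typically built on subtraction: a conditional branch inspects flags such as `zero` and `negative` rather than the numeric result.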

Impact on System Performance

Efficient operation of the ALU is critical to the overall performance of a system. A high-performance ALU can significantly reduce the time required to process tasks, allowing for faster execution of programs. Additionally, the integration of multiple ALUs in parallel processing systems can further enhance computational throughput, enabling the simultaneous execution of multiple operations for increased efficiency.

Impact of I/O Systems on Performance

The efficiency of input/output (I/O) systems plays a critical role in determining the overall performance of a system. These systems facilitate the communication between the central processing unit (CPU) and external devices, such as storage drives, networks, and peripherals. The speed at which data can be transferred between these components directly affects the responsiveness and throughput of the entire system. Inefficient I/O operations can lead to significant delays and bottlenecks, hindering performance even if the processing power of the CPU is high.

Key Factors Influencing I/O Performance

Several factors influence the effectiveness of I/O systems, which include:

- Data Transfer Rate: The speed at which data moves between devices and memory plays a central role in system performance. High transfer rates reduce the time spent waiting for data, increasing overall system efficiency.

- Device Access Time: The time it takes to access data from storage devices can significantly impact performance. Faster access times ensure that tasks are completed more quickly, improving system responsiveness.

- Concurrency: The ability to handle multiple I/O operations simultaneously can enhance performance by reducing idle time. Systems that allow parallel data transfers often experience better throughput.

Improving I/O Efficiency

To maximize system performance, optimizing I/O operations is essential. Some techniques to achieve this include:

- Buffering: Storing data temporarily in memory before it is processed can help to reduce the load on I/O channels and prevent delays.

- Direct Memory Access (DMA): By allowing peripheral devices to transfer data directly to memory, DMA reduces CPU involvement in I/O operations, freeing up resources for other tasks.

- Prioritization: Giving priority to critical tasks or I/O operations can help ensure that time-sensitive processes are completed faster, avoiding unnecessary delays.

By addressing these aspects, systems can significantly enhance the speed and efficiency of I/O interactions, contributing to improved overall performance.

Understanding the Role of Multiprocessing

Multiprocessing is a technique that involves using multiple processing units to execute tasks concurrently, allowing a system to handle more complex and demanding operations efficiently. This approach divides work into smaller, parallel tasks, which are processed simultaneously, leading to improved performance, faster computation, and better resource utilization. By leveraging multiple processors, systems can perform computations at a higher speed, optimize throughput, and manage multiple workloads effectively.

Types of Multiprocessing

There are different types of multiprocessing systems that vary in how they manage tasks and resources. These include:

- Symmetric Multiprocessing (SMP): In SMP systems, all processors have equal access to the memory and resources, and they share the workload equally. This type of system is widely used in general-purpose computing.

- Asymmetric Multiprocessing (AMP): In AMP systems, one processor, often called the master processor, controls the operation of the other processors, which perform specific tasks. This setup is useful in systems that require dedicated resources for specialized tasks.

- Clustered Multiprocessing: This configuration involves linking multiple independent computers (or nodes) to work together as a unified system. This model is often used for high-performance computing in research and data centers.

Advantages of Multiprocessing

Multiprocessing offers several significant benefits that enhance system efficiency:

- Increased Performance: By processing multiple tasks at once, systems can complete operations much faster than with a single processor.

- Improved Reliability: Multiprocessing allows tasks to be distributed across different processors, reducing the risk of failure affecting the entire system.

- Scalability: As workloads increase, additional processors can be added to the system, allowing for scalable performance.

Through these advantages, multiprocessing enables systems to handle larger, more complex tasks while maintaining high efficiency and responsiveness.
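How much faster a multiprocessor runs a task depends on how much of the task can actually run in parallel. A standard way to estimate this is Amdahl's law, which is not named in the text above but is commonly used alongside it. The sketch assumes that formula, and the parallel fractions used are illustrative.

```python
def amdahl_speedup(parallel_fraction, processors):
    """Estimated speedup when `parallel_fraction` of the work is spread
    evenly over `processors` and the rest stays serial."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / processors)


for count in (2, 4, 16, 1024):
    print(count, round(amdahl_speedup(0.9, count), 2))
```

With 90% of the work parallelizable, the estimate never reaches 10x however many processors are added, because the serial 10% always remains.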

Principles Behind RISC and CISC Architectures

The design of processing systems is influenced by different approaches that focus on the number and complexity of instructions used to execute tasks. Two prominent models, known as RISC and CISC, have distinct philosophies when it comes to how instructions are structured and processed. These two models aim to optimize performance in different ways, depending on the target application and system requirements.

RISC: Reduced Instruction Set

RISC systems prioritize simplicity by using a small, highly optimized set of instructions. Most instructions are designed to be simple enough to complete in about one clock cycle in a pipelined implementation, and memory is typically accessed only through dedicated load and store instructions. The primary idea behind this model is that simpler instructions can be executed more efficiently, allowing for higher performance in many scenarios. By limiting the number and complexity of instructions, the hardware becomes less complex, which can lead to faster instruction execution and lower power consumption.

- Efficiency: The simplified set of instructions results in quicker execution, reducing the time spent on complex tasks.

- Lower Hardware Complexity: A small number of instructions reduces the overall complexity of the processor, making it easier to design and maintain.

- Improved Performance: With fewer cycles needed per instruction, tasks are completed faster, leading to enhanced processing speed.

CISC: Complex Instruction Set

On the other hand, CISC systems use a larger, more complex set of instructions. A single instruction can perform several operations, such as loading a value from memory, operating on it, and storing the result, which reduces the number of instructions required for a task. While this approach can make programs more compact, it also makes decoding and execution more complex, and an individual instruction may take several clock cycles to execute. CISC has often been favored where memory is scarce and where fewer instructions are needed to complete complex operations.

- Reduced Program Size: Complex instructions allow for more functionality within a single instruction, which can reduce the overall size of the program.

- More Flexibility: The ability to execute more complex tasks with fewer instructions provides greater flexibility in handling intricate operations.

- Efficient Memory Use: By combining multiple operations into a single instruction, fewer instruction fetches from memory may be needed for the same work.

Both RISC and CISC have their unique advantages and limitations. The choice between the two depends on the specific needs of the system and the applications it is designed to support.
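The trade-off can be made concrete with the usual CPU time relation: execution time equals instruction count times average cycles per instruction (CPI), divided by the clock rate. The sketch below applies that relation to two invented design points; none of the numbers describe a real processor, and they are chosen only to show that fewer instructions do not automatically mean less time.

```python
def cpu_time(instruction_count, cycles_per_instruction, clock_hz):
    """Execution time in seconds for a program on a given design."""
    return instruction_count * cycles_per_instruction / clock_hz


# Hypothetical figures for the same program compiled for two designs.
risc_like = cpu_time(instruction_count=1.2e9, cycles_per_instruction=1.2, clock_hz=2e9)
cisc_like = cpu_time(instruction_count=0.8e9, cycles_per_instruction=2.0, clock_hz=2e9)

print(f"RISC-like design: {risc_like:.2f} s")
print(f"CISC-like design: {cisc_like:.2f} s")
```

In this made-up case the design with more instructions finishes sooner because each instruction needs fewer cycles; different assumed values would reverse the result.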

Memory Hierarchy and Its Optimization

In modern systems, efficient data storage and retrieval are essential for maximizing performance. Memory hierarchy refers to the organization of different types of storage, each optimized for specific roles. This structure is designed to reduce the time it takes to access data by leveraging faster, more expensive memory at the top of the hierarchy and slower, more cost-effective memory at the bottom. The goal is to create a balance that minimizes latency while optimizing resource usage.

Levels of Memory Hierarchy

The memory hierarchy typically consists of several levels, each designed to provide varying amounts of speed and capacity. The closer the memory is to the processor, the faster it operates, but the more expensive it is per byte. Conversely, memory that is farther from the processor is slower but offers larger storage capacity at a lower cost.

- Registers: Located within the processor, these are the fastest form of memory but are very limited in size.

- Cache: A small amount of fast memory situated between the processor and main memory, which helps speed up data access by storing frequently used information.

- Main Memory (RAM): This is the primary storage used by the system, offering larger capacity but slower access times compared to cache.

- Secondary Storage: Includes hard drives and solid-state drives, which provide long-term storage but are significantly slower than RAM.

Optimizing Memory Hierarchy

Efficient memory hierarchy optimization is crucial for improving system performance. Several techniques can be used to enhance the overall memory management process, ensuring that the right type of memory is accessed at the right time.

- Data Locality: Exploiting both temporal locality (recently used data is likely to be reused soon) and spatial locality (data near a recent access is likely to be accessed next) is essential for reducing latency and improving cache hit rates.

- Cache Management: Implementing strategies such as cache replacement algorithms (e.g., LRU or LFU) helps ensure that the most relevant data is stored in faster memory, improving access speeds.

- Prefetching: Predicting which data will be needed next and loading it into faster memory before it is accessed can help reduce waiting times.

- Memory Bandwidth: Enhancing the memory bandwidth ensures that data can be transferred to and from memory faster, reducing bottlenecks in data retrieval.

By understanding the structure of memory hierarchy and employing optimization techniques, systems can achieve better performance, responsiveness, and efficiency. Effective memory management ensures that processing speed is maximized without overwhelming the available resources.
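As one example of the cache replacement strategies mentioned above, the sketch below simulates a least recently used (LRU) policy and counts hits for a sequence of accesses. The function name, the access pattern, and the capacity are illustrative assumptions; hardware caches usually approximate LRU rather than tracking exact order.

```python
from collections import OrderedDict


def lru_hits(accesses, capacity):
    """Count cache hits for `accesses` under LRU replacement."""
    cache = OrderedDict()  # keys ordered from least to most recently used
    hits = 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)          # mark as most recently used
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)   # evict the least recently used key
            cache[key] = True
    return hits


print(lru_hits(["A", "B", "C", "A", "D", "B"], capacity=3))  # prints 1
print(lru_hits([1, 2, 1, 2, 1, 2], capacity=2))              # prints 4
```

The second pattern has strong temporal locality, so almost every access after the first two hits; the first pattern evicts "B" just before it is needed again.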

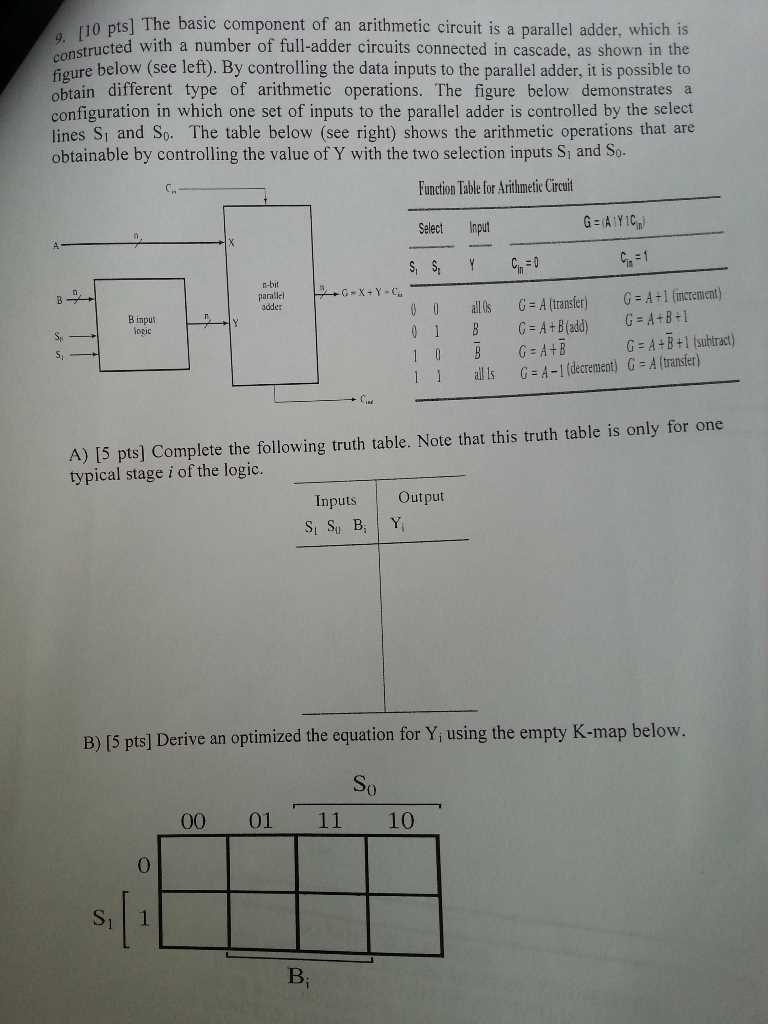

Detailed Look at Computer Arithmetic

Arithmetic operations are at the core of how systems process data, enabling everything from basic calculations to complex computations. The design of these operations involves specialized methods to handle numbers, both whole and fractional, as well as to manage overflow, precision, and rounding errors. Understanding how systems perform arithmetic tasks is essential for optimizing processing power and ensuring accurate results in various applications.

Types of Arithmetic Operations

There are several types of operations that systems commonly perform, each requiring distinct methods of execution. The most basic are addition, subtraction, multiplication, and division, but more advanced functions such as exponentiation, square roots, and trigonometric functions also play important roles in certain applications.

- Addition: This is typically the simplest operation, implemented using binary addition algorithms. For signed numbers, methods like two’s complement are often used to represent negative values.

- Subtraction: Usually handled by converting the operation into the addition of a negated value. With two’s complement, the second operand is inverted and 1 is added, so the same adder circuit performs both operations and no separate borrow logic is needed.

- Multiplication: Multiplication is often more complex, involving repeated addition or bit-shifting methods to achieve results quickly. Algorithms like Booth’s multiplication are commonly employed in hardware implementations.

- Division: This operation generally requires more time and resources than the others. Hardware often implements binary long division using restoring or non-restoring division algorithms.
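Two of the ideas in this list, two's complement representation and multiplication by shifting and adding, can be sketched directly. The 8-bit width and the function names are assumptions for this example, and `shift_add_multiply` is the basic shift-and-add method rather than Booth's algorithm.

```python
BITS = 8  # assumed word width


def to_twos(value, bits=BITS):
    """Encode a signed integer as an unsigned two's complement bit pattern."""
    return value & ((1 << bits) - 1)


def from_twos(pattern, bits=BITS):
    """Decode an unsigned bit pattern back to a signed integer."""
    sign_bit = 1 << (bits - 1)
    return pattern - (1 << bits) if pattern & sign_bit else pattern


def add_signed(a, b, bits=BITS):
    """Add two in-range signed values with wraparound; also report overflow."""
    total = (to_twos(a, bits) + to_twos(b, bits)) & ((1 << bits) - 1)
    result = from_twos(total, bits)
    # Signed overflow: operands share a sign but the result's sign differs.
    overflow = (a >= 0) == (b >= 0) and (result >= 0) != (a >= 0)
    return result, overflow


def shift_add_multiply(a, b):
    """Multiply two non-negative integers using only shifts and adds."""
    product = 0
    while b:
        if b & 1:          # lowest bit of the multiplier is set
            product += a
        a <<= 1            # next bit is worth twice as much
        b >>= 1
    return product


print(to_twos(-1))               # prints 255
print(add_signed(5, -3))         # prints (2, False)
print(add_signed(100, 28))       # prints (-128, True)
print(shift_add_multiply(13, 11))  # prints 143
```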

Handling Special Numbers and Precision

In addition to basic operations, managing precision and handling special types of numbers, such as floating-point values, are critical in arithmetic systems. Floating-point numbers allow for the representation of very large or very small numbers but introduce challenges with rounding errors and precision loss.

- Floating-Point Arithmetic: This involves representing numbers in a form of binary scientific notation, with a sign, a significand (mantissa), and an exponent. Most hardware follows the IEEE 754 standard, which defines the number formats, rounding rules, and special values such as infinities and NaN so that results are consistent across systems.

- Overflow and Underflow: Systems must be designed to detect when results fall outside the range of representable values. Overflow occurs when the magnitude of a result is too large to represent, while underflow occurs when a nonzero result is too close to zero to be represented at normal precision.

- Rounding Errors: When performing calculations, especially with floating-point numbers, rounding errors can accumulate. Various rounding methods, such as round-to-nearest or round-toward-zero, are implemented to mitigate these effects.
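These effects are easy to observe in practice. The sketch below uses Python's standard `struct` module to split a 32-bit IEEE 754 value into its sign, exponent, and fraction fields, then shows a rounding error, an overflow, and an underflow with ordinary 64-bit floats. The `decompose` helper is written for this example.

```python
import math
import struct


def decompose(x):
    """Return (sign, biased exponent, fraction) of x as a 32-bit float."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    sign = bits >> 31
    exponent = (bits >> 23) & 0xFF     # 8-bit exponent, stored with a bias of 127
    fraction = bits & 0x7FFFFF         # 23 stored bits of the significand
    return sign, exponent, fraction


print(decompose(1.0))    # prints (0, 127, 0)
print(decompose(-2.5))   # prints (1, 128, 2097152)

# Rounding error: 0.1 and 0.2 have no exact binary representation.
print(0.1 + 0.2 == 0.3)              # prints False
print(math.isclose(0.1 + 0.2, 0.3))  # prints True

# Overflow to infinity and underflow to zero in 64-bit floats.
print(1e308 * 10)    # prints inf
print(5e-324 / 2)    # prints 0.0
```

Comparing floating-point results with a tolerance, as `math.isclose` does, is the usual way to cope with accumulated rounding error.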

Understanding these key concepts in arithmetic operations helps developers and engineers design more efficient systems that can handle computations quickly and accurately, minimizing errors and improving overall performance.

Fault Tolerance in Computer Systems

Ensuring reliability and continuous operation is crucial in any system, especially when it comes to handling unexpected failures. Fault tolerance involves the strategies and techniques used to prevent system downtime or data loss when certain components malfunction. The goal is to design systems that can detect, recover from, and continue operating in the presence of faults, ensuring minimal disruption to services and processes.

Key Techniques for Achieving Fault Tolerance

Several methods can be employed to build fault-tolerant systems, each addressing different potential risks and failure points. By implementing redundancy, error detection, and recovery mechanisms, systems can maintain their integrity even when individual components fail.

- Redundancy: This involves duplicating critical system components, such as processors, memory, or storage, so that if one component fails, the system can continue functioning by switching to the backup. Techniques like RAID (Redundant Array of Independent Disks) are commonly used for storage redundancy.

- Error Detection and Correction: Specialized codes let systems detect, and in some cases correct, errors in data transmission or storage. Cyclic redundancy checks (CRC) detect corruption so that data can be retransmitted or re-read, while error-correcting codes such as Hamming codes can also locate and fix single-bit errors.

- Graceful Degradation: In this approach, when a failure occurs, the system does not stop entirely. Instead, it continues to operate at a reduced capacity, allowing essential functions to persist while less critical ones are compromised.
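To illustrate error correction, the sketch below implements the classic Hamming(7,4) code, which adds three parity bits to four data bits so that any single flipped bit can be located and repaired. The function names are chosen for this example, and the bit ordering (parity bits at positions 1, 2, and 4) is one common convention.

```python
def hamming_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_position = s1 + 2 * s2 + 4 * s4   # 0 means no error detected
    if error_position:
        c[error_position - 1] ^= 1          # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]]


codeword = hamming_encode([1, 0, 1, 1])
print(codeword)                  # prints [0, 1, 1, 0, 0, 1, 1]

corrupted = list(codeword)
corrupted[4] ^= 1                # simulate a single-bit fault
print(hamming_decode(corrupted)) # prints [1, 0, 1, 1]
```

This code corrects only single-bit errors; two flipped bits in one codeword would be miscorrected, which is why practical memories often add an extra parity bit to detect double errors.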

Applications and Importance of Fault Tolerance

Fault tolerance is critical in environments where system availability is paramount, such as in data centers, critical infrastructure, or aerospace systems. Systems in these fields often face high operational demands, and even small outages can lead to significant consequences.

- Data Centers: In these high-demand environments, fault tolerance ensures that services like cloud computing or web hosting remain available even when hardware or software failures occur.

- Aerospace and Medical Systems: These systems rely on fault tolerance to guarantee safety and operational reliability. Failures in these fields can lead to catastrophic outcomes, making fault tolerance an essential aspect of system design.

- Consumer Electronics: From smartphones to laptops, fault tolerance allows devices to maintain functionality despite potential issues with individual components like batteries or processors.

Incorporating fault tolerance into system design ensures that potential failures are managed proactively, minimizing downtime and maintaining system reliability in critical operations.

Recent Advancements in Computer Systems Design

The evolution of system design has seen remarkable progress in recent years, with new innovations focused on improving processing power, energy efficiency, and overall performance. Modern systems are now pushing the boundaries of what was once considered possible, leveraging cutting-edge technologies to meet the growing demands of diverse applications. From the development of multi-core processors to the emergence of specialized computing models, these advancements are reshaping how systems handle complex tasks and workloads.

Innovations in Processing Power

One of the key areas of advancement is in processing capabilities. Increased parallelism and the introduction of new processing paradigms have enabled systems to handle more complex operations efficiently. Significant breakthroughs include:

- Multi-Core Processors: By integrating multiple processing units on a single chip, systems can execute several tasks simultaneously. This improves performance for multi-threaded applications, although the gain is limited by the portion of the work that must still run serially.

- Quantum Computing: While still in its infancy, quantum computing holds the potential to solve certain problems that are intractable for traditional systems. The expected speedups are problem-specific: large for tasks such as integer factoring, but modest or absent for many everyday workloads.

- Neuromorphic Computing: Inspired by the human brain, neuromorphic systems implement spiking neural networks directly in hardware, typically with event-driven operation and memory placed close to computation. They offer promising low-power applications in AI and machine learning.
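
How much a multi-core processor helps depends on how much of a program can actually run in parallel. A common way to estimate this in assessments is Amdahl's law, speedup = 1 / ((1 - p) + p / n), where p is the parallelizable fraction and n is the number of cores. The sketch below is illustrative only; the function name and the 90% figure are assumptions chosen for the example, not values from this guide.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup under Amdahl's law.

    parallel_fraction: share of the work (0.0 to 1.0) that can run in parallel.
    cores: number of processing cores available.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time; only the parallel part is divided.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workload: 90% of the run time is parallelizable.
    for cores in (1, 2, 4, 8, 16):
        print(f"{cores:>2} cores: {amdahl_speedup(0.9, cores):.2f}x")
```

With 90% of the work parallelizable, 16 cores give a speedup of about 6.4x, and no number of cores can push it past 10x, because the serial 10% always remains.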

Improving Energy Efficiency and Sustainability

As the demand for computing power continues to rise, energy consumption has become a critical concern. Recent advancements focus not only on improving performance but also on making systems more energy-efficient:

- Low-Power Processors: The development of energy-efficient chips, such as ARM-based processors, has made it possible to run high-performance applications while minimizing power consumption, which is essential for mobile and embedded systems.

- Optimized Cooling Techniques: Advanced cooling methods, such as liquid cooling and improved heat sinks, are being employed to address the thermal challenges of high-performance computing systems.

- Green Computing: Sustainable computing practices are being integrated into system design, focusing on reducing environmental impact through the use of recyclable materials and low-energy components.
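
One reason low-power designs are effective is that the dynamic (switching) power of CMOS logic is commonly approximated as P ≈ α · C · V² · f, where α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below uses that textbook approximation; the function name and the 20% scaling figures are assumptions for illustration, and static (leakage) power is ignored.

```python
def dynamic_power_ratio(voltage_scale: float, frequency_scale: float) -> float:
    """Ratio of new to old dynamic power when V and f are scaled.

    Based on P ~ alpha * C * V^2 * f with alpha and C held constant,
    so only the V^2 * f term changes. Leakage power is not modeled.
    """
    if voltage_scale <= 0 or frequency_scale <= 0:
        raise ValueError("scale factors must be positive")
    return voltage_scale ** 2 * frequency_scale


if __name__ == "__main__":
    # Hypothetical case: lower both supply voltage and clock frequency by 20%.
    ratio = dynamic_power_ratio(0.8, 0.8)
    print(f"Dynamic power falls to {ratio:.1%} of the original")
```

Under these assumptions, a 20% reduction in both voltage and frequency cuts dynamic power to roughly half (0.8³ ≈ 0.512) while giving up only 20% of the clock rate, which is why voltage and frequency scaling is so widely used in mobile and embedded processors.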

These innovations in processing power and energy efficiency are enabling systems to meet the demands of today’s applications, from data centers and cloud computing to IoT and edge devices, making them more powerful, efficient, and sustainable.

Study Strategies for Final Success

Success in a challenging assessment requires focused effort, effective time management, and a deep understanding of key topics. The following strategies combine active learning, consistent review, and sound test-taking techniques into a structured path for preparing for high-stakes assessments.

Time Management and Planning

Proper time management is crucial for making the most of study sessions. A well-organized schedule helps break down tasks into manageable parts, ensuring that each area receives the attention it requires. Consider the following tips:

- Start Early: Avoid last-minute cramming by beginning your preparation well in advance. Allocate specific time blocks for studying, and stick to them consistently.

- Prioritize Topics: Identify the most important concepts and those that need more attention. Focus on difficult or unfamiliar material first, and review easier topics as the assessment date approaches.

- Set Realistic Goals: Break your study material into smaller chunks and set achievable daily goals. This keeps the process from feeling overwhelming and ensures steady progress.

Active Learning Techniques

Engaging actively with the material, rather than passively reading or listening, is key to retention. Here are some methods to enhance active learning:

- Practice Problems: Solve as many practice questions as possible. This allows you to apply theoretical knowledge in practical scenarios, reinforcing learning.

- Teach Others: Explaining concepts to someone else forces you to organize and clarify your understanding. Teaching is an excellent method for reinforcing material.

- Utilize Flashcards: Create flashcards for key definitions, formulas, and concepts. Review them regularly to strengthen recall.

Test-Taking Strategies

When the assessment day arrives, it’s important to approach it with a clear mindset and strategies to maximize your performance:

- Stay Calm: Anxiety can hinder your ability to think clearly. Practice relaxation techniques like deep breathing before and during the test.

- Read Instructions Carefully: Take time to read all questions and instructions thoroughly. Misunderstanding a question can cost valuable time and marks.

- Manage Your Time: Ensure that you allocate enough time for each section. If a question is too difficult, move on and return to it later.

By combining effective planning, active study methods, and efficient test-taking strategies, you can approach assessments with confidence. These strategies also build habits that contribute to long-term academic achievement.