Turning raw data into usable insight has become essential for making informed decisions. Data analysis is the set of methods used to uncover patterns and relationships in large collections of numbers and records. As more industries adopt analytical tools, these skills matter for professionals in nearly every sector.

Interpreting data well makes it possible to identify trends, solve problems, and forecast outcomes, but it comes with challenges. Inconsistent inputs and a poorly chosen method both undermine the quality and reliability of the results. This article covers the common hurdles, the main methodologies, and the best practices for working through them.

Whether you’re a beginner or a seasoned practitioner, the core principles of processing, modeling, and interpreting data are the same. The sections that follow pair each common hurdle with practical ways to address it.

Data Evaluation Insights and Solutions

Understanding the key concepts behind interpreting complex information is essential for making accurate predictions and decisions. Throughout this process, many professionals encounter similar hurdles and uncertainties. Addressing these challenges requires not only knowledge of the tools available but also the ability to choose the right techniques to uncover valuable insights.

One common concern is selecting the appropriate method for processing raw numbers and facts. There are various strategies for tackling this, each with its strengths and limitations. Another challenge involves ensuring the quality and consistency of the input material. Without reliable input, even the most advanced tools may yield misleading results.

Clarity in interpreting outcomes is also a critical element. Professionals often struggle with extracting meaningful trends and patterns from seemingly chaotic data. By focusing on the right indicators and avoiding unnecessary complexity, it’s possible to extract actionable insights more effectively.

Techniques such as statistical modeling, visual representation, and machine learning algorithms play a vital role in simplifying these processes. Each approach offers unique advantages depending on the type of task at hand. Gaining proficiency with these tools helps practitioners improve the accuracy and relevance of their findings, making them better equipped to solve real-world problems.

What Is Data Analysis

At its core, data analysis means examining raw data to uncover patterns, trends, and insights that can inform decisions and predictions. It is a methodical way of turning large amounts of unorganized records into clear, actionable findings.

Key Objectives of the Process

- Identifying significant patterns and correlations

- Enhancing decision-making by providing clear insights

- Improving processes by recognizing inefficiencies

- Forecasting future outcomes based on historical evidence

Common Methods Used

- Statistical Techniques: Applying mathematical models to identify trends and make predictions.

- Machine Learning: Using algorithms to identify patterns and automate decision-making processes.

- Visual Representation: Converting raw numbers into graphs and charts to highlight key points.

Each approach serves a unique purpose, depending on the nature of the material being examined. By applying the right method, professionals can ensure they are drawing valid conclusions that drive actionable outcomes.

Common Challenges in Data Analysis

Working with large sets of raw information often presents various obstacles. From inconsistent records to misinterpreted patterns, there are numerous hurdles professionals face when trying to extract useful insights. Navigating these difficulties requires a combination of technical expertise, careful planning, and the right set of tools.

Data Quality Issues are among the most significant challenges. Incomplete, incorrect, or outdated records can severely affect the reliability of the outcomes. Ensuring that the material being examined is accurate and up-to-date is crucial for drawing valid conclusions.

Complexity of the Process is another common issue. The techniques used to process raw facts can be highly intricate, requiring significant expertise to implement correctly. Moreover, selecting the right approach for each specific task can often be confusing, leading to errors in judgment.

Time Constraints also play a major role. Professionals often work under tight deadlines, making it difficult to thoroughly assess large volumes of information. Speeding up the process without compromising accuracy requires careful balancing and efficient use of available resources.

Finally, communication of results can be problematic. Even when meaningful trends are uncovered, conveying these findings in a clear and understandable way remains a challenge for many. Presenting complex material in a simple, actionable format is key to ensuring that insights are useful to decision-makers.

Key Tools for Data Analysis

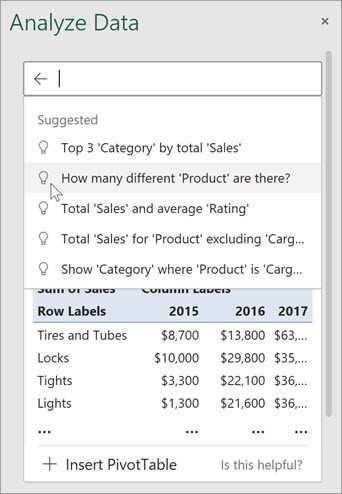

To successfully examine and interpret large volumes of raw information, a variety of specialized tools are essential. These resources help professionals manage, process, and visualize complex data efficiently. With the right tools at hand, it’s possible to unlock valuable insights and make more informed decisions.

Software for Processing Raw Information

- Microsoft Excel: A widely used tool for basic calculations, graphing, and organizing small datasets.

- R: A programming language designed for statistical computing and graphics, ideal for more complex tasks.

- Python: Known for its flexibility and power, Python offers numerous libraries such as Pandas and NumPy for advanced data manipulation.

Tools for Visualization and Reporting

- Tableau: A leading platform for creating interactive data visualizations and dashboards.

- Power BI: A business analytics tool that allows users to create reports and visualize data in real time.

- Google Data Studio (now Looker Studio): A free tool for creating customizable reports and dashboards, integrating seamlessly with other Google services.

These tools are essential for professionals looking to process complex information, create visual representations, and communicate insights effectively. By selecting the right platform or software for the task, it becomes easier to manage challenges and achieve accurate results.

Understanding Data Types and Structures

When working with large volumes of data, it helps to recognize both what kind of value each field holds and how the values are arranged. Different types and structures call for different processing methods, so identifying them first lets you apply the appropriate techniques and tools.

Common Types of Information

- Numeric: Represents values that can be measured and quantified, including integers and decimals.

- Categorical: Represents values that classify or group items into categories, such as colors, names, or labels.

- Boolean: Represents true/false or yes/no values used to signify binary outcomes.

- Text: Consists of alphanumeric characters used for storing written information, such as comments or descriptions.

Common Structures for Organizing Information

- Arrays: A collection of elements, typically of the same type, stored in an indexed format.

- Tables: Organized into rows and columns, making it easier to organize and analyze large sets of related values.

- Graphs: A set of nodes connected by edges, used to represent relationships between elements or data points, such as links in a network.

- Trees: A hierarchical structure where each element is connected to others in a branching format.

By understanding the types of information you’re dealing with and how it’s structured, it becomes easier to choose the right methods for processing, interpreting, and visualizing that content.
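
As a rough illustration of the types and structures listed above, the sketch below models them with the Python standard library. The `Order` record, its fields, and the sample values are all hypothetical and exist only to show one field of each type and one instance of each structure.

```python
from dataclasses import dataclass


# Hypothetical record type: one field for each kind of value described above.
@dataclass
class Order:
    quantity: int       # numeric (integer)
    unit_price: float   # numeric (decimal)
    category: str       # categorical: one of a small set of labels
    is_gift: bool       # boolean
    note: str           # free text


# Table: rows of records that all share the same columns.
orders = [
    Order(2, 9.99, "books", False, "Leave at the front desk"),
    Order(1, 24.50, "games", True, "Gift wrap, please"),
    Order(5, 3.20, "books", False, ""),
]

# Array: an indexed sequence of same-typed values (here, one column of the table).
quantities = [order.quantity for order in orders]

# The distinct labels of the categorical column.
categories = sorted({order.category for order in orders})

# Tree: a hierarchy in which every node has exactly one parent.
category_tree = {"products": {"media": {"books": {}, "games": {}}}}

# Graph: nodes plus edges; here, "often bought together" links between categories.
bought_together = {"books": {"games"}, "games": {"books"}}


def tree_depth(node: dict) -> int:
    """Return the number of levels below this node (0 for a leaf)."""
    if not node:
        return 0
    return 1 + max(tree_depth(child) for child in node.values())
```

Here `tree_depth(category_tree)` returns 3, one level each for `products`, `media`, and the leaf categories. The point of the sketch is that the same records can feed an array, a table, a tree, or a graph, and the structure chosen determines which operations are easy.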

How to Clean Data Effectively

Preparing raw data for analysis usually starts with cleaning. Inconsistent, incomplete, or erroneous records degrade every insight derived from them. A systematic approach improves the overall quality of the dataset and makes later processing more reliable.

Steps for Cleaning Raw Information

- Identify Missing Values: Locate and address any gaps in the dataset. Options include removing incomplete entries or filling the gaps with a default, an average, or another estimate.

- Remove Duplicates: Ensure no repeated records exist, which can skew analysis and lead to misleading conclusions.

- Fix Errors: Correct any obvious mistakes, such as incorrect data formats, out-of-range values, or inconsistent naming conventions.

- Standardize Formats: Ensure all entries follow a consistent format, such as using a single unit of measurement or date format, for easier processing.

Tools for Efficient Cleaning

- Excel: Use built-in functions like “Find & Replace” or “Remove Duplicates” to quickly clean and organize material.

- Python: Libraries such as Pandas provide powerful tools for cleaning, filtering, and transforming raw content.

- R: Offers various packages that allow for data preprocessing, such as tidyr for cleaning and dplyr for manipulating content.

By following these steps and utilizing the right tools, it’s possible to ensure that the raw information is clean, accurate, and ready for further processing or interpretation.
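
The four cleaning steps above can be sketched with Pandas roughly as follows. The column names, the sample records, and the 0 to 120 age range are assumptions made up for this illustration; real rules depend on the dataset.

```python
import pandas as pd

# Hypothetical raw records showing typical defects: inconsistent spelling,
# a duplicate, missing values, and an out-of-range age.
raw = pd.DataFrame({
    "customer": ["Ann", "ann ", "Bob", "Cara", "Dev"],
    "signup_date": ["2024-01-05", "2024-01-05", "2024-02-05", None, "2024-03-01"],
    "age": [34, 34, None, 29, 290],
})


def clean(df: pd.DataFrame) -> pd.DataFrame:
    out = df.copy()

    # Standardize formats: trim whitespace, unify capitalization, parse dates.
    out["customer"] = out["customer"].str.strip().str.title()
    out["signup_date"] = pd.to_datetime(out["signup_date"], errors="coerce")

    # Remove duplicates (some only become visible after standardizing).
    out = out.drop_duplicates().reset_index(drop=True)

    # Fix errors: treat ages outside an assumed valid range as missing.
    out["age"] = out["age"].where(out["age"].between(0, 120))

    # Handle missing values: fill numeric gaps with the median of the valid ages.
    out["age"] = out["age"].fillna(out["age"].median())
    return out


cleaned = clean(raw)
missing_dates = int(cleaned["signup_date"].isna().sum())
```

The order of the steps matters in this sketch: the two "Ann" rows only count as duplicates once the names are standardized, and the implausible age is blanked out before the median is computed so that it does not distort the fill value. The missing signup date is deliberately left empty and only counted, since inventing a date would be guesswork.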

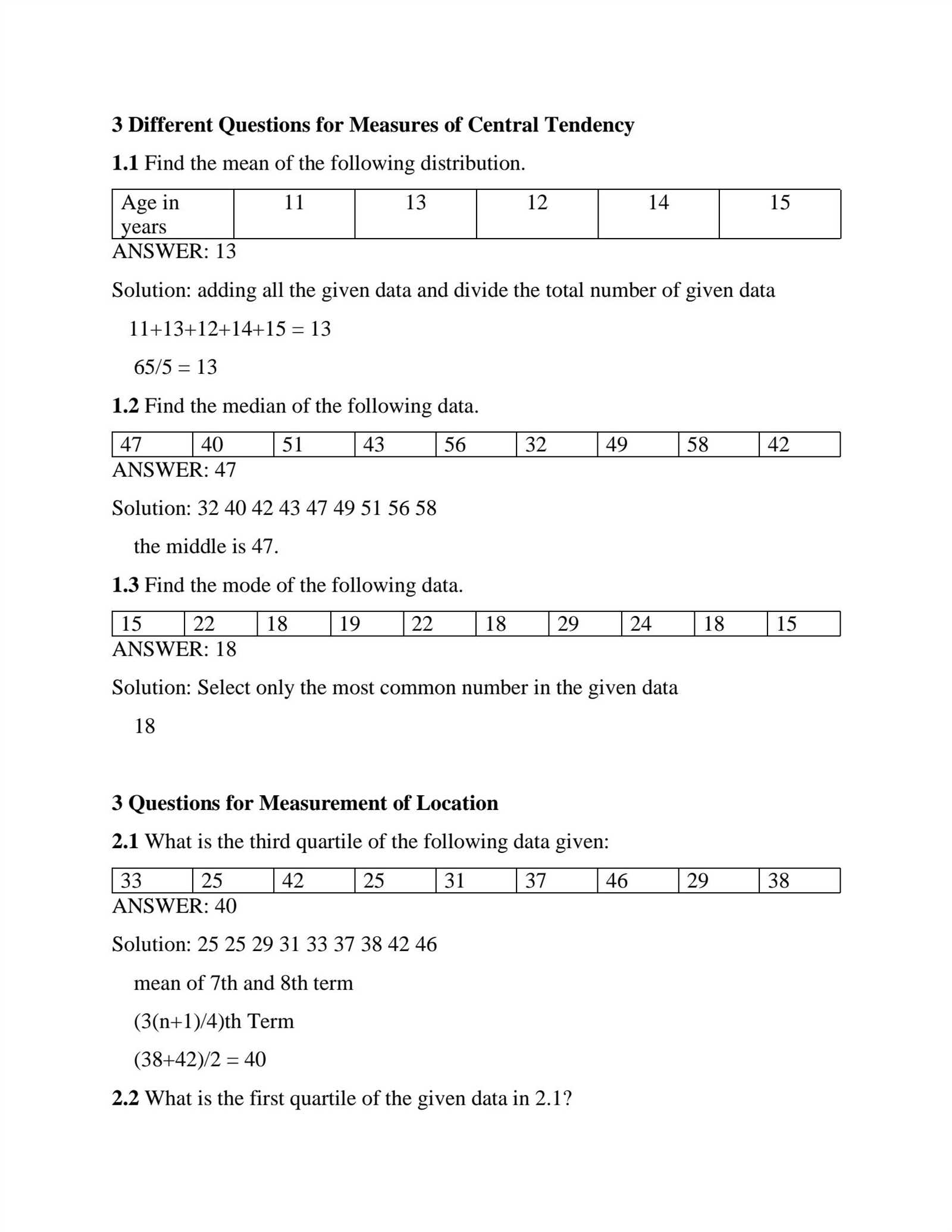

Exploring Statistical Methods in Data

In the quest to draw meaningful insights from large sets of raw information, statistical techniques play a pivotal role. These methods help identify trends, test hypotheses, and uncover hidden relationships that might not be immediately obvious. By applying the right statistical approach, professionals can make more informed decisions and predictions based on solid evidence.

Common Statistical Techniques

- Descriptive Statistics: Involves summarizing or describing the key characteristics of the dataset, such as averages, medians, and standard deviations.

- Regression Analysis: A method for determining the relationship between a dependent variable and one or more independent variables, often used for prediction.

- Hypothesis Testing: Used to assess a claim about a population by checking whether the sample data are consistent with what a null hypothesis would predict.

- Correlation: Measures the strength and direction of the relationship between two variables, helping to identify patterns and dependencies.

Choosing the Right Technique

The choice of statistical method depends largely on the type of data being examined and the objectives of the analysis. For example, if the goal is to understand a simple trend, descriptive statistics may be sufficient. However, if the goal is to make predictions or to test whether an apparent relationship is real, more advanced techniques such as regression or hypothesis testing might be required. Keep in mind that these methods show association; establishing cause and effect generally also requires a suitable study design, such as a controlled experiment. Selecting the appropriate method ensures more accurate results and a deeper understanding of the information.

Best Practices for Data Visualization

Presenting raw material visually can help transform complex patterns and trends into easily digestible insights. However, creating clear and effective visual representations requires attention to detail and a thoughtful approach. By following key best practices, you can ensure that your charts, graphs, and diagrams convey information in a meaningful and accessible way.

Essential Guidelines for Effective Visuals

- Keep It Simple: Avoid cluttering the visual with unnecessary elements or excessive information. Focus on the key message you want to convey.

- Use Clear Labels: Ensure that all axes, categories, and data points are clearly labeled, so viewers can easily interpret the information being presented.

- Choose the Right Chart Type: Select the appropriate visual representation for the data you are showing. For example, use bar charts for comparisons, line charts for trends, and pie charts for proportions.

- Maintain Consistency: Stick to a consistent color scheme and style throughout the visual to avoid confusion. Use colors that are distinguishable and meaningful.

Maximizing Impact with Visualization Tools

- Tableau: A powerful tool that allows users to create dynamic and interactive visuals for in-depth exploration.

- Power BI: Known for its easy-to-use features, it enables users to create professional-looking charts and dashboards quickly.

- Google Data Studio (now Looker Studio): Offers a free and flexible platform for building customizable reports with various visual options.

By adhering to these best practices, you can ensure that your visualizations not only look appealing but also serve their intended purpose of clearly communicating insights and trends.
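
The guidelines above can be tried out in code as well as in the tools listed. The sketch below assumes Matplotlib is installed and uses invented figures; it draws a bar chart for a comparison and a line chart for a trend, with labeled axes and one consistent color. The output file name `charts.png` is arbitrary.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen so the sketch runs without a display
import matplotlib.pyplot as plt

# Hypothetical figures, invented for illustration.
regions = ["North", "South", "East", "West"]
revenue = [120, 95, 140, 80]
months = ["Jan", "Feb", "Mar", "Apr", "May", "Jun"]
signups = [30, 34, 41, 39, 48, 55]

fig, (ax_bar, ax_line) = plt.subplots(1, 2, figsize=(10, 4))

# Bar chart: comparing quantities across categories.
ax_bar.bar(regions, revenue, color="tab:blue")
ax_bar.set_title("Revenue by region")
ax_bar.set_xlabel("Region")
ax_bar.set_ylabel("Revenue (thousand USD)")

# Line chart: showing a trend over time.
ax_line.plot(months, signups, marker="o", color="tab:blue")
ax_line.set_title("Monthly signups")
ax_line.set_xlabel("Month")
ax_line.set_ylabel("New signups")

# Keep it simple: drop the frame lines that carry no information.
for ax in (ax_bar, ax_line):
    ax.spines["top"].set_visible(False)
    ax.spines["right"].set_visible(False)

fig.tight_layout()
fig.savefig("charts.png", dpi=150)
```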

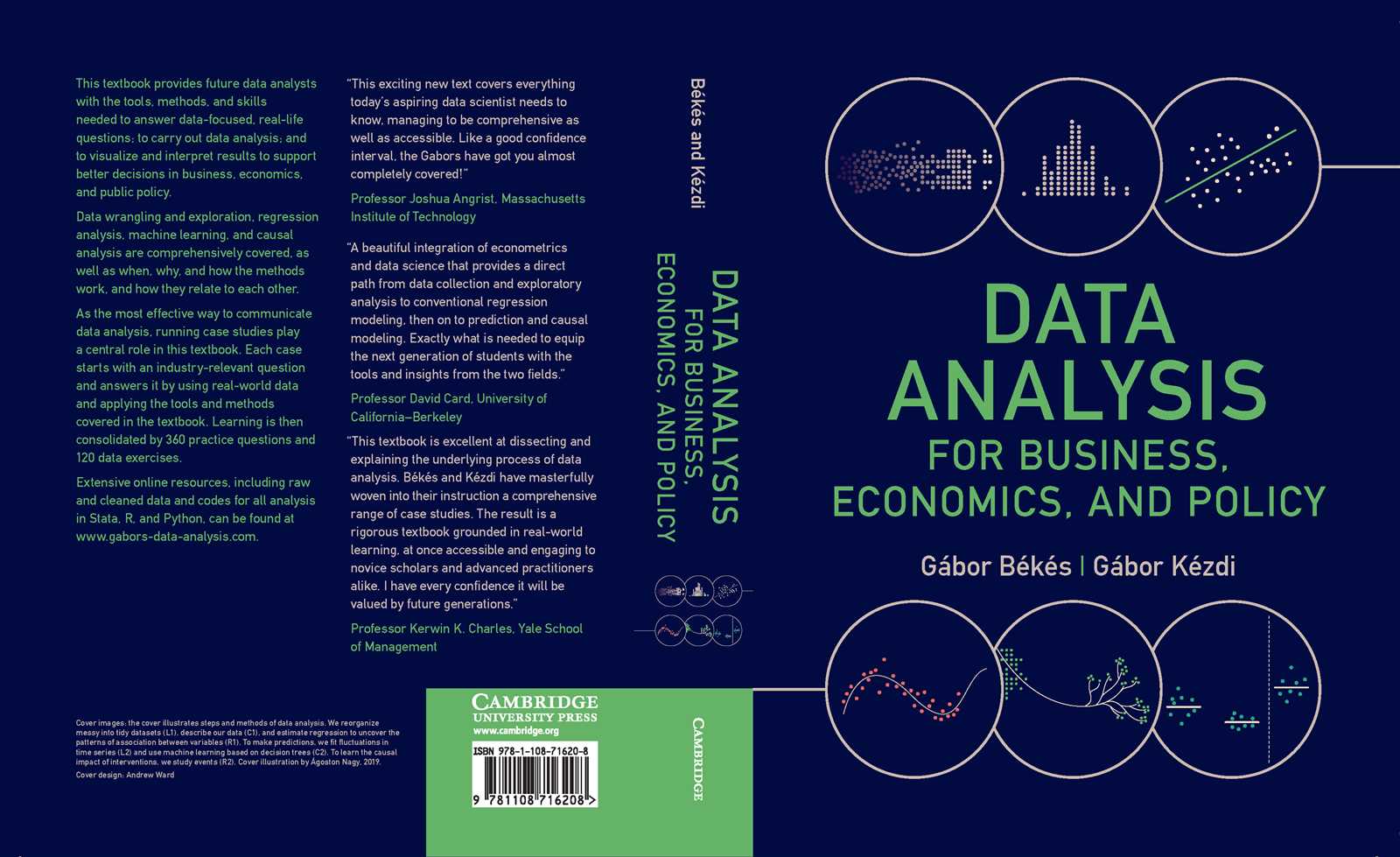

Data Analysis in Machine Learning

The process of extracting insights from raw material plays a critical role in the development of intelligent systems. In machine learning, the ability to process, interpret, and clean this raw material is essential for building accurate models. The more refined and relevant the input, the better the model can predict, classify, or optimize based on that information.

Machine learning models depend heavily on how well the raw information is prepared before it is used for training. This includes selecting the right features, cleaning inconsistencies, and ensuring that the material is representative of the problem at hand. Proper preparation allows machine learning algorithms to learn from patterns more effectively, leading to improved performance and generalization.

Various techniques such as normalization, transformation, and feature engineering are used to ensure that raw material is in the optimal format for model training. Additionally, understanding the inherent relationships within the material can greatly enhance the predictive power of machine learning models. Without a structured approach to processing this raw content, even the most advanced algorithms may struggle to produce accurate results.
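
To make normalization and feature engineering concrete, here is a small standard-library sketch. The income and age values are hypothetical, and the derived ratio is just one example of an engineered feature, not a recommendation.

```python
import statistics as st


def min_max_scale(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: no spread to rescale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Rescale values to mean 0 and standard deviation 1 (z-scores)."""
    mean = st.fmean(values)
    sd = st.pstdev(values)
    if sd == 0:
        return [0.0 for _ in values]
    return [(v - mean) / sd for v in values]


# Hypothetical features on very different scales.
incomes = [32_000, 48_000, 51_000, 75_000, 120_000]
ages = [22, 35, 41, 58, 63]

scaled_incomes = min_max_scale(incomes)
z_ages = standardize(ages)

# Feature engineering: derive a new feature from existing ones.
income_per_year_of_age = [income / age for income, age in zip(incomes, ages)]
```

When a model is evaluated on held-out data, the scaling parameters (minimum, maximum, mean, standard deviation) should be computed from the training portion only and then reused on the rest. Otherwise information from the evaluation data leaks into training.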

How to Interpret Data Insights

Understanding the key takeaways from raw material can often be the most challenging part of any project. The ability to extract valuable meaning from the patterns, trends, and relationships present in the information is crucial for making informed decisions. By interpreting these findings correctly, professionals can drive strategy, innovation, and improvements in various fields.

Key Steps for Interpreting Insights

- Contextualize the Findings: Always consider the broader context in which the material was collected. Understand the environment, conditions, and objectives that shaped the data.

- Identify Key Patterns: Look for trends or anomalies that stand out. These could indicate areas that require further exploration or represent important changes over time.

- Verify with Statistical Methods: Use techniques to validate the insights, ensuring that what you’re seeing is not due to random chance but reflects real underlying patterns.

Avoiding Common Pitfalls

- Beware of Bias: Always question whether the material or the process used to collect it has introduced any bias, as this can skew interpretation.

- Don’t Overgeneralize: Avoid jumping to conclusions or applying insights too broadly. Ensure the findings are relevant to the specific scope of the study or problem.

- Consider Alternative Explanations: Before making a decision based on the insights, consider other potential factors or reasons that could explain the observed patterns.

Interpreting insights requires not only technical expertise but also a critical, thoughtful approach to understanding what the material is truly conveying. By following these steps and being mindful of potential pitfalls, you can ensure that the conclusions you draw are both accurate and actionable.
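
One way to act on the "Verify with Statistical Methods" step is a permutation test, which asks how often a difference at least as large as the observed one would appear if the group labels were assigned at random. The sketch below is a minimal version under that assumption; the function name and the page-load timings are hypothetical.

```python
import random
import statistics as st


def permutation_p_value(a, b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for the difference in means of a and b."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    observed = abs(st.fmean(a) - st.fmean(b))
    pooled = list(a) + list(b)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign group labels at random
        diff = abs(st.fmean(pooled[:len(a)]) - st.fmean(pooled[len(a):]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical page-load times (seconds) under two site designs.
design_a = [12.1, 11.8, 12.5, 12.0, 11.9, 12.3, 12.2, 11.7]
design_b = [12.9, 13.1, 12.8, 13.4, 12.7, 13.0, 13.2, 12.6]

p_value = permutation_p_value(design_a, design_b)
```

A small `p_value` suggests the gap between the two designs is unlikely to be a product of chance alone. It does not rule out bias in how the measurements were collected, which is why the pitfalls listed above still apply.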

Top Mistakes in Data Analysis

While working with raw material to uncover insights, it’s easy to fall into common traps that can lead to inaccurate conclusions. These missteps can stem from incorrect methodologies, misunderstandings of the information, or misapplication of tools. Being aware of these mistakes is essential for ensuring reliable outcomes and making well-informed decisions.

| Mistake | Consequence | Solution |
|---|---|---|
| Ignoring Outliers | Can distort results and lead to skewed insights. | Evaluate outliers carefully; decide whether to exclude or investigate further. |
| Overfitting Models | Results in models that perform well on training material but fail on unseen data. | Use cross-validation techniques to check for overfitting. |
| Failing to Clean Raw Material | Leads to inaccurate results and potential misinterpretations. | Ensure thorough cleaning of all material before analysis. |
| Overlooking the Importance of Context | Can result in misinterpretations and incorrect conclusions. | Always consider the context in which the material was collected. |
| Using the Wrong Tool or Technique | Can lead to misleading or incomplete insights. | Select the most appropriate methods based on the specific objectives and material. |

Avoiding these mistakes requires careful attention to the methods used, a critical mindset, and the right tools for the job. By understanding these common pitfalls, you can significantly improve the quality of your work and ensure that the insights drawn are both accurate and actionable.
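
For the first row of the table, a common rule of thumb flags values that lie more than 1.5 interquartile ranges outside the middle half of the data. The sketch below applies that rule with the standard library; the daily order counts are invented, and the 1.5 multiplier is a convention rather than a fixed requirement.

```python
import statistics as st


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = st.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical daily order counts with one suspicious spike.
daily_orders = [102, 98, 105, 110, 97, 101, 99, 480, 104, 100]

flagged = iqr_outliers(daily_orders)

# Compare a summary that is sensitive to the spike with one that is not.
mean_orders = st.mean(daily_orders)
median_orders = st.median(daily_orders)
```

Here `flagged` contains only the value 480. The mean (139.6) is pulled far above the median (101.5) by that single day, which shows how an unexamined outlier can skew a summary. Whether to exclude the value or investigate it remains a judgment call that depends on how it arose.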

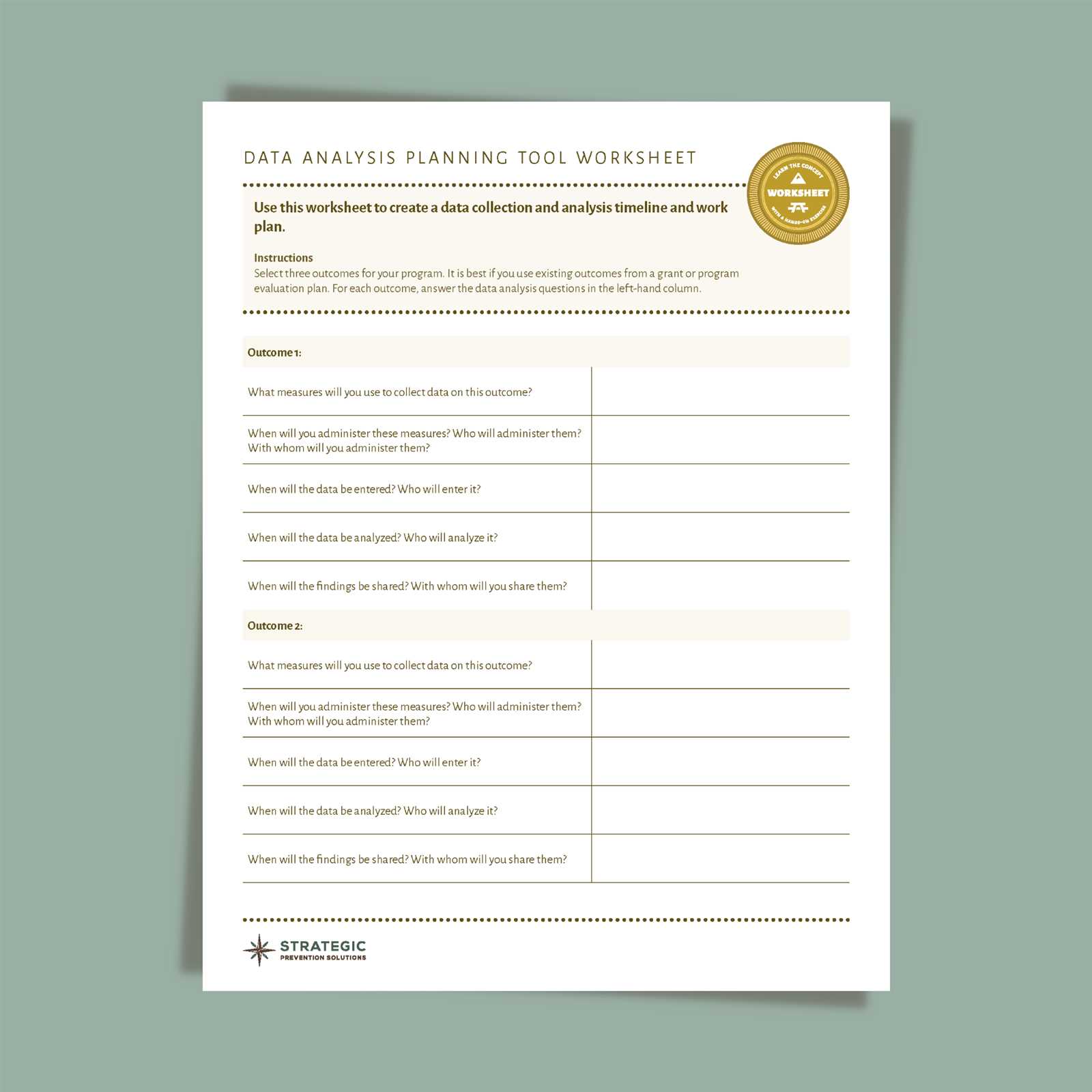

How to Improve Data Accuracy

Accurate data is the foundation of reliable insight. It underpins decision-making, forecasting, and strategy development; without it, conclusions may lead to costly errors or ineffective solutions. Improving accuracy comes down to a set of practices that reduce errors at each stage, from collection through processing.

To begin with, it is important to clean the raw data thoroughly, removing any inconsistencies or irrelevant entries. This step ensures that only relevant and correct information is used. Additionally, using reliable sources and verifying the input regularly will reduce the chances of introducing errors. Employing robust tools and methods for processing, such as automated validation checks, can also significantly reduce human error.

Consistency is another key factor in maintaining accuracy. Regular updates and checks help keep the data reliable over time. Incorporating feedback loops that enable continuous evaluation and correction further improves accuracy. By following these strategies, the integrity of the data improves, leading to more dependable outcomes and informed decisions.
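
An automated validation check can be as simple as a function that lists the problems found in each record. The sketch below is one possible shape for such a check; the field names (`id`, `amount`, `date`) and the rules are assumptions chosen for illustration.

```python
from datetime import date


def validate_record(record: dict) -> list[str]:
    """Return a list of human-readable problems found in one record."""
    problems = []

    if not record.get("id"):
        problems.append("missing id")

    amount = record.get("amount")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        problems.append("amount is not a number")
    elif amount < 0:
        problems.append("amount is negative")

    try:
        day = date.fromisoformat(str(record.get("date")))
        if day > date.today():
            problems.append("date is in the future")
    except ValueError:
        problems.append("date is not a valid ISO date")

    return problems


# Hypothetical input: one clean record and one with several defects.
records = [
    {"id": "A1", "amount": 19.5, "date": "2024-03-01"},
    {"id": "", "amount": -4, "date": "03/01/2024"},
]

report = [validate_record(record) for record in records]
```

Returning a list of problems instead of raising on the first one lets a single pass report everything wrong with a record. That suits the feedback loops described above, where rejected records are corrected at the source and resubmitted.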

Exploring Big Data Techniques

Handling vast amounts of information requires specialized techniques to process, store, and derive meaningful insights. The challenge lies in efficiently managing this immense volume while maintaining accuracy and speed. Various approaches have been developed to address these challenges, ensuring that organizations can derive value from large-scale material and make informed decisions.

One of the most commonly used methods is distributed computing, where tasks are divided across multiple systems to process large datasets concurrently. This approach allows for faster processing and scalability. Additionally, machine learning and other artificial intelligence techniques play a crucial role in extracting patterns and predicting outcomes from massive datasets. These techniques enable businesses to uncover hidden trends, automate decision-making, and optimize operations.
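
The divide, process, and combine pattern behind distributed computing can be shown on a single machine. In the sketch below, worker threads stand in for separate systems, which is an assumption made purely for illustration; the log lines and function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_words(chunk):
    """Map step: count the words in one chunk, independently of the others."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def split_into_chunks(items, n_chunks):
    """Split items into at most n_chunks contiguous pieces."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division, at least 1
    return [items[i:i + size] for i in range(0, len(items), size)]


def parallel_word_count(lines, n_workers=4):
    chunks = split_into_chunks(lines, n_workers)
    total = Counter()
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # Reduce step: merge the partial counts produced by each worker.
        for partial in pool.map(count_words, chunks):
            total.update(partial)
    return total


# Hypothetical log lines.
log_lines = [
    "error disk full",
    "warning disk slow",
    "error network down",
    "info ok",
    "error disk full",
]

word_counts = parallel_word_count(log_lines, n_workers=2)
```

Threads in CPython will not make CPU-bound counting faster, so this sketch shows the structure only. The property that matters is that each chunk is processed without reference to the others and the partial results merge cleanly, which is what allows the same logic to be spread across many machines.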

Another important aspect of managing large volumes of information is cloud computing. By utilizing cloud platforms, organizations can store vast amounts of material and access it remotely, reducing infrastructure costs. With the cloud, it’s possible to scale up or down based on demand, making it a flexible solution for businesses dealing with fluctuating needs. By combining these techniques, organizations can handle the complexities of large-scale material, uncover valuable insights, and stay competitive in a data-driven world.

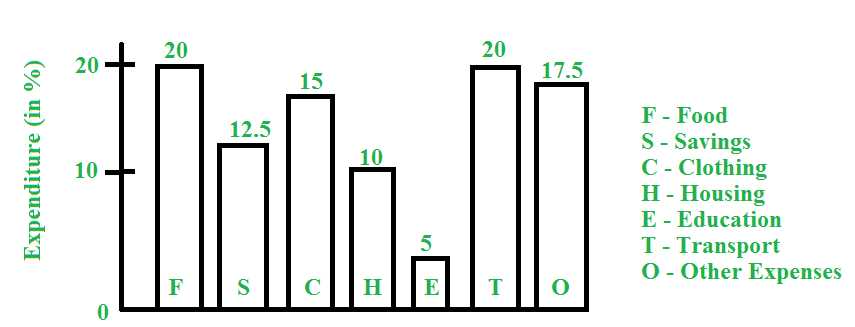

Data Analysis for Business Decisions

Effective decision-making in business relies on understanding patterns and trends within the vast amounts of material available. By examining the collected information, businesses can make informed choices that drive growth, reduce costs, and optimize operations. The ability to interpret these insights is essential for creating strategies that align with market demands and organizational goals.

One of the key benefits of leveraging information for business decisions is the ability to identify emerging trends early. By analyzing patterns, companies can adapt to changes in consumer behavior, market conditions, or even anticipate potential risks. This proactive approach enables businesses to stay ahead of the competition and respond quickly to challenges.

To effectively use collected material for decision-making, it’s important to apply the right tools and techniques. The following table outlines some key approaches that help businesses extract actionable insights:

| Approach | Purpose | Benefit |
|---|---|---|
| Predictive Modeling | Forecast future outcomes based on historical trends | Helps anticipate market shifts and optimize resource allocation |
| Segmentation | Group customers or products based on shared characteristics | Enables targeted marketing strategies and personalized customer experiences |
| Trend Analysis | Track changes in key performance metrics over time | Identifies long-term growth opportunities and areas for improvement |
| Benchmarking | Compare performance against industry standards or competitors | Provides insights into competitive positioning and operational efficiency |

By incorporating these approaches, businesses can make smarter decisions that drive innovation, improve customer satisfaction, and enhance profitability. The ability to turn information into actionable insights is a key factor in long-term success.
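
As a small example of trend analysis from the table above, a moving average smooths month-to-month noise so the underlying direction is easier to see. The revenue figures and the three-month window are assumptions for illustration.

```python
def moving_average(values, window):
    """Return the average of each run of `window` consecutive values."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[i - window + 1:i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def percent_change(values):
    """Return the period-over-period change in percent."""
    return [
        (current - previous) / previous * 100
        for previous, current in zip(values, values[1:])
    ]


# Hypothetical monthly revenue (in thousands).
monthly_revenue = [100, 104, 98, 110, 115, 109, 121, 126]

smoothed = moving_average(monthly_revenue, window=3)
changes = percent_change(monthly_revenue)
```

In this data the raw series dips twice (104 to 98 and 115 to 109), while every value in `smoothed` is higher than the one before it. A wider window smooths more but reacts more slowly to genuine changes in direction.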

Data Ethics and Privacy Considerations

When handling large volumes of collected information, it’s essential to consider not only its value but also the ethical implications associated with its use. Organizations must be mindful of privacy, fairness, and transparency when processing any form of sensitive material. Adopting responsible practices is key to maintaining trust with customers, complying with regulations, and promoting ethical standards within the industry.

The rise of technology and the ability to gather vast amounts of material bring significant benefits, but they also introduce important ethical challenges. Organizations must navigate the complexities of informed consent, confidentiality, and ensuring that individuals’ rights are respected at every stage of handling the information. These considerations are crucial for preventing misuse and safeguarding the integrity of processes.

Ethical Guidelines in Information Management

To guide businesses in ethically handling sensitive material, several best practices and regulations must be followed:

| Guideline | Description | Key Benefit |
|---|---|---|
| Informed Consent | Ensuring individuals are fully aware of how their information will be used before collection | Promotes transparency and builds trust with stakeholders |
| Data Minimization | Only collecting the minimum amount of information necessary for the intended purpose | Reduces risk of exposure and ensures efficient processing |
| Confidentiality | Protecting personally identifiable information (PII) and sensitive material from unauthorized access | Maintains privacy rights and reduces the potential for data breaches |
| Accountability | Holding organizations responsible for how they collect, process, and store sensitive material | Enhances corporate responsibility and encourages ethical business practices |

Privacy Laws and Regulations

Compliance with legal frameworks such as the EU’s General Data Protection Regulation (GDPR), the US Health Insurance Portability and Accountability Act (HIPAA), and the California Consumer Privacy Act (CCPA) is essential for organizations that deal with sensitive information. These regulations set out rules on consent, data usage, retention, and individual rights. Failure to comply with these laws can result in substantial fines and damage to an organization’s reputation. By adhering to privacy laws, businesses ensure that they are acting in the best interest of their customers while maintaining ethical standards.

Overall, integrating privacy considerations into every stage of the information lifecycle is crucial for creating a secure and responsible framework. Ethical practices in managing sensitive material foster customer trust, support legal compliance, and ultimately lead to more sustainable business operations.
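
The data minimization and confidentiality guidelines in the table above can be partly enforced in code before records reach an analysis environment. The sketch below keeps only an allow-list of fields and replaces the direct identifier with a keyed hash. The field names, the allow-list, and the key handling are hypothetical, and nothing here is a statement of what any particular regulation requires.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields the analysis actually needs.
ALLOWED_FIELDS = {"user_id", "country", "purchase_total"}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop fields outside the allow-list and pseudonymize the user id."""
    kept = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    if "user_id" in kept:
        kept["user_id"] = pseudonymize(str(kept["user_id"]), secret_key)
    return kept


# Hypothetical record and key; a real key would come from a secrets manager.
example_key = b"replace-with-a-real-secret"
record = {
    "user_id": "u-1042",
    "full_name": "Ann Example",
    "email": "ann@example.com",
    "country": "DE",
    "purchase_total": 59.90,
}

safe_record = minimize(record, example_key)
```

The same `user_id` always maps to the same digest under a given key, so records can still be joined and counted per user. This is pseudonymization rather than anonymization: anyone holding the key can recompute the mapping, so the key needs the same protection as the original identifiers.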

How to Present Data Findings

When conveying insights drawn from complex sets of information, it is essential to communicate clearly and effectively. The way in which results are shared plays a crucial role in ensuring the audience understands key points, makes informed decisions, and can take appropriate actions. Proper presentation involves not only clear visual representation but also the structuring of content to highlight significant trends and relationships.

Effective communication involves choosing the right tools and techniques to transform raw results into understandable formats. Whether through charts, reports, or presentations, it’s important to tailor the approach to the audience’s level of understanding and the nature of the findings. The goal is to simplify complex insights without losing their depth or relevance.

Choosing the Right Visualization Tools

Selecting the appropriate methods to display findings is one of the most important aspects of a successful presentation. Different types of visuals serve different purposes. For example:

- Bar charts: Ideal for comparing quantities across different categories.

- Line graphs: Useful for showing trends over time.

- Pie charts: Best for displaying proportions or percentages of a whole.

- Heatmaps: Effective in visualizing patterns across large datasets or geographical regions.

Each of these tools helps to convey specific insights quickly, which is critical when communicating findings to stakeholders or decision-makers who may not be familiar with the technical details.

Structuring the Presentation for Clarity

Once the right visuals are selected, it’s equally important to organize the presentation logically. Here are a few key steps to ensure clarity:

- Start with a summary: Provide a concise overview of the most important findings.

- Provide context: Explain the background or research question, as well as the methodology used.

- Highlight key points: Focus attention on the most important insights that lead to actionable conclusions.

- Conclude with recommendations: Suggest how the findings can be applied or what decisions should be made based on the results.

By following this structure, the audience can quickly grasp the core messages, understand the relevance of the findings, and use the insights to guide their next steps. It’s important to avoid overwhelming the audience with too much information at once and to focus on the most impactful elements.

Ultimately, presenting findings in an accessible, clear, and structured manner ensures that the information can drive meaningful actions and decisions.

Future Trends in Data Analysis

The landscape of information processing and insight generation is evolving rapidly. As technology advances, new techniques and tools emerge that promise to make the interpretation of complex patterns more accurate, faster, and more accessible. These shifts are set to change the way businesses, researchers, and organizations approach problem-solving and decision-making in the future.

Emerging trends indicate a growing reliance on automation, artificial intelligence, and advanced computational methods to manage the increasing volume and complexity of available data. The goal is to streamline the entire process, from gathering raw information to extracting actionable insights, with greater efficiency and precision.

Integration of Artificial Intelligence and Machine Learning

Machine learning models and AI algorithms are expected to become even more integral in uncovering patterns and trends. As these technologies evolve, they will be capable of handling more sophisticated tasks such as:

- Predictive Modeling: Anticipating future trends based on historical data with greater accuracy.

- Automated Insights: Identifying correlations and patterns without human intervention, leading to faster decision-making.

- Real-time Analytics: Offering immediate analysis as new information becomes available, enabling faster reactions to changing conditions.

These innovations promise to significantly reduce the time required to process and interpret large volumes of information, while improving the quality and reliability of insights generated.

Enhanced Data Visualization and Accessibility

As the complexity of data grows, so does the need for more sophisticated visualization techniques. Future trends will likely involve:

- Interactive Dashboards: Allowing users to explore and manipulate data in real time to uncover personalized insights.

- Augmented Reality (AR) and Virtual Reality (VR): Offering immersive ways to visualize large datasets, making complex trends more tangible.

- Natural Language Processing (NLP): Enabling users to query data and generate insights through conversational interfaces, without needing technical expertise.

These developments aim to make complex patterns easier to understand and more accessible to a wider range of users, beyond just data specialists.

As these trends unfold, it is clear that the future of information interpretation will be more automated, interactive, and integrated into everyday business processes. The continuous advancements in technology will unlock new possibilities, driving innovation across industries and opening up fresh avenues for decision-makers to explore and leverage valuable insights.