When it comes to managing multiple tasks in a computing environment, ensuring smooth coordination among them is crucial. This section focuses on fundamental principles and techniques that help in achieving such coordination, allowing various processes to work efficiently and without interference.

Understanding these concepts is essential for tackling practical challenges in modern computing systems, where the need for shared resources and concurrent execution is ever-present. Whether it’s handling multiple threads or preventing conflicts, a clear grasp of these principles will greatly aid in both theoretical studies and real-world applications.

Key concepts like resource management, handling conflicting demands, and the methods used to ensure that processes do not interfere with each other form the foundation of this subject. By diving deeper into these topics, one can not only prepare for assessments but also build a solid understanding for future projects involving task coordination.

Process Synchronization Exam Questions and Answers

This section covers key topics related to managing concurrent tasks in a system where multiple operations need to interact without conflict. Understanding how to handle shared resources, prevent interference, and ensure proper execution flow is essential for mastering these concepts. The following material is designed to help you grasp the essential techniques used to avoid issues like race conditions, deadlocks, and resource contention in a multi-tasking environment.

By working through these examples, you will develop a clear understanding of the various methods employed to coordinate tasks effectively. The focus is on familiarizing yourself with common strategies such as locks, semaphores, and critical sections, along with their applications in different scenarios. This preparation will help you not only in academic assessments but also in real-world scenarios where efficient task coordination is required for optimal system performance.

Key topics explored in this section will cover practical problems, their solutions, and the theoretical underpinnings behind each technique. Each example will provide insight into how different strategies can be applied to solve specific challenges in environments with concurrent operations.

Key Concepts of Process Synchronization

This section explores the fundamental principles that allow multiple tasks within a system to operate in harmony without interfering with each other. The main goal is to ensure that operations are completed in a controlled manner, preventing issues such as data corruption and conflicting resource usage. Various methods and tools are used to achieve coordination and maintain consistency across tasks.

Understanding these basic concepts is critical for managing concurrent tasks efficiently. The following elements are central to this domain:

- Critical Sections: These are sections of code where shared resources are accessed, and their protection is crucial to avoid conflicts.

- Locks: Tools used to ensure that only one task can access a critical section at a time, preventing race conditions.

- Semaphores: Mechanisms used for signaling between tasks, allowing coordination and controlled access to resources.

- Deadlock: A situation where tasks are unable to proceed due to mutual blocking. Understanding how to prevent or resolve deadlocks is vital.

- Mutual Exclusion: A principle that ensures that no two tasks can access the same resource simultaneously, preventing conflicts.

By becoming familiar with these core ideas, one can better understand the challenges faced in environments where multiple tasks run concurrently. Mastery of these concepts provides the foundation for solving more complex issues related to task coordination and resource management.

Understanding Critical Section Problem

The critical section problem arises when multiple tasks need to access shared resources, such as memory or files, simultaneously. Without proper management, this can lead to conflicts, data corruption, or unpredictable behavior in the system. The challenge is to ensure that only one task can access the critical section at any given time, while others are either waiting their turn or performing other operations.

To solve this problem, various techniques have been developed to provide mutual exclusion, guaranteeing that only one task can execute within the critical section at a time. These methods are essential for maintaining data integrity and the smooth functioning of systems with concurrent execution.

| Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| Locks | Mechanisms that prevent more than one task from entering the critical section simultaneously. | Simple and effective for small-scale applications. | Can lead to performance issues if overused or not managed properly. |
| Semaphores | Tools that use signaling to control access to shared resources. | Flexible and useful in complex scenarios with multiple tasks. | Can become difficult to manage in large systems. |
| Monitors | High-level synchronization constructs that manage shared resources automatically. | Automatic management simplifies programming and avoids common errors. | Can be less efficient than lower-level approaches in some cases. |

By understanding these solutions and their trade-offs, you can develop systems that handle concurrent execution efficiently, preventing conflicts and ensuring smooth operation even in complex environments.

Types of Synchronization Mechanisms

In environments where multiple tasks run concurrently, it is crucial to ensure that these tasks do not interfere with each other while accessing shared resources. Different tools and techniques are employed to control and coordinate these tasks, each designed to manage access to critical sections, prevent conflicts, and ensure smooth execution. These mechanisms are key to maintaining consistency and preventing issues like data corruption or system crashes.

Locks and Mutexes

Locks and mutexes are some of the most common methods used to protect shared resources. They ensure that only one task can enter a critical section at a time, blocking other tasks from accessing the same resource simultaneously. This mechanism prevents race conditions, where two or more tasks could otherwise alter shared data in an unpredictable manner. While effective, improper use of locks can lead to problems like deadlocks or performance bottlenecks if tasks are not managed efficiently.
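As a minimal sketch of the idea, the following Python example guards a shared counter with `threading.Lock`. The function name `count_with_lock` and its parameters are illustrative choices, not part of any standard API.

```python
import threading

def count_with_lock(num_threads: int = 4, increments: int = 10_000) -> int:
    """Increment a shared counter from several threads, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal counter
        for _ in range(increments):
            # The `with` block is the critical section: the lock is acquired
            # on entry and released on exit, even if an exception is raised.
            with lock:
                counter += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

print(count_with_lock())
```

Because every increment happens while the lock is held, the final value should equal `num_threads * increments`. Keeping the locked region this small is one way to limit the performance bottleneck mentioned above.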

Semaphores and Monitors

Semaphores and monitors offer more general control over task coordination than a plain lock. A semaphore is a low-level primitive: an integer counter with atomic wait and signal operations that tasks use to signal each other about resource availability. A monitor is a higher-level construct that bundles shared data with the procedures that operate on it and enforces mutual exclusion automatically, simplifying the synchronization process. Both are widely used in multi-threaded environments, where multiple tasks need to operate in parallel without interference. Semaphores in particular can be challenging to use correctly, because a misplaced or missing wait or signal is easy to overlook in large-scale systems with complex dependencies.

Common Synchronization Algorithms Explained

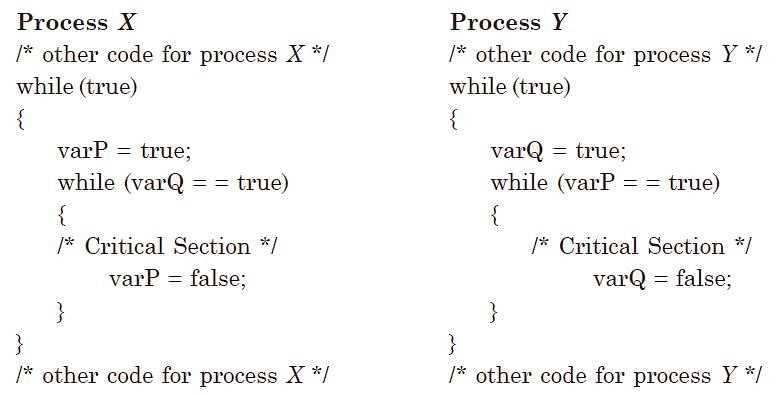

In systems with multiple concurrent tasks, it is essential to employ algorithms that ensure proper coordination, preventing conflicts while sharing resources. Several well-established techniques are used to control access to critical sections and manage resource allocation. These algorithms play a crucial role in maintaining data integrity and ensuring that tasks can proceed without interference, even in complex environments.

Each synchronization technique has its strengths and weaknesses, depending on the specific requirements of the system. Some algorithms are designed to be simple and efficient for small-scale applications, while others provide more advanced control for larger, multi-threaded systems. Below are some of the most widely used methods:

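- Binary Semaphores: A semaphore whose value is restricted to 0 or 1, used to ensure that only one task can access a shared resource at a time. It behaves much like a mutex, although a mutex is normally released only by the task that acquired it, whereas any task may signal a semaphore. It is simple and effective but requires careful management to avoid deadlocks.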

- Counting Semaphores: Unlike binary semaphores, counting semaphores allow a fixed number of tasks to access a resource simultaneously, making it suitable for managing shared pools of resources such as buffers.

- Peterson’s Algorithm: A classic software-only algorithm for mutual exclusion between two tasks. Each task sets a flag to announce that it wants to enter, then gives the turn to the other task, and waits only while the other task’s flag is set and it is the other task’s turn.

- Lamport’s Bakery Algorithm: Generalizes mutual exclusion to any number of tasks. Each task takes a ticket number larger than every number currently held, much like a bakery ticketing system, and the task with the lowest number enters the critical section first, with ties broken by task ID.

- Monitor-based Synchronization: A more advanced technique, monitors provide a high-level abstraction for controlling access to shared resources, simplifying the process of coordination for developers while preventing race conditions.

Each algorithm has its particular use cases, and understanding when and how to apply them is critical for achieving efficient and error-free task coordination in concurrent environments.
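To make one of these algorithms concrete, here is a hedged sketch of Peterson’s algorithm for two threads. It assumes the standard CPython interpreter, whose global interpreter lock makes the reads and writes below appear in program order. On real hardware the algorithm needs memory barriers, so treat this as a teaching aid rather than production code. The name `peterson_demo` is illustrative.

```python
import threading
import time

def peterson_demo(iterations: int = 200) -> int:
    """Two threads increment a counter, using Peterson's entry/exit protocol."""
    flag = [False, False]  # flag[i] is True while task i wants to enter
    turn = 0               # which task gets to go first when both want to enter
    counter = 0

    def task(me: int) -> None:
        nonlocal turn, counter
        other = 1 - me
        for _ in range(iterations):
            # Entry protocol: announce interest, then give way to the other task.
            flag[me] = True
            turn = other
            while flag[other] and turn == other:
                time.sleep(0)  # busy wait; yield so the other thread can run
            # Critical section.
            counter += 1
            # Exit protocol: withdraw interest.
            flag[me] = False

    threads = [threading.Thread(target=task, args=(i,)) for i in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

print(peterson_demo())
```

Note that the waiting loop is a form of busy waiting, which is one reason lock primitives provided by the operating system are usually preferred in practice.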

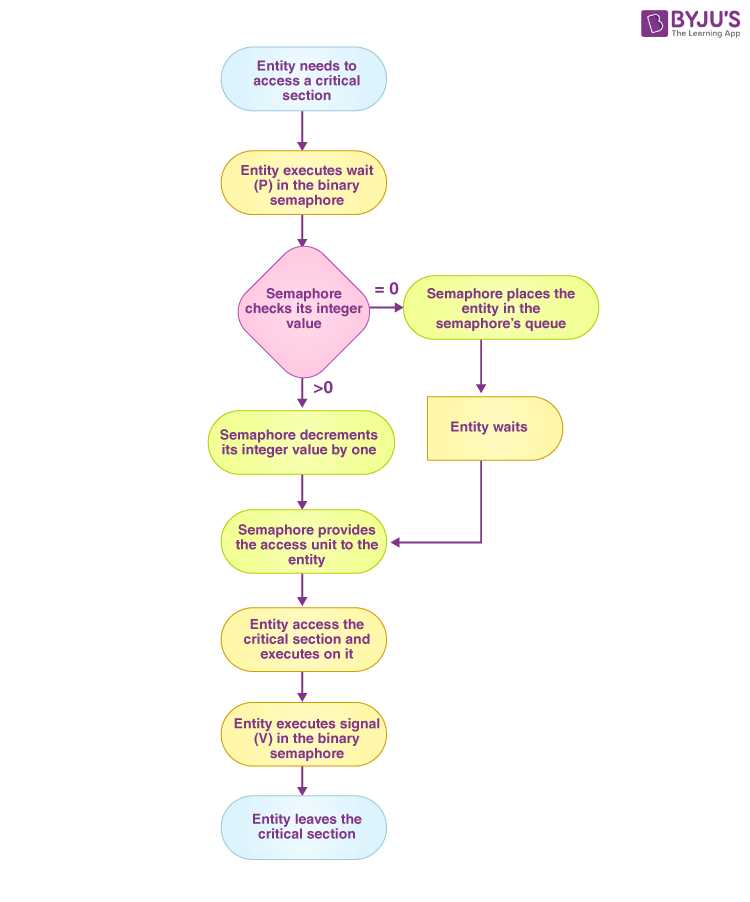

Role of Semaphores in Process Sync

Semaphores play a crucial role in managing access to shared resources in a system where multiple tasks operate concurrently. By using semaphores, it is possible to control when and how tasks interact with each other, ensuring that conflicts are avoided and resources are allocated efficiently. These mechanisms help regulate access to critical sections and prevent issues such as data corruption when multiple tasks need to perform operations simultaneously, although waits and signals issued in the wrong order can themselves cause deadlock.

Binary Semaphores

One of the simplest forms of semaphores is the binary semaphore, which takes only the values 0 and 1 and is often used in the same way as a mutex. This type of semaphore allows only one task to access a shared resource at any given time. It acts as a lock: the task that holds the semaphore has exclusive control over the resource, and when it is finished it releases the semaphore, allowing another task to gain access. Unlike a true mutex, a binary semaphore has no owner, so any task can release it. This approach is effective for managing mutual exclusion in systems with low complexity.

Counting Semaphores

Counting semaphores are more versatile, allowing multiple tasks to access a resource simultaneously, as long as the number of tasks does not exceed a predefined limit. This type of semaphore is commonly used in systems with a pool of shared resources, such as buffers or printers, where a specific number of tasks need to access the resource concurrently. Counting semaphores track the number of available resources, blocking tasks when the limit is reached and allowing them to proceed once a resource becomes available.
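The sketch below illustrates a counting semaphore with Python’s `threading.Semaphore`. The pool size, task count, and the `run_pool` helper are assumptions made for the example. It records the largest number of tasks that were inside the guarded region at once.

```python
import threading
import time

def run_pool(num_tasks: int = 6, pool_size: int = 2) -> int:
    """Return the highest number of tasks seen using the pool at once."""
    slots = threading.Semaphore(pool_size)  # counter starts at pool_size
    stats_lock = threading.Lock()           # protects the bookkeeping below
    active = 0
    peak = 0

    def task() -> None:
        nonlocal active, peak
        with slots:  # wait: blocks while all slots are taken
            with stats_lock:
                active += 1
                peak = max(peak, active)
            time.sleep(0.01)  # simulate using the resource
            with stats_lock:
                active -= 1
        # Leaving the `with slots` block signals the semaphore, freeing a slot.

    threads = [threading.Thread(target=task) for _ in range(num_tasks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak

print(run_pool())
```

The returned peak should never exceed `pool_size`, which is the limit the semaphore enforces.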

By using semaphores, systems can efficiently manage access to shared resources, ensuring that tasks are executed in a coordinated manner and preventing issues that arise from uncontrolled access.

Mutexes and Their Applications

Mutexes are a fundamental tool used to manage access to shared resources in environments where multiple tasks operate concurrently. They ensure that only one task can access a resource at any given time, providing a mechanism to prevent conflicts that could arise from simultaneous access. This exclusive access is crucial for maintaining data integrity and ensuring that operations on shared resources do not interfere with each other.

How Mutexes Work

A mutex works by providing a locking mechanism. When a task needs to access a shared resource, it must first acquire the mutex associated with that resource. If the mutex is already held by another task, the requesting task must wait until it is released. Once the task has completed its work, it releases the mutex, allowing other tasks to proceed.

- Exclusive Access: Only one task can hold the mutex at any time, ensuring that no other task can access the shared resource during this period.

- Blocking: Tasks that cannot acquire the mutex immediately are blocked, meaning they wait until the mutex is available again.

- Prevention of Race Conditions: Mutexes help eliminate issues like race conditions, where tasks may simultaneously alter data, leading to unpredictable results.

Applications of Mutexes

Mutexes are widely used in many systems and applications where concurrent execution is required. Their primary function is to ensure that shared resources are used safely and efficiently, particularly in environments with multiple threads or tasks. Some common areas where mutexes are applied include:

- Database Systems: Mutexes are used to manage access to records or tables, ensuring that multiple users do not modify the same data simultaneously.

- Multithreaded Applications: In software with multiple threads, mutexes prevent threads from accessing shared variables or memory locations at the same time, ensuring that data remains consistent.

- File Systems: When multiple processes need to read or write to a file, mutexes are used to prevent conflicts that could corrupt the file content.

By using mutexes, developers can create more reliable and efficient systems, ensuring that resources are accessed in a controlled manner and that tasks do not conflict with one another. The simplicity and effectiveness of mutexes make them an essential tool in the design of concurrent systems.

Race Conditions and Prevention Methods

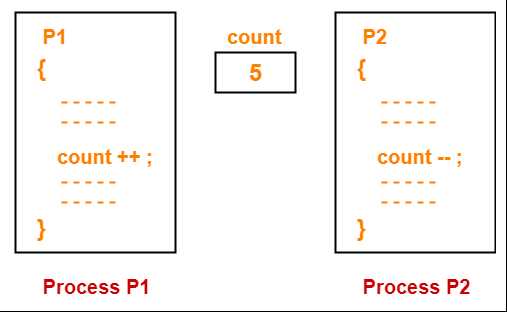

Race conditions occur when two or more tasks access shared resources simultaneously in an uncontrolled manner, leading to inconsistent or incorrect outcomes. These issues arise when tasks interact with shared data in a way that the final result depends on the sequence or timing of their execution. If not properly managed, race conditions can cause unpredictable behavior, data corruption, and system instability.

Understanding Race Conditions

In systems with multiple tasks, race conditions typically arise when several tasks attempt to read or write to the same data without proper coordination. The result of these operations depends on the order in which tasks execute, and if the timing is not synchronized, it can lead to conflicting changes or errors. Common examples include updating shared variables, accessing critical sections, or modifying files without proper locking mechanisms.
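A real race is timing-dependent and hard to reproduce, so the following sketch simulates one deterministically. Each task’s increment is split into its read step and its write step, and a schedule string chooses the interleaving. The names `increment_steps` and `run` are illustrative.

```python
def increment_steps(shared: dict):
    """One task's read-modify-write, split into its two separate steps."""
    local = shared["value"]      # step 1: read the shared value
    yield                        # the task may be interrupted here
    shared["value"] = local + 1  # step 2: write back the stale copy plus one

def run(schedule: str) -> int:
    """Run tasks A and B one step at a time in the order given by `schedule`."""
    shared = {"value": 0}
    tasks = {"A": increment_steps(shared), "B": increment_steps(shared)}
    for name in schedule:
        next(tasks[name], None)  # advance that task by one step
    return shared["value"]

print(run("AABB"))  # A finishes before B starts: prints 2
print(run("ABAB"))  # both read 0 before either writes: prints 1
```

In the second schedule one update is lost, because both tasks read the old value before either writes. This is the pattern that locking or atomic operations are meant to rule out.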

Prevention Techniques

To prevent race conditions, several methods can be employed to ensure tasks do not interfere with each other. The following techniques are commonly used in concurrent systems:

- Mutual Exclusion: Ensuring that only one task can access a critical section at any time by using locks, semaphores, or mutexes to protect shared resources from concurrent access.

- Atomic Operations: Performing operations that are indivisible, ensuring that tasks cannot interrupt or alter the operation midway. This guarantees the consistency of shared data.

- Transaction-Based Approaches: Using transactions to group a series of operations, ensuring that all operations within the transaction are completed successfully or none are applied, preventing partial updates.

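- Priority Scheduling: On a single processor, running a critical task at a priority high enough that no competing task can preempt it can reduce the chance of conflict. This is not a general solution, since it does not protect against tasks running in parallel on other cores, and it is best combined with one of the techniques above.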

By applying these prevention methods, systems can maintain data integrity, ensure predictable results, and avoid the detrimental effects of race conditions in multi-tasking environments. These techniques are fundamental in designing reliable and robust systems that perform consistently under concurrent execution.

Deadlock: Causes and Solutions

Deadlock is a state in which a set of tasks are unable to proceed because each is waiting for another to release resources or perform an action, resulting in a standstill. This situation can severely affect system performance, as tasks are left in a waiting state indefinitely. The challenge lies in both identifying and preventing deadlocks before they can disrupt system operations.

Causes of Deadlock

Deadlock typically occurs when a set of tasks hold resources while simultaneously waiting for resources held by other tasks. This creates a cycle of dependencies that prevents any task from making progress. Deadlock can arise only when all four of the following conditions hold at the same time:

- Mutual Exclusion: A resource can be allocated to only one task at a time, and other tasks must wait until it is released.

- Hold and Wait: A task holds at least one resource while waiting to acquire another resource that is currently held by a different task.

- No Preemption: Once a task acquires a resource, it cannot be forcibly taken away from it. This means tasks can be stuck in a waiting state, preventing other tasks from making progress.

- Circular Wait: A set of tasks exists where each task is waiting for a resource held by the next task in the cycle. This circular dependency forms the core of deadlock.
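The circular wait condition can be checked mechanically. The sketch below assumes a simplified wait-for graph, represented as a dictionary that maps each task to the tasks it is waiting on, and uses depth-first search to look for a cycle. The task names are made up for illustration.

```python
def has_cycle(wait_for: dict[str, list[str]]) -> bool:
    """Return True if the wait-for graph contains a cycle (a circular wait)."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    state: dict[str, int] = {}

    def visit(node: str) -> bool:
        state[node] = GREY
        for nxt in wait_for.get(node, []):
            colour = state.get(nxt, WHITE)
            if colour == GREY:
                return True  # reached a task already on the current path
            if colour == WHITE and visit(nxt):
                return True
        state[node] = BLACK
        return False

    return any(state.get(n, WHITE) == WHITE and visit(n) for n in wait_for)

# T1 waits for T2, T2 for T3, and T3 for T1: a circular wait.
print(has_cycle({"T1": ["T2"], "T2": ["T3"], "T3": ["T1"]}))  # True
print(has_cycle({"T1": ["T2"], "T2": ["T3"], "T3": []}))      # False
```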

Solutions to Prevent Deadlock

Preventing deadlock requires careful management of resources and task scheduling. Various strategies can be employed to avoid or mitigate the risks of deadlock:

- Resource Allocation Graphs: By representing tasks and resources as a graph, it is possible to detect circular dependencies and avoid the conditions that lead to deadlock.

- Timeouts: Implementing time limits for tasks waiting for resources ensures that they do not wait indefinitely. If a task cannot acquire a resource within the time limit, it can release any held resources and retry.

- Preemption: Allowing resources to be forcibly taken from tasks and allocated to others can break the cycle of waiting, effectively preventing deadlock.

- Resource Ordering: Assigning an order to resources and requiring tasks to request them in a consistent sequence helps prevent circular wait conditions and reduces the likelihood of deadlock.

- Avoidance Algorithms: Some algorithms, such as the Banker’s Algorithm, analyze resource requests and determine whether granting a request would lead to a potentially unsafe state. If so, the request is denied.
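As an illustration of resource ordering, the sketch below always acquires two locks in order of a numeric ID, so two opposite transfers cannot each hold one lock while waiting for the other. The `Account` class and `transfer` function are hypothetical names invented for this example.

```python
import itertools
import threading

class Account:
    _ids = itertools.count()  # gives every account a unique, ordered id

    def __init__(self, balance: int) -> None:
        self.id = next(Account._ids)
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # nothing to do, and locking the same lock twice would hang
    # Always lock the account with the smaller id first, whichever way the
    # money flows. A consistent global order rules out circular wait.
    first, second = sorted((src, dst), key=lambda acct: acct.id)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount

def run_transfers(rounds: int = 1000) -> tuple[int, int]:
    a, b = Account(100), Account(100)

    def move(src: Account, dst: Account) -> None:
        for _ in range(rounds):
            transfer(src, dst, 1)

    t1 = threading.Thread(target=move, args=(a, b))
    t2 = threading.Thread(target=move, args=(b, a))
    t1.start()
    t2.start()
    t1.join()
    t2.join()
    return a.balance, b.balance

print(run_transfers())
```

If each thread instead locked its source account first, the two threads could each hold one lock and wait forever for the other.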

By understanding the causes of deadlock and employing these prevention techniques, systems can ensure smooth operation and avoid the detrimental effects of tasks being stuck in a state of inaction.
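The safety check at the heart of the Banker’s Algorithm can be sketched as follows. The function name `is_safe` and the list-of-lists layout are assumptions for this example: `allocation[i]` holds what task `i` currently has, and `need[i]` holds what it may still request.

```python
def is_safe(available: list[int],
            allocation: list[list[int]],
            need: list[list[int]]) -> bool:
    """Return True if every task can finish in some order (a safe state)."""
    work = list(available)  # resources free right now
    finished = [False] * len(allocation)
    progressed = True
    while progressed:
        progressed = False
        for i, (alloc, nd) in enumerate(zip(allocation, need)):
            # A task can finish if its remaining need fits in what is free.
            if not finished[i] and all(n <= w for n, w in zip(nd, work)):
                # Pretend it runs to completion and returns its resources.
                work = [w + a for w, a in zip(work, alloc)]
                finished[i] = True
                progressed = True
    return all(finished)

# One resource type, two tasks holding 1 unit each.
print(is_safe([1], [[1], [1]], [[1], [2]]))  # True: task 0 finishes, then task 1
print(is_safe([1], [[1], [1]], [[2], [2]]))  # False: neither need fits
```

A request would be granted only if the state that results from granting it still passes this check.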

Message Passing in Synchronization

Message passing is a fundamental communication technique used for coordinating tasks and sharing data in environments where multiple entities need to work together. This method allows tasks to send and receive messages, enabling the exchange of information or requests for resources. By controlling the flow of communication between tasks, message passing can ensure smooth interaction and prevent conflicts in resource usage.

In systems that rely on message passing, tasks typically send messages to other tasks to request resources, exchange data, or notify about state changes. This approach offers an alternative to shared memory communication, as it allows tasks to communicate without directly accessing each other’s data. By utilizing message passing mechanisms, such as queues or buffers, tasks can maintain separation while still coordinating their actions effectively.

Types of Message Passing

Message passing can be categorized into two main types based on how communication is handled:

| Type | Description |
|---|---|
| Direct Communication | In this approach, tasks communicate directly with each other by sending messages through a predefined channel or endpoint. The sender knows the receiver, and messages are exchanged one-to-one. |
| Indirect Communication | Here, tasks send messages through an intermediary, such as a message queue or a shared mailbox. The sender does not necessarily know the receiver, and multiple tasks can receive messages from the same queue. |

Message passing simplifies the coordination between tasks in complex systems by avoiding the need for them to share memory spaces directly. It provides flexibility, allowing tasks to operate independently while still interacting in a controlled manner. However, the efficiency and reliability of message passing depend on the underlying communication mechanisms and protocols used in the system.
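Indirect communication can be sketched with Python’s thread-safe `queue.Queue` acting as the mailbox. The `run_mailbox` helper and the `DONE` sentinel are illustrative choices. Within a single process the threads still share memory, so this only models the message-passing style.

```python
import queue
import threading

def run_mailbox(items: list) -> list:
    """Send `items` through a mailbox and return what the receiver got."""
    mailbox: queue.Queue = queue.Queue()
    received = []
    DONE = object()  # sentinel message meaning "no more data"

    def sender() -> None:
        for item in items:
            mailbox.put(item)  # the sender never touches the receiver's data
        mailbox.put(DONE)

    def receiver() -> None:
        while True:
            msg = mailbox.get()  # blocks until a message arrives
            if msg is DONE:
                break
            received.append(msg)

    threads = [threading.Thread(target=sender),
               threading.Thread(target=receiver)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return received

print(run_mailbox(["request", "data", "status"]))
```

The queue handles its own locking internally, so neither task needs an explicit lock.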

Monitors for Process Synchronization

Monitors are a high-level abstraction used to control access to shared resources in concurrent systems. They provide a structured way to manage synchronization and ensure that multiple tasks do not interfere with each other when accessing critical sections of code. By encapsulating both data and the methods that operate on that data, monitors help to prevent conflicts without requiring explicit locks or complex mechanisms.

A monitor typically consists of two main components: a set of shared variables and a set of procedures or functions that can operate on those variables. The key feature of a monitor is that it guarantees that only one task can execute a procedure at a time, ensuring mutual exclusion. If a second task attempts to enter the monitor while one is already inside, it is blocked until the monitor becomes available.

Additionally, monitors often include condition variables that allow tasks to wait for certain conditions to be met before proceeding. These condition variables enable tasks to suspend their execution until specific criteria are fulfilled, ensuring that tasks are coordinated in a way that prevents unnecessary delays or resource contention.

Overall, monitors simplify synchronization by encapsulating complex logic in a structured manner. This abstraction allows developers to focus on higher-level application logic without worrying about low-level synchronization details.
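Python has no built-in monitor construct, but the pattern can be approximated with a class whose methods all run under one `threading.Condition`. The `AccountMonitor` class below is a hypothetical example: `withdraw` waits on the condition until the balance is large enough.

```python
import threading

class AccountMonitor:
    """Monitor-style class: shared state plus procedures, one task at a time."""

    def __init__(self, balance: int = 0) -> None:
        self._balance = balance
        self._cond = threading.Condition()  # a lock plus a condition variable

    def deposit(self, amount: int) -> None:
        with self._cond:              # enter the monitor
            self._balance += amount
            self._cond.notify_all()   # wake tasks waiting for funds

    def withdraw(self, amount: int) -> int:
        with self._cond:
            # Re-check the condition after every wake-up.
            while self._balance < amount:
                self._cond.wait()     # releases the lock while suspended
            self._balance -= amount
            return self._balance

    def balance(self) -> int:
        with self._cond:
            return self._balance

account = AccountMonitor(0)
waiter = threading.Thread(target=account.withdraw, args=(50,))
waiter.start()          # blocks inside withdraw until enough is deposited
account.deposit(30)
account.deposit(30)
waiter.join()
print(account.balance())  # 10
```

Callers never see the lock. They only call `deposit` and `withdraw`, which is the encapsulation that monitors are meant to provide.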

Comparing Busy Waiting and Blocking

In systems where multiple tasks need to coordinate their execution, two common techniques for managing access to shared resources are busy waiting and blocking. Both approaches aim to handle situations where a task cannot proceed because the required resource is unavailable, but they differ significantly in how they manage the task’s execution while waiting.

Busy waiting involves a task continuously checking if the resource is available, without releasing control to other tasks. This approach can be inefficient because it consumes CPU resources even when the task is not making any progress. While it is simple to implement, it can lead to wasted computation power and a decrease in system efficiency, especially in multi-tasking environments.

On the other hand, blocking allows a task to voluntarily suspend its execution until the resource becomes available. This method typically involves placing the task in a waiting queue, where it remains inactive until it is signaled to resume. Unlike busy waiting, blocking frees up the CPU for other tasks to execute, resulting in better overall system resource utilization.

- Busy Waiting

    - Task remains active, constantly checking for availability.

    - Consumes CPU time unnecessarily.

    - Simple implementation but inefficient in resource use.

- Blocking

    - Task is suspended until the resource becomes available.

    - Releases the CPU, allowing other tasks to run.

    - More efficient in terms of system resource management.

In general, while busy waiting may be suitable for scenarios with very short waiting times, blocking is considered a more efficient strategy in most real-world applications, especially when the waiting period is long. Understanding the trade-offs between these two approaches is essential for optimizing system performance and resource usage.
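The two styles can be contrasted in a short sketch built on `threading.Event`. The helper names `wait_busy`, `wait_blocking`, and `demo` are assumptions for illustration. The busy version polls in a loop, while the blocking version suspends inside `Event.wait()`.

```python
import threading
import time

def wait_busy(ready: threading.Event) -> int:
    """Spin until the flag is set; return how many times it polled."""
    polls = 0
    while not ready.is_set():
        polls += 1  # burns CPU time without making progress
    return polls

def wait_blocking(ready: threading.Event) -> bool:
    """Suspend until another thread sets the flag."""
    return ready.wait()  # the thread sleeps until it is signaled

def demo(waiter):
    """Run `waiter` in a thread, signal it after 50 ms, return its result."""
    ready = threading.Event()
    result = []
    t = threading.Thread(target=lambda: result.append(waiter(ready)))
    t.start()
    time.sleep(0.05)  # the resource becomes available a little later
    ready.set()
    t.join()
    return result[0]

print("busy-wait polls:", demo(wait_busy))
print("blocking wait returned:", demo(wait_blocking))
```

The poll count varies from run to run, but it is typically large, which gives a rough sense of the work wasted while spinning.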

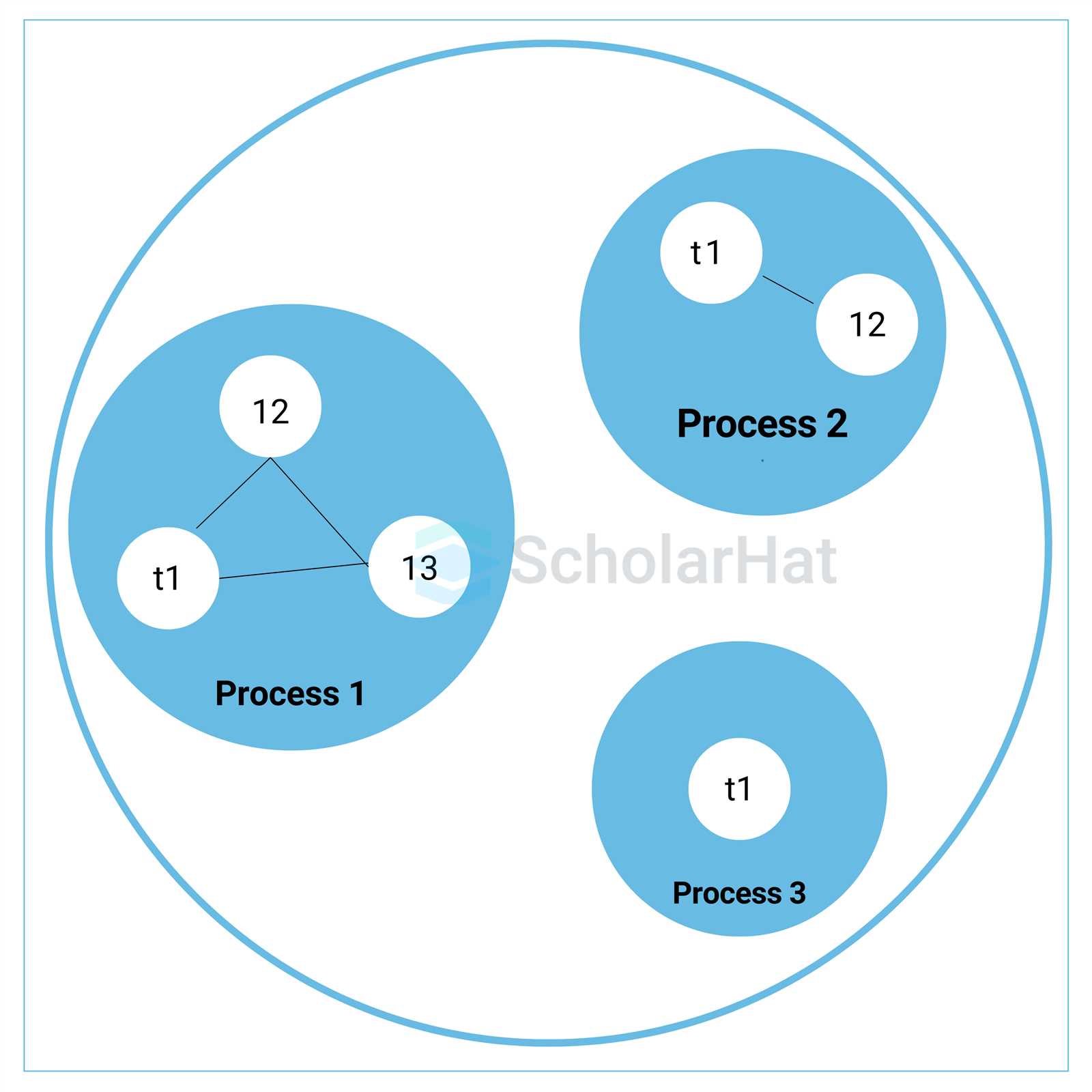

Synchronization in Multi-threaded Environments

In systems where multiple tasks or threads are executing concurrently, ensuring that they do not interfere with each other when accessing shared resources is crucial. Without proper management, threads may cause inconsistent or incorrect behavior, such as data corruption or race conditions. Effective coordination is needed to prevent conflicts while maintaining high performance and resource efficiency.

In multi-threaded environments, different mechanisms are used to control access to shared variables, ensuring that only one thread can execute a critical section of code at a time. Used correctly, these mechanisms help prevent data inconsistency. Used carelessly, they can introduce deadlocks, where threads are stuck waiting for each other indefinitely.

Common Techniques for Managing Access

There are several common approaches used to manage access to shared resources:

- Locks: A basic mechanism where a thread must acquire a lock before accessing shared data. This ensures that only one thread can operate on the data at a time.

- Semaphores: Counters that control access to a set of resources. Semaphores allow a specific number of threads to access a resource simultaneously, helping manage concurrency efficiently.

- Monitors: A higher-level abstraction that encapsulates shared data and methods. Monitors provide automatic mutual exclusion and help avoid complex locking mechanisms.

Challenges in Multi-threaded Systems

While these synchronization techniques are vital, they also introduce challenges that need to be carefully addressed:

- Deadlocks: Situations where two or more threads are blocked indefinitely, waiting for each other to release resources. Proper management of locks and resources is needed to avoid such scenarios.

- Starvation: When certain threads are indefinitely postponed because other threads continuously gain access to resources. This issue can be mitigated through fair scheduling policies.

By understanding and applying these techniques, developers can create efficient and reliable multi-threaded applications that operate smoothly in complex environments. Effective thread management is essential for optimizing performance and maintaining consistency in multi-threaded systems.

Resource Allocation and Process Sync

In any system where multiple tasks are running simultaneously, the allocation of resources becomes a critical aspect. Properly distributing available resources such as memory, CPU time, or I/O devices is essential to prevent conflicts or inefficiencies. Without careful management, tasks may compete for the same resources, leading to delays or even failure to complete certain operations.

To ensure smooth operation, coordination mechanisms are necessary to regulate how tasks access and share these resources. This is especially important when resources are limited and cannot be simultaneously allocated to all tasks. A robust resource management system helps in preventing bottlenecks, ensuring that resources are used optimally and fairly.

One key challenge in this domain is to balance the needs of different tasks while avoiding scenarios where one task holds a resource for too long, blocking others from proceeding. This can lead to issues such as starvation, where some tasks never get the resources they need, or deadlocks, where tasks are stuck waiting indefinitely for resources held by one another.

Effective resource allocation strategies also include techniques such as priority scheduling, which ensures that high-priority tasks are allocated resources first, and fairness algorithms, which aim to give each task an equitable share of the available resources. By implementing these strategies, systems can maintain both efficiency and fairness while preventing resource contention problems.

Priority Inversion and Its Impact

In systems where tasks with varying importance share resources, priority inversion can occur. This phenomenon happens when a higher-priority task is delayed by a lower-priority task holding a resource. As a result, the system’s efficiency and response time are significantly compromised, especially in time-sensitive applications. Priority inversion can lead to unexpected delays and potentially catastrophic failures in real-time systems.

The impact of priority inversion becomes more pronounced when a task that needs immediate access to resources is forced to wait behind lower-priority tasks. This creates a situation where the priority of the task requesting the resource is effectively inverted, causing a breakdown in the expected task scheduling model.

Effects on System Performance

When priority inversion occurs, the response time of high-priority tasks increases, potentially leading to missed deadlines in critical systems. For instance, in real-time applications such as embedded systems, priority inversion can lead to severe consequences, including system crashes or failures in operations that require precise timing.

Solutions and Mitigations

Several methods can mitigate the effects of priority inversion. One common technique is priority inheritance, where a lower-priority task temporarily inherits the priority of the highest-priority task it is blocking and returns to its original priority when it releases the resource. Another approach is the priority ceiling protocol, in which each shared resource is assigned a ceiling equal to the highest priority of any task that may use it, and a task holding the resource runs at that ceiling priority until it releases it.

By addressing priority inversion, system designers can ensure that critical tasks are executed in a timely manner, improving overall system reliability and performance.

Importance of Atomic Operations

In systems that involve shared resources, ensuring data consistency is paramount. Atomic operations play a crucial role in this process, as they guarantee that a series of actions are completed in a single, indivisible step. This means that no other task can interfere during the execution of these operations, ensuring that data remains intact and free from inconsistencies caused by interruptions or concurrent modifications.

Without atomic operations, tasks could read or modify data in inconsistent states, leading to unpredictable outcomes or errors. In environments with multiple tasks or threads, where tasks need to access and modify shared data, the proper use of atomic operations ensures that these interactions are seamless and error-free, thereby maintaining the integrity of the entire system.

How Atomic Operations Enhance System Reliability

Atomic operations ensure that certain actions are completed fully or not at all, which eliminates the risk of incomplete or corrupted data. For example, when updating a shared variable, an atomic operation ensures that no other task can alter the data between the start and end of the update, thus avoiding race conditions or data corruption. This is particularly important in systems where timing is critical, and the failure to maintain consistent states can have significant repercussions.

Common Atomic Operations

There are several types of atomic operations, each designed for different use cases. Some common ones include incrementing or decrementing counters, setting flags, and comparing values before performing updates. These operations are typically supported directly by hardware, making them fast and efficient. Additionally, many modern programming languages and libraries offer built-in support for atomic operations, further simplifying their implementation in multi-threaded environments.
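Python’s standard library does not expose hardware atomics, so the sketch below emulates a compare-and-swap cell with a private lock, purely to show the retry pattern built on top of it. The `AtomicInt` class and `atomic_increment` function are hypothetical. In languages with real atomics, the lock would be replaced by a single hardware instruction.

```python
import threading

class AtomicInt:
    """Emulates a compare-and-swap cell; the lock stands in for the hardware."""

    def __init__(self, value: int = 0) -> None:
        self._value = value
        self._lock = threading.Lock()

    def load(self) -> int:
        with self._lock:
            return self._value

    def compare_and_swap(self, expected: int, new: int) -> bool:
        """Set the value to `new` only if it still equals `expected`."""
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False

def atomic_increment(cell: AtomicInt) -> int:
    """Retry until the update succeeds without interference."""
    while True:
        old = cell.load()
        if cell.compare_and_swap(old, old + 1):
            return old + 1
        # Another task changed the value in between; read again and retry.

cell = AtomicInt(0)
workers = [
    threading.Thread(target=lambda: [atomic_increment(cell) for _ in range(500)])
    for _ in range(4)
]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(cell.load())  # 2000
```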

By leveraging atomic operations, developers can build more reliable, stable, and efficient systems that handle shared resources with confidence.

Understanding the Producer-Consumer Problem

In multi-tasking environments, different tasks often need to interact with each other while sharing resources. One of the classic challenges is managing the interaction between tasks that produce and consume data. The problem arises when there is a need to balance the production of data with its consumption, ensuring that neither the producer nor the consumer is overwhelmed or left idle.

At its core, the issue involves a shared buffer where data is stored temporarily. The producer is responsible for generating and adding data to this buffer, while the consumer retrieves and processes it. The challenge lies in ensuring that the buffer doesn’t overflow when the producer runs faster than the consumer or underflow when the consumer runs faster than the producer, thus avoiding errors or inefficiencies in the system.

The Key Issues in Managing Data Flow

There are a few critical aspects that need to be addressed when handling the producer-consumer problem:

- Buffer Capacity: The buffer has limited space, and it’s crucial to manage its capacity so that it doesn’t overflow or underflow. If the producer adds too much data while the consumer isn’t consuming fast enough, an overflow occurs. Conversely, if the consumer tries to retrieve data when the buffer is empty, an underflow happens.

- Synchronization: Both the producer and consumer must operate without interfering with each other. Synchronization ensures that data is added and removed in a coordinated way, preventing simultaneous read/write conflicts.

Common Solutions and Approaches

Various techniques have been proposed to solve this problem, with the goal of maintaining an efficient flow of data between producers and consumers. Some common approaches include:

- Locks and Semaphores: These mechanisms control access to the shared buffer, ensuring that only one task can modify it at a time, thereby preventing race conditions.

- Condition Variables: These are used to signal when the buffer is full or empty, allowing the producer and consumer to wait for the right conditions before proceeding.

- Queues: Advanced data structures like queues can help streamline data flow, offering automatic management of buffer states and making it easier to handle multiple producers and consumers.
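The classic semaphore-based solution can be sketched as follows, assuming one producer, one consumer, and a small bounded buffer. One semaphore counts empty slots, another counts filled slots, and a lock protects the buffer itself. The `produce_consume` name and its parameters are illustrative.

```python
import threading
from collections import deque

def produce_consume(items: list, capacity: int = 2) -> list:
    """Pass `items` through a bounded buffer; return them in consumed order."""
    buffer: deque = deque()
    empty_slots = threading.Semaphore(capacity)  # free places in the buffer
    filled_slots = threading.Semaphore(0)        # items ready to be consumed
    mutex = threading.Lock()                     # protects the buffer itself
    consumed = []

    def producer() -> None:
        for item in items:
            empty_slots.acquire()   # blocks when the buffer is full
            with mutex:
                buffer.append(item)
            filled_slots.release()  # signal: one more item available

    def consumer() -> None:
        for _ in range(len(items)):
            filled_slots.acquire()  # blocks when the buffer is empty
            with mutex:
                item = buffer.popleft()
            empty_slots.release()   # signal: one more free slot
            consumed.append(item)

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed

print(produce_consume(list(range(10))))
```

Because each side waits on its semaphore before taking the lock, the producer can never overfill the buffer and the consumer can never read from an empty one.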

By understanding the dynamics of the producer-consumer problem and applying the appropriate techniques, it’s possible to achieve an efficient and smooth interaction between the generating and consuming tasks in any system.

Study Tips for Mastering Coordination Techniques

When preparing for assessments focused on coordinating multiple tasks and managing shared resources, a clear understanding of key concepts is essential. To succeed, one must not only learn the theoretical aspects but also practice applying them to real-world scenarios. Effective preparation involves a combination of reading, solving problems, and revisiting foundational principles regularly.

Mastering Core Concepts

Start by building a strong foundation in the basic principles that govern task coordination. This includes understanding common challenges like resource contention, task interactions, and state management. Focus on:

- Understanding Different Techniques: Familiarize yourself with methods such as locks, condition variables, semaphores, and barriers. Knowing when and how to apply each will help you navigate complex scenarios.

- Recognizing Potential Issues: Be able to identify common problems like deadlocks, race conditions, and starvation, as well as the strategies to avoid them.

Practical Exercises and Problem Solving

Theoretical knowledge is vital, but practice is key to truly mastering the concepts. Focus on:

- Solving Problems: Work through sample problems that involve managing task coordination, focusing on situations where you need to ensure that multiple tasks operate smoothly without conflicts.

- Implementing Solutions: Code simple programs that simulate task coordination, testing your ability to handle resources and prevent errors like deadlocks or race conditions.

By combining theoretical understanding with hands-on practice, you will develop the confidence to handle more complex challenges. This balanced approach will help you prepare effectively for assessments and deepen your grasp of task management techniques.