For those preparing for assessments in system design, mastering key concepts is essential to succeeding. Understanding the fundamental components of computing systems, from memory management to processing speed, is crucial. Each area holds its own set of principles that must be well-grasped for comprehensive understanding and problem-solving.

Mastering the essentials of how different parts of a computing unit interact provides the foundation needed to tackle various challenges. Topics range from performance optimization to handling system resources efficiently. By exploring these core principles, learners can develop the ability to approach any scenario methodically and with confidence.

In this guide, we will cover essential themes that frequently appear in evaluations, offering both theoretical explanations and practical examples. From understanding internal workflows to optimizing system design, each section is designed to help you prepare effectively for your upcoming assessments.

System Design Assessment Preparation

To effectively prepare for evaluations in system design, it is crucial to familiarize yourself with key topics that often appear in the tests. Mastering the foundational concepts enables you to approach complex scenarios with a clear understanding of how different elements interact. This section aims to guide you through common subjects, providing insight into what you can expect and how best to approach them.

Key Areas to Focus On

Topics that commonly appear in evaluations include memory management, processing units, and performance optimization. By thoroughly understanding the role of each component, you can improve your ability to identify key details in questions. Furthermore, practical examples can help solidify your understanding of theoretical concepts.

Techniques for Effective Problem Solving

Practical application is essential for mastering system design. It is not enough to simply memorize concepts; being able to apply them to real-world scenarios is key. Practice solving problems related to resource allocation, processing flow, and system performance to enhance your readiness for the assessment.

Understanding Basic System Design Concepts

Grasping the fundamentals of system components is essential for understanding how the core elements of a processing unit interact. By focusing on the primary building blocks, you gain a clearer perspective on how data is processed, stored, and transferred. This section covers the basic principles that form the foundation of more complex topics.

Core Components of a Processing Unit

- Control Unit: Manages instructions and coordinates activities between different parts of the system.

- Processing Unit: Carries out the execution of operations based on input data.

- Memory: Stores data temporarily or permanently, ensuring quick access to frequently used information.

- Input/Output: Handles the transfer of data between the system and external devices.

Important Concepts to Learn

- Data Flow: Understanding how data moves through the system helps you anticipate bottlenecks and optimize performance.

- Resource Management: Efficient management of resources such as memory and processing power ensures smooth operation and minimal delays.

- Instruction Set: Familiarizing yourself with the set of instructions a unit can process is crucial for effective problem-solving.

Key Topics for System Design Assessments

To succeed in evaluations focused on system design, it’s essential to understand the core areas that are typically covered. These topics form the foundation of how processing units operate, manage resources, and execute tasks. A deep knowledge of these areas will help in tackling a variety of challenges that may appear during an assessment.

Fundamental Concepts to Focus On

- Memory Hierarchy: Understanding the different levels of memory (registers, cache, RAM, disk storage) and how each level trades access speed for capacity.

- Processing Units: Familiarity with how central units perform calculations and manage control flow.

- Instruction Pipelines: Grasping how instructions are processed in stages to enhance throughput.

- Parallelism: Learning the principles behind executing multiple tasks simultaneously to improve performance.

Advanced Concepts to Master

- Multiprocessing: How multiple processing units work together to handle complex tasks more effectively.

- Virtualization: The creation of virtual versions of resources like memory and processors, often used in cloud computing.

- System Bus: Understanding the pathways through which data is transmitted between different components.

- Optimization Techniques: Methods for improving system performance, from hardware configuration to software processes.

Commonly Asked Topics in Assessments

In assessments related to system design, there are several core topics that frequently appear, testing your understanding of essential principles and their application. Familiarity with these recurring themes will help you better prepare for a range of potential scenarios and improve your problem-solving abilities.

Typical Areas of Focus

- Memory Management: Understand how different memory types function and how they impact performance.

- Instruction Execution: Know the steps involved in executing a task and how various units interact to complete it.

- Data Flow Optimization: Be able to identify bottlenecks and propose ways to streamline data movement.

- Parallel Processing: Understand how multiple operations can run concurrently to enhance system efficiency.

Frequently Covered Concepts

- Performance Metrics: Be prepared to calculate and interpret key performance indicators such as throughput and latency.

- Resource Allocation: How resources like CPU time and memory are distributed for optimal task execution.

- Pipeline Stages: Know how instruction pipelines work and the benefits of each stage in the process.

- System Bus: Understand the function of the bus in communication between system components.

Memory Hierarchy and Its Importance

In any processing system, the arrangement of memory plays a crucial role in determining how quickly data can be accessed and manipulated. The structure of memory, from the fastest but smallest types to the larger, slower ones, directly affects system performance. Optimizing memory access is essential to reduce delays and improve efficiency during data processing tasks.

Memory hierarchy refers to the layered system of storage types, each offering different trade-offs between speed, capacity, and cost. At the top of this hierarchy are the fastest memory types, such as registers and cache, which provide quick access to frequently used data. As you move down the hierarchy, storage types become slower but offer more capacity, such as RAM and disk storage.

Understanding the role of each level in the hierarchy allows for better resource management, ensuring that data is retrieved and stored in the most efficient way possible. By designing systems that optimize the use of each memory type, significant improvements in processing speed and overall performance can be achieved.
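One common way to put a number on the benefit of the hierarchy is the average memory access time. The sketch below is illustrative only: the function name `average_access_time` and every latency and hit-rate figure are assumed values, not measurements from a real system.

```python
def average_access_time(levels, memory_latency_ns):
    """Return the average access time in nanoseconds.

    levels: list of (hit_latency_ns, hit_rate) tuples, ordered from the
            level closest to the processor to the one furthest away.
    memory_latency_ns: latency of the final level, which always hits.
    """
    total = 0.0
    reach_probability = 1.0  # fraction of accesses that get this far down
    for hit_latency_ns, hit_rate in levels:
        # Every access that reaches this level pays its lookup latency.
        total += reach_probability * hit_latency_ns
        # Only the misses continue to the next level.
        reach_probability *= (1.0 - hit_rate)
    total += reach_probability * memory_latency_ns
    return total


# Hypothetical figures: a 1 ns cache with a 90% hit rate in front of 100 ns RAM.
with_cache = average_access_time([(1.0, 0.90)], memory_latency_ns=100.0)
without_cache = average_access_time([], memory_latency_ns=100.0)
print(f"with cache: {with_cache:.1f} ns, without cache: {without_cache:.1f} ns")
```

Under these assumed numbers, a small fast cache cuts the average access time from 100 ns to roughly 11 ns, which is why hit rate matters so much in assessment questions on this topic.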

CPU Design and Performance Factors

The design of the central processing unit plays a fundamental role in determining the speed and efficiency of a system. Several factors influence how effectively a unit executes instructions, from its internal architecture to the way it handles multiple tasks. A well-designed processor can significantly boost overall system performance, while poor design can lead to delays and inefficiencies.

Key elements that impact CPU performance include clock speed, instruction pipelines, and the number of cores. Each of these factors determines how quickly and effectively a processor can handle operations, process data, and communicate with other system components. Additionally, the size and structure of caches, as well as how tasks are scheduled, affect how efficiently a processor can handle complex workloads.

Optimizing these components allows for improved throughput and reduced latency, which is essential for tasks that require intensive calculations or real-time processing. Understanding how different design decisions impact performance helps in selecting or building systems that meet specific performance requirements.

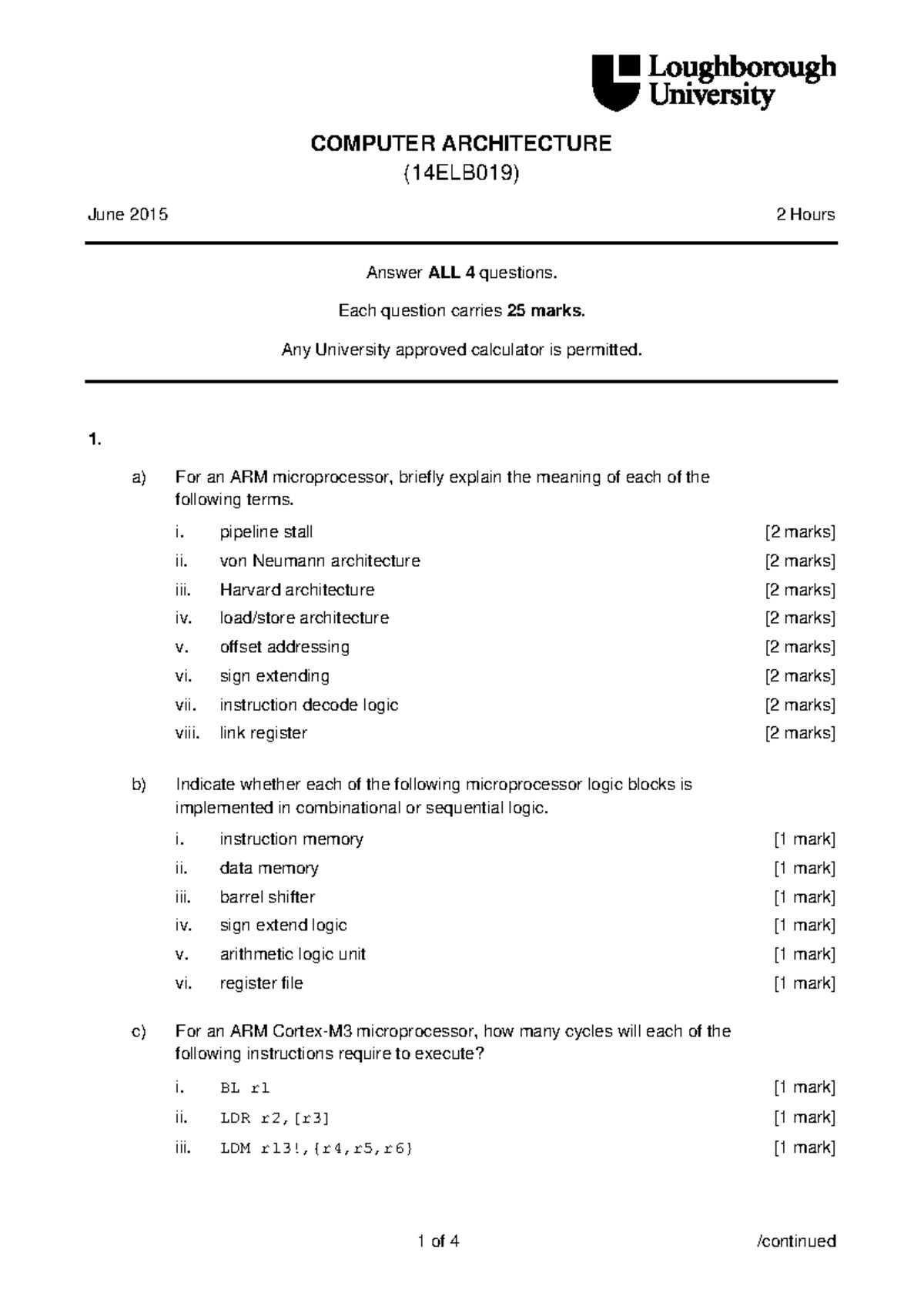

Understanding Pipelining in System Design

Pipelining improves processor performance by keeping several instructions in progress at once, each at a different stage of execution. Instead of finishing one instruction before starting the next, a pipelined processor overlaps them, much like an assembly line. This does not shorten the time any single instruction takes, but it raises throughput, which reduces the total time needed to execute a sequence of instructions.

At its core, pipelining breaks down an instruction cycle into distinct phases, such as fetching, decoding, executing, and writing results. While one instruction is being executed, another can be decoded, and a third can be fetched. This overlapping of tasks enhances throughput and makes better use of system resources, leading to faster processing times and more efficient operation.

However, pipelining also introduces challenges, such as hazards that can cause delays. These include data hazards, control hazards, and structural hazards, each of which can disrupt the smooth flow of instructions through the pipeline. Understanding how to manage and mitigate these issues is key to maximizing the benefits of pipelining in system design.
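The throughput gain and the cost of hazards can be estimated with simple cycle counts. The following is a minimal sketch that assumes an idealized pipeline in which every stage takes exactly one cycle; the function names and the stall count are hypothetical.

```python
def unpipelined_cycles(num_instructions, num_stages):
    # Without pipelining, each instruction passes through every stage
    # before the next instruction starts.
    return num_instructions * num_stages


def pipelined_cycles(num_instructions, num_stages, stall_cycles=0):
    # The first instruction needs num_stages cycles to fill the pipeline;
    # after that, one instruction completes per cycle. Hazards add stalls.
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1) + stall_cycles


baseline = unpipelined_cycles(100, 4)                  # 400 cycles
ideal = pipelined_cycles(100, 4)                       # 103 cycles
with_hazards = pipelined_cycles(100, 4, stall_cycles=20)  # 123 cycles
print(baseline / ideal, baseline / with_hazards)
```

With these assumed figures the ideal speedup approaches, but never quite reaches, the number of stages, and every stall cycle caused by a hazard moves the result further from that limit.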

Difference Between RISC and CISC Architectures

When discussing the design of processing units, two primary instruction set architectures stand out: RISC (Reduced Instruction Set Computing) and CISC (Complex Instruction Set Computing). These approaches differ in their philosophies of how instructions should be executed and optimized for efficiency. Understanding these differences is key to choosing the right type of processor for specific tasks and applications.

Key Characteristics of RISC

RISC favors simplicity by using a small set of fixed-format instructions, most of which are designed to complete in a single clock cycle. Simple instructions are quicker to decode and easier to pipeline. RISC designs typically provide a large number of general-purpose registers and restrict memory access to dedicated load and store instructions, which minimizes memory traffic during computation.

Key Characteristics of CISC

CISC, on the other hand, uses a larger set of instructions, some of which perform several operations (for example, a memory load combined with an arithmetic operation) in a single instruction. This can reduce the number of instructions needed for a task, but complex instructions often take multiple clock cycles and are harder to decode. The trade-off is more compact code, which can be advantageous in applications where memory space is limited.

Cache Memory: Structure and Function

Cache memory plays a vital role in enhancing the performance of a system by providing quick access to frequently used data. It acts as a high-speed intermediary between the processor and main memory, allowing for faster retrieval of information. The structure of cache memory is designed to store a small subset of data, reducing the need to access slower memory types, which in turn accelerates overall system performance.

The cache is typically divided into levels, each with its own characteristics in terms of speed and capacity. Caches closest to the processor (L1) are the fastest but smallest, while caches further away (L2, L3) hold more data but are slower. This hierarchical design balances speed and capacity, optimizing access times based on data usage patterns.

| Cache Level | Speed | Size |
|---|---|---|
| L1 Cache | Fastest | Smallest |
| L2 Cache | Fast, but slower than L1 | Larger than L1 |
| L3 Cache | Slower than L1 and L2 | Largest |

Each level of cache is designed to reduce the latency involved in fetching data from the main memory. The most frequently accessed data is stored in the faster, smaller caches, while less frequently used data is kept in the larger, slower caches. This efficient structure allows for faster data retrieval, improving the overall performance of the system.
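To see how hits and misses arise in practice, it can help to trace a handful of addresses through a small cache. The sketch below assumes a direct-mapped organization, which is only one of several possible cache designs; the class name, cache size, block size, and address trace are all hypothetical.

```python
class DirectMappedCache:
    """A toy direct-mapped cache that tracks tags only, not the data itself."""

    def __init__(self, num_lines, block_size):
        self.num_lines = num_lines
        self.block_size = block_size
        self.tags = [None] * num_lines  # one stored tag per cache line
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Return True on a hit, False on a miss (the block is then loaded)."""
        block_number = address // self.block_size
        index = block_number % self.num_lines   # which line the block maps to
        tag = block_number // self.num_lines    # identifies the block in that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag  # replace whatever block was there before
        self.misses += 1
        return False


cache = DirectMappedCache(num_lines=4, block_size=16)
trace = [0, 4, 8, 64, 0, 16, 20]
results = [cache.access(address) for address in trace]
print(results, cache.hits, cache.misses)
```

In this assumed trace, addresses 4 and 8 hit because they share a block with address 0, while address 64 maps to the same line and evicts that block, so the later access to address 0 misses again.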

Interrupts and Their Handling in Systems

Interrupts are essential for managing events that require immediate attention within a system. When an interrupt occurs, the normal flow of operations is temporarily paused to address the event, allowing for more efficient handling of tasks that cannot wait. This mechanism is crucial for real-time responsiveness and ensuring the proper functioning of multitasking environments.

Types of Interrupts

- Hardware Interrupts: These occur when external devices, such as input devices or peripherals, need immediate attention from the system.

- Software Interrupts: These are generated by running programs or the operating system to request services or to signal errors.

- Timer Interrupts: Generated by a hardware timer at regular intervals, these are used to keep time and to let the scheduler switch between tasks in a multitasking environment.

Handling Interrupts

- Interrupt Service Routine (ISR): This is a specialized function or code that is executed when an interrupt occurs. It is responsible for addressing the specific cause of the interrupt.

- Interrupt Vector: A memory location used to store the address of the ISR. When an interrupt happens, the system refers to the interrupt vector to determine which routine to execute.

- Prioritization: Some interrupts are more urgent than others, so systems often use priority levels to determine the order in which interrupts are processed.

Efficient handling of interrupts is key to maintaining system stability and responsiveness. By pausing regular operations and processing high-priority tasks, systems can continue functioning smoothly even when dealing with unexpected events or errors.
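The relationship between the interrupt vector, the service routines, and prioritization can be sketched in a few lines. This is a simplified software model rather than how any particular processor implements it; the class name, interrupt numbers, and the convention that a lower number means higher priority are all assumptions.

```python
import heapq


class InterruptController:
    """Toy model of an interrupt vector table with priority-ordered servicing."""

    def __init__(self):
        self.vector_table = {}  # interrupt number -> service routine
        self.pending = []       # min-heap of (priority, arrival order, irq)
        self._arrival = 0

    def register(self, irq, handler):
        # Store the routine that should run for this interrupt number.
        self.vector_table[irq] = handler

    def raise_interrupt(self, irq, priority):
        # Assumed convention: a lower priority number is more urgent.
        heapq.heappush(self.pending, (priority, self._arrival, irq))
        self._arrival += 1

    def service_pending(self):
        # Look up and run each routine, most urgent interrupt first.
        results = []
        while self.pending:
            _, _, irq = heapq.heappop(self.pending)
            handler = self.vector_table[irq]
            results.append(handler())
        return results


controller = InterruptController()
controller.register(0, lambda: "timer tick handled")
controller.register(1, lambda: "keyboard input handled")
controller.raise_interrupt(1, priority=5)  # arrives first, but less urgent
controller.raise_interrupt(0, priority=1)
serviced = controller.service_pending()
print(serviced)
```

Although the keyboard interrupt is raised first in this example, the timer interrupt is serviced first because of its assumed higher priority.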

Data Path and Control Path Explained

In any processing system, the data path and control path work together to ensure that instructions are executed properly and efficiently. These two components play distinct but complementary roles in determining how data flows through the system and how various operations are managed. Understanding their functions is essential for grasping how a system processes information and performs tasks.

Data Path

The data path is responsible for the movement of data between different components of the system. It includes registers, arithmetic units, multiplexers, and buses that carry data from one part of the system to another. The data path ensures that the appropriate values are available for processing at the right time, and it is critical for performing arithmetic and logical operations.

Control Path

The control path, in contrast, manages the sequence of operations by directing the flow of data through the system. It generates the necessary control signals that coordinate the activities of the various components in the data path. The control path ensures that the correct operation is executed at each stage of instruction processing, such as selecting which data to fetch or which operation to perform.

Together, the data path and control path enable the system to function effectively by ensuring both the proper handling of data and the correct sequencing of operations. Efficient interaction between these paths is key to achieving optimal performance and accurate task execution.
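The division of labor between the two paths can be illustrated with a toy model in which a lookup table stands in for the control path and a register file plus an ALU operation stand in for the data path. The opcodes, signal names, and register names below are hypothetical and do not correspond to any real instruction set.

```python
import operator

# Control path: each opcode is decoded into the control signals it asserts.
CONTROL_SIGNALS = {
    "ADD": {"alu_op": operator.add, "write_register": True},
    "SUB": {"alu_op": operator.sub, "write_register": True},
    "AND": {"alu_op": operator.and_, "write_register": True},
    "CMP": {"alu_op": operator.sub, "write_register": False},
}


def execute(instruction, registers):
    """Run one instruction of the form (opcode, dest, src_a, src_b)."""
    opcode, dest, src_a, src_b = instruction
    signals = CONTROL_SIGNALS[opcode]  # control path: decode the opcode
    # Data path: read the operands and pass them through the ALU.
    result = signals["alu_op"](registers[src_a], registers[src_b])
    # The control signal decides whether the result is written back.
    if signals["write_register"]:
        registers[dest] = result
    return result


registers = {"r0": 0, "r1": 7, "r2": 5}
added = execute(("ADD", "r0", "r1", "r2"), registers)     # writes 12 into r0
compared = execute(("CMP", "r0", "r1", "r2"), registers)  # computes 2, r0 unchanged
print(added, compared, registers)
```

Note that `SUB` and `CMP` use the same ALU operation in this sketch; only the control signal for register write-back differs, which is the kind of distinction the control path is responsible for.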

Effect of Clock Speed on System Performance

Clock speed is a critical factor in determining how quickly a system can perform tasks. It defines the frequency at which the system’s internal operations are synchronized, with higher clock speeds generally leading to faster processing times. However, while a higher clock speed can boost performance, it is not the sole determinant of a system’s overall efficiency. Other factors, such as the number of cores and the efficiency of the architecture, also play important roles.

Impact of Increased Clock Speed

When clock speed is increased, the processor completes more cycles per second, so it can execute more instructions per second provided the number of cycles each instruction needs stays the same. Tasks such as calculations and data processing finish sooner, improving throughput and reducing the time required for compute-bound workloads.

Limitations of High Clock Speed

However, increasing the clock speed does not always produce proportional performance gains. Power consumption and heat rise with frequency, which imposes thermal limits. In addition, a faster clock does not speed up main memory, so the processor may spend more of its cycles stalled waiting for data, and the amount of instruction-level parallelism the architecture can exploit does not change. In some cases, other optimizations, such as multi-core processing or faster memory access, provide greater performance gains than raising clock speed alone.

In summary: While a higher clock speed can enhance processing power, it is important to consider a balanced approach, where other performance factors like system design, cooling, and workload distribution are also optimized.
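A standard way to reason about this balance is the relation: execution time = instruction count × cycles per instruction ÷ clock rate. The sketch below applies it to made-up workloads; the function name and all figures are assumptions chosen to keep the arithmetic simple.

```python
def execution_time_seconds(instruction_count, cycles_per_instruction, clock_hz):
    # Total cycles needed, divided by the number of cycles available per second.
    return instruction_count * cycles_per_instruction / clock_hz


# Hypothetical workload: 2 billion instructions.
baseline = execution_time_seconds(2_000_000_000, 2.0, 2_000_000_000)      # 2 GHz
faster_clock = execution_time_seconds(2_000_000_000, 2.0, 4_000_000_000)  # 4 GHz
better_design = execution_time_seconds(2_000_000_000, 1.0, 2_000_000_000) # 2 GHz, lower CPI
print(baseline, faster_clock, better_design)
```

In this example, halving the cycles per instruction through a more efficient design gives the same improvement as doubling the clock speed, without the extra heat that a higher frequency brings.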

Analyzing Multiprocessing and Multithreading

Multiprocessing and multithreading are two key techniques used to improve the efficiency of systems by enabling parallel execution of tasks. While both approaches allow multiple tasks to run simultaneously, they differ in how they manage processes and threads. Understanding the strengths and limitations of each technique is important for optimizing performance in various applications, from high-performance computing to everyday software.

Multiprocessing

Multiprocessing involves using multiple processors or cores to execute tasks concurrently. Each processor works independently on a separate task, which can significantly speed up execution when tasks are well-suited for parallelism. This approach is ideal for computationally intensive workloads, such as simulations, data analysis, and large-scale processing tasks.

- Advantages: Increased processing power, better for CPU-bound tasks, and capable of handling complex parallel workloads.

- Disadvantages: High resource consumption, overhead in managing multiple processors, and potential for bottlenecks in communication between processors.

Multithreading

Multithreading, on the other hand, involves running multiple threads within a single process. Each thread can perform a different task or work on part of the same task concurrently, and all threads share the memory of their process. On a single core, threads take turns through time-slicing; on a multi-core processor, the operating system can schedule threads on different cores so that they run truly in parallel.

- Advantages: Lower overhead compared to multiprocessing, more efficient use of system resources, and better suited for I/O-bound tasks like web browsing or file handling.

- Disadvantages: Threads within the same process share resources, which can lead to contention and data synchronization issues if not properly managed.

In summary, multiprocessing is generally more effective for CPU-bound tasks that benefit from independent processing, while multithreading is better suited for tasks that involve a lot of waiting or interaction with external resources. Understanding the specific needs of an application is key to choosing the right approach for parallel execution.
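The benefit of threads for tasks that spend most of their time waiting can be demonstrated with Python's standard `concurrent.futures` module. In this sketch, `time.sleep` stands in for an I/O wait; the function names, task count, and wait time are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_io_task(task_id, wait_seconds=0.05):
    # time.sleep stands in for waiting on a disk or network response.
    time.sleep(wait_seconds)
    return task_id * 2


def run_sequentially(task_ids):
    return [simulated_io_task(task_id) for task_id in task_ids]


def run_with_threads(task_ids, max_workers=4):
    # Threads overlap their waiting time; map() returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(simulated_io_task, task_ids))


task_ids = [1, 2, 3, 4]

start = time.perf_counter()
sequential_results = run_sequentially(task_ids)
sequential_elapsed = time.perf_counter() - start

start = time.perf_counter()
threaded_results = run_with_threads(task_ids)
threaded_elapsed = time.perf_counter() - start

print(sequential_results, threaded_results)
print(f"sequential: {sequential_elapsed:.2f} s, threaded: {threaded_elapsed:.2f} s")
```

Both versions produce the same results, but the threaded version should usually finish sooner because the waits overlap. For CPU-bound work in standard CPython, separate processes are generally the better fit, since the global interpreter lock prevents threads from executing Python bytecode in parallel.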

Virtual Memory and Paging Concepts

Virtual memory is a technique that allows systems to compensate for physical memory limitations by using a portion of the storage device as additional, temporary memory space. This concept enables processes to use more memory than is physically available, providing the illusion of a larger memory pool. One of the key methods used in managing virtual memory is paging, which helps divide memory into fixed-size blocks for more efficient storage and retrieval.

Understanding Virtual Memory

Virtual memory allows a program to access a large, contiguous block of addresses, even if the physical memory is fragmented or smaller than the addressable space. This is achieved by mapping virtual addresses to physical addresses, with the system handling the translation between them. Virtual memory enables running multiple applications simultaneously, even when the total memory required exceeds the system’s physical RAM.

Paging in Virtual Memory

Paging is a memory management scheme that breaks down the virtual memory into small, fixed-size blocks called pages, and similarly divides physical memory into blocks called page frames. The system maintains a page table to map virtual pages to physical page frames. When a program accesses data, the system translates the virtual address to a physical address using the page table. If the required page is not currently in physical memory, a page fault occurs, and the system retrieves the page from secondary storage.

Advantages of Paging: It simplifies memory management by removing the need for contiguous physical allocation, which eliminates external fragmentation and improves memory utilization. The costs are some internal fragmentation (the last page of an allocation is rarely full) and the overhead of maintaining the page table and handling page faults.
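Address translation with a page table can be sketched as follows. This is a simplified single-level model: the 4096-byte page size, the page table contents, and the names `translate` and `PageFault` are assumptions made for illustration, and a real system would load the missing page from secondary storage rather than raise an error.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when the requested page is not resident in physical memory."""


def translate(virtual_address, page_table, page_size=PAGE_SIZE):
    # Split the virtual address into a page number and an offset within the page.
    page_number, offset = divmod(virtual_address, page_size)
    frame_number = page_table.get(page_number)
    if frame_number is None:
        # A real system would now fetch the page from secondary storage.
        raise PageFault(f"page {page_number} is not resident")
    # The offset is unchanged; only the page number is replaced by the frame number.
    return frame_number * page_size + offset


page_table = {0: 5, 1: 2}  # virtual page -> physical frame (hypothetical)
physical = translate(4100, page_table)  # page 1, offset 4 -> frame 2
print(physical)

try:
    translate(3 * PAGE_SIZE, page_table)
except PageFault as fault:
    print("page fault:", fault)
```

Virtual address 4100 falls in page 1 at offset 4, and since page 1 maps to frame 2, the physical address is 2 × 4096 + 4 = 8196.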

System Bus and Communication Mechanisms

The system bus serves as the primary pathway for communication between different components within a system, enabling data transfer, control signaling, and address routing. By providing a shared connection, it ensures that various parts of the system, such as the processor, memory, and peripherals, can exchange information efficiently. The effectiveness of communication mechanisms, such as the bus structure and protocols, plays a crucial role in overall system performance and speed.

System Bus Structure

The system bus is typically composed of three main types of lines: the data bus, address bus, and control bus. Each serves a specific function in the communication process:

- Data Bus: Responsible for transferring actual data between components, allowing data exchange during read and write operations.

- Address Bus: Carries the memory addresses to which data is being sent or from which data is being fetched, directing traffic between components.

- Control Bus: Sends signals that manage and coordinate the operations of the system, ensuring that components work in sync with each other.

Communication Mechanisms

Communication mechanisms govern how data is transferred over the bus and how components access the bus. One common method is serial communication, where data is sent bit by bit over a single channel. Serial links need fewer wires and avoid timing skew between lines, which is why they are used both for simple low-speed peripherals and for many modern high-speed interconnects.

Parallel communication uses multiple channels to send several bits simultaneously, which allows faster transfers at a given clock rate but can suffer from signal interference, timing skew between lines, and limited cable length. Another mechanism, bus arbitration, resolves conflicts when multiple components request the bus at the same time, typically under an arbitration protocol.

In summary, the system bus and its communication mechanisms ensure that all parts of the system can exchange information in a structured and organized way, balancing speed, efficiency, and reliability for optimal performance.
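Bus arbitration can be illustrated with a very small model. The sketch below assumes a fixed-priority scheme, which is only one possible arbitration policy; the device names and priority order are hypothetical.

```python
def arbitrate(requests, priority_order):
    """Return the device granted the bus this cycle, or None if nobody qualifies."""
    for device in priority_order:
        if device in requests:
            return device
    return None


def grant_sequence(requests, priority_order):
    """Grant the bus to one requester per cycle until all requests are served."""
    pending = set(requests)
    order = []
    while pending:
        winner = arbitrate(pending, priority_order)
        if winner is None:
            break  # remaining requesters are not known to the arbiter
        order.append(winner)
        pending.discard(winner)
    return order


# Assumed priority order, highest priority first.
priority_order = ["dma_controller", "cpu", "network_card"]
granted = arbitrate({"cpu", "network_card"}, priority_order)
sequence = grant_sequence({"network_card", "cpu", "dma_controller"}, priority_order)
print(granted, sequence)
```

A fixed-priority arbiter is simple, but a low-priority device can be starved if higher-priority devices keep requesting the bus, which is one reason other policies such as round-robin exist.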

Optimization Techniques for Hardware Efficiency

Improving the efficiency of hardware systems is essential for enhancing performance while reducing power consumption, cost, and heat generation. Various techniques are employed at different levels of system design to achieve these goals. These optimizations can involve both architectural changes and improvements in the physical components of the system.

Power Efficiency Techniques

One of the main challenges in hardware design is reducing power consumption while maintaining or improving performance. Several techniques are used to achieve power efficiency, including:

- Dynamic Voltage and Frequency Scaling (DVFS): Adjusts the voltage and frequency according to the workload to save power during idle or low-demand operations.

- Power Gating: Disables unused components or circuits to prevent unnecessary power consumption when certain parts of the system are inactive.

- Clock Gating: Turns off the clock signal to sections of the circuit that are not in use, minimizing power usage while maintaining performance where needed.
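The reason DVFS saves so much power is that the dynamic power of digital logic is commonly approximated as proportional to capacitance × voltage² × frequency. The sketch below uses that approximation with made-up values; the function name and the capacitance, voltage, and frequency figures are assumptions, and static leakage power is ignored.

```python
def dynamic_power_watts(switched_capacitance_farads, voltage_volts, frequency_hz):
    # Common approximation for dynamic power: P = C * V^2 * f.
    return switched_capacitance_farads * voltage_volts ** 2 * frequency_hz


# Hypothetical operating points for the same chip.
full_speed = dynamic_power_watts(1e-9, 1.0, 2e9)  # 1.0 V at 2 GHz
scaled_down = dynamic_power_watts(1e-9, 0.8, 1e9)  # 0.8 V at 1 GHz
ratio = scaled_down / full_speed
print(f"full speed: {full_speed:.2f} W, scaled: {scaled_down:.2f} W, ratio: {ratio:.2f}")
```

Under these assumed values, halving the frequency and lowering the voltage by 20% reduces dynamic power to roughly a third of its original level, because the voltage term is squared.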

Performance Optimization Techniques

To maximize hardware efficiency, improving the overall performance is equally important. The following methods are commonly used:

- Parallelism: Leveraging multiple processors or cores to divide tasks and execute them simultaneously, improving throughput and reducing execution time.

- Pipelining: Divides processing into discrete stages so that multiple instructions can be processed simultaneously, increasing throughput without increasing the clock speed.

- Branch Prediction: Enhances performance by predicting the flow of control and reducing delays caused by branching operations.
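Branch prediction can be illustrated with a two-bit saturating counter, a classic textbook scheme. This is a minimal sketch of that one scheme, not a description of how any specific processor predicts branches; the class name, the starting state, and the branch pattern are assumptions.

```python
class TwoBitPredictor:
    """Two-bit saturating counter: states 0-1 predict not taken, 2-3 predict taken."""

    def __init__(self):
        self.counter = 2  # assumed starting state: weakly taken

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def count_correct(outcomes):
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct


# A loop branch that is taken nine times and then falls through on exit.
loop_outcomes = [True] * 9 + [False]
correct = count_correct(loop_outcomes)
print(f"{correct} of {len(loop_outcomes)} predictions correct")
```

For this loop-like pattern the predictor is wrong only on the final iteration, so 9 of 10 predictions are correct, and a single mispredicted exit does not flip the prediction for the next time the loop runs.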

Trade-off Analysis

Optimization often involves trade-offs between performance, power consumption, and cost. For example, reducing power might lead to slower processing speeds, while increasing speed may raise power consumption. Designers must balance these factors to meet the specific needs of the system.

| Technique | Impact on Performance | Impact on Power Consumption |
|---|---|---|
| Dynamic Voltage and Frequency Scaling (DVFS) | Variable performance based on workload | Reduces power during low demand |
| Power Gating | Minimal performance impact | Significant reduction in power |
| Parallelism | Increases throughput | Higher power consumption with more cores |
| Pipelining | Improves throughput without increasing clock speed | Minimal power increase |

By utilizing these optimization techniques, hardware can be made more efficient, achieving the desired balance between performance, power, and cost while adapting to various operational demands.

Best Practices for Exam Preparation

Effective preparation for assessments requires a combination of strategic planning, efficient study habits, and proper time management. By following proven techniques, learners can maximize their retention, manage stress, and improve their overall performance. This section outlines the best practices that can help individuals approach their studies in a structured and focused manner.

Planning and Time Management

One of the most critical aspects of preparation is managing time effectively. Breaking down large topics into manageable sections and allocating specific time slots for each can help ensure thorough coverage. Creating a study schedule, setting clear goals, and prioritizing areas of difficulty can optimize learning sessions.

Active Study Methods

Passive reading or simple note-taking may not be enough for effective retention. Active study methods, such as summarizing material, teaching concepts to others, and practicing problems, can significantly improve understanding. Using flashcards or creating mind maps can also aid in reinforcing key ideas.

| Study Method | Benefits | Best For |
|---|---|---|
| Summarization | Improves retention by condensing material | Reviewing large sections of content |
| Teaching Others | Strengthens understanding by explaining concepts | Complex topics or unclear areas |
| Practice Problems | Builds familiarity with problem-solving techniques | Subject areas requiring application of theory |
| Mind Maps | Visualizes connections between ideas | Organizing concepts and improving clarity |

Stress Management

Managing stress is equally important in achieving optimal performance. Regular breaks during study sessions, deep breathing exercises, and proper sleep contribute to mental clarity and focus. Staying hydrated and maintaining a healthy diet can also improve cognitive function during preparation.

By incorporating these best practices into a study routine, learners can approach assessments with greater confidence, efficiency, and effectiveness, leading to better results and a less stressful experience.