Understanding key mathematical concepts can be challenging, but with the right approach, you can enhance your ability to solve complex problems efficiently. This section will help you develop a solid foundation in fundamental topics and improve your problem-solving skills.

By focusing on essential principles, you will learn how to tackle common problem types effectively. Whether you are revising specific methods or looking for tips to improve your performance, this guide will provide valuable insights to help you succeed.

Practice and consistent review are crucial for mastering these topics. With the right techniques, you will build the confidence needed to approach any challenge with ease and precision. Focus on understanding the logic behind each method, and the solutions will come naturally.

Essential Concepts in Elementary Statistics

Mastering the foundational principles of data analysis is critical for approaching complex problems. Understanding the core concepts provides a strong base for tackling more advanced topics with confidence. A clear grasp of fundamental ideas enhances problem-solving skills, making it easier to navigate various challenges.

Key Principles of Data Interpretation

Grasping how to interpret numerical data is crucial in solving analytical problems. It involves recognizing patterns, drawing conclusions, and making predictions based on evidence. Essential techniques include measuring central tendencies, identifying variability, and understanding distributions, all of which form the backbone of most analytical approaches.

Understanding Relationships Between Variables

Recognizing how different variables interact is a vital skill. Whether examining correlations or regression models, understanding these relationships helps in making predictions and identifying trends. This knowledge allows for more accurate conclusions and can guide decision-making in various fields, from research to practical applications.

Understanding Probability for Statistics Tests

Grasping the concept of likelihood is fundamental for making informed predictions and drawing conclusions based on data. By understanding how events are likely to occur, you gain the ability to assess risks, forecast trends, and make decisions supported by evidence. This section will help you build a strong foundation in interpreting probabilities and applying them to practical scenarios.

The Basics of Probability

Probability involves calculating the chance that a specific event will happen. By assigning a number from 0 (impossible) to 1 (certain), you can quantify the uncertainty of different outcomes. The closer the probability is to 1, the more likely the event is to occur, while a value closer to 0 indicates that the event is less likely.

Conditional Probability and Independence

In many situations, understanding how events influence each other is crucial. Conditional probability is the likelihood of an event occurring given that another event has already taken place, written P(A | B) = P(A and B) / P(B). This concept plays a significant role in many problem-solving scenarios, especially when analyzing multiple dependent factors. Two events are independent when the occurrence of one does not change the probability of the other, so that P(A | B) = P(A). Knowing whether events are independent or related can drastically change how you interpret outcomes.
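To make conditional probability and independence concrete, here is a minimal Python sketch built on a hypothetical example: rolling two fair dice, where all 36 outcomes are equally likely. It computes P(A | B) = P(A and B) / P(B) by counting outcomes, and checks independence by testing whether P(A | B) equals P(A). The event names are illustrative only.

```python
from fractions import Fraction
from itertools import product

# Sample space: all 36 equally likely outcomes of rolling two fair dice.
outcomes = list(product(range(1, 7), repeat=2))

def prob(event):
    """Classical probability: favorable outcomes / total outcomes."""
    favorable = sum(1 for o in outcomes if event(o))
    return Fraction(favorable, len(outcomes))

def cond_prob(a, b):
    """P(A | B) = P(A and B) / P(B)."""
    return prob(lambda o: a(o) and b(o)) / prob(b)

sum_is_8 = lambda o: o[0] + o[1] == 8
first_is_6 = lambda o: o[0] == 6
first_is_even = lambda o: o[0] % 2 == 0
second_is_even = lambda o: o[1] % 2 == 0

print(prob(sum_is_8))                   # 5/36
print(cond_prob(sum_is_8, first_is_6))  # 1/6, so these events are dependent
# Independence check: knowing the first die is even does not change P(second even).
print(cond_prob(second_is_even, first_is_even) == prob(second_is_even))  # True
```

Exact fractions are used instead of floats so that the independence check compares values exactly rather than up to rounding error.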

Types of Data in Statistics Assessments

Different kinds of information require different methods of analysis. Understanding the variety of data you encounter is essential for selecting the right tools and techniques for interpretation. Identifying the type of data helps determine how to organize, summarize, and make inferences based on the available information.

Categorical Data

Categorical information is used to describe distinct groups or categories. This type of data can be nominal, where there is no inherent order, such as colors or types of fruit. It can also be ordinal, where categories have a specific rank or order, like rating scales or educational levels. Although categorical values are not numerical measurements, they can be counted and summarized with frequencies or proportions, and they are crucial for classification and grouping purposes.

Quantitative Data

Quantitative data involves numbers that can be measured and compared. It can be discrete, like counting the number of students in a class, or continuous, like measuring height or weight. This type of data is ideal for calculations, such as finding averages, variances, or standard deviations, and plays a significant role in making more complex analyses possible.

How to Interpret Descriptive Statistics

Interpreting summary measures is a crucial step in understanding patterns and trends in data. These measures help you to quickly grasp the key characteristics of a dataset, allowing for more informed conclusions. By focusing on central tendencies, variability, and distribution, you can better assess the overall structure of the information you are analyzing.

Key Measures of Central Tendency

The central tendency of a dataset refers to its typical or central value. Common measures include the mean, median, and mode. Each measure offers a different perspective on the data, depending on the nature and distribution of the values.

| Measure | Description | Use |
|---|---|---|
| Mean | The arithmetic average of all values | Best for symmetric data without extreme outliers |
| Median | The middle value when data is sorted | Useful for skewed data or data with outliers |
| Mode | The most frequent value | Helpful for categorical data (the only option for nominal categories) |
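The difference between these measures is easiest to see on a small skewed dataset. The sketch below uses Python's standard `statistics` module on hypothetical exam scores that include one unusually low value; the mean is pulled toward that outlier while the median and mode are not. The second dataset shows that only the mode applies to categorical values.

```python
import statistics

# Hypothetical exam scores; the single low score (12) skews the data to the left.
scores = [12, 68, 72, 75, 75, 80, 84, 90]

print(statistics.mean(scores))    # 69.5  (dragged down by the outlier)
print(statistics.median(scores))  # 75.0  (unaffected by the outlier)
print(statistics.mode(scores))    # 75

# For nominal categorical data, only the mode is meaningful.
colors = ["red", "blue", "blue", "green", "blue"]
print(statistics.mode(colors))    # blue
```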

Understanding Dispersion and Spread

Measures of variability or spread, such as range, variance, and standard deviation, help to understand how much the data deviates from the central value. A dataset with high dispersion shows a wider spread of values, while low dispersion indicates that the values are closely packed around the central tendency.
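As an illustration, the following Python sketch compares two hypothetical datasets that share the same mean but differ in spread. It treats each dataset as a complete population, so it uses `statistics.pvariance` and `statistics.pstdev`, which divide by n.

```python
import statistics

# Two hypothetical datasets with the same mean (50) but very different spread.
tight = [48, 49, 50, 51, 52]
wide = [30, 40, 50, 60, 70]

for name, data in [("tight", tight), ("wide", wide)]:
    data_range = max(data) - min(data)
    # pvariance/pstdev treat the data as the whole population (divide by n).
    var = statistics.pvariance(data)
    sd = statistics.pstdev(data)
    print(f"{name}: mean={statistics.mean(data)}, range={data_range}, "
          f"variance={var}, sd={sd:.3f}")
```

Both datasets have a mean of 50, yet the wide one has a range ten times larger and a much larger standard deviation. If the values were a sample rather than a whole population, `statistics.variance` and `statistics.stdev` (which divide by n - 1) would be the usual choice.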

Common Errors in Statistics Exams

When preparing for analytical assessments, students often encounter pitfalls that can hinder their performance. Being aware of these common mistakes allows you to avoid them and approach problems with more confidence. Recognizing errors in reasoning or calculations can make a significant difference in your exam results.

Typical Mistakes to Watch For

- Misunderstanding the Question – Sometimes, the way a problem is worded can cause confusion. Be sure to read the question carefully and understand what is being asked before beginning your calculations.

- Incorrect Use of Formulas – Using the wrong formula or applying the right one incorrectly is a common mistake. Always double-check that the formula matches the problem requirements.

- Not Identifying Assumptions – Many problems require certain assumptions to be made. Failing to recognize these assumptions can lead to incorrect conclusions.

- Rounding Prematurely – Rounding numbers too early in your calculations can lead to inaccuracies. Keep your numbers as precise as possible until the final answer.

- Ignoring Units – Neglecting to include or convert units correctly can result in wrong answers. Always ensure that units are consistent throughout your work.

How to Avoid These Mistakes

- Take Your Time – Rushed decisions often lead to errors. Allocate enough time to carefully read the problem and perform each step methodically.

- Double-Check Your Work – After completing your calculations, go back over your work to ensure everything aligns with the problem’s requirements.

- Practice Regularly – The more problems you solve, the more familiar you become with common scenarios and how to avoid pitfalls.

Strategies for Solving Hypothesis Tests

When working through hypothesis problems, it’s essential to approach them with a clear, structured method. Following a logical sequence can ensure that your conclusions are based on accurate reasoning. By focusing on key steps, you can make more informed decisions and avoid common errors in interpretation.

Step-by-Step Approach

- State the Hypothesis – Begin by clearly stating both the null and alternative hypotheses. These hypotheses define the expectations of the analysis and guide the testing process.

- Select the Significance Level – Determine the significance level (alpha) for your analysis, which defines the threshold for rejecting the null hypothesis. Common values are 0.05 or 0.01.

- Choose the Appropriate Test – Depending on the data type and research question, choose the correct statistical method to test your hypothesis (e.g., t-test, chi-square test).

- Calculate the Test Statistic – Perform the necessary calculations to find the test statistic, which will help determine whether to reject or fail to reject the null hypothesis.

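- Make a Decision – Compare the test statistic with the critical value, or compare the p-value with the significance level. If the test statistic falls within the rejection region (equivalently, if the p-value is less than or equal to the significance level), reject the null hypothesis; otherwise, fail to reject it.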
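The five steps above can be traced in a short Python sketch of a two-sided one-sample t-test. The data, the hypothesized mean of 50, and the choice of a t-test are hypothetical. Because the standard library has no t-distribution, the critical value t(0.025, 9) ≈ 2.262 is hard-coded from a t-table; it is valid only for α = 0.05 with 9 degrees of freedom.

```python
import math
import statistics

# Step 1: H0: mu = 50 versus H1: mu != 50 (two-sided). Hypothetical data below.
sample = [52.1, 49.8, 53.4, 51.0, 50.7, 54.2, 48.9, 52.8, 51.5, 53.0]
mu0 = 50.0

# Step 2: significance level.
alpha = 0.05

# Step 3: sigma is unknown, so use a one-sample t-test with df = n - 1.
n = len(sample)
df = n - 1

# Step 4: t = (x_bar - mu0) / (s / sqrt(n)), with s the sample standard deviation.
x_bar = statistics.mean(sample)
s = statistics.stdev(sample)
t_stat = (x_bar - mu0) / (s / math.sqrt(n))

# Step 5: compare |t| with the two-sided critical value from a t-table
# (2.262 assumes alpha = 0.05 and df = 9; change it if either differs).
t_crit = 2.262
decision = "reject H0" if abs(t_stat) > t_crit else "fail to reject H0"
print(f"t = {t_stat:.3f}, critical value = ±{t_crit}, decision: {decision}")
```

With these values the statistic is about 3.29, which lies in the rejection region, so the sketch rejects H0. In practice a statistics library would also report the exact p-value.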

Tips for Success

- Understand the Context – Ensure that you understand the context of the problem. Knowing the background and the type of data can help you make the right decisions.

- Check Assumptions – Hypothesis testing methods often come with assumptions. Verify that your data meets these assumptions before proceeding.

- Stay Consistent – Be consistent with your approach to hypothesis testing. Following the same procedure each time ensures reliability in your results.

Essential Formulas for Statistics Questions

Understanding key mathematical relationships is crucial for solving complex problems effectively. Whether you’re analyzing data distributions or calculating probabilities, having the right formulas at hand is necessary for accuracy and efficiency. Mastering these essential equations enables better interpretation and reliable conclusions in various scenarios.

Key Formulas for Data Analysis

- Mean (Average) – The mean is the sum of all data values divided by the number of values in the dataset: Mean = (Σx) / n, where Σx is the sum of all values and n is the total number of values.

- Variance – Variance measures the spread of data points around the mean. For an entire population: σ² = Σ(x – μ)² / n, where μ is the population mean and x represents each data point. For a sample, divide by n – 1 instead: s² = Σ(x – x̄)² / (n – 1), where x̄ is the sample mean.

- Standard Deviation – The standard deviation is the square root of the variance: σ = √σ² (or s = √s² for a sample).

- Probability of an Event – When all outcomes are equally likely, the probability is the ratio of favorable outcomes to total outcomes: P(A) = Number of favorable outcomes / Total outcomes.
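The formulas above translate almost line for line into code. The Python sketch below implements them by hand on a hypothetical dataset. It also adds the sample variance, which divides by n - 1 and is the version usually applied to sample data, so that both versions can be compared.

```python
import math

def mean(xs):
    """Mean = (Σx) / n."""
    return sum(xs) / len(xs)

def population_variance(xs):
    """σ² = Σ(x - μ)² / n."""
    mu = mean(xs)
    return sum((x - mu) ** 2 for x in xs) / len(xs)

def sample_variance(xs):
    """s² = Σ(x - x̄)² / (n - 1)."""
    x_bar = mean(xs)
    return sum((x - x_bar) ** 2 for x in xs) / (len(xs) - 1)

def std_dev(variance):
    """Standard deviation = √variance."""
    return math.sqrt(variance)

def probability(favorable, total):
    """P(A) = favorable outcomes / total outcomes (equally likely outcomes)."""
    return favorable / total

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical values
print(mean(data))                          # 5.0
print(population_variance(data))           # 4.0
print(std_dev(population_variance(data)))  # 2.0
print(sample_variance(data))               # 32 / 7, slightly larger than 4.0
print(probability(1, 6))                   # chance of rolling a 3 on a fair die
```

For real work, Python's `statistics.pvariance` and `statistics.variance` compute the same two quantities with better numerical accuracy.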

Formulas for Hypothesis Testing

- T-Statistic – Used in hypothesis tests about a mean when the population standard deviation is unknown and must be estimated from the sample (the typical situation with small samples): T = (X̄ – μ) / (s / √n), where X̄ is the sample mean, μ is the population mean stated in the null hypothesis, s is the sample standard deviation, and n is the sample size.

- Z-Score – A measure of how many standard deviations a data point lies from the mean: Z = (X – μ) / σ, where X is the data point, μ is the mean, and σ is the population standard deviation.

- Chi-Square Statistic – Used with categorical data to test goodness of fit or independence: χ² = Σ (O – E)² / E, where O is the observed frequency and E is the expected frequency.

Working with Normal Distributions in Tests

Understanding how data behaves in a bell-shaped curve is essential for analyzing many real-world phenomena. The normal distribution is a foundational concept used to describe the spread of values in a dataset, where most observations cluster around the central value, with fewer occurring as you move further away from the mean. Recognizing patterns in this type of distribution allows for more precise decision-making in various situations, including evaluation and prediction.

When dealing with problems that involve normal distributions, key measures such as the mean and standard deviation play a significant role in determining probabilities and making informed conclusions. Additionally, concepts like z-scores help in standardizing data, enabling comparisons across different datasets or distributions.

Applying this knowledge allows for more effective analysis of real-world data, ensuring that inferences made are grounded in well-understood statistical principles.
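The following Python sketch shows how the mean, the standard deviation, and z-scores combine to give probabilities. It uses the standard library's `statistics.NormalDist` and assumes, hypothetically, that test scores follow a normal distribution with mean 100 and standard deviation 15.

```python
from statistics import NormalDist

# Hypothetical example: test scores assumed normal with mean 100 and SD 15.
scores = NormalDist(mu=100, sigma=15)

x = 130
z = (x - scores.mean) / scores.stdev  # z-score: (X - μ) / σ
print(f"z = {z:.2f}")                                                # z = 2.00
print(f"P(X < 130) = {scores.cdf(x):.4f}")                           # 0.9772
print(f"P(85 < X < 115) = {scores.cdf(115) - scores.cdf(85):.4f}")   # 0.6827

# The same probability from the standard normal distribution via the z-score.
print(f"P(Z < 2) = {NormalDist().cdf(z):.4f}")
```

The last line gives the same value as `scores.cdf(130)`, which is exactly why standardizing with z-scores lets one table (or one standard normal curve) serve every normal distribution.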

Key Sampling Methods for Statistical Analysis

Effective sampling is crucial for obtaining accurate insights from large datasets. Choosing the right approach allows for the collection of representative data, minimizing biases and ensuring reliable results. Different methods offer distinct advantages depending on the type of data and the goals of the analysis. The most common strategies include random sampling, stratified sampling, and systematic sampling, each of which plays a unique role in gathering data for research or assessment purposes.

Random Sampling

Random sampling involves selecting individuals or items in such a way that every element in the population has an equal chance of being chosen. This method is widely used due to its simplicity and the unbiased nature of the selection process.

Stratified Sampling

Stratified sampling divides the population into distinct subgroups or strata and then draws a random sample from each one, so that every subgroup is represented (usually in proportion to its size). This method can improve precision and provides a more detailed understanding of the overall population.

Systematic Sampling

Systematic sampling selects every kth item from a list or sequence, starting at a randomly chosen position among the first k items, which can be more efficient when dealing with large populations. It is often used when data is organized in a specific order, and it spreads the sample across the entire population.

| Sampling Method | Advantage | Disadvantage |
|---|---|---|
| Random Sampling | Simple and unbiased | May under-represent small subgroups by chance |
| Stratified Sampling | Ensures representation of subgroups | More complex and time-consuming |
| Systematic Sampling | Efficient and easy to implement | Can be biased if the list has a periodic pattern that matches the sampling interval |

By understanding these methods, researchers can select the best approach for gathering data, ultimately ensuring that their findings are robust, accurate, and meaningful.
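The three methods can be sketched with Python's `random` module. The population of 100 students split 60/40 into two year groups is hypothetical, as are the helper function names; the seed is fixed only so that the example is reproducible.

```python
import random

# Hypothetical population of 100 students, each tagged with a year group (the strata).
population = [{"id": i, "year": "first" if i < 60 else "second"} for i in range(100)]
rng = random.Random(42)  # fixed seed so the sketch is reproducible

def simple_random_sample(pop, k):
    """Every element has an equal chance of selection (without replacement)."""
    return rng.sample(pop, k)

def stratified_sample(pop, k, key):
    """Randomly sample each stratum in proportion to its share of the population."""
    strata = {}
    for item in pop:
        strata.setdefault(item[key], []).append(item)
    sample = []
    for members in strata.values():
        n_stratum = round(k * len(members) / len(pop))  # proportional allocation
        sample.extend(rng.sample(members, n_stratum))
    return sample

def systematic_sample(pop, k):
    """Take every step-th element after a random start within the first interval."""
    step = len(pop) // k
    start = rng.randrange(step)
    return pop[start::step][:k]

srs = simple_random_sample(population, 10)
strat = stratified_sample(population, 10, key="year")
syst = systematic_sample(population, 10)
print(sum(p["year"] == "first" for p in strat), "first-year students in the stratified sample")  # 6
```

The stratified sample always contains 6 first-year and 4 second-year students, mirroring the 60/40 split, whereas the simple random sample can deviate from it by chance. With less convenient numbers, rounding in the proportional allocation can make the total differ slightly from k, so a real implementation would need a rule for distributing the remainder.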

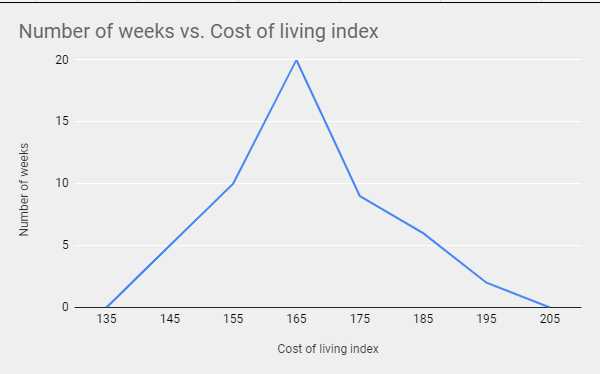

Data Visualization Techniques in Statistics

Effective data representation is key to communicating insights from complex datasets. By transforming raw data into clear visual formats, one can highlight patterns, trends, and relationships that are often hidden in numerical tables. Various visualization techniques provide distinct advantages, whether it’s displaying distributions, comparisons, or changes over time. Choosing the right method is essential for conveying information in a meaningful and accessible way.

Common Visualization Tools

There are several common ways to visually represent data, with each technique serving a specific purpose. Among the most popular options are bar charts, histograms, line graphs, and scatter plots. Each of these methods highlights different aspects of the data, making it easier to draw conclusions.

| Visualization Type | Purpose | Best Use |
|---|---|---|
| Bar Chart | Compare quantities across categories | Comparing data in distinct groups or categories |
| Histogram | Show distribution of continuous data | Analyzing frequency distributions and patterns |
| Line Graph | Show trends over time | Tracking changes or trends across a time series |
| Scatter Plot | Identify relationships between variables | Examining correlation and trends between two continuous variables |

Choosing the Right Tool

When deciding which method to use, consider the type of data, the message you want to communicate, and the audience. For instance, bar charts are excellent for comparing discrete categories, while scatter plots are ideal for exploring potential correlations. Ultimately, a well-chosen visualization can significantly enhance the clarity and impact of data-driven insights.

Tips for Managing Time During Exams

Effective time management during assessments is crucial for success. Without a proper plan, it’s easy to become overwhelmed by the number of tasks and questions to answer. Implementing a structured approach helps ensure that every section is addressed and that you complete the exam within the allotted time. Proper organization and prioritization can make a significant difference in your performance and reduce stress levels.

Start by reviewing the entire exam to get an overview of the sections and their difficulty. Allocate time based on the complexity of the tasks and the number of points each section offers. This ensures that you spend enough time on the more demanding parts without rushing through simpler questions.

Another important strategy is to avoid getting stuck on a single question. If you find yourself spending too much time on one, move on to the next and come back to it later. This helps maintain momentum and prevents unnecessary stress.

Lastly, keep track of the time throughout the exam. Set mini-deadlines for each section to ensure you’re staying on track. This will help you pace yourself and avoid last-minute panic.

Understanding Confidence Intervals in Statistics

A confidence interval provides an estimated range of values which is likely to contain an unknown parameter. It offers insight into the reliability and precision of a measurement, allowing one to make inferences about a population based on sample data. By constructing a confidence interval, researchers express a degree of certainty about the accuracy of their estimates. This concept is fundamental when interpreting results and understanding the potential variability within data.

Key Components of Confidence Intervals

The essential elements of a confidence interval include the sample estimate, the margin of error, and the confidence level. These components work together to provide a range that reflects both the estimate’s precision and the level of certainty associated with it.

- Sample Estimate: The observed value or statistic obtained from the sample data.

- Margin of Error: The amount added or subtracted from the sample estimate to form the interval, accounting for variability.

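- Confidence Level: The long-run proportion of intervals, constructed by the same method from repeated samples, that would contain the true population parameter. For example, 95% confidence means that about 95% of such intervals would capture the true value; any single computed interval either contains it or does not.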

Calculating Confidence Intervals

To calculate a confidence interval, the sample statistic is combined with the margin of error, which is derived from the standard error of the statistic. Depending on the distribution and sample size, different formulas or methods are used. For a mean, the t-distribution is used when the population standard deviation is unknown and must be estimated from the sample, which matters most in small samples; with large samples, the normal distribution gives nearly the same result.

- For a mean: The confidence interval is typically calculated as Sample Mean ± (Critical Value × Standard Error), where the standard error is s / √n.

- For a proportion: The interval uses the same approach with the proportion's standard error, √(p̂(1 – p̂) / n), where p̂ is the sample proportion.
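Both cases can be computed with a few lines of Python. In this sketch the measurements and survey counts are hypothetical. The critical value 2.262 is taken from a t-table for 95% confidence with 9 degrees of freedom, and 1.96 is the usual normal critical value for 95%. The proportion interval is the simple normal-approximation (Wald) interval, which is reasonable only for fairly large samples.

```python
import math
import statistics

def mean_ci(sample, critical_value):
    """Sample mean ± critical value × standard error, where SE = s / √n."""
    n = len(sample)
    x_bar = statistics.mean(sample)
    se = statistics.stdev(sample) / math.sqrt(n)
    margin = critical_value * se
    return x_bar - margin, x_bar + margin

def proportion_ci(successes, n, z=1.96):
    """p̂ ± z × √(p̂(1 - p̂) / n); a normal approximation for large n."""
    p_hat = successes / n
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - margin, p_hat + margin

# Hypothetical data: 10 measurements, so df = 9 and t(0.025, 9) ≈ 2.262 from a t-table.
sample = [52.1, 49.8, 53.4, 51.0, 50.7, 54.2, 48.9, 52.8, 51.5, 53.0]
low, high = mean_ci(sample, critical_value=2.262)
print(f"95% CI for the mean: ({low:.2f}, {high:.2f})")

# Hypothetical survey: 120 of 400 respondents answered "yes".
low_p, high_p = proportion_ci(120, 400)
print(f"95% CI for the proportion: ({low_p:.3f}, {high_p:.3f})")
```

The critical value is passed in explicitly so that the same function works for other confidence levels or sample sizes once the matching value is looked up.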

Understanding how to construct and interpret confidence intervals is essential for making informed decisions and assessing the reliability of data-driven conclusions.

How to Approach Regression Analysis Questions

When facing problems that involve understanding relationships between variables, it’s crucial to approach them systematically. Regression analysis helps to quantify these relationships and predict outcomes based on known data. The process involves identifying key variables, understanding the assumptions of the model, and interpreting the results in a meaningful way. A clear and structured approach ensures that insights derived from the analysis are accurate and reliable.

Key Steps to Solve Regression Problems

To tackle regression analysis, there are several essential steps to follow. These steps guide you through setting up the model, interpreting the results, and ensuring that your conclusions are valid.

- Understand the Variables: Identify the dependent (response) variable and independent (predictor) variables involved in the analysis.

- Check Assumptions: Make sure that assumptions such as linearity, independence, homoscedasticity, and normality are met before proceeding with the analysis.

- Fit the Model: Use the appropriate regression technique to fit the model to the data, ensuring the relationship between variables is represented correctly.

- Evaluate the Model: Look at metrics like R-squared, p-values, and residuals to assess the model’s fit and significance.

Interpreting Regression Results

Once the model is fit, it’s important to interpret the coefficients, the significance levels, and other diagnostic information to make informed decisions.

- Coefficients: Each coefficient estimates how much the dependent variable changes for a one-unit increase in that independent variable, holding the other predictors constant. Positive coefficients indicate a direct relationship, while negative coefficients indicate an inverse relationship. These describe associations and do not by themselves prove cause and effect.

- R-squared: This metric gives the proportion of the variability in the dependent variable that the model explains. A higher R-squared means more of the variability is explained, although a high value alone does not guarantee that the model's assumptions hold.

- p-values: These values test the null hypothesis that a predictor's coefficient is zero. A small p-value (typically less than 0.05) indicates that the predictor is statistically significant.
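For simple linear regression with one predictor, the coefficients and R-squared can be computed directly from the least-squares formulas. The Python sketch below uses hypothetical data on hours studied and exam scores. It does not compute p-values, since the standard library has no t-distribution; a statistics package would normally report those.

```python
def simple_linear_regression(x, y):
    """Least-squares fit of y = b0 + b1*x; returns (b0, b1, r_squared)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sxy / sxx           # slope: estimated change in y per one-unit increase in x
    b0 = y_bar - b1 * x_bar  # intercept: predicted y when x = 0
    predictions = [b0 + b1 * xi for xi in x]
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, predictions))  # residual sum of squares
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)                      # total sum of squares
    r_squared = 1 - ss_res / ss_tot
    return b0, b1, r_squared

# Hypothetical data: hours studied (x) and exam score (y).
hours = [1, 2, 3, 4, 5, 6]
score = [52, 55, 61, 64, 70, 72]
b0, b1, r2 = simple_linear_regression(hours, score)
print(f"score ≈ {b0:.2f} + {b1:.2f} × hours, R² = {r2:.3f}")
```

Here the slope of about 4.23 would be read as roughly 4.2 extra points per additional hour studied, and an R² near 0.99 means the line explains almost all of the variation in these six scores. A real analysis would still check the residuals against the assumptions listed earlier.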

By following these steps, you can systematically approach regression problems, ensuring that the results are both meaningful and actionable.

Commonly Tested Statistical Theorems

In the realm of data analysis, certain theorems provide the foundational principles that guide inference and decision-making. These theorems are essential tools for understanding the relationships within data sets, assessing probabilities, and making predictions. Being familiar with these key concepts is vital for solving a wide range of problems in this field. Below, we explore some of the most commonly encountered theorems that are critical for analysis.

Key Theorems in Data Analysis

Understanding these theorems can simplify complex problems and provide a clearer perspective on the relationships within the data. Below are some of the fundamental principles that you may come across in analysis-related tasks.

- Law of Large Numbers: This theorem states that as the sample size increases, the sample mean approaches the population mean, providing a basis for estimation and accuracy in predictions.

- Central Limit Theorem: It asserts that the distribution of sample means approaches a normal distribution as the sample size increases, regardless of the shape of the original population's distribution, provided the population has a finite variance.

- Bayes’ Theorem: A principle used to calculate the probability of an event based on prior knowledge and evidence, allowing for more informed decision-making in uncertain situations.
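The first two theorems can be observed in a quick simulation. This Python sketch, using a fixed seed chosen arbitrarily for reproducibility, flips a simulated fair coin to show the Law of Large Numbers, and then averages samples of size 30 from a skewed exponential population (mean 1, standard deviation 1) to show the Central Limit Theorem. The sample sizes are illustrative choices, not requirements of either theorem.

```python
import math
import random
import statistics

rng = random.Random(0)  # fixed seed so the run is reproducible

# Law of Large Numbers: the proportion of heads in fair coin flips
# settles toward the true probability 0.5 as the number of flips grows.
proportions = {}
for n in (10, 1_000, 100_000):
    heads = sum(rng.random() < 0.5 for _ in range(n))
    proportions[n] = heads / n
    print(f"n = {n:>7}: proportion of heads = {proportions[n]:.4f}")

# Central Limit Theorem: means of samples of size 30 drawn from a skewed
# exponential population (mean 1, SD 1) are approximately normal, centered
# near 1 with standard deviation near 1 / sqrt(30).
sample_size = 30
sample_means = [
    statistics.mean(rng.expovariate(1.0) for _ in range(sample_size))
    for _ in range(5_000)
]
print(f"mean of sample means = {statistics.mean(sample_means):.3f}")
print(f"SD of sample means   = {statistics.stdev(sample_means):.3f} "
      f"(theory: {1 / math.sqrt(sample_size):.3f})")
```

The exact printed proportions depend on the seed, but the proportion at 100,000 flips should sit very close to 0.5, and the spread of the sample means should match σ/√n closely even though the underlying population is far from normal.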

Applications of These Theorems

The application of these theorems extends across various methods in data analysis, influencing how predictions are made and how uncertainty is managed.

- Predictive Modelling: The Central Limit Theorem justifies using normal-based confidence intervals for estimates computed from large samples, which supports the reliability of models built on those estimates.

- Hypothesis Testing: The Law of Large Numbers ensures that estimates from large samples tend to lie close to the true population parameters, while the Central Limit Theorem supplies the approximate sampling distributions that many tests rely on.

- Risk Assessment: Bayes’ Theorem is widely used in decision-making models, helping to update probabilities based on new data, particularly in areas like finance and healthcare.
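A classic healthcare illustration of Bayes' Theorem is a screening test. The Python sketch below assumes hypothetical numbers: 1% of people have the condition, the test detects 95% of true cases (sensitivity), and 5% of healthy people test positive anyway. It then applies the theorem a second time, using the first posterior as the new prior, to show how probabilities are updated as new evidence arrives.

```python
def bayes_posterior(prior, sensitivity, false_positive_rate):
    """P(condition | positive test) via Bayes' theorem.

    P(D | +) = P(+ | D) P(D) / [P(+ | D) P(D) + P(+ | not D) P(not D)]
    """
    p_positive = sensitivity * prior + false_positive_rate * (1 - prior)
    return sensitivity * prior / p_positive

# Hypothetical screening test: 1% prevalence, 95% sensitivity, 5% false-positive rate.
posterior = bayes_posterior(prior=0.01, sensitivity=0.95, false_positive_rate=0.05)
print(f"P(condition | positive) = {posterior:.3f}")  # 0.161

# A second, independent positive result: yesterday's posterior becomes today's prior.
second = bayes_posterior(prior=posterior, sensitivity=0.95, false_positive_rate=0.05)
print(f"P(condition | two positives) = {second:.3f}")  # 0.785
```

Despite the accurate-sounding test, a single positive result raises the probability only to about 16%, because the condition is rare. The second update assumes the two test results are conditionally independent, which real repeated tests may not satisfy.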

Mastering these theorems is essential for interpreting and analyzing data effectively, ensuring that conclusions are based on sound mathematical foundations.

Analyzing Chi-Square Test Results

Understanding the outcome of a chi-square analysis involves interpreting how observed data compares to expected values, helping to determine whether there is a significant relationship between variables. The test provides a way to assess the goodness of fit or test for independence between categorical variables. Properly analyzing these results ensures that conclusions drawn from the data are valid and meaningful.

Once the chi-square statistic is calculated, the next step is to compare it to a critical value from the chi-square distribution, taking into account the degrees of freedom and the chosen significance level. If the calculated statistic exceeds the critical value, the null hypothesis is rejected, indicating that there is a significant difference or association. If it is less than the critical value, the null hypothesis is not rejected.

Steps to Analyze Chi-Square Results

- Step 1: Calculate the chi-square statistic based on the observed and expected frequencies.

- Step 2: Determine the degrees of freedom, which typically equals the number of categories minus one for a goodness of fit test or (rows – 1) * (columns – 1) for a test of independence.

- Step 3: Find the critical value for the chi-square distribution using the degrees of freedom and the desired significance level.

- Step 4: Compare the calculated chi-square statistic to the critical value. If the statistic is larger than the critical value, reject the null hypothesis.
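The four steps can be followed in a short Python sketch of a goodness-of-fit test. The die-roll counts are hypothetical, and the critical value 11.070 for 5 degrees of freedom at α = 0.05 is hard-coded from a chi-square table because the standard library has no chi-square distribution.

```python
# Hypothetical data: a die rolled 60 times; H0: the die is fair.
observed = [8, 12, 9, 11, 6, 14]
expected = [sum(observed) / len(observed)] * len(observed)  # 10 per face under H0

# Step 1: χ² = Σ (O - E)² / E
chi_sq = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Step 2: goodness of fit, so df = number of categories - 1
df = len(observed) - 1

# Step 3: critical value for df = 5 at α = 0.05, taken from a chi-square table.
critical = 11.070

# Step 4: compare the statistic with the critical value.
decision = "reject H0" if chi_sq > critical else "fail to reject H0"
print(f"χ² = {chi_sq:.2f}, df = {df}, critical = {critical}, decision: {decision}")
# χ² = 4.20, df = 5, critical = 11.07, decision: fail to reject H0
```

A statistic of 4.2 is well below the critical value, so these counts give no real evidence that the die is unfair. They do not prove that it is fair, either.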

Interpreting Results

Interpreting the results is straightforward once the hypothesis is tested. A rejection of the null hypothesis suggests a significant difference or association between the variables. Failing to reject it means the data do not provide enough evidence of a difference or relationship; it does not prove that none exists.

- Significant Result: If the p-value is smaller than the chosen significance level (e.g., 0.05), the evidence suggests that the observed distribution is different from the expected one.

- Non-Significant Result: If the p-value is greater than the significance level, the observed data are consistent with the expected distribution, and there is not enough evidence to conclude that a difference exists.

By following these steps and correctly interpreting the outcome, one can make informed decisions based on the chi-square analysis, ensuring that the results are valid and useful for further investigation or decision-making.

Effective Study Habits for Statistics Tests

Developing strong study habits is essential for mastering the material and performing well during assessments. Efficient learning strategies, time management, and active engagement with the material can significantly enhance your ability to understand complex concepts and solve problems. By incorporating these methods into your preparation, you can approach assessments with confidence and clarity.

One of the most effective approaches is breaking down the material into smaller, more manageable sections. This allows for deeper focus on individual topics, making it easier to retain information. Additionally, regularly reviewing and practicing problems is crucial for reinforcing understanding and identifying areas that need further attention.

Key Strategies for Successful Studying

- Consistency: Set a study schedule and stick to it. Consistent, short sessions are more effective than cramming all the information in one go.

- Active Learning: Instead of passively reading, engage with the material by solving practice problems, summarizing key points, and explaining concepts out loud.

- Group Study: Collaborating with peers allows for a variety of perspectives, making it easier to clarify doubts and gain a deeper understanding.

- Utilize Resources: Use textbooks, online resources, or video tutorials to reinforce difficult topics. Resources such as flashcards or mind maps can help solidify key concepts.

Time Management Techniques

- Prioritize: Focus on the most challenging topics first and allocate more time to areas where you feel less confident.

- Breaks: Incorporate regular breaks to avoid burnout. The Pomodoro technique, for example, can help you stay focused while giving your brain time to rest.

- Practice Under Test Conditions: Simulate real testing conditions by timing yourself while solving problems. This helps improve time management skills and reduces anxiety during the actual assessment.

By adopting these habits, you can improve your comprehension and problem-solving skills, increasing your chances of performing well during evaluations. Consistency, active engagement, and proper time management are key to mastering the material and achieving success.

Preparing for Multiple-Choice Questions

Mastering multiple-choice assessments requires more than just memorization. A strategic approach to preparation is key to identifying correct answers efficiently while avoiding common pitfalls. By practicing problem-solving techniques, reviewing key concepts, and understanding the structure of the questions, you can significantly improve your performance. Effective preparation involves both active learning and smart test-taking strategies.

Effective Strategies for Preparation

- Understand Key Concepts: Focus on grasping the underlying principles behind each topic. This ensures you can tackle questions even if they are worded differently from what you’ve seen before.

- Practice with Sample Questions: Regular practice with sample problems can help familiarize you with the question format and refine your ability to pick out the most relevant information.

- Analyze Mistakes: When practicing, review incorrect answers carefully. Understanding why a choice was wrong is just as valuable as knowing why a choice is correct.

Test-Taking Techniques

When sitting for the exam, having a strategy to manage your time and answer questions efficiently is essential. One way to maximize your chances of success is to first skim through all the options for each question before selecting an answer.

| Strategy | Description |
|---|---|
| Eliminate Wrong Answers | Start by eliminating obviously incorrect answers. This increases your chances of selecting the right one, even if you are unsure. |
| Compare Close Options | If two answers seem correct, compare them closely. Look for subtle differences to identify which is more likely to be correct. |
| Guess Strategically | If you must guess, choose the answer that seems most plausible based on your understanding of the subject. Avoid random guessing if possible. |

By combining thorough preparation with smart test-taking strategies, you can increase your chances of success in multiple-choice assessments. A clear understanding of the material, coupled with practiced techniques, will help you navigate the exam with confidence.

How to Review and Improve Your Responses

After completing an assessment, the review process is crucial for enhancing the quality of your responses. Carefully examining each answer allows you to identify potential errors, refine your reasoning, and ensure the best possible outcome. By reviewing methodically and making necessary adjustments, you can increase the accuracy of your responses and gain deeper insight into the material.

Steps to Improve Your Responses

- Check for Accuracy: Revisit each response to verify calculations or logic. Small mistakes, like misreading a question or overlooking a detail, can lead to incorrect answers.

- Ensure Clarity: For open-ended questions, confirm that your explanations are clear, concise, and easy to follow. Avoid unnecessary jargon and ensure you address the main point.

- Double-Check for Completeness: Make sure you have answered every part of the question. Often, multiple components need to be addressed in one response.

Common Mistakes to Watch Out For

- Overlooking Details: It’s easy to overlook small instructions, such as units of measurement or specific constraints in a problem. These details can drastically change the answer.

- Skipping Review: Avoid rushing through the review process. Even if you feel confident, taking extra time to go over your responses can catch hidden mistakes.

- Failing to Use the Process of Elimination: If unsure about an answer, apply a process of elimination to rule out obviously incorrect options and improve your chances of selecting the right one.

By implementing a thorough review strategy, you can refine your work and address mistakes that might otherwise go unnoticed. This approach not only improves your current performance but also helps you understand the reasoning behind your answers more clearly.