As you approach your upcoming assessment, it’s essential to focus on the core principles and techniques that will help you succeed. This section covers key topics and problem-solving strategies, ensuring you’re well-equipped for any challenges that might arise during the test. By understanding the concepts deeply, you’ll be able to approach even the most complex questions with confidence.

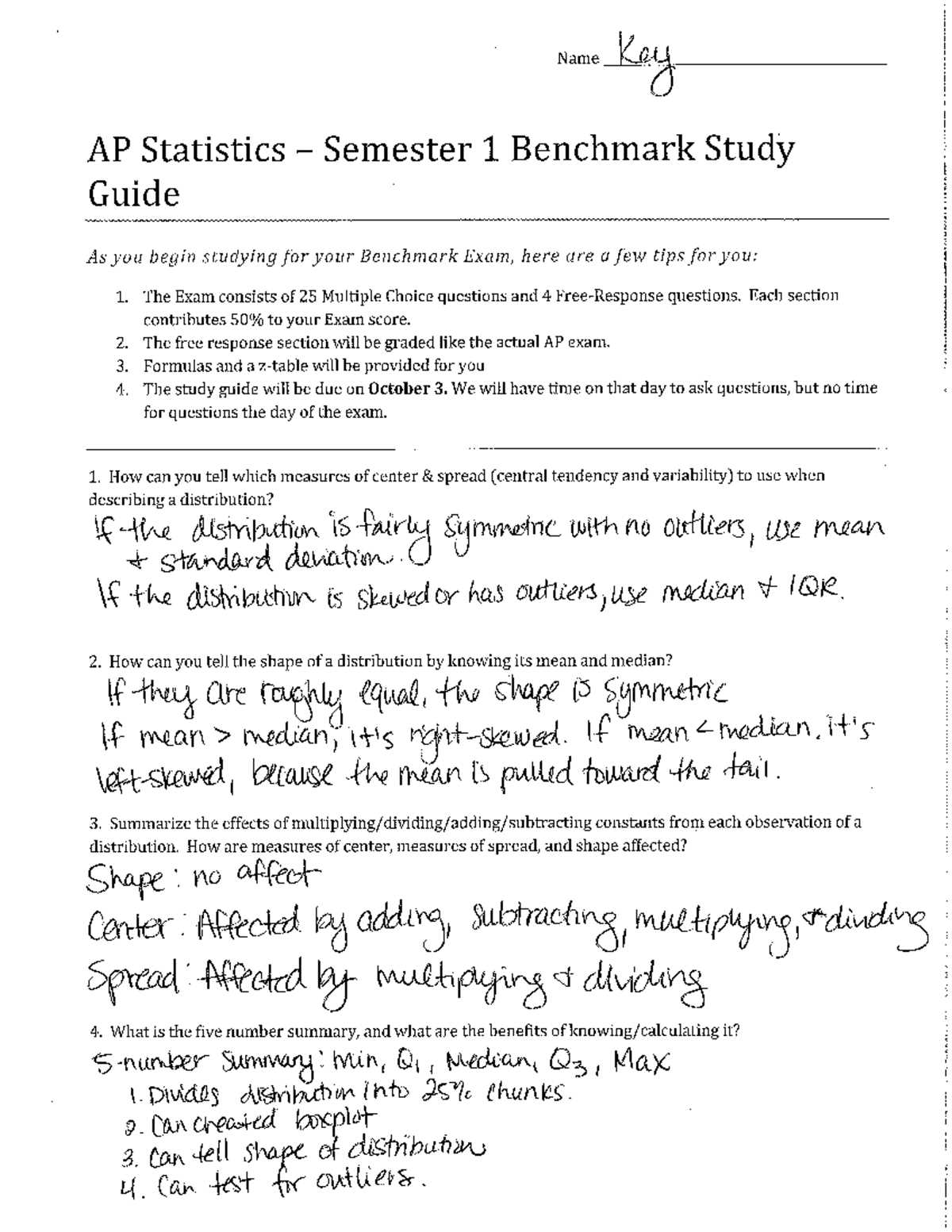

Mastering data interpretation is a critical skill, and you’ll encounter a variety of problems requiring sharp analysis. From understanding how to draw conclusions from data to calculating probabilities, each step will build on your foundational knowledge. Take the time to review these concepts, practice your skills, and refine your techniques. The more you engage with these principles, the more prepared you will feel.

Finally, don’t forget to practice actively. Solving sample problems and testing yourself on different types of tasks will solidify your understanding and help you identify areas that need improvement. The key to success is a combination of preparation, practice, and the ability to think critically under pressure.

AP Statistics First Semester Final Exam Review Answers

In this section, we’ll focus on the key concepts and problem-solving techniques that are essential for mastering the material covered in the assessment. The goal is to ensure that you understand the core ideas thoroughly and can apply them with confidence. We will walk through various topics, offering insights into how to approach questions and solve them effectively.

By practicing different problem types and gaining a deeper understanding of core methods, you’ll be prepared to tackle any challenges that may appear in the test. Make sure to focus on interpreting data, calculating probabilities, and analyzing trends, as these are frequently tested. Recognizing patterns in problems and knowing which tools to apply is crucial for achieving success.

Engage with sample questions to enhance your problem-solving speed and accuracy. The more you familiarize yourself with the techniques and strategies discussed here, the more equipped you’ll be to handle the variety of problems that you will face. Aim for both comprehension and practice to ensure you are ready for every aspect of the assessment.

Key Concepts to Master for AP Statistics

To succeed in the upcoming assessment, it’s crucial to have a solid grasp of the core principles that will be tested. Mastering these fundamental concepts will not only help you understand the material better but also enable you to solve complex problems with confidence. Below are some essential topics that you should focus on:

- Data Analysis Techniques: Be comfortable with summarizing data, identifying trends, and using different visualization tools to interpret information.

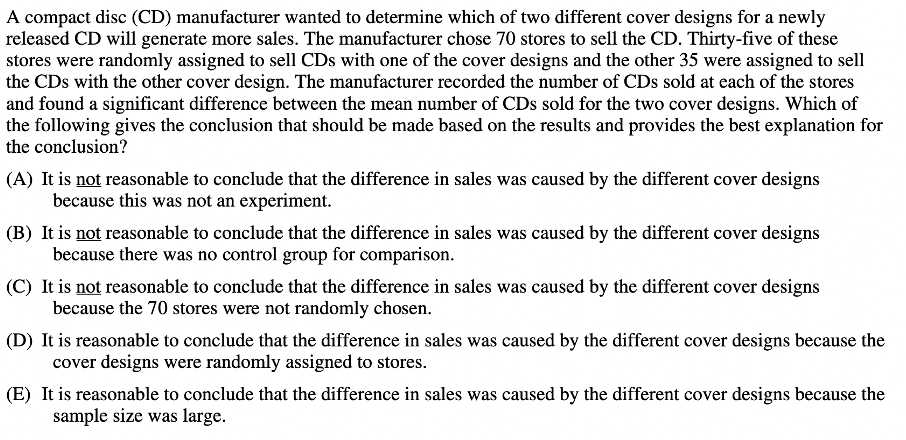

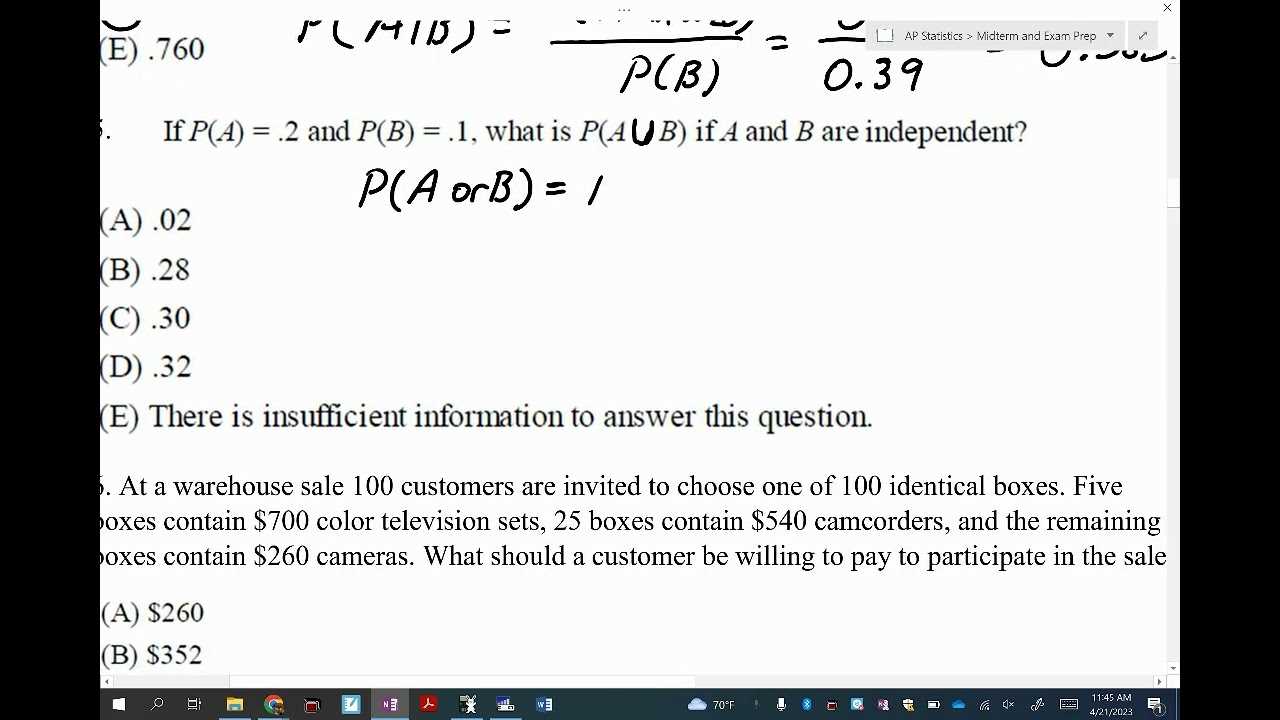

- Probability Models: Understanding the basics of probability, including conditional probability, random variables, and distributions, is key to answering many questions.

- Sampling Methods: Recognize the importance of sampling techniques, from simple random sampling to more advanced methods like stratified and cluster sampling.

- Hypothesis Testing: Be able to set up and analyze hypothesis tests, including understanding significance levels and p-values.

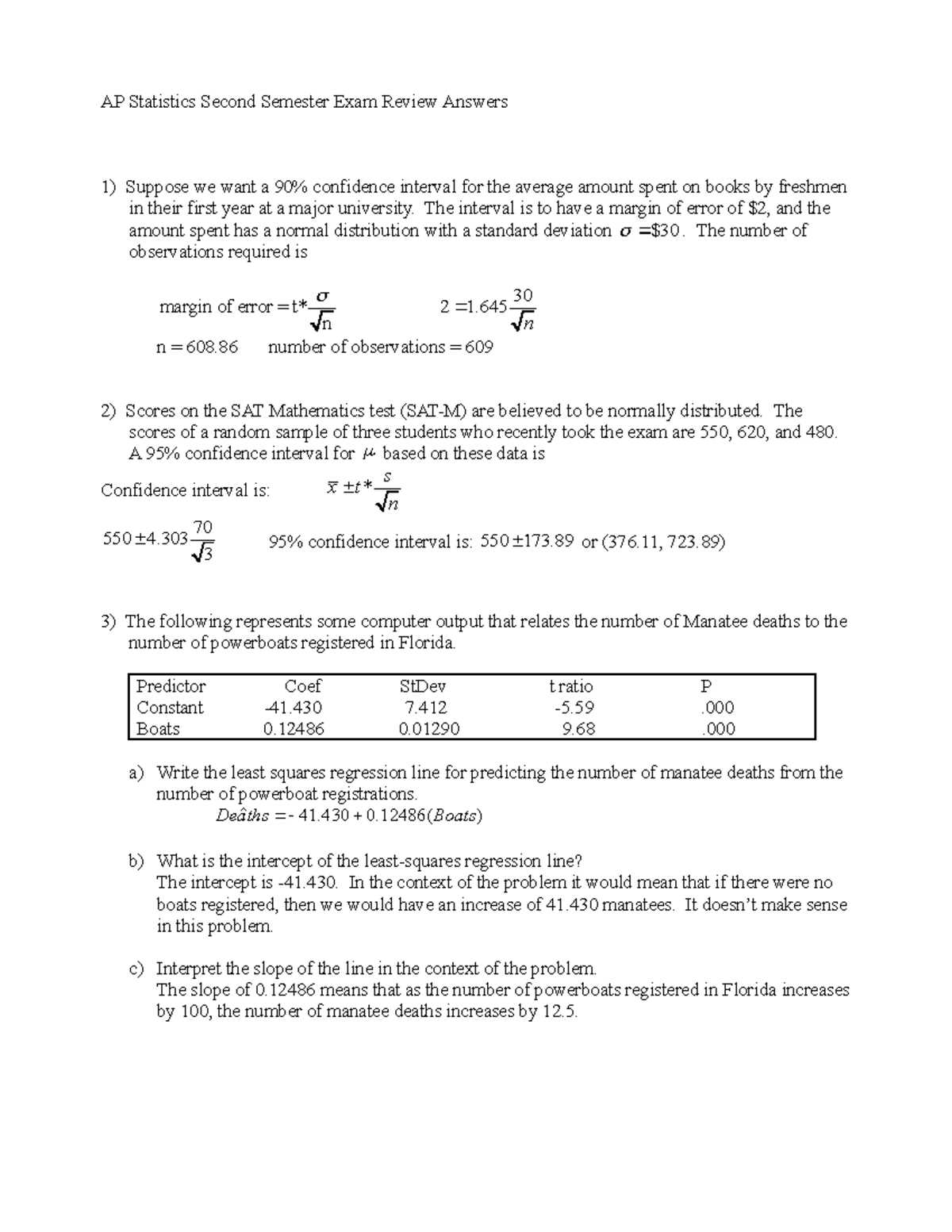

- Confidence Intervals: Know how to calculate and interpret confidence intervals for different populations and understand their real-world applications.

- Regression and Correlation: Be familiar with linear regression, correlation coefficients, and how to interpret these relationships in data.

Each of these topics represents a foundational area of knowledge. To master them, focus on practicing problems, reviewing formulas, and familiarizing yourself with common question formats. Consistent practice will ensure you are well-prepared for the challenges ahead.

Understanding Data Interpretation in AP Statistics

Being able to interpret and analyze data effectively is one of the most important skills you’ll need. This ability allows you to extract meaningful insights from numbers and visualize relationships between variables. In this section, we will focus on how to approach various types of data and how to draw accurate conclusions from them.

Key Data Interpretation Techniques

- Identifying Trends: Look for patterns or trends in the data, whether they indicate growth, decline, or stability over time.

- Comparing Data Sets: Understand how to compare different groups or sets of data, paying attention to their similarities and differences.

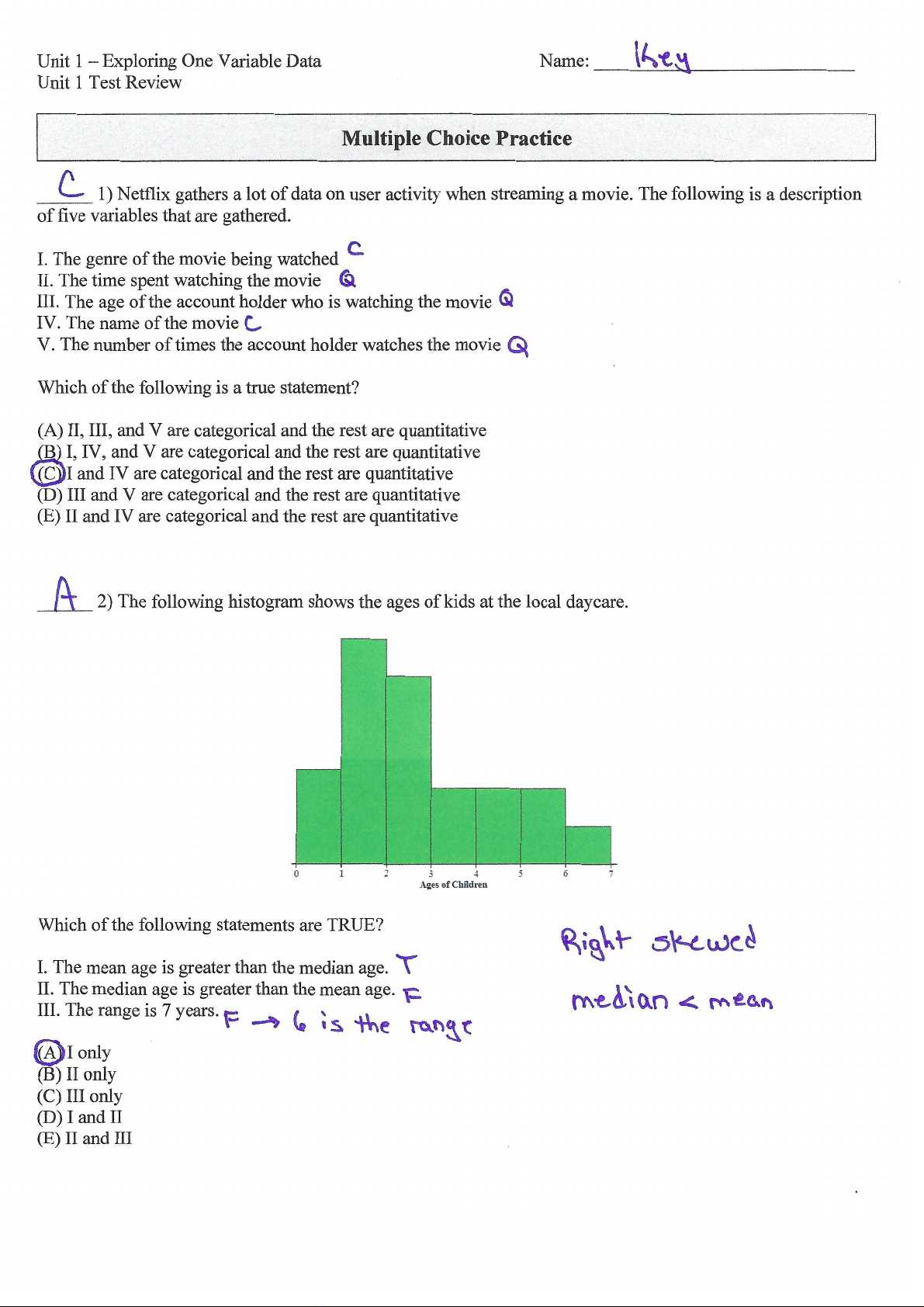

- Analyzing Graphs: Be able to interpret various types of visualizations, including histograms, boxplots, scatterplots, and bar charts, to understand the distribution and relationships in the data.

- Descriptive Measures: Use measures like mean, median, mode, range, and standard deviation to summarize and compare data sets.

Drawing Conclusions from Data

- Understanding Outliers: Recognize and understand the impact of outliers on the overall data set. Determine whether they are anomalies or significant data points.

- Making Inferences: Be able to make predictions or generalizations based on the data, using techniques like confidence intervals or regression models.

- Contextualizing Data: Always consider the context in which the data was collected and how that might affect your interpretation of the results.

Mastering these techniques will enhance your ability to make sense of data and provide accurate insights, which is crucial for solving complex problems in any setting.

Common AP Statistics Exam Question Types

In any assessment focused on data analysis, understanding the common question formats is essential for effective preparation. The questions are designed to test your ability to apply various concepts and techniques to real-world problems. Being familiar with the different types of questions will allow you to approach the test with more confidence and efficiency.

Question Types to Expect

- Multiple Choice Questions: These questions assess your knowledge of core concepts and require you to choose the correct answer from a set of options. They often focus on definitions, calculations, and interpreting data visualizations.

- Problem Solving Questions: These questions present a scenario and ask you to solve a problem using the appropriate techniques. They may involve calculating probabilities, constructing confidence intervals, or performing hypothesis tests.

- Interpretation of Graphs and Tables: You’ll be asked to analyze data presented in visual form, such as histograms, boxplots, or tables. These questions test your ability to extract relevant information and draw conclusions based on the data.

Tips for Answering Different Question Types

- Multiple Choice: Eliminate clearly incorrect options to increase your chances of choosing the right answer. Double-check calculations if time permits.

- Problem Solving: Break down the problem into smaller steps and carefully choose the correct formulas or methods. Keep track of units and ensure all calculations are clear.

- Data Interpretation: Focus on the context of the data, not just the numbers. Look for key details like the shape of distributions, outliers, or patterns in trends.

Familiarizing yourself with these question types and practicing regularly will help you become more adept at handling any challenge presented in the assessment.

How to Solve Probability Problems Effectively

Solving probability problems requires a clear understanding of the underlying principles and a systematic approach to applying them. The ability to break down complex scenarios into manageable parts is key to finding solutions quickly and accurately. In this section, we will discuss strategies for approaching probability problems and how to use different methods to determine outcomes.

Start by carefully reading the problem to identify the key elements, such as the events and their possible outcomes. Determine whether the problem involves independent or dependent events, as this will dictate the method you use. For independent events, the multiplication rule P(A and B) = P(A) · P(B) applies directly. For dependent events, use the general multiplication rule P(A and B) = P(A) · P(B | A), which accounts for how the first event changes the probability of the second.

Next, consider the use of tree diagrams, Venn diagrams, or tables to organize the information. These tools can help visualize the problem, making it easier to calculate the probability of various outcomes. Always remember to double-check your assumptions, especially when dealing with conditional probabilities or multiple events occurring simultaneously.
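As a small sketch of how independence changes the calculation, the Python snippet below uses a hypothetical card example: drawing two cards from a standard 52-card deck and asking for the probability that both are aces. Drawing with replacement makes the draws independent. Drawing without replacement makes the second draw depend on the first. Exact fractions are used so the results match hand calculations.

```python
from fractions import Fraction

# Hypothetical example: P(both cards are aces) when drawing two cards.
aces, deck = 4, 52

# With replacement, the draws are independent: P(A and B) = P(A) * P(B).
p_independent = Fraction(aces, deck) * Fraction(aces, deck)

# Without replacement, the second draw depends on the first:
# P(A and B) = P(A) * P(B | A); after one ace is drawn, 3 aces remain among 51 cards.
p_dependent = Fraction(aces, deck) * Fraction(aces - 1, deck - 1)

print(p_independent, float(p_independent))  # 1/169
print(p_dependent, float(p_dependent))      # 1/221
```

The dependent case is slightly smaller because removing the first ace leaves fewer aces for the second draw.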

Lastly, practice is essential. The more you work through different types of probability problems, the more intuitive the process will become. Develop a routine for solving problems step-by-step, and soon you’ll be able to approach any probability question with confidence and clarity.

Reviewing Statistical Inference Methods

Understanding how to make decisions or predictions based on data is a crucial aspect of many assessments. The ability to draw conclusions about a population from a sample allows you to make informed judgments. This section focuses on the core techniques used to perform inference, enabling you to apply them effectively when faced with real-world problems.

Start by familiarizing yourself with the two main approaches to statistical inference: estimation and hypothesis testing. Estimation involves determining a range of possible values for a population parameter based on sample data, often represented as confidence intervals. Hypothesis testing, on the other hand, allows you to test assumptions about a population and evaluate the strength of evidence against those assumptions.

For both methods, it’s essential to understand the associated conditions and assumptions. For example, a confidence interval for a mean assumes a random sample, independent observations (a sample smaller than 10% of the population when sampling without replacement), and an approximately normal sampling distribution, which a large sample or a roughly normal population provides. When conducting a hypothesis test, you compare the p-value to the chosen significance level to decide whether the evidence is strong enough to reject the null hypothesis.

By practicing various problems and working through real-life scenarios, you’ll develop a deeper understanding of these inference techniques. The key is to be methodical, double-check your steps, and always consider the context of the data before drawing conclusions.

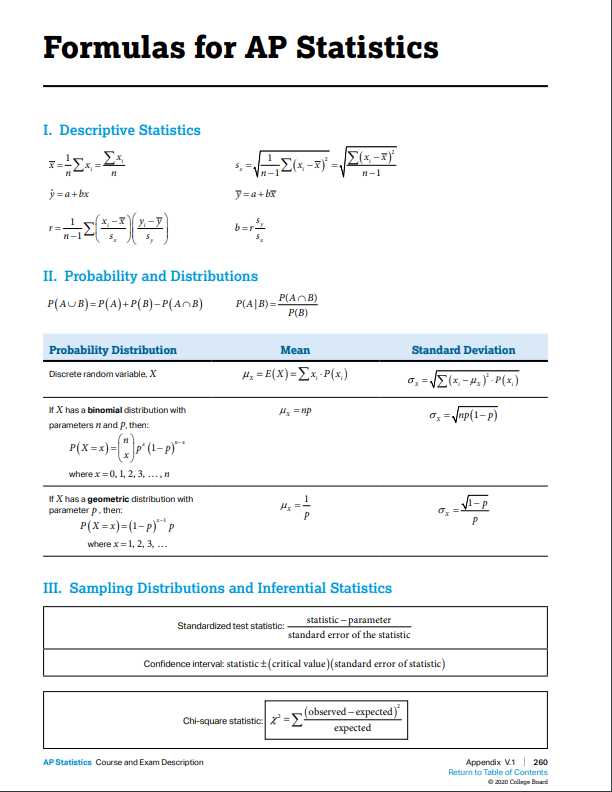

AP Statistics Formulas You Must Know

Mastering key formulas is essential for tackling a wide range of problems in any data analysis assessment. The right formulas allow you to quickly calculate measures of central tendency, variability, and probabilities, all while ensuring accurate conclusions. Below are some of the most important formulas you should be familiar with to solve problems efficiently.

Essential Formulas for Data Analysis

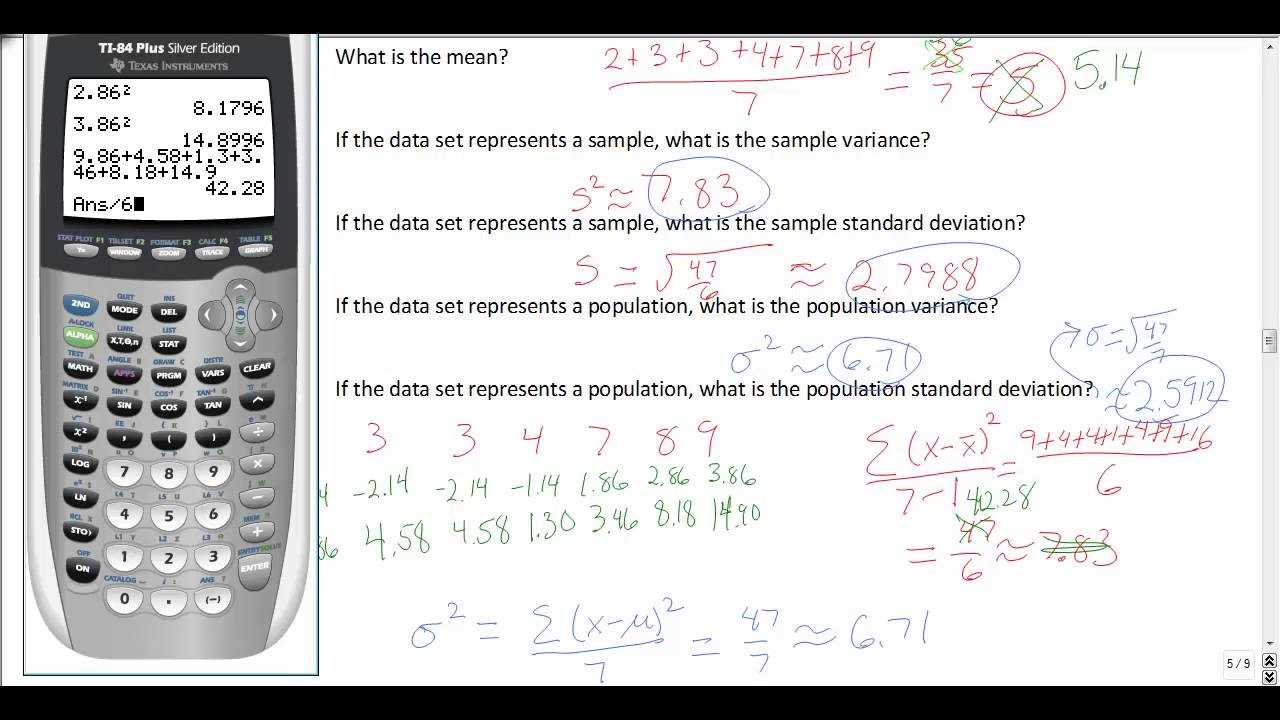

- Mean: The average of a data set, calculated as the sum of all values divided by the number of values.

- Standard Deviation (σ): A measure of the spread of data points from the mean, calculated by finding the square root of the variance.

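- Variance (σ²): The average of the squared differences from the mean, which measures how much data points vary. For a sample, the sum of squared differences is divided by n − 1 instead of n, giving the sample variance s².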

- Interquartile Range (IQR): The difference between the third quartile (Q3) and the first quartile (Q1), used to measure the spread of the middle 50% of data.

- Z-Score: A measure of how many standard deviations a data point is from the mean, calculated as (X – μ) / σ.
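The following is a minimal Python sketch of the mean, standard deviation, and z-score formulas above. It uses the standard library `statistics` module on a made-up list of scores; the data are hypothetical. It also shows the difference between the sample standard deviation (divide by n − 1) and the population standard deviation (divide by n).

```python
import statistics

# Hypothetical test scores, for illustration only.
scores = [72, 85, 90, 66, 78, 88, 95, 70]

mean = statistics.mean(scores)     # sum of values / number of values
s = statistics.stdev(scores)       # sample SD: square root of (sum of squared deviations / (n - 1))
sigma = statistics.pstdev(scores)  # population SD: divides by n instead

# z = (x - mean) / SD: how many standard deviations each score lies from the mean.
z_scores = [(x - mean) / s for x in scores]

print(f"mean = {mean}, s = {s:.2f}, sigma = {sigma:.2f}")
print([round(z, 2) for z in z_scores])
```

Standardized values always have mean 0 and standard deviation 1. This is a quick way to check that the z-scores were computed correctly.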

Key Probability and Inference Formulas

- Probability of Independent Events: P(A and B) = P(A) * P(B).

- Binomial Probability Formula: P(X = k) = (n choose k) * p^k * (1-p)^(n-k), for binomial distributions.

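- Confidence Interval for a Mean (when σ is known): CI = X̄ ± Z * (σ/√n), where X̄ is the sample mean, Z is the critical value for the chosen confidence level (for example, 1.96 for 95%), σ is the population standard deviation, and n is the sample size.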

- Hypothesis Test for a Mean (Z-test): Z = (X̄ – μ) / (σ/√n), used to test the significance of a sample mean against a population mean.

- Chi-Square Test Statistic: χ² = Σ[(O – E)² / E], where O is the observed frequency, and E is the expected frequency.
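The formulas above translate directly into short functions. The Python sketch below implements the binomial probability, the one-sample z statistic, and the chi-square statistic with `math.comb` and `math.sqrt`. The function names and the sample inputs are illustrative choices, not taken from any exam.

```python
from math import comb, sqrt

def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p): (n choose k) * p^k * (1 - p)^(n - k)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def z_statistic(x_bar: float, mu0: float, sigma: float, n: int) -> float:
    """One-sample z statistic: (x_bar - mu0) / (sigma / sqrt(n))."""
    return (x_bar - mu0) / (sigma / sqrt(n))

def chi_square_statistic(observed, expected) -> float:
    """Sum of (O - E)^2 / E over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical inputs for illustration.
print(binomial_pmf(3, 10, 0.5))      # 120 / 1024 = 0.1171875
print(z_statistic(41, 40, 5, 100))   # (41 - 40) / 0.5 = 2.0
print(chi_square_statistic([18, 22, 20, 40], [25, 25, 25, 25]))  # about 12.32
```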

Be sure to familiarize yourself with these formulas and practice using them in various scenarios. Having a strong grasp of these equations will allow you to quickly and confidently solve problems during any assessment. Understanding the underlying principles behind each formula is just as important as memorizing them, so be sure to review both the formulas and their applications thoroughly.

Preparing for Hypothesis Testing Questions

Hypothesis testing is a critical component of any data analysis assessment. This process allows you to make decisions or draw conclusions about a population based on sample data. By testing a hypothesis, you assess the validity of a claim or assumption, using evidence to either support or reject it. Proper preparation for these questions requires not only a solid understanding of the concepts but also the ability to apply the correct methods and interpret results effectively.

To start, it’s essential to understand the two main types of hypotheses: the null hypothesis (H₀), which represents the default assumption, and the alternative hypothesis (H₁), which reflects the claim being tested. The goal is to collect data and determine whether the evidence is strong enough to reject the null hypothesis in favor of the alternative. If it is not, you fail to reject H₀; this does not prove H₀ true.

In preparation, focus on the following key steps when tackling hypothesis testing problems:

- Formulating the Hypotheses: Clearly define the null and alternative hypotheses. The null hypothesis typically assumes no effect or difference, while the alternative suggests the presence of an effect or difference.

- Choosing the Significance Level (α): The significance level, often set at 0.05, represents the threshold for rejecting the null hypothesis. If the p-value is less than or equal to α, you reject H₀.

- Calculating the Test Statistic: Depending on the type of test (e.g., Z-test, T-test), calculate the test statistic using the appropriate formula.

- Determining the P-Value: The p-value indicates the probability of obtaining results at least as extreme as those observed, assuming the null hypothesis is true. A small p-value suggests strong evidence against the null hypothesis.

- Making a Decision: Compare the p-value to the significance level. If p-value ≤ α, reject the null hypothesis. Otherwise, fail to reject it.
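The steps above can be sketched in Python for the simplest case: a two-sided one-sample z-test. This sketch assumes σ is known and the sampling distribution of the mean is approximately normal. It uses `statistics.NormalDist` from the standard library, and the numbers in the example call are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def one_sample_z_test(x_bar, mu0, sigma, n, alpha=0.05):
    """Two-sided z-test of H0: mu = mu0 against H1: mu != mu0 (sigma assumed known)."""
    z = (x_bar - mu0) / (sigma / sqrt(n))          # test statistic
    # p-value: probability of a result at least this extreme if H0 is true
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    decision = "reject H0" if p_value <= alpha else "fail to reject H0"
    return z, p_value, decision

# Hypothetical example: H0: mu = 40, sample mean 41, sigma = 5, n = 100.
z, p, decision = one_sample_z_test(x_bar=41, mu0=40, sigma=5, n=100)
print(f"z = {z:.2f}, p = {p:.4f}, {decision}")  # z = 2.00, p = 0.0455, reject H0
```

For a one-sided alternative, drop the factor of 2 and use the tail that matches the direction of H₁.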

By practicing a variety of hypothesis testing problems, you will become more comfortable with these steps and develop a strategic approach to answering questions confidently. Always pay attention to the context of the problem, as it may provide important clues for selecting the right test and interpreting the results accurately.

Practice Problems for Descriptive Statistics

Mastering the ability to summarize and interpret data is essential for understanding the trends and patterns within a dataset. The following practice problems will help you strengthen your skills in analyzing data and applying various methods of central tendency, variability, and distribution. By working through these problems, you will gain a deeper understanding of how to extract meaningful insights from raw numbers.

Problem 1: Analyzing Central Tendency

Given the following set of data points, calculate the mean, median, and mode:

- 12, 15, 14, 18, 15, 20, 13, 15, 17, 16

Steps to follow:

- Find the mean: Add up all the values and divide by the total number of values.

- Find the median: Arrange the data in ascending order and find the middle value. With an even number of values, average the two middle values.

- Find the mode: Identify the most frequently occurring number.
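To check your hand work on Problem 1, this Python sketch applies the standard library `statistics` functions to the given data. The comments show the intermediate steps.

```python
import statistics

data = [12, 15, 14, 18, 15, 20, 13, 15, 17, 16]

mean = statistics.mean(data)      # 155 / 10 = 15.5
median = statistics.median(data)  # sorted: 12 13 14 15 15 15 16 17 18 20 -> (15 + 15) / 2
mode = statistics.mode(data)      # 15 appears three times

print(mean, median, mode)
```

Because the data set has ten values, the median is the average of the 5th and 6th sorted values.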

Problem 2: Understanding Spread of Data

Given the following data set, calculate the range, variance, and standard deviation:

- 10, 12, 15, 18, 21, 19, 23, 25, 30, 35

Steps to follow:

- Find the range: Subtract the smallest number from the largest.

- Calculate the variance: First, find the mean, then subtract the mean from each value and square the result. For a population, average these squared differences; for a sample, divide their sum by n − 1.

- Find the standard deviation: Take the square root of the variance.
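The Python sketch below follows the Problem 2 steps literally and then cross-checks the results against the standard library. It computes both the sample and population versions, because the problem does not say whether the data are a sample or a whole population.

```python
import math
import statistics

data = [10, 12, 15, 18, 21, 19, 23, 25, 30, 35]

data_range = max(data) - min(data)               # 35 - 10 = 25
mean = sum(data) / len(data)                     # 208 / 10 = 20.8
squared_devs = [(x - mean) ** 2 for x in data]   # squared differences from the mean

sample_var = sum(squared_devs) / (len(data) - 1) # sample variance s^2 (divide by n - 1)
pop_var = sum(squared_devs) / len(data)          # population variance sigma^2 (divide by n)
sample_sd = math.sqrt(sample_var)
pop_sd = math.sqrt(pop_var)

# Cross-check the hand method against the standard library.
assert math.isclose(sample_var, statistics.variance(data))
assert math.isclose(pop_var, statistics.pvariance(data))

print(f"range = {data_range}, s^2 = {sample_var:.2f}, s = {sample_sd:.2f}, "
      f"sigma^2 = {pop_var:.2f}, sigma = {pop_sd:.2f}")
# range = 25, s^2 = 60.84, s = 7.80, sigma^2 = 54.76, sigma = 7.40
```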

Problem 3: Interpreting Data Distribution

Consider the following data set representing the number of hours studied by students before a test:

- 4, 5, 6, 6, 7, 8, 9, 9, 9, 10

Steps to follow:

- Create a frequency distribution table to categorize the data into intervals.

- Plot a histogram to visualize the distribution of the data.

- Identify if the data is skewed to the left, right, or symmetric based on the histogram.
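A text-only Python sketch of Problem 3 follows. It assumes bins of width 2 (4–5, 6–7, 8–9, 10–11 hours); other bin widths are equally valid and can change the picture. It prints a rough histogram made of asterisks and compares the mean with the median.

```python
import statistics

hours = [4, 5, 6, 6, 7, 8, 9, 9, 9, 10]

# Assumed binning: width 2, starting at 4 -> bins 4-5, 6-7, 8-9, 10-11.
bin_width, start = 2, 4
counts = {}
for h in hours:
    low = start + ((h - start) // bin_width) * bin_width  # lower edge of the bin for h
    counts[low] = counts.get(low, 0) + 1

# Frequency table drawn as a sideways text histogram.
for low in sorted(counts):
    print(f"{low}-{low + bin_width - 1}: {'*' * counts[low]}")

mean = statistics.mean(hours)      # 73 / 10 = 7.3
median = statistics.median(hours)  # (7 + 8) / 2 = 7.5
print(f"mean = {mean}, median = {median}")
```

Most values sit in the upper bins and the tail stretches toward fewer hours. The mean (7.3) is also just below the median (7.5). Together these hint at a mild left skew, though with only ten values that is a judgment call.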

By working through these problems, you’ll develop the ability to summarize data effectively, recognize patterns, and identify outliers or unusual observations. These skills are fundamental for making informed decisions based on data.

Mastering Confidence Intervals in AP Statistics

Understanding how to construct and interpret confidence intervals is a vital skill in any data analysis task. Confidence intervals allow you to estimate the range of values within which a population parameter likely falls, based on sample data. The broader the interval, the more uncertainty is present in the estimate. Mastering this concept involves not only calculating the interval but also interpreting what it means in the context of a given dataset.

Steps to Construct a Confidence Interval

To build a confidence interval, there are several key steps you need to follow:

- Determine the sample mean and standard deviation: These provide the starting point for calculating the interval.

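- Choose the appropriate confidence level: Common choices are 90%, 95%, and 99%. The confidence level describes the method rather than any single interval: if you repeated the sampling many times, about that percentage of the intervals built this way would contain the true population parameter.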

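- Find the critical value: Depending on the confidence level and the sample size, the critical value is typically found using a Z or t-distribution table. Use a z critical value when the population standard deviation σ is known, and a t critical value with n − 1 degrees of freedom when only the sample standard deviation is available.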

- Calculate the margin of error: Multiply the critical value by the standard error (standard deviation divided by the square root of the sample size).

- Construct the interval: The confidence interval is then given by the sample mean ± the margin of error.
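One way to see what "95% confidence" means is to simulate it. The Python sketch below assumes a normal population with a known σ; the values μ = 50, σ = 10, and n = 25 are hypothetical. It builds many z-intervals and counts how often they capture the true mean. It uses only the standard library (`random` and `statistics.NormalDist`).

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

def coverage_rate(mu=50.0, sigma=10.0, n=25, level=0.95, trials=10_000, seed=1):
    """Fraction of z-intervals x_bar ± z* · sigma/sqrt(n) that contain mu.

    Assumes a normal population with known sigma; all parameter values are hypothetical.
    """
    rng = random.Random(seed)
    z_star = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for 95%
    margin = z_star * sigma / sqrt(n)                   # critical value × standard error
    hits = 0
    for _ in range(trials):
        x_bar = fmean(rng.gauss(mu, sigma) for _ in range(n))
        if x_bar - margin <= mu <= x_bar + margin:
            hits += 1
    return hits / trials

rate = coverage_rate()
print(rate)
```

The printed rate should land close to 0.95. Any single interval either contains μ or it does not; the 95% describes the long-run success rate of the procedure.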

Example Problem

Suppose a sample of 100 people is surveyed to estimate the average number of hours they work per week. The sample mean is 40 hours, and the sample standard deviation is 5 hours. Construct a 95% confidence interval for the true population mean.

Steps to follow:

- Sample mean = 40 hours

- Sample standard deviation = 5 hours

- Sample size = 100

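- Critical value (for 95% confidence) = 1.96 (from the Z-distribution; because σ is unknown here, a t critical value with 99 degrees of freedom, about 1.984, is technically appropriate, but with n = 100 the two give nearly the same interval)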

- Standard error = 5 / √100 = 0.5

- Margin of error = 1.96 × 0.5 = 0.98

- Confidence interval = 40 ± 0.98, which gives (39.02, 40.98)

This means we are 95% confident that the true average number of hours worked per week by the population falls between 39.02 and 40.98 hours.
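The arithmetic in this example can be reproduced in a few lines of Python. The sketch below uses `statistics.NormalDist` for the z critical value. It then repeats the calculation with a t critical value of about 1.984 for 99 degrees of freedom. That value is typed in by hand from a t-table, because the standard library has no t-distribution.

```python
from math import sqrt
from statistics import NormalDist

x_bar, s, n = 40, 5, 100              # values from the example
z_star = NormalDist().inv_cdf(0.975)  # about 1.96 for 95% confidence
se = s / sqrt(n)                      # standard error: 5 / 10 = 0.5
margin = z_star * se                  # margin of error: about 0.98
lower, upper = x_bar - margin, x_bar + margin
print(f"z-interval: ({lower:.2f}, {upper:.2f})")  # (39.02, 40.98)

# With sigma unknown, a t critical value on n - 1 = 99 degrees of freedom
# (about 1.984, taken from a t-table) widens the interval only slightly.
t_star = 1.984
print(f"t-interval: ({x_bar - t_star * se:.2f}, {x_bar + t_star * se:.2f})")
```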

By practicing these steps and understanding the underlying concepts, you will gain confidence in using this powerful tool to estimate parameters and assess the uncertainty in your estimates.

Strategies for Analyzing Regression Data

When dealing with the relationship between two or more variables, regression analysis is a powerful tool that allows you to model and make predictions based on data. The process involves fitting a line or curve to the data and interpreting the relationship between the independent and dependent variables. By understanding key strategies for analyzing regression data, you can make more accurate predictions and gain deeper insights into the underlying patterns in the dataset.

1. Check for Linearity

Before performing any analysis, it is essential to ensure that the relationship between the variables is linear. This can be done by creating a scatterplot of the data. If the points follow a straight-line pattern, then a linear regression model is appropriate. However, if the relationship is curvilinear, you might need to apply polynomial regression or transform the variables to fit a linear model.

2. Evaluate the Goodness of Fit

Once the regression model is applied, it’s important to assess how well the model fits the data. Key metrics to examine include:

- R-squared value: This statistic represents the proportion of the variance in the dependent variable that can be explained by the independent variable(s). A higher R-squared value indicates a better fit, though it’s important to not rely solely on this value.

- Residuals analysis: Residuals are the differences between the observed values and the predicted values from the regression model. By analyzing the residuals, you can check for patterns that suggest the model may not be appropriately capturing the data’s structure. Ideally, residuals should be randomly dispersed around the horizontal axis.
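The following is a minimal Python sketch of a least-squares fit with the goodness-of-fit quantities described above: slope, intercept, correlation r, R², and residuals. The five data points are hypothetical. Only the standard library is used, so every step is visible.

```python
from math import sqrt
from statistics import fmean

# Hypothetical data, for illustration only.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

x_bar, y_bar = fmean(x), fmean(y)
sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
sxx = sum((xi - x_bar) ** 2 for xi in x)
syy = sum((yi - y_bar) ** 2 for yi in y)

slope = sxy / sxx                  # least-squares slope b
intercept = y_bar - slope * x_bar  # the line passes through (x_bar, y_bar)
r = sxy / sqrt(sxx * syy)          # correlation coefficient

predicted = [intercept + slope * xi for xi in x]
residuals = [yi - yhat for yi, yhat in zip(y, predicted)]  # observed - predicted
r_squared = 1 - sum(e ** 2 for e in residuals) / syy       # share of variation explained

print(f"y-hat = {intercept:.2f} + {slope:.2f}x, r = {r:.3f}, R^2 = {r_squared:.2f}")
print("residuals:", [round(e, 2) for e in residuals])
```

For simple linear regression, R² equals r², and least-squares residuals always sum to zero. Plotting the residuals against x is what reveals curvature or other patterns.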

3. Check for Outliers and Influential Points

Outliers or influential points can have a significant impact on the results of a regression analysis. These points can pull the fitted line toward themselves, leading to misleading predictions. Identifying and addressing outliers is critical. Use diagnostic measures such as leverage (how unusual a point's x-value is) and Cook’s distance (how much the fitted line changes when the point is removed) to detect influential points that could distort the regression results.
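For simple linear regression, leverage has a closed form: h_i = 1/n + (x_i − x̄)² / Σ(x_j − x̄)². Cook's distance combines each residual with its leverage. The Python sketch below computes both on hypothetical data in which the last point lies far from the others in x. The function name `leverage_and_cooks` is an illustrative choice.

```python
from statistics import fmean

def leverage_and_cooks(x, y):
    """Leverage h_i and Cook's distance D_i for simple linear regression (p = 2 parameters)."""
    n = len(x)
    x_bar, y_bar = fmean(x), fmean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    p = 2                                           # intercept and slope
    mse = sum(e ** 2 for e in residuals) / (n - p)  # residual variance estimate s^2
    leverage = [1 / n + (xi - x_bar) ** 2 / sxx for xi in x]
    # D_i = e_i^2 / (p * s^2) * h_i / (1 - h_i)^2
    cooks = [e ** 2 / (p * mse) * h / (1 - h) ** 2 for e, h in zip(residuals, leverage)]
    return leverage, cooks

# Hypothetical data: the point (10, 20) sits far from the others in x.
x = [1, 2, 3, 4, 10]
y = [2, 3, 4, 5, 20]
lev, cooks = leverage_and_cooks(x, y)
for xi, yi, h, d in zip(x, y, lev, cooks):
    print(f"({xi}, {yi}): leverage = {h:.2f}, Cook's D = {d:.2f}")
```

A common rule of thumb flags points with Cook's distance above 1; some texts use 4/n instead. Here the far-right point stands out on both measures even though its residual is small, which is typical of an influential point.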

By following these strategies, you can ensure that your regression model provides a valid and reliable representation of the data, leading to better insights and predictions.

Common Pitfalls to Avoid on the Exam

When tackling any assessment, it’s essential to be aware of common mistakes that can affect your performance. Recognizing these potential pitfalls in advance can help you navigate the questions more confidently and avoid errors that could cost valuable points. From misinterpreting the question to rushing through calculations, being mindful of these traps is key to achieving success.

1. Misunderstanding the Question

One of the most frequent mistakes is not fully understanding what the question is asking. It’s easy to get caught up in technical terms or complex wording, but take a moment to read each question carefully. Pay attention to key words such as “mean,” “median,” “probability,” or “distribution” to ensure that you’re addressing the right concept. Always clarify what the question is truly asking before proceeding with an answer.

2. Ignoring Units and Labels

Forgetting to include units in your answers or mislabeling your results can lead to losing critical points. Whether it’s a measurement in terms of time, distance, or probability, always make sure to provide your answers with the appropriate units. Double-check your calculations to ensure that the units are consistent and properly carried through the problem.

3. Rushing Through Calculations

In an attempt to complete the assessment quickly, many students make the mistake of rushing through calculations. This can lead to simple arithmetic errors or forgetting steps in more complex formulas. Take your time, work methodically, and review your calculations to ensure accuracy. A few extra minutes spent on verifying your work can save you from careless mistakes.

4. Overlooking Assumptions or Conditions

Many questions require you to identify or state assumptions before proceeding with a solution. Overlooking these assumptions can invalidate your answer. Be sure to carefully read any conditions or prerequisites listed in the problem. Acknowledge any assumptions needed for applying specific methods or formulas, as failing to do so may result in incorrect conclusions.

5. Not Checking for Reasonableness

Finally, after you’ve worked through a problem, take a moment to check if your answer makes sense. Does the result seem reasonable in the context of the question? Does the magnitude match expectations? For example, a probability must fall between 0 and 1, and a standard deviation cannot be negative. A quick mental check can help you catch errors in your approach before you submit your work.

Avoiding these common pitfalls requires attention to detail, careful planning, and time management. By being mindful of these mistakes, you can improve your chances of achieving a strong performance.

Understanding Sampling Distributions in Detail

In the field of data analysis, one crucial concept that often comes up is the distribution of sample statistics. This idea involves examining how different sample results can vary from one another when drawn from a population. Understanding the behavior of these sample statistics is essential for making accurate inferences and drawing conclusions based on limited data.

Sampling distributions represent the distribution of a given statistic, such as the sample mean, median, or proportion, over many repeated samples drawn from the same population. These distributions help us understand the variability of the statistic and how it behaves as the sample size changes. By examining the sampling distribution, we can make predictions and assess the reliability of statistical estimates.

The Central Limit Theorem

One of the foundational principles in understanding sampling distributions is the Central Limit Theorem (CLT). This theorem states that, regardless of the shape of the population distribution, the distribution of sample means will approach a normal distribution as the sample size increases, provided that the observations are independent and drawn from the same distribution with a finite variance. This is particularly helpful because it allows statisticians to apply normal distribution-based methods even when the population itself is not normally distributed.

Key Characteristics of Sampling Distributions

There are several important features of sampling distributions that you should understand:

- Mean of the Sampling Distribution: For the sample mean, the mean of the sampling distribution equals the population mean μ (and for the sample proportion, it equals the population proportion p). The average of all possible sample means is therefore centered on the parameter being estimated.

- Standard Deviation (Standard Error): The variability of the sample statistic, also known as the standard error, decreases as the sample size increases. For the sample mean it equals σ/√n, so quadrupling the sample size halves the standard error. This means that larger samples provide more reliable estimates of the population parameter.

- Shape of the Distribution: As sample size increases, the shape of the sampling distribution becomes more symmetric and approaches a normal distribution, even if the original population distribution is skewed.
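These three properties can be checked by simulation. The Python sketch below draws repeated samples of size n = 36 from a strongly right-skewed population. It assumes an exponential population with mean 1 and standard deviation 1, chosen only for illustration. It then compares the mean and spread of the resulting sample means with μ and σ/√n.

```python
import random
from math import sqrt
from statistics import fmean, pstdev

rng = random.Random(42)  # fixed seed so the run is reproducible
n, reps = 36, 5_000

# Assumed population: exponential with mean 1 and SD 1 (strongly right-skewed).
sample_means = [fmean(rng.expovariate(1.0) for _ in range(n)) for _ in range(reps)]

center = fmean(sample_means)
spread = pstdev(sample_means)
print(f"mean of sample means: {center:.3f} (population mean 1)")
print(f"SD of sample means:   {spread:.3f} (sigma/sqrt(n) = {1 / sqrt(n):.3f})")
```

Plotting `sample_means` as a histogram would show a much more symmetric, bell-like shape than the skewed population they came from.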

Applications of Sampling Distributions

Understanding sampling distributions is essential for hypothesis testing and constructing confidence intervals. By knowing the properties of the sampling distribution, we can determine the probability of obtaining a sample result at least as extreme as the one observed if a given hypothesis is true, which is exactly what a p-value measures. Additionally, these distributions allow us to quantify the uncertainty in our estimates and make informed decisions based on the data at hand.

In summary, a deep understanding of sampling distributions is key to making sound conclusions in any field that involves statistical inference. By mastering this concept, you gain the ability to evaluate data effectively and use it to support meaningful analyses and decisions.

Time Management Tips for the AP Test

Effective time management is essential for succeeding in any high-stakes test. With limited time to answer a large number of questions, knowing how to prioritize tasks and allocate your time wisely can make all the difference. By applying the right strategies, you can maximize your efficiency and reduce unnecessary stress during the test.

One of the key strategies is to understand the structure of the test and how much time you have for each section. Knowing what to expect and planning your time accordingly can help you avoid rushing through questions or leaving too much unanswered. Below are some practical tips to help you manage your time efficiently during the test:

| Strategy | Explanation |
|---|---|
| Prioritize Easy Questions | Start with the questions that you find easiest, as they will take less time and boost your confidence. This way, you can gain momentum early on. |
| Allocate Time to Each Section | Divide your total test time by the number of sections and allocate a specific amount of time for each. Stick to these time limits as closely as possible. |
| Don’t Get Stuck on One Question | If you find a question difficult, move on and come back to it later. Spending too much time on one problem can prevent you from answering others. |
| Use Process of Elimination | If you’re unsure about an answer, eliminate the clearly wrong options first. This increases your chances of selecting the correct answer even if you’re guessing. |
| Keep Track of Time | Check the clock periodically to ensure you’re staying on track. Use a watch or test-provided timer to keep an eye on how much time remains. |

By implementing these strategies, you can improve your time management and approach the test with greater confidence. Remember, staying calm and focused is key to making the most of the time you have.

How to Interpret Boxplots and Histograms

Visual representations of data, such as boxplots and histograms, are powerful tools for understanding the distribution and variability of a dataset. These charts help summarize large sets of data in a way that makes trends and patterns easier to see. By interpreting these plots correctly, you can gain valuable insights into the spread, central tendency, and potential outliers within your data.

Boxplots, also known as box-and-whisker plots, show the spread of data through its quartiles. The box itself represents the interquartile range (IQR), while the “whiskers” extend to the minimum and maximum values that are not outliers. Outliers are typically marked separately, making it easy to spot data points that are significantly different from the rest of the dataset. Here’s how you can interpret a boxplot:

- Median: The line inside the box represents the median of the data, which is the middle value when the data is ordered.

- Quartiles: The edges of the box mark the 25th and 75th percentiles (Q1 and Q3). Together with the median, they split the ordered data into four parts, each containing about a quarter of the observations.

- Whiskers: These lines extend from the quartiles to the minimum and maximum values, excluding outliers.

- Outliers: Data points below Q1 − 1.5 × IQR or above Q3 + 1.5 × IQR are typically flagged as outliers and marked individually beyond the whiskers.
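The Python sketch below computes the numbers behind a boxplot: the five-number summary, the 1.5 × IQR fences, the outliers, and where the whiskers end. It uses one common hand method for quartiles: take the median of each half of the sorted data, leaving out the overall median when n is odd. Calculators and software packages may use slightly different quartile rules. The data are hypothetical.

```python
from statistics import median

def five_number_summary(data):
    """Min, Q1, median, Q3, max using the median-of-halves quartile method."""
    xs = sorted(data)
    n = len(xs)
    lower_half = xs[: n // 2]        # values below the median position
    upper_half = xs[(n + 1) // 2 :]  # values above the median position
    return xs[0], median(lower_half), median(xs), median(upper_half), xs[-1]

data = [2, 4, 4, 5, 6, 7, 8, 9, 25]  # hypothetical data
mn, q1, med, q3, mx = five_number_summary(data)
iqr = q3 - q1
low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [x for x in data if x < low_fence or x > high_fence]
inside = [x for x in data if low_fence <= x <= high_fence]
whiskers = (min(inside), max(inside))  # whiskers end at the most extreme non-outliers

print(f"Q1 = {q1}, median = {med}, Q3 = {q3}, IQR = {iqr}")
print(f"fences = ({low_fence}, {high_fence}), outliers = {outliers}, whiskers = {whiskers}")
```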

Histograms, on the other hand, provide a visual representation of the frequency distribution of continuous data. They consist of bars that represent the frequency of data within specific intervals or bins. The height of each bar shows how many data points fall into that range. To interpret a histogram effectively, consider the following:

- Shape: Look for the general shape of the histogram: whether it is symmetric, skewed to the right or left, or has two peaks (bimodal) or more.

- Center: For a symmetric, single-peaked distribution, the center lies near the peak of the histogram. For skewed data, the median is a better guide to the center than the peak.

- Spread: The width of the histogram shows the variability in the data. A wider histogram indicates more spread, while a narrower one indicates less variability.

- Skewness: If the data is not symmetrical, it may be skewed to the right (a long tail toward larger values) or to the left (a long tail toward smaller values). Skewness pulls the mean toward the tail, away from the median.

By understanding these visual tools, you can gain a deeper understanding of your data and make more informed conclusions. Boxplots help highlight the range and spread of your data, while histograms offer a clear view of its distribution, frequency, and shape.

Reviewing the Central Limit Theorem

The Central Limit Theorem (CLT) is one of the foundational principles in data analysis, and it provides a critical understanding of how sample means behave as the sample size increases. In simple terms, the CLT explains why sample averages tend to follow a normal distribution, even if the original data itself is not normally distributed. This theorem is essential for making inferences about a population from sample data.

The key idea behind the CLT is that, as you increase the sample size, the distribution of the sample means will become more symmetrical and approach a normal distribution, regardless of the shape of the original population distribution. This phenomenon is particularly powerful because it allows us to apply techniques based on the normal distribution to a wide range of problems, even when we don’t know the exact distribution of the data.

Conditions for Applying the Central Limit Theorem

There are specific conditions under which the Central Limit Theorem holds. These conditions help ensure that the sample means are sufficiently close to a normal distribution:

- Sample Size: A larger sample size generally leads to a more normal distribution of the sample means. A common rule of thumb is that a sample size of 30 or more is typically large enough for the CLT to apply, but the larger the sample, the better.

- Random Sampling: The samples should be drawn randomly from the population to ensure that each sample is independent and representative of the population.

- Independence: The individual data points in the sample should be independent of each other. If sampling without replacement, it is recommended that the sample size is less than 10% of the population to ensure this condition holds.

Implications of the Central Limit Theorem

The CLT has profound implications for statistical analysis, especially when it comes to hypothesis testing and confidence intervals. Since the sample means tend to be normally distributed, we can use normal probability methods to estimate population parameters even when the original data is skewed or not normally distributed.

| Sample Size (n) | Shape of Population Distribution | Distribution of Sample Means |
|---|---|---|
| Small (n < 30) | Skewed or Non-Normal | Not necessarily normal, especially if the population is highly skewed |
| Large (n ≥ 30) | Any | Approximately normal, even for non-normal populations |

Understanding the Central Limit Theorem allows you to use powerful statistical tools, regardless of the underlying distribution of the data. By recognizing that sample means tend to normality as the sample size grows, you can more confidently make predictions and inferences based on sample data.