When preparing for assessments on machine learning techniques, it’s crucial to grasp the fundamental concepts that drive classification algorithms. Understanding how data is processed, analyzed, and categorized lays the foundation for tackling a variety of problems effectively. Strengthening your knowledge in this area ensures you can confidently handle theoretical and practical tasks.

In this section, we will explore typical challenges that test your ability to apply classification methods. By focusing on problem-solving strategies and common pitfalls, you can sharpen your skills and develop a deep understanding of how to approach such tasks with precision. Whether you’re working through theoretical explanations or practical exercises, the goal is to approach each scenario with a solid grasp of the underlying principles.

Preparation for these types of challenges requires both theoretical knowledge and practical application. By examining example scenarios, reviewing key principles, and practicing various problems, you will be equipped to tackle each type of problem with clarity and confidence. Thorough preparation is the key to success in mastering classification techniques and their applications in real-world contexts.

Ultimate Guide to KNN Exam Questions

Preparing for assessments that focus on classification techniques involves understanding the core concepts that are frequently tested. Grasping how data points are grouped based on their characteristics is essential to excelling in this area. In this guide, we’ll delve into the common types of tasks you might encounter, helping you develop a clear strategy to tackle each one with confidence.

One of the most effective ways to approach these challenges is by practicing with a variety of sample problems. Below is a table outlining the most common problem types, along with helpful tips for solving them:

| Problem Type | Description | Key Tips |
|---|---|---|
| Classification Problems | Tasks where the goal is to assign data points to predefined classes. | Focus on the distance metric and on how the labels of the nearest neighbors determine the predicted class. |
| Distance Calculation | Calculating the distance between data points to determine how similar they are. | Master the common distance measures, such as Euclidean and Manhattan distance. |
| Overfitting | Examining why a model may perform poorly on unseen data because it is too complex (for this method, typically because too few neighbors are used). | Understand how to balance model complexity and accuracy, and how cross-validation helps detect overfitting. |
| Practical Application | Applying theory to real-world scenarios, such as predicting outcomes from historical data. | Consider data preprocessing steps and how feature selection can affect model performance. |

By practicing with these types of tasks, you will be well-prepared to handle a wide range of challenges. Remember, the key to success lies in mastering the fundamentals, applying them effectively, and being prepared for any scenario that may arise in the test environment.

Understanding KNN Algorithm Basics

This technique classifies a new data point according to its proximity to data points whose labels are already known. It examines the labeled points closest to the new observation and assigns the observation to the group that most of them belong to. Because every decision depends on measured distances, the choice of distance metric and the scaling of the features strongly affect the results. The same idea can also be used for prediction (regression), where the output is the average value of the nearest neighbors.

Key Principles to Remember

- Distance Metrics: The algorithm uses distance calculations, such as Euclidean or Manhattan distance, to measure how similar two points are.

- Neighbors: The primary idea is to look at the closest data points (neighbors) to make a decision.

- Majority Voting: In classification tasks, the most common class among the nearest neighbors determines the category of the new point.

- Feature Space: Each data point is represented as a vector in a multidimensional space, where each feature is one dimension.

Steps in Implementing the Approach

- Step 1: Collect the labeled dataset and represent each data point as a vector of feature values, scaling the features if they use different units or ranges.

- Step 2: Calculate the distance between the test data point and every point in the training set.

- Step 3: Identify the nearest neighbors based on the calculated distances.

- Step 4: Classify the test point according to the majority class of the nearest neighbors.

- Step 5: Evaluate the model’s performance using appropriate metrics like accuracy or error rate.
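The five steps can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes numeric features, Euclidean distance, and a simple majority vote, and the helper names (`euclidean`, `knn_predict`, `accuracy`) and the toy data are hypothetical.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Step 2: distance from the query to every training point
    scored = [(euclidean(x, query), label) for x, label in zip(train_X, train_y)]
    # Step 3: keep the k closest points (sort by distance only)
    nearest = sorted(scored, key=lambda pair: pair[0])[:k]
    # Step 4: majority vote; on a tied vote, Counter keeps first-seen order,
    # so the label that appears first among the nearest points wins
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

def accuracy(train_X, train_y, test_X, test_y, k=3):
    """Step 5: fraction of test points that are classified correctly."""
    hits = sum(knn_predict(train_X, train_y, x, k) == y for x, y in zip(test_X, test_y))
    return hits / len(test_y)

# Hypothetical toy data: two features per point, two classes
X = [(1.0, 1.0), (1.5, 2.0), (3.0, 4.0), (5.0, 7.0), (3.5, 5.0), (4.5, 5.0)]
y = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(X, y, (4.0, 5.0), k=3))  # B
```

For the query (4.0, 5.0), the three closest points are (3.5, 5.0) and (4.5, 5.0), both labeled B, and (3.0, 4.0), labeled A, so B wins the vote 2 to 1.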

By mastering these fundamental steps, you can effectively apply the technique to a variety of classification tasks. Understanding the underlying principles is key to troubleshooting and optimizing the model for better performance.

Key Concepts to Know for KNN Exams

When preparing for assessments on machine learning classification methods, it is essential to focus on the fundamental concepts that form the backbone of this approach. Mastering these key principles will not only help in theoretical understanding but also in practical application during tasks that require data classification based on similarity. In this section, we will explore the most critical ideas that you should be familiar with before facing such challenges.

Understanding the core elements, such as the role of distance measures, feature selection, and the importance of choosing the correct number of neighbors, is crucial. Additionally, it’s important to know how different distance metrics impact classification accuracy and how the algorithm performs in various situations. Below are the primary concepts to grasp:

- Distance Metrics: Familiarize yourself with common distance measures like Euclidean, Manhattan, and Minkowski, as they are central to calculating proximity between data points.

- Feature Selection: Learn how to choose the most relevant features for the model, as irrelevant or redundant features can reduce performance.

- Choosing Neighbors: Understand how to select the number of neighbors (k) used in each classification decision. Too few neighbors tends to overfit, because a single noisy point can decide the outcome; too many tends to underfit, because the vote is drawn from points far from the query.

- Overfitting and Underfitting: Grasp the balance between model complexity and generalization to avoid overfitting (when the model is too specific) or underfitting (when it’s too general).

- Cross-validation: Learn how cross-validation techniques help assess the model’s accuracy and ensure that it performs well on unseen data.

By focusing on these key ideas, you will be well-equipped to handle the challenges that may arise and apply the algorithm effectively across a variety of problems.

Common KNN Exam Question Formats

When preparing for assessments on classification techniques, it’s essential to understand the types of tasks you may encounter. These problems typically test both your theoretical knowledge and practical skills. Recognizing the common formats and how to approach them will make it easier to tackle any challenge that arises. In this section, we’ll break down the most frequent problem types and provide tips on how to address each one.

Problem Types You Might Encounter

- Conceptual Questions: These assess your understanding of how the method works, focusing on definitions, principles, and key components like distance metrics and neighbors.

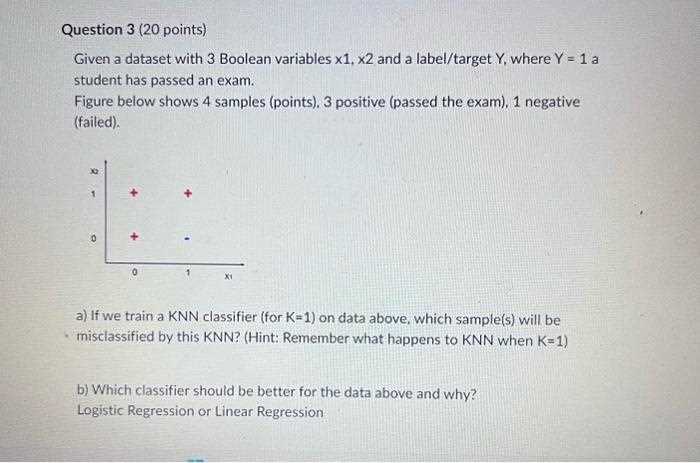

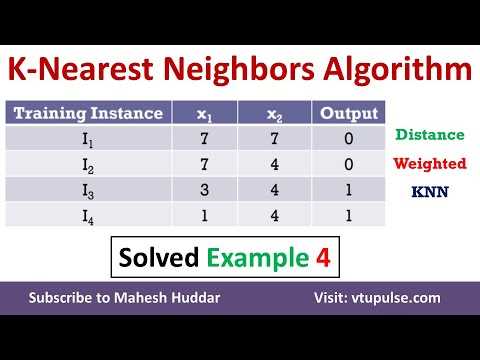

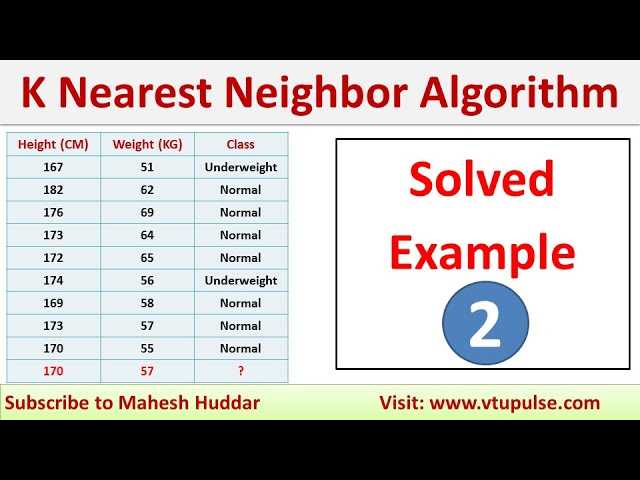

- Calculation-Based Tasks: You may be asked to calculate the distance between data points, identify the nearest neighbors, or predict the class of a new observation based on a given dataset.

- Scenario-Based Questions: These present a real-world situation where you need to apply the technique to solve a problem, such as classifying items based on different features.

- Comparison Tasks: These require you to compare different classification techniques, analyzing their advantages and limitations in various contexts.

- Performance Evaluation: You might be asked to evaluate a model’s effectiveness, for example by calculating accuracy, precision, or recall, or by explaining the effects of overfitting and underfitting.
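A typical calculation-based task can be worked by hand. The dataset here is invented for illustration: class A contains $A_1 = (1, 2)$ and $A_2 = (2, 3)$, class B contains $B_1 = (6, 5)$, $B_2 = (7, 7)$, and $B_3 = (5, 4)$, and the query point is $q = (3, 4)$. The first step is the Euclidean distance from $q$ to each training point:

$$
\begin{aligned}
d(q, A_1) &= \sqrt{(3-1)^2 + (4-2)^2} = \sqrt{8} \approx 2.83 \\
d(q, A_2) &= \sqrt{(3-2)^2 + (4-3)^2} = \sqrt{2} \approx 1.41 \\
d(q, B_1) &= \sqrt{(3-6)^2 + (4-5)^2} = \sqrt{10} \approx 3.16 \\
d(q, B_2) &= \sqrt{(3-7)^2 + (4-7)^2} = \sqrt{25} = 5 \\
d(q, B_3) &= \sqrt{(3-5)^2 + (4-4)^2} = \sqrt{4} = 2
\end{aligned}
$$

Ranked by distance, the order is $A_2, B_3, A_1, B_1, B_2$. With $k = 3$ the neighbors are $A_2, B_3, A_1$, so class A wins the vote 2 to 1. With $k = 5$ every point votes and class B wins 3 to 2, which shows why such questions often ask how the prediction changes with $k$.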

How to Approach These Problems

- Understand the Theory: Make sure you know the fundamentals, such as how the algorithm works and how distance is calculated.

- Practice Calculations: Be comfortable with performing calculations, such as finding nearest neighbors and determining the class of a new data point.

- Analyze Scenarios: Practice applying the method to real-life problems to develop a practical understanding of how it works.

- Compare Methods: Understand the pros and cons of this technique compared to other classification methods, and be ready to explain why one might be preferable over another in a given situation.

- Evaluate Results: Be prepared to assess the performance of a model and discuss ways to improve it based on accuracy and other metrics.

By familiarizing yourself with these formats and practicing relevant problems, you’ll be better equipped to handle a variety of tasks and demonstrate your mastery of the technique.

How to Solve KNN Classification Problems

Solving classification challenges involves identifying patterns and grouping data points based on their features. The goal is to categorize new data by examining its similarity to known examples. Understanding the steps involved and following a systematic approach ensures accurate results. Below are key steps to successfully tackle these tasks.

Steps to Approach Classification Tasks

- Step 1: Understand the Data – Begin by examining the dataset, ensuring you understand the features and their relevance to the problem. Clean the data by handling missing values and normalizing the features if necessary.

- Step 2: Choose a Distance Metric – Select an appropriate method for calculating the distance between data points. The most common metrics are Euclidean and Manhattan distances, but others like Minkowski or cosine distance may be used depending on the problem.

- Step 3: Select the Number of Neighbors – Choose the optimal number of neighbors to consider when making predictions. Too few may lead to overfitting, while too many may result in underfitting. Experiment with different values and assess the model’s performance.

- Step 4: Apply the Classification – For each new data point, calculate its distance to all points in the training set. Identify the closest neighbors and assign the class based on a majority vote or weighted vote.

- Step 5: Evaluate the Model – After classification, assess the model’s performance using metrics such as accuracy, precision, recall, and F1 score. Use cross-validation to estimate how well the model generalizes to unseen data and to compare settings such as the number of neighbors.
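Steps 3 and 5 can be combined by letting cross-validation pick the number of neighbors. The sketch below uses leave-one-out cross-validation, one simple variant that suits small datasets; the helper names and the data (two clusters plus one deliberately mislabeled point) are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k):
    """Majority vote among the k training points closest to `query` (Euclidean)."""
    nearest = sorted(zip(train_X, train_y), key=lambda p: math.dist(p[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def loocv_accuracy(X, y, k):
    """Leave-one-out cross-validation: predict each point from all the others."""
    hits = 0
    for i in range(len(X)):
        train_X, train_y = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        hits += knn_predict(train_X, train_y, X[i], k) == y[i]
    return hits / len(X)

def choose_k(X, y, candidates):
    """Pick the k with the best LOOCV accuracy; ties go to the smaller k."""
    scores = {k: loocv_accuracy(X, y, k) for k in candidates}
    best = max(candidates, key=lambda k: (scores[k], -k))
    return best, scores

# Hypothetical data: two clusters plus one deliberately mislabeled point at (1.5, 1.5)
X = [(1, 1), (1, 2), (2, 1), (2, 2), (6, 6), (6, 7), (7, 6), (7, 7), (1.5, 1.5)]
y = ["A", "A", "A", "A", "B", "B", "B", "B", "B"]
best_k, scores = choose_k(X, y, [1, 3, 5, 7])
print(best_k)  # 3
```

With k = 1 the noisy point misleads all four of its A neighbors (accuracy 4/9); k = 3 outvotes it (8/9); at k = 7 the vote starts pulling in the far cluster and accuracy drops again.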

By following these steps and practicing with various datasets, you can confidently solve classification problems, ensuring both reliability and accuracy in your predictions.

Testing KNN with Real-Life Scenarios

Applying classification techniques to real-world problems provides valuable insight into their practical effectiveness. In these scenarios, data points represent actual entities, and the goal is to make predictions based on the similarities between new and existing data. Understanding how to adapt the method to various fields is essential for solving real-life problems effectively.

Real-World Applications

- Medical Diagnosis: Classifying patients based on their symptoms and medical history can help predict potential health conditions. By analyzing past cases, this technique can recommend diagnoses for new patients.

- Customer Segmentation: Businesses often categorize customers based on purchasing behavior and demographics. This helps in tailoring marketing strategies or recommending products that are most relevant to specific groups.

- Image Recognition: The method can be used to identify objects in images by comparing the pixel data to a labeled dataset. Common applications include facial recognition and automated tagging systems.

- Recommendation Systems: This technique is widely used to suggest products, movies, or services based on user preferences, comparing a user’s past choices to those of similar users.

- Fraud Detection: Financial institutions can identify suspicious activities by classifying transactions as legitimate or fraudulent based on known patterns.

How to Approach These Problems

- Step 1: Gather and preprocess data from the real-world scenario. This may include cleaning the data, handling missing values, and normalizing features.

- Step 2: Choose the appropriate distance metric based on the nature of the data, ensuring it accurately reflects the relationships between the features.

- Step 3: Select the number of neighbors, balancing between overfitting and underfitting by testing different values.

- Step 4: Classify new data points based on the patterns observed in the training set. Use majority voting or weighted voting for classification tasks.

- Step 5: Evaluate the model’s performance using real-world metrics such as accuracy, precision, recall, or F1 score, and refine the model as needed.

By testing this technique with real-world data, you gain a deeper understanding of its strengths and limitations. This hands-on experience not only enhances theoretical knowledge but also prepares you for tackling complex, practical problems.

Top Mistakes in KNN Exam Solutions

When tackling problems related to classification methods, there are several common pitfalls that can lead to inaccurate or inefficient solutions. These mistakes typically arise from misunderstandings or oversights during problem-solving. Recognizing and avoiding these errors is essential for achieving accurate results and demonstrating mastery of the technique. In this section, we’ll explore the most frequent mistakes and provide guidance on how to avoid them.

Common Errors to Watch For

- Improper Distance Metric Selection: Choosing the wrong distance measure can significantly affect the accuracy of predictions. For example, using Euclidean distance when the data has categorical features can lead to incorrect classifications.

- Incorrect Number of Neighbors: Selecting too few or too many neighbors is a common mistake. A very small number may cause the model to overfit, while too many can lead to underfitting. It’s crucial to experiment with different values to find the optimal balance.

- Ignoring Feature Scaling: Not normalizing or standardizing the features can result in certain features dominating the distance calculation, leading to skewed results. Always scale features before applying the method, especially when they have different units or ranges.

- Overfitting or Underfitting: Focusing too much on achieving high accuracy on the training data can lead to overfitting, where the model performs poorly on unseen data (with this method, a very small number of neighbors is the usual cause). Alternatively, using too many neighbors or discarding informative features can result in underfitting, where the model fails to capture the data’s structure.

- Failure to Validate the Model: Relying solely on a training dataset without validating the model on new, unseen data can lead to overly optimistic assessments of its performance. Always use cross-validation or a separate test set to evaluate the model’s generalization ability.
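The feature-scaling mistake is easy to demonstrate. In this sketch, which assumes z-score standardization (min-max scaling is a common alternative) and uses hypothetical age and income features and hypothetical helper names, the nearest neighbor changes once both features are put on the same scale. The scaling statistics are computed from the training data only.

```python
import math
import statistics

def nearest_label(train_X, train_y, query):
    """1-nearest-neighbor label using Euclidean distance."""
    best = min(range(len(train_X)), key=lambda i: math.dist(train_X[i], query))
    return train_y[best]

def fit_standardizer(train_X):
    """Per-feature mean and population standard deviation, from training data only."""
    columns = list(zip(*train_X))
    means = [statistics.mean(col) for col in columns]
    stds = [statistics.pstdev(col) or 1.0 for col in columns]  # avoid dividing by zero
    return means, stds

def standardize(X, means, stds):
    """z-score each feature: (value - mean) / std."""
    return [tuple((v - m) / s for v, m, s in zip(row, means, stds)) for row in X]

# Hypothetical features: (age in years, annual income)
X = [(25, 40000), (30, 42000), (60, 41000), (65, 43000)]
y = ["A", "A", "B", "B"]
query = (28, 43000)

raw = nearest_label(X, y, query)  # income differences dominate the distance
means, stds = fit_standardizer(X)
scaled = nearest_label(standardize(X, means, stds), y, standardize([query], means, stds)[0])
print(raw, scaled)  # B A
```

Unscaled, a 37-year age gap counts for less than a 1,000-unit income gap, so the query lands next to the 65-year-old; after standardization, age carries comparable weight and the 30-year-old becomes the nearest neighbor.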

How to Avoid These Mistakes

- Test Different Metrics: Experiment with different distance measures, such as Manhattan, Minkowski, cosine, or (for categorical features) Hamming distance, depending on the data type.

- Optimize Neighbor Count: Use techniques like cross-validation to find the optimal number of neighbors, avoiding overfitting or underfitting.

- Scale Your Features: Normalize numerical features to ensure that no single feature disproportionately influences the distance calculation.

- Validate Your Model: Always check the model’s performance on a separate test set or through cross-validation to ensure it generalizes well.

By being mindful of these common mistakes and following best practices, you can improve your problem-solving process and ensure better performance when applying this classification method.

How to Interpret KNN Results Accurately

Interpreting the outcomes of a classification task is crucial for understanding the model’s effectiveness and reliability. While achieving a high level of accuracy is important, it is equally essential to consider how well the model generalizes to unseen data. In this section, we’ll discuss how to interpret the results of a classification method accurately, ensuring you can draw meaningful conclusions from the predictions.

Key Metrics for Evaluation

- Accuracy: This is the percentage of correct classifications out of the total predictions. While it is useful, it can be misleading, especially in cases with imbalanced data.

- Precision: This metric indicates how many of the predicted positive cases are actually positive. It’s important when the cost of false positives is high, such as in spam filtering, where flagging a legitimate email is costly.

- Recall: Recall measures the ability of the model to identify all relevant instances. It’s crucial when missing a positive case is costly, such as in medical diagnosis.

- F1 Score: The F1 score is the harmonic mean of precision and recall, combining them into a single measure. It is particularly useful when you need to balance both false positives and false negatives.

- Confusion Matrix: This matrix displays the true positives, false positives, true negatives, and false negatives. It provides a more detailed picture of the model’s performance, helping to spot potential issues in classification.
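These metrics follow directly from the four confusion-matrix counts. The sketch below computes them for one chosen positive class; the function names are hypothetical, and reporting 0.0 for a ratio with a zero denominator is a convention assumed here. The example also shows why accuracy alone misleads on imbalanced data.

```python
def confusion_counts(y_true, y_pred, positive):
    """Return (TP, FP, TN, FN), treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    return tp, fp, tn, fn

def classification_metrics(y_true, y_pred, positive):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    # Convention used here: a ratio with a zero denominator is reported as 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / (tp + fp + tn + fn),
            "precision": precision, "recall": recall, "f1": f1}

# Imbalanced hypothetical labels: 95 negatives, 5 positives,
# and a model that always predicts the majority class
y_true = ["neg"] * 95 + ["pos"] * 5
y_pred = ["neg"] * 100
print(classification_metrics(y_true, y_pred, positive="pos"))
# accuracy is 0.95, yet precision, recall and F1 are all 0.0
```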

Steps to Interpret Results

- Step 1: Begin by reviewing the accuracy, but don’t rely on it alone. In cases with class imbalance, accuracy may not reflect the model’s true performance.

- Step 2: Calculate precision, recall, and the F1 score to assess how well the model performs on each class, particularly the ones that matter most for your application.

- Step 3: Analyze the confusion matrix to understand where the model is making errors. This helps identify whether the model is favoring one class over another.

- Step 4: Consider cross-validation results. A large gap between training and validation performance may indicate overfitting, while similar results on both suggest that the model generalizes well.

- Step 5: Evaluate the model in the context of the specific application. For example, in fraud detection, minimizing false negatives (high recall) may be more important than minimizing false positives (high precision).

By considering these metrics and steps, you can ensure a thorough and accurate interpretation of classification results. This allows you to make well-informed decisions based on the model’s performance and adapt your approach accordingly.

Essential Mathematical Principles for KNN

Understanding the core mathematical principles behind classification techniques is key to applying them effectively. The process relies heavily on fundamental concepts from linear algebra, geometry, and probability theory. In this section, we’ll explore the mathematical foundations that drive classification methods, focusing on distance measures, similarity calculations, and the decision-making process involved in predicting labels for new data points.

Key Mathematical Concepts

- Distance Metrics: The choice of distance metric determines how the similarity between two data points is calculated. Common distance measures include Euclidean, Manhattan, and Minkowski distances.

- Vector Space: Data points are often represented as vectors in a multi-dimensional space, where each feature corresponds to a dimension. Understanding how these vectors interact geometrically is essential for accurate classification.

- Nearest Neighbors: The algorithm identifies the nearest points in the feature space to classify a new data point. The mathematical principle of finding the “closest” point relies on calculating distances between vectors.

- Weighting of Neighbors: In some cases, neighboring points are weighted based on their distance to the new point. This affects the final classification, with closer neighbors contributing more to the decision.

Distance Measures Explained

| Distance Metric | Formula | Use Case |
|---|---|---|
| Euclidean | $d(x, y) = \sqrt{\sum_i (x_i - y_i)^2}$ | The most common choice; suited to continuous features on comparable scales. |
| Manhattan | $d(x, y) = \sum_i \lvert x_i - y_i \rvert$ | Grid-like (taxicab) distances on numeric features. For categorical data, a mismatch count such as Hamming distance is usually more appropriate. |
| Minkowski | $d(x, y) = \left(\sum_i \lvert x_i - y_i \rvert^p\right)^{1/p}$ | A generalization that includes both Manhattan ($p = 1$) and Euclidean ($p = 2$) distance. |
| Cosine Similarity | $\cos(x, y) = \frac{\sum_i x_i y_i}{\lVert x \rVert \, \lVert y \rVert}$ | Angle-based similarity, typically used in text classification; as a distance, use $1 - \cos(x, y)$. |
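The formulas translate almost line for line into code. This minimal sketch (hypothetical function names, plain Python sequences as vectors) also checks that Minkowski distance reproduces Manhattan at p = 1 and Euclidean at p = 2.

```python
import math

def minkowski(x, y, p):
    """(sum_i |x_i - y_i|^p)^(1/p); p = 1 gives Manhattan, p = 2 gives Euclidean."""
    return sum(abs(a - b) ** p for a, b in zip(x, y)) ** (1 / p)

def euclidean(x, y):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def manhattan(x, y):
    return sum(abs(a - b) for a, b in zip(x, y))

def cosine_similarity(x, y):
    """Dot product divided by the product of the vector lengths (norms)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)

x, y = (1, 2, 3), (4, 0, 3)
print(euclidean(x, y))                          # sqrt(13), about 3.606
print(manhattan(x, y))                          # 5
print(minkowski(x, y, 1), minkowski(x, y, 2))   # match the two lines above (up to rounding)
print(1 - cosine_similarity(x, y))              # cosine distance
```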

These mathematical principles are the foundation upon which classification methods rely. Mastery of these concepts will enable you to select the right distance metric and interpret results accurately, ensuring the method’s optimal application across different datasets.

Optimizing KNN for Exam Situations

In high-pressure scenarios such as time-constrained assessments, optimizing classification models is essential to achieve both accuracy and efficiency. This involves selecting the right parameters, choosing appropriate distance metrics, and managing the complexity of the data. Here, we’ll explore practical techniques for optimizing a classification method to ensure the best performance under examination conditions.

Key Optimization Strategies

- Feature Scaling: Ensuring that all features are on a similar scale is crucial, as distance-based methods are sensitive to varying scales. Common techniques include standardization and normalization.

- Choosing the Right Distance Metric: Select a metric that aligns with the nature of the data. For continuous features, Euclidean distance is often a good default, while for categorical features a mismatch count such as Hamming distance is usually more appropriate than Manhattan distance.

- Optimizing the Number of Neighbors: The choice of ‘k’ (the number of neighbors) can greatly impact performance. A small value can lead to overfitting, while a large value may smooth out important patterns. Cross-validation can help find the optimal value for ‘k’.

- Dimensionality Reduction: Reducing the number of features can improve performance by minimizing noise and speeding up computations. Techniques like PCA (Principal Component Analysis) or feature selection can be effective.

Practical Tips for Effective Optimization

- Step 1: Begin with basic scaling and choose a simple distance metric, such as Euclidean, to assess initial performance.

- Step 2: Experiment with different values of ‘k’ to find the balance between underfitting and overfitting. Values between 3 and 10 are a common starting range; in two-class problems, an odd k avoids tied votes.

- Step 3: Apply dimensionality reduction techniques if the dataset has many irrelevant or redundant features. This step can substantially reduce computation time, often with little or no loss of accuracy.

- Step 4: Use cross-validation to test the model’s generalization ability and ensure optimal settings for both ‘k’ and distance metrics.

By implementing these optimization strategies, you can ensure that the classification model runs efficiently and provides accurate predictions, even under the time constraints of an assessment setting.

Best Strategies for KNN Exam Success

Achieving success in assessments that involve classification techniques requires a solid understanding of key concepts, efficient problem-solving strategies, and a systematic approach to implementation. Preparation is essential, and mastering the fundamentals allows you to apply these methods accurately under time constraints. This section outlines the best strategies to excel in assessments focused on classification models.

Preparation Tips for Success

- Understand Core Concepts: Make sure you have a firm grasp of the basic principles behind classification tasks, including distance calculations, the role of neighbors, and the impact of different parameters.

- Master Distance Metrics: Know when and why to use various distance measures, such as Euclidean, Manhattan, or Minkowski, based on the nature of the data you’re working with.

- Practice with Sample Problems: Solve multiple practice problems to become familiar with the types of scenarios you might encounter. This will help improve your speed and accuracy during the assessment.

- Review Parameter Tuning: Understand how adjusting parameters like the number of neighbors (k) or choosing different distance metrics can affect the outcome. Practice selecting the optimal parameters for various datasets.

Time Management During the Assessment

- Break Down the Problem: Start by analyzing the problem step by step. Break it down into manageable components: data preparation, choosing a distance metric, selecting k, and testing the results.

- Prioritize Accuracy Over Speed: While it’s important to manage time, focus first on getting the correct results. Speed will improve as you become more confident in your approach.

- Double-Check Your Work: If time allows, review your steps to ensure that you’ve chosen the best parameters and applied the method correctly. A small error in selecting k or the distance metric can lead to incorrect conclusions.

By following these strategies, you will be well-equipped to tackle challenges in classification-related assessments. Understanding the theory, practicing key techniques, and managing your time effectively are the cornerstones of success.

Understanding KNN Distance Metrics

Distance metrics are a key component in classification tasks that use proximity-based techniques. These metrics determine how the similarity between data points is measured, which directly influences the performance of the model. The choice of metric can impact the results, especially when working with different types of data, such as continuous or categorical variables. In this section, we will explore the most commonly used distance measures and how they affect classification outcomes.

Types of Distance Metrics

There are several distance metrics available, each suited for specific types of data and use cases. Below is a summary of the most widely used distance measures:

| Metric | Description | Best for |
|---|---|---|
| Euclidean Distance | Measures the straight-line distance between two points in a multidimensional space. | Continuous numerical data |
| Manhattan Distance | Calculates the sum of absolute differences between the coordinates of two points. | Data with a grid-like structure (taxicab geometry) |
| Minkowski Distance | A generalization of Euclidean and Manhattan distances; the parameter $p$ controls its behavior. | Cases where you want to tune the metric itself, treating $p$ as a hyperparameter |
| Cosine Similarity | Measures the cosine of the angle between two vectors, focusing on orientation rather than magnitude. | Text data, or any data where the magnitude of the vectors is not relevant |
| Hamming Distance | Counts the positions at which two sequences of equal length differ. | Categorical data (e.g., binary or string-based features) |
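For categorical records, Hamming distance simply counts mismatched positions. The sketch below pairs it with a 1-nearest-neighbor lookup; the function names and the (color, size, shape) records are hypothetical.

```python
def hamming(a, b):
    """Number of positions at which two equal-length sequences differ."""
    if len(a) != len(b):
        raise ValueError("Hamming distance is only defined for equal-length sequences")
    return sum(x != y for x, y in zip(a, b))

def nearest_categorical(train_X, train_y, query):
    """1-nearest-neighbor label for purely categorical records, using Hamming distance."""
    best = min(range(len(train_X)), key=lambda i: hamming(train_X[i], query))
    return train_y[best]

# Hypothetical records: (color, size, shape)
X = [("red", "small", "round"), ("green", "large", "square"), ("red", "large", "round")]
y = ["apple", "box", "ball"]
print(hamming("karolin", "kathrin"))                          # 3
print(nearest_categorical(X, y, ("red", "small", "square")))  # apple
```

The query differs from the "apple" record in one position (shape) and from each of the other two in two positions, so "apple" is its nearest neighbor.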

Choosing the Right Metric

Selecting the correct distance measure depends on the nature of the data and the problem you’re solving. Below are some guidelines for choosing the best metric:

- Euclidean Distance: This is the most commonly used metric and works well for continuous variables. However, it assumes that all features have equal weight, so feature scaling is often required.

- Manhattan Distance: A good choice when the data lies on a grid or when working with high-dimensional spaces, where Euclidean distance may become less effective.

- Minkowski Distance: This is a flexible option that can adapt to different scenarios. By adjusting the parameter p, you can make it behave like Manhattan distance (p = 1) or Euclidean distance (p = 2).

- Cosine Similarity: Useful for high-dimensional sparse data, such as text, where the magnitude of the vectors is less important than the directionality of the data.

- Hamming Distance: Best suited for categorical data, especially in binary or string comparison tasks where you need to identify exact matches or mismatches.
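The difference between magnitude-sensitive and angle-based measures shows up clearly with word counts. In this hypothetical example, one document has the same word proportions as the query but is ten times longer; Euclidean distance treats it as far away, while cosine similarity treats it as the best match.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical word-count vectors over the same three-word vocabulary
query = (1, 2, 0)
docs = {
    "longer_same_topic": (10, 20, 0),  # same proportions, ten times longer
    "short_other_mix": (2, 1, 0),      # similar length, different proportions
}
by_euclidean = min(docs, key=lambda name: euclidean(docs[name], query))
by_cosine = max(docs, key=lambda name: cosine_similarity(docs[name], query))
print(by_euclidean, by_cosine)  # short_other_mix longer_same_topic
```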

Understanding the strengths and weaknesses of each distance metric will help you make informed decisions about which to use for your specific data and classification problem. Choosing the right metric is crucial for ensuring accurate results in proximity-based tasks.

Important KNN Variations and Their Uses

In classification and regression tasks, different variations of the standard proximity-based method have emerged to address specific challenges and improve model performance. These adaptations tweak the core algorithm to better handle particular types of data, computational constraints, or accuracy requirements. Understanding these variations is essential for applying the technique effectively across various problems.

Weighted Nearest Neighbors

The weighted nearest neighbors approach modifies the basic algorithm by assigning weights to neighboring points. Instead of treating each neighbor equally, closer neighbors are given more influence, commonly with a weight equal to the inverse of their distance to the target data point. This reduces the influence of distant neighbors, which would otherwise count as much as the closest ones in the vote.

- Potential Accuracy Gains: This variation tends to help in scenarios where closer points are more indicative of the target class or value.

- Handling Imbalanced Data: It can help somewhat with imbalanced datasets: because distant majority-class points count less, a few close minority-class neighbors can still decide the outcome.
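Inverse-distance weighting can be sketched by replacing the vote count with a sum of weights. The helper names, the small `eps` term (added here only to avoid division by zero on exact matches), and the 1-D data are hypothetical; the example is built so that plain majority voting and weighted voting disagree.

```python
import math
from collections import Counter, defaultdict

def k_nearest(train_X, train_y, query, k):
    """The k (point, label) pairs closest to the query by Euclidean distance."""
    return sorted(zip(train_X, train_y), key=lambda p: math.dist(p[0], query))[:k]

def majority_predict(train_X, train_y, query, k):
    nearest = k_nearest(train_X, train_y, query, k)
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def weighted_predict(train_X, train_y, query, k, eps=1e-9):
    """Each neighbor votes with weight 1 / distance, so closer points count more."""
    scores = defaultdict(float)
    for x, label in k_nearest(train_X, train_y, query, k):
        scores[label] += 1.0 / (math.dist(x, query) + eps)  # eps guards exact matches
    return max(scores, key=scores.get)

# Hypothetical 1-D data: one close "A" neighbor, two farther "B" neighbors
X = [(1.0,), (3.0,), (4.0,)]
y = ["A", "B", "B"]
print(majority_predict(X, y, (0.0,), k=3))  # B  (2 votes to 1)
print(weighted_predict(X, y, (0.0,), k=3))  # A  (about 1.0 vs 1/3 + 1/4)
```

In scikit-learn, the same idea is available through `KNeighborsClassifier(weights="distance")`.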

Radius-Based Nearest Neighbors

In contrast to the traditional method, which considers a fixed number of nearest neighbors, the radius-based approach considers all neighbors within a specified radius. This variation is particularly useful when the data distribution is not uniform, and defining a fixed number of neighbors does not yield optimal results.

- Adaptability: It adapts better to varying densities in the data, allowing the algorithm to include a different number of points depending on the local distribution.

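- Handling Sparse Regions: In sparse regions a query may have few or no neighbors inside the radius, so the radius must be chosen with the data density in mind, and implementations need a fallback (such as an outlier label) for queries with no neighbors.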
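A radius-based classifier replaces "the k closest points" with "every point within a fixed distance". This sketch (hypothetical function name and data) shows how the number of voters varies with local density and how a fallback label handles queries with no neighbors inside the radius.

```python
import math
from collections import Counter

def radius_predict(train_X, train_y, query, radius, outlier_label=None):
    """Majority vote among all training points within `radius` of the query.

    The number of voters varies with local density; if no point lies inside
    the radius, `outlier_label` is returned instead of a vote.
    """
    inside = [label for x, label in zip(train_X, train_y) if math.dist(x, query) <= radius]
    if not inside:
        return outlier_label
    return Counter(inside).most_common(1)[0][0]

# Hypothetical data: a dense "A" cluster and a single isolated "B" point
X = [(0, 0), (0, 1), (1, 0), (5, 5)]
y = ["A", "A", "A", "B"]
print(radius_predict(X, y, (0.5, 0.5), radius=1.0))                         # A (three voters)
print(radius_predict(X, y, (5, 4.5), radius=1.0))                           # B (one voter)
print(radius_predict(X, y, (10, 10), radius=1.0, outlier_label="unknown"))  # unknown
```

scikit-learn's `RadiusNeighborsClassifier` exposes a comparable fallback through its `outlier_label` parameter.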

Efficient KNN Variants for Large Datasets

When working with large datasets, the computational cost of traditional KNN methods can be prohibitively high, because a brute-force query computes the distance to every training point. Variations like approximate nearest neighbors (ANN) techniques or dimensionality reduction methods aim to increase the efficiency of the algorithm, allowing it to scale with the size of the data.

- Approximate Nearest Neighbors: Uses techniques like locality-sensitive hashing (LSH) to speed up the search process at the cost of a small reduction in accuracy.

- Dimensionality Reduction: Methods like PCA (Principal Component Analysis) reduce the number of features, making the search faster and less computationally expensive, often without significantly compromising accuracy.
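To make the LSH idea concrete, here is a sketch of one specific LSH family, random-hyperplane hashing for cosine similarity. It is a teaching sketch under stated assumptions, not a production index: the class name, the bit and table counts, and the random data are all hypothetical. Each table hashes a vector to the pattern of signs of its dot products with random hyperplanes, so vectors pointing in similar directions tend to land in the same bucket, and only those candidates are compared exactly.

```python
import math
import random
from collections import defaultdict

class HyperplaneLSH:
    """Random-hyperplane LSH for approximate cosine-similarity search (a sketch)."""

    def __init__(self, dim, n_bits=8, n_tables=4, seed=0):
        rng = random.Random(seed)
        # Each table: n_bits random Gaussian hyperplanes plus a bucket dictionary
        self.tables = [
            ([[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)], defaultdict(list))
            for _ in range(n_tables)
        ]

    @staticmethod
    def _signature(planes, v):
        # One bit per hyperplane: which side of the plane the vector falls on
        return tuple(sum(p_i * v_i for p_i, v_i in zip(plane, v)) >= 0 for plane in planes)

    def add(self, idx, v):
        for planes, buckets in self.tables:
            buckets[self._signature(planes, v)].append(idx)

    def candidates(self, v):
        """Indices sharing a bucket with `v` in at least one table."""
        found = set()
        for planes, buckets in self.tables:
            found.update(buckets.get(self._signature(planes, v), []))
        return found

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def approx_nearest(index, data, query):
    """Re-rank only the LSH candidates exactly; the true nearest neighbor can be missed."""
    cands = index.candidates(query)
    if not cands:
        return None
    return max(cands, key=lambda i: cosine_similarity(data[i], query))

# Hypothetical data: 500 random 16-dimensional vectors
rng = random.Random(1)
data = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(500)]
index = HyperplaneLSH(dim=16)
for i, v in enumerate(data):
    index.add(i, v)
query = data[42]
print(len(index.candidates(query)), approx_nearest(index, data, query))
```

More bits per table make buckets smaller (faster, but more misses); more tables raise the chance that a true neighbor shares at least one bucket with the query. This is the accuracy-for-speed trade-off the text describes.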

By understanding and selecting the right variation based on the problem at hand, you can greatly improve the performance and scalability of the model. Each variation has its strengths, and recognizing these can help you tackle specific challenges more effectively.

Preparing for KNN Theory Questions

When studying for theoretical topics related to proximity-based classification, it’s essential to focus on the core principles, algorithms, and their practical implications. Understanding the fundamental concepts, as well as the strengths and limitations of the method, will help you prepare for academic assessments and problem-solving scenarios. A solid grasp of these ideas is critical for tackling both theoretical inquiries and practical applications.

Mastering the Core Concepts

To answer theoretical questions effectively, start by mastering the foundational elements of the method. Understanding how the algorithm works, from calculating distances to classifying new data points based on neighboring instances, is key. Dive into how hyperparameters like the number of neighbors and distance metrics influence the model’s behavior and performance.

- Distance Metrics: Familiarize yourself with common distance measures like Euclidean, Manhattan, and Minkowski, and understand how each affects the classification results.

- Choosing the Right Parameters: Learn how the selection of the number of neighbors and distance metric influences the accuracy and generalization of the model.

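- Bias-Variance Tradeoff: Recognize how model complexity, controlled mainly by the number of neighbors, affects bias and variance. A small k yields flexible decision boundaries with low bias but high variance, while a large k smooths the boundaries, lowering variance at the cost of higher bias. Tuning k balances the two.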
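The core prediction step can be sketched in a few lines of Python. This is a minimal brute-force version under simple assumptions: numeric feature lists, a plain majority vote, and hypothetical function names `minkowski` and `knn_predict`. It is not a reference implementation. The `p` parameter shows how Minkowski distance covers both Manhattan (`p=1`) and Euclidean (`p=2`).

```python
from collections import Counter


def minkowski(a, b, p=2):
    """Minkowski distance; p=1 is Manhattan, p=2 is Euclidean."""
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)


def knn_predict(train_X, train_y, query, k=3, p=2):
    """Classify `query` by majority vote among its k nearest training points."""
    # Rank every training point by distance to the query and keep the k closest.
    nearest = sorted(range(len(train_X)), key=lambda i: minkowski(train_X[i], query, p))[:k]
    votes = Counter(train_y[i] for i in nearest)
    # On a tie, most_common keeps first-seen order, favoring the class met first among the neighbors.
    return votes.most_common(1)[0][0]


# Toy data: two small, well-separated groups.
train_X = [[1, 1], [1, 2], [2, 1], [6, 6], [6, 7], [7, 6]]
train_y = ["red", "red", "red", "blue", "blue", "blue"]
print(knn_predict(train_X, train_y, [1.5, 1.5], k=3))
print(knn_predict(train_X, train_y, [6.5, 6.5], k=3, p=1))
```

Varying `k` or `p` and watching how predictions near the class boundary change is a quick way to build intuition for the parameter questions above.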

Understanding Practical Applications

Equally important is the ability to apply theoretical knowledge to real-world scenarios. Many assessments test your understanding by presenting case studies or asking how to solve specific problems using proximity-based methods. Understand when and why this technique is appropriate, and be ready to discuss the advantages and limitations of using it in various situations.

- Imbalanced Data: Understand why a plain majority vote tends to favor the majority class when class distributions are uneven, and what adjustments (such as weighting neighbor votes or resampling the training data) can improve performance on minority classes.

- Computational Complexity: Be prepared to explain how the computational cost increases with larger datasets and how optimizations like approximate nearest neighbors can help mitigate this issue.

- Dimensionality and the Curse of Dimensionality: Discuss how high-dimensional data can degrade model performance, since distances between points become less informative as the number of features grows, and which dimensionality reduction techniques can be applied to address this.
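A quick experiment makes the curse of dimensionality concrete. The sketch below assumes uniformly distributed random points and uses a hypothetical helper, `relative_contrast`, to measure how much farther the farthest point is than the nearest one from a random query. As the dimension grows, this ratio shrinks. "Nearest" and "farthest" become harder to tell apart, which is exactly what hurts distance-based classification.

```python
import math
import random


def relative_contrast(dim, n_points=200, seed=0):
    """(max_dist - min_dist) / min_dist from one random query to uniform random points."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    query = [rng.random() for _ in range(dim)]
    dists = [math.dist(query, p) for p in points]
    return (max(dists) - min(dists)) / min(dists)


for dim in (2, 10, 100, 1000):
    # The contrast typically drops sharply as dim increases.
    print(dim, round(relative_contrast(dim), 3))
```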

By mastering these theoretical concepts, you’ll be well-prepared to answer a wide range of questions, both in written form and during practical assessments. With a deep understanding of how the method operates and how to adapt it to different scenarios, you’ll be able to approach each question with confidence and clarity.

Tips for Answering KNN Multiple-Choice Questions

When tackling multiple-choice assessments focused on proximity-based classification, having a strategy can make all the difference. The key to success lies in carefully evaluating each option, understanding core concepts, and eliminating incorrect choices based on your knowledge. This approach will not only improve accuracy but also save valuable time during the test.

Understand the Core Concepts

Before diving into the options, ensure that you have a solid understanding of the foundational principles. Proximity-based classification relies on a few essential factors, including how data points are compared, the significance of distance measures, and the impact of parameters like the number of neighbors. Review these concepts to guide your decision-making process and quickly eliminate answers that contradict basic principles.

- Distance Metrics: Be familiar with different metrics such as Euclidean and Manhattan, and understand which ones work best in various situations.

- Model Parameters: Know how parameters like the number of nearest neighbors affect the model, since they determine how sensitive it is to noise and local variation in the data.

- Overfitting vs. Underfitting: Recognize that choosing too few neighbors (for example, k = 1) tends to overfit the data, while choosing too many tends to underfit it.
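The effect of the number of neighbors can be seen by scoring a model on the same data it was fit to. This sketch uses hypothetical helpers and toy data with purely random labels. With `k=1`, every training label is reproduced, because each point is its own nearest neighbor, a textbook symptom of overfitting. With `k` equal to the training-set size, the model predicts the majority class for every point, a textbook symptom of underfitting.

```python
import random
from collections import Counter


def knn_predict(train_X, train_y, query, k):
    """Majority vote among the k nearest training points (squared Euclidean distance)."""
    nearest = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_X[i], query)),
    )[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def training_accuracy(X, y, k):
    """Accuracy when the model is scored on the same points it was fit to."""
    correct = sum(knn_predict(X, y, x, k) == label for x, label in zip(X, y))
    return correct / len(X)


# Toy data: 61 random points with random labels (odd count, so k = n cannot tie).
rng = random.Random(42)
X = [[rng.random(), rng.random()] for _ in range(61)]
y = [rng.choice(["a", "b"]) for _ in X]

print("k=1:", training_accuracy(X, y, k=1))       # each point is its own nearest neighbor
print("k=n:", training_accuracy(X, y, k=len(X)))  # every query gets the overall majority class
```

Because the labels here are noise, the perfect `k=1` training score says nothing about performance on new data. Only held-out evaluation reveals that.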

Strategize Your Approach

When confronted with a multiple-choice question, it’s important to methodically analyze each answer before selecting the most appropriate one. Start by reading the entire question carefully, identifying the key terms and concepts it addresses. Then, follow these steps:

- Eliminate Clearly Wrong Choices: If an option doesn’t make sense based on your understanding, rule it out immediately. For example, an option suggesting an incorrect distance metric or unrealistic parameter values can be discarded.

- Consider Edge Cases: Think about special scenarios, such as imbalanced datasets or high-dimensional data, and how they might affect the results.

- Double-Check Your Work: If you’re uncertain, re-evaluate the remaining options by considering how they align with core principles. Often, the best answer will align with the method’s known strengths and limitations.

By applying these techniques, you can confidently navigate multiple-choice questions related to classification methods. With a clear understanding of the key concepts and a strategic approach to the test, you’ll be able to answer efficiently and effectively.

How KNN is Tested in Practical Exams

In practical assessments focusing on classification algorithms, testing often involves applying theoretical knowledge to real-world datasets. These evaluations are designed to assess how well you can implement, tune, and interpret models based on proximity-based classification techniques. The task typically involves working with data, selecting appropriate methods, and ensuring that the model performs optimally under various conditions.

Key Tasks in Practical Assessments

During a hands-on test, several key tasks are typically included to assess your understanding of proximity-based classification. These tasks often test your ability to perform the following:

- Data Preprocessing: Preparing the dataset by handling missing values, normalizing or standardizing features (important because distance calculations are dominated by features with large numeric ranges), and splitting data into training and testing sets.

- Model Training: KNN is a lazy learner, so fitting the model mostly means storing the training data. The substantive choices are the number of nearest neighbors and the distance metric that let the model classify the data effectively.

- Model Evaluation: Evaluating the performance using metrics like accuracy, precision, recall, and F1-score to determine the model’s effectiveness.

- Parameter Tuning: Adjusting parameters such as the number of neighbors and distance weights to optimize model performance.
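Because KNN compares raw distances, a feature measured in large units can dominate the others. Below is a minimal standardization sketch that assumes purely numeric features and uses hypothetical helper names. The key design choice is that the mean and standard deviation are computed on the training split only and then reused for the test split.

```python
import statistics


def fit_standardizer(train_X):
    """Compute per-feature mean and standard deviation from the training split only."""
    columns = list(zip(*train_X))
    means = [statistics.fmean(col) for col in columns]
    # A constant feature has zero spread; fall back to 1.0 to avoid dividing by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds


def transform(X, means, stds):
    """Scale any split (train or test) with statistics learned from the training split."""
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]


# Hypothetical data: height in metres and mass in grams, on very different scales.
train_X = [[1.6, 55000.0], [1.8, 80000.0], [1.7, 65000.0], [1.5, 50000.0]]
test_X = [[1.75, 70000.0]]

means, stds = fit_standardizer(train_X)
train_scaled = transform(train_X, means, stds)
test_scaled = transform(test_X, means, stds)
print(test_scaled)
```

Reusing the training statistics keeps information from the test set out of preprocessing. Leaking it would otherwise make the evaluation look better than it is.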

Approaching Practical Challenges

In practical settings, you may face datasets with noise, class imbalance, or high dimensionality. Handling these challenges requires both technical knowledge and a methodical approach. Here are some common strategies to address these issues:

- Dealing with Imbalanced Data: Use stratified sampling when splitting the data so that every class is represented in both training and test sets. Consider resampling or weighting the votes so that minority classes are not simply outvoted.

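- Reducing the Feature Space: Use dimensionality reduction techniques like Principal Component Analysis (PCA) to shrink the feature space when working with high-dimensional data. Strictly speaking, PCA creates new combined features rather than selecting a subset of the original ones.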

- Choosing the Right Distance Metric: Depending on the problem, you may need to experiment with various distance metrics (e.g., Euclidean, Manhattan, or Minkowski) to see which one yields the best results.
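Stratified splitting can be sketched with the standard library alone. The function `stratified_split` below is a hypothetical helper, not a library API. (In scikit-learn, for example, the same effect comes from the `stratify` argument of `train_test_split`.) It shuffles each class separately and sends the same fraction of every class to the test set, so a rare class is not accidentally left out of evaluation.

```python
import random
from collections import defaultdict


def stratified_split(X, y, test_fraction=0.25, seed=0):
    """Split so each class keeps (roughly) the same proportion in train and test."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(y):
        by_class[label].append(i)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)  # same fraction taken from every class
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])

    def subset(idx):
        return [X[i] for i in idx], [y[i] for i in idx]

    return subset(train_idx), subset(test_idx)


# Hypothetical imbalanced data: 90 majority examples, 10 minority examples.
X = [[float(i)] for i in range(100)]
y = ["majority"] * 90 + ["minority"] * 10
(train_X, train_y), (test_X, test_y) = stratified_split(X, y, test_fraction=0.2)
print(test_y.count("minority"), "minority examples in the test set")
```

Stratification only preserves the existing class proportions and does not rebalance them. Resampling or weighting is still needed if the minority class is being outvoted.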

By focusing on these core tasks and applying the appropriate techniques, you can successfully navigate practical assessments and demonstrate your ability to apply proximity-based classification methods effectively.

Advanced KNN Topics for Exam Readiness

For those preparing for advanced assessments on classification methods, it is essential to explore the deeper, more technical aspects of distance-based models. These topics delve into complex concepts and techniques that go beyond basic implementations. Mastering these advanced topics will provide a comprehensive understanding, allowing you to tackle more challenging scenarios and questions with confidence.

Key Advanced Topics

Several advanced concepts in proximity-based classification are crucial for success in higher-level evaluations. Below are some of the most important areas to focus on:

- Distance Metric Selection: Understanding when to use different distance metrics, such as Euclidean, Manhattan, Minkowski, or cosine similarity, and how each affects model performance.

- Weighted Neighbors: Implementing distance-weighted classification, where closer neighbors contribute more to the classification decision, which can improve accuracy when the neighbors lie at very different distances.

- Dimensionality Reduction: Using techniques like PCA (Principal Component Analysis) to reduce the feature space while retaining most of the variance in the data, particularly when dealing with high-dimensional datasets.

- Curse of Dimensionality: Identifying and managing the challenges that arise when working with high-dimensional data, where distances between data points become less informative.

- Handling Imbalanced Datasets: Learning strategies to address class imbalance, such as adjusting decision boundaries or using oversampling and undersampling techniques.
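Distance-weighted voting is a small change to the basic vote. In this sketch, each of the `k` nearest neighbors votes with weight `1 / distance`, which is one common weighting scheme among several. The function name and the small `eps` guard against division by zero are illustrative assumptions.

```python
import math
from collections import defaultdict


def weighted_knn_predict(train_X, train_y, query, k=3, eps=1e-12):
    """Distance-weighted vote: each of the k nearest neighbors votes with weight 1 / distance."""
    nearest = sorted((math.dist(x, query), label) for x, label in zip(train_X, train_y))[:k]
    scores = defaultdict(float)
    for d, label in nearest:
        scores[label] += 1.0 / (d + eps)  # eps avoids division by zero on exact matches
    return max(scores, key=scores.get)


train_X = [[0.0], [3.0], [3.5]]
train_y = ["a", "b", "b"]
print(weighted_knn_predict(train_X, train_y, [0.5], k=3))
```

With a plain majority vote, the same query with `k=3` would be labeled `"b"`, since two of the three neighbors are `"b"`. Weighting lets the single very close `"a"` neighbor win.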

Performance Evaluation Techniques

When preparing for practical tests or theory-based assessments, evaluating the performance of a proximity-based classifier is essential. Here’s an overview of techniques used for assessing model effectiveness:

| Metric or Technique | Purpose |
|---|---|
| Accuracy | Measures the fraction of all instances classified correctly; can be misleading when classes are imbalanced. |
| Precision | Measures the fraction of positive predictions that are truly positive; important when false positives are costly. |
| Recall | Measures the fraction of actual positives the model finds; critical when false negatives (missed cases) are costly. |
| F1-Score | The harmonic mean of precision and recall, useful in situations where both false positives and false negatives matter. |
| Cross-Validation | Not a metric but an evaluation procedure: the model is trained and tested on different subsets of the data to check that it generalizes well. |
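The metrics in the table can be computed directly from predictions. The sketch below uses a hypothetical `classification_metrics` helper for one positive class. It returns `0.0` whenever a ratio is undefined, for example precision when nothing is predicted positive. That convention is an assumption, and libraries may handle the case differently. The example shows why accuracy alone can mislead on imbalanced data.

```python
def classification_metrics(y_true, y_pred, positive):
    """Accuracy, precision, recall and F1, treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = ["neg"] * 8 + ["pos"] * 2
y_pred = ["neg"] * 10  # a model that never predicts the minority class
print(classification_metrics(y_true, y_pred, positive="pos"))
```

Here accuracy is 0.8 even though recall is 0.0, because the model never finds a single positive case.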

By gaining proficiency in these advanced topics and understanding how to evaluate model performance effectively, you’ll be well-prepared to handle the more complex scenarios that may arise in higher-level assessments.

Key Takeaways for KNN Preparation

As you prepare for assessments focused on classification algorithms, it’s crucial to grasp the foundational and advanced principles of distance-based models. Mastering key concepts, understanding the intricacies of performance evaluation, and knowing how to implement various strategies will equip you with the knowledge needed to excel. Focus on a few core areas that can enhance your understanding and ability to apply these techniques effectively.

- Understand Core Concepts: Grasp the fundamentals such as how data points are classified based on proximity and the role of distance metrics like Euclidean and Manhattan distance. Recognize how these concepts influence decision-making in classification tasks.

- Know the Impact of Distance Metrics: Different tasks may require different distance measures. Be familiar with how to select the appropriate distance metric for various datasets and scenarios to optimize model performance.

- Focus on Performance Metrics: Understand the metrics used to evaluate model success, such as accuracy, precision, recall, and F1-score. Being able to calculate and interpret these metrics will help you assess the effectiveness of your model.

- Practice with Real-World Datasets: Apply the algorithm to practical datasets, especially those with varying complexities, such as imbalanced data or high-dimensional features. This experience will enhance your problem-solving skills during real assessments.

- Understand Overfitting and Underfitting: Learn to balance model complexity with the risk of overfitting or underfitting. In KNN the main complexity control is the number of neighbors, so a good grasp of cross-validation strategies for tuning k (and the distance metric) will help you find parameters that generalize.

- Prepare for Advanced Topics: Dive into more complex topics such as weighted neighbors, dimensionality reduction, and the curse of dimensionality. Mastery of these advanced areas will enable you to handle more challenging scenarios efficiently.

- Review Key Algorithms and Variations: Be aware of different variations and enhancements to the basic method, such as using different distance metrics, handling missing data, and dealing with large datasets.
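Choosing the number of neighbors by cross-validation can be sketched as follows. The helpers `knn_predict`, `cross_val_accuracy`, and `choose_k`, the fold assignment, and the candidate values are all illustrative assumptions. In scikit-learn, `GridSearchCV` wrapped around `KNeighborsClassifier` plays a similar role. Each candidate `k` is scored only on folds it was not fit on, so the chosen value reflects generalization rather than memorization. Odd candidates are used because they avoid tied votes in two-class problems.

```python
import random
from collections import Counter


def knn_predict(train_X, train_y, query, k):
    """Majority vote among the k nearest training points (squared Euclidean distance)."""
    nearest = sorted(
        range(len(train_X)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_X[i], query)),
    )[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def cross_val_accuracy(X, y, k, n_folds=5, seed=0):
    """Mean held-out accuracy of k-NN over n_folds shuffled folds."""
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    folds = [indices[f::n_folds] for f in range(n_folds)]
    scores = []
    for fold in folds:
        held_out = set(fold)
        train_X = [X[i] for i in indices if i not in held_out]
        train_y = [y[i] for i in indices if i not in held_out]
        correct = sum(knn_predict(train_X, train_y, X[i], k) == y[i] for i in fold)
        scores.append(correct / len(fold))
    return sum(scores) / n_folds


def choose_k(X, y, candidates=(1, 3, 5, 7, 9)):
    """Return the candidate k with the best cross-validated accuracy (first one on ties)."""
    return max(candidates, key=lambda k: cross_val_accuracy(X, y, k))


# Toy data: two tight, well-separated clusters of 20 points each.
X = [[i * 0.1, j * 0.1] for i in range(5) for j in range(4)]
X += [[10 + a, 10 + b] for a, b in X]
y = ["A"] * 20 + ["B"] * 20
print("chosen k:", choose_k(X, y))
```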

By focusing on these areas, you will be well-equipped to approach any challenge related to distance-based models, whether it’s theoretical understanding or practical application.