Successfully completing a certification in advanced computational techniques requires a deep understanding of the core principles and the ability to apply them practically. Whether you’re preparing for an assessment or striving to refine your skills, it’s crucial to focus on the most important topics covered throughout the course. Mastery of these areas will not only ensure a higher score but also enhance your ability to solve real-world problems effectively.

Problem-solving skills are central to excelling in this field, where theory meets practice. By familiarizing yourself with the key topics and approaches, you’ll gain the confidence to tackle a wide range of tasks and questions. As you prepare, understanding the structure and flow of the evaluation process will allow you to allocate your time wisely and prioritize critical areas that demand attention.

In this guide, we’ll explore the essential techniques, strategies, and common challenges faced during assessments, offering helpful insights to support your preparation. From practical exercises to theoretical knowledge, each section is designed to guide you through the journey toward success. Emphasizing both theoretical understanding and hands-on experience, you’ll be ready to demonstrate your capabilities and achieve your goals.

Overview of IBM Machine Learning Exam

This section describes the evaluation process, which tests your proficiency in key computational techniques and your ability to apply them in real-world scenarios. The assessment covers both theoretical knowledge and practical skills across a variety of topics. The format often includes a mix of multiple-choice questions, coding tasks, and scenario-based problems, designed to challenge learners at different levels of expertise.

The purpose of this review is to give you a clear understanding of what to expect and help you prepare effectively. With the right focus on key concepts and practical applications, you’ll be able to approach the evaluation with confidence. Below is a brief overview of the core areas covered in the test.

| Topic | Key Focus Areas |
|---|---|
| Fundamentals of Data Analysis | Data cleaning, transformation, and exploration techniques |
| Algorithm Understanding | Core models and methods for data analysis and prediction |
| Practical Application | Implementing models, tuning parameters, and optimizing solutions |
| Performance Evaluation | Assessing accuracy, precision, recall, and other metrics |
| Problem Solving | Applying techniques to solve real-world business challenges |

By understanding the structure of the assessment and the topics covered, you can target your study efforts more effectively, ensuring that you are prepared to demonstrate both theoretical knowledge and hands-on experience.

Key Concepts in Python for ML

To effectively tackle computational tasks and data-related challenges, familiarity with a set of essential programming concepts is crucial. These foundational ideas serve as the building blocks for more advanced techniques, enabling individuals to implement various algorithms, manipulate data, and evaluate results efficiently. Understanding these concepts is not just about writing code but knowing how to apply it to real-world problems.

Core Libraries and Tools

Several libraries are indispensable when working on computational models and data-driven tasks. These tools streamline the process of data handling, model building, and performance evaluation. Some key libraries include:

- NumPy: Provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these structures.

- Pandas: Essential for data manipulation, offering data structures like Series and DataFrame for working with structured data.

- Matplotlib: Used for plotting graphs and visualizing results, helping to present data in an accessible and interpretable format.

- Scikit-learn: A robust library for implementing and evaluating various statistical models, classifiers, and regressors.

Data Handling and Transformation

Data preparation is a critical step in any analytical task, and it involves several processes that ensure your dataset is ready for analysis. Key aspects include:

- Data Cleaning: Handling missing values (by removing or imputing them) and removing duplicates and irrelevant information to ensure the dataset is accurate and usable.

- Feature Engineering: Creating new features from existing data to improve model performance and understanding.

- Normalization and Scaling: Ensuring that the dataset’s numerical features are on a similar scale to improve algorithm performance.
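The three steps above can be sketched with Pandas as follows. This is a minimal illustration rather than a prescribed workflow: the dataset, the column names, and the choice of median imputation and min-max scaling are all assumptions made for the example.

```python
import pandas as pd

# Hypothetical toy dataset with one duplicate row and one missing value.
raw = pd.DataFrame({
    "age": [25, 32, 32, None, 51],
    "income": [40000, 52000, 52000, 61000, 88000],
})

# Data cleaning: drop exact duplicate rows, then fill the missing age with the median.
clean = raw.drop_duplicates().reset_index(drop=True)
clean["age"] = clean["age"].fillna(clean["age"].median())

# Feature engineering: derive a new column from existing ones (illustrative only).
clean["income_per_year_of_age"] = clean["income"] / clean["age"]

# Normalization: min-max scale every numeric column onto the [0, 1] range.
scaled = (clean - clean.min()) / (clean.max() - clean.min())

print(scaled)
```

Median imputation is only one option; dropping incomplete rows or using a model-based imputer may suit other datasets better.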

By mastering these concepts and tools, you will be well-equipped to build models, process data efficiently, and solve complex problems across a wide range of applications.

Understanding the Course Structure

The structure of any comprehensive program is designed to guide learners from basic concepts to more advanced applications, ensuring they can apply their knowledge effectively. This particular course is tailored to offer both theoretical insights and hands-on practice, creating a well-rounded approach to skill acquisition. Through a combination of lectures, practical exercises, and assessments, participants can build a strong foundation in key areas and progressively develop more complex abilities.

Course Modules Overview

The program is organized into several modules, each focusing on a different aspect of the subject. Here’s a breakdown of the key topics covered throughout:

- Introduction to Core Concepts: Basic principles, terminology, and foundational knowledge.

- Data Preparation: Techniques for cleaning, transforming, and organizing datasets.

- Model Building: Exploring different algorithms, frameworks, and methods for creating effective models.

- Model Evaluation: Understanding performance metrics, overfitting, and other evaluation techniques.

- Advanced Topics: Diving into optimization, real-world applications, and fine-tuning approaches.

Assessments and Practical Assignments

Throughout the course, learners are encouraged to apply their knowledge through a series of practical tasks and quizzes. These assignments help solidify the theoretical aspects by offering opportunities to engage directly with the material and solve relevant problems. The final part of the program typically involves a comprehensive project that synthesizes the learned material.

By following this structured approach, students can gain a deep understanding of the subject, ensuring they are well-prepared to tackle challenges in real-world applications.

Common Challenges in ML Exams

When preparing for an assessment that tests computational techniques and data-related tasks, there are several hurdles that learners commonly face. These challenges often arise due to the complexity of the subject matter, the need for both theoretical understanding and practical application, and the time constraints typically associated with such evaluations. Identifying these difficulties early on can help you prepare more effectively and approach the assessment with confidence.

Complexity of Problem Solving

One of the primary challenges is the level of complexity involved in solving problems during the assessment. Many tasks require not just knowledge of algorithms but also the ability to modify and adapt them to fit the specific problem at hand. This often means applying multiple techniques and making decisions about which method or model will work best for a given scenario. Students often struggle to think critically about how to approach these problems under time pressure.

- Understanding the underlying concepts of algorithms

- Choosing the right approach for specific tasks

- Dealing with incomplete or ambiguous data

Time Management and Pacing

Another significant challenge is effectively managing time during the test. With a limited amount of time to complete multiple tasks, students often find it difficult to balance between writing code, testing solutions, and reviewing their work. Proper time allocation is essential to ensure that all parts of the assessment are completed thoroughly, and rushing through tasks can lead to careless mistakes.

- Prioritizing tasks based on difficulty

- Balancing coding and debugging time

- Ensuring enough time for review and refinement

By recognizing these common challenges, you can adopt strategies to overcome them, whether through better preparation, practice, or adopting more efficient problem-solving techniques during the assessment.

Essential Python Libraries for Machine Learning

In the realm of data analysis and computational tasks, the right set of tools can significantly enhance your ability to solve complex problems. Python, being one of the most popular languages for such tasks, offers a wide range of libraries that streamline various stages of data processing, modeling, and evaluation. Familiarity with these libraries is crucial for both beginners and experienced practitioners alike, as they provide the building blocks for working efficiently with large datasets and implementing sophisticated models.

Core Libraries for Data Handling

Data manipulation and preparation form the foundation of any analytical task. Several libraries excel in handling data, allowing users to clean, transform, and analyze it seamlessly. Some of the most widely used libraries for this purpose include:

- NumPy: A fundamental library for numerical operations, providing support for multi-dimensional arrays and matrices, along with a suite of mathematical functions to process them.

- Pandas: Essential for handling structured data, offering powerful tools like DataFrame for easy manipulation, cleaning, and analysis of tabular data.

- OpenCV: A computer vision library for reading, transforming, and manipulating images and videos, which is useful when a dataset consists of visual data.

Libraries for Model Building and Evaluation

Once the data is prepared, the next step is to build and evaluate models. There are several Python libraries that provide robust methods for model implementation, training, and testing:

- Scikit-learn: A go-to library for a wide range of algorithms, offering tools for classification, regression, clustering, and dimensionality reduction, as well as utilities for model evaluation.

- TensorFlow: A powerful framework for building and training deep learning models, widely used in both research and production environments.

- Keras: A high-level neural network API, bundled with TensorFlow as tf.keras, designed to simplify the process of creating neural networks with a user-friendly interface.

Mastering these libraries is key to effectively implementing models and extracting valuable insights from data, making them indispensable tools in any data scientist’s toolkit.

Step-by-Step Approach to Exam Preparation

Effective preparation for an assessment requires a clear strategy and consistent effort. Rather than cramming the night before, breaking down the study process into manageable steps allows you to gradually build up the knowledge and skills needed to succeed. By focusing on each stage of preparation, you ensure that you’re well-prepared and confident when the test day arrives. The following approach will help you organize your study plan and tackle the material systematically.

Phase 1: Review the Syllabus and Identify Key Areas

Start by thoroughly reviewing the syllabus or outline of the course to identify the most important topics. This will give you a clear idea of what areas to focus on and help you prioritize your study time effectively. Pay special attention to any topics that are highlighted as crucial or that have appeared frequently in practice exercises or quizzes.

- Go over all the main themes covered during the course.

- Identify areas you feel less confident about and need more time to review.

- Note any specific techniques, algorithms, or tools that are key to the subject matter.

Phase 2: Study the Theory and Key Concepts

Once you have your areas of focus, dive into the theoretical aspects of each topic. Make sure to understand the key concepts and their applications. This phase is about building a solid foundation of knowledge that will help you when it comes to problem-solving tasks during the assessment.

- Read through textbooks, online resources, and lecture notes to reinforce your understanding.

- Summarize important concepts in your own words to ensure deeper comprehension.

- Create mind maps or flashcards to aid memory retention and quick review.

Phase 3: Practice Through Problem Solving

Theoretical knowledge alone is not enough: applying what you’ve learned to real problems is essential. Practice solving different types of problems to gain hands-on experience and improve your problem-solving speed and accuracy.

- Work through practice exercises and coding challenges related to the material.

- Simulate real exam conditions by solving problems within a set time frame.

- Review your solutions and focus on areas where you made mistakes to learn from them.

Phase 4: Review and Reinforce Weak Areas

Before the assessment, make sure to revisit any topics where you feel unsure. Use your notes, practice exercises, and study groups to clarify concepts and strengthen your understanding. Reinforcement is key to ensuring you retain information under pressure.

- Revisit challenging topics and practice related problems.

- Ask peers or instructors for clarification if certain concepts are still unclear.

- Use online forums and communities to discuss tricky topics and get insights from others.

By following this structured approach, you can ensure that you’re not only prepared but well-equipped to tackle the challenges of the assessment with confidence and competence.

How to Solve Practical ML Problems

Solving real-world computational tasks requires a structured approach that combines both theoretical knowledge and hands-on experience. In practical scenarios, you must go beyond just understanding algorithms and models; you need to effectively implement and adapt them to address specific challenges. The ability to identify the right techniques, preprocess data appropriately, and evaluate model performance is crucial to solving practical problems efficiently.

Step 1: Understand the Problem

The first step in solving any problem is to fully understand the task at hand. This means gathering all necessary information, clarifying objectives, and defining the scope. It’s important to ask the right questions and determine exactly what you’re trying to achieve. Are you dealing with a classification, regression, or clustering task? Understanding the problem type will guide the selection of algorithms and approaches.

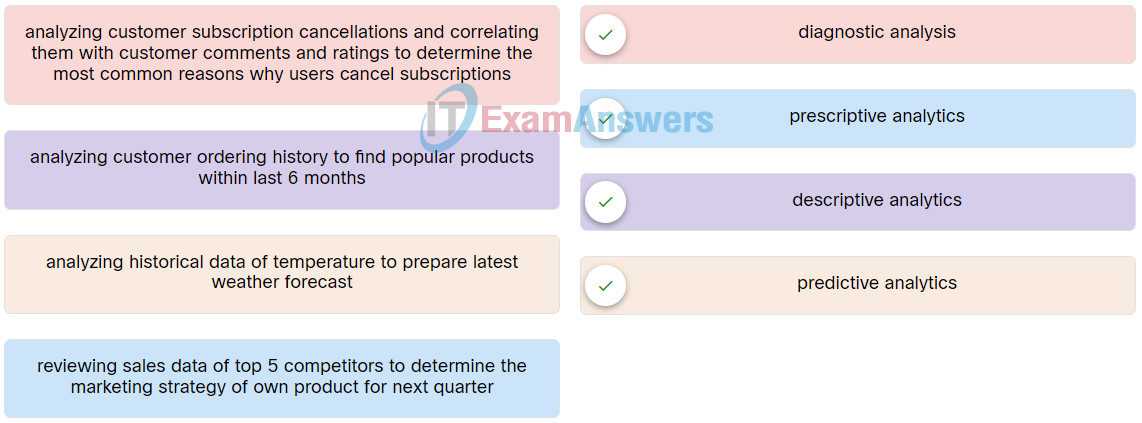

- Clarify the goal: Is it predictive, descriptive, or exploratory?

- Identify the target variable and features that may be relevant.

- Determine any constraints, such as time limits or computational resources.

Step 2: Prepare and Explore the Data

Before diving into model building, you need to prepare and explore the data. This step involves cleaning the dataset, handling missing values, normalizing or scaling features, and identifying potential outliers. Data exploration, such as visualizing distributions or correlations, helps uncover patterns and relationships that might not be obvious at first glance.

- Clean the data: Remove duplicates, handle missing values, and standardize formats.

- Explore the data: Use descriptive statistics and visualizations to understand the distributions and relationships.

- Feature engineering: Create new features or transform existing ones based on insights from data exploration.
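As a rough sketch of the exploration step, the snippet below computes descriptive statistics and correlations and flags potential outliers. The dataset is hypothetical, and the 1.5 × IQR rule is just one common convention for spotting outliers, not the only valid one.

```python
import pandas as pd

# Hypothetical dataset: hours studied versus exam score.
df = pd.DataFrame({
    "hours_studied": [1, 2, 3, 4, 5, 6],
    "exam_score": [52, 55, 61, 68, 74, 79],
})

# Descriptive statistics: count, mean, std, min, quartiles, and max per column.
summary = df.describe()

# Pairwise Pearson correlation between the numeric columns.
correlations = df.corr()

# Flag potential outliers in one column with the 1.5 * IQR rule.
q1, q3 = df["exam_score"].quantile([0.25, 0.75])
iqr = q3 - q1
is_outlier = (df["exam_score"] < q1 - 1.5 * iqr) | (df["exam_score"] > q3 + 1.5 * iqr)
outliers = df[is_outlier]

print(summary)
print(correlations)
print(f"Outliers found: {len(outliers)}")
```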

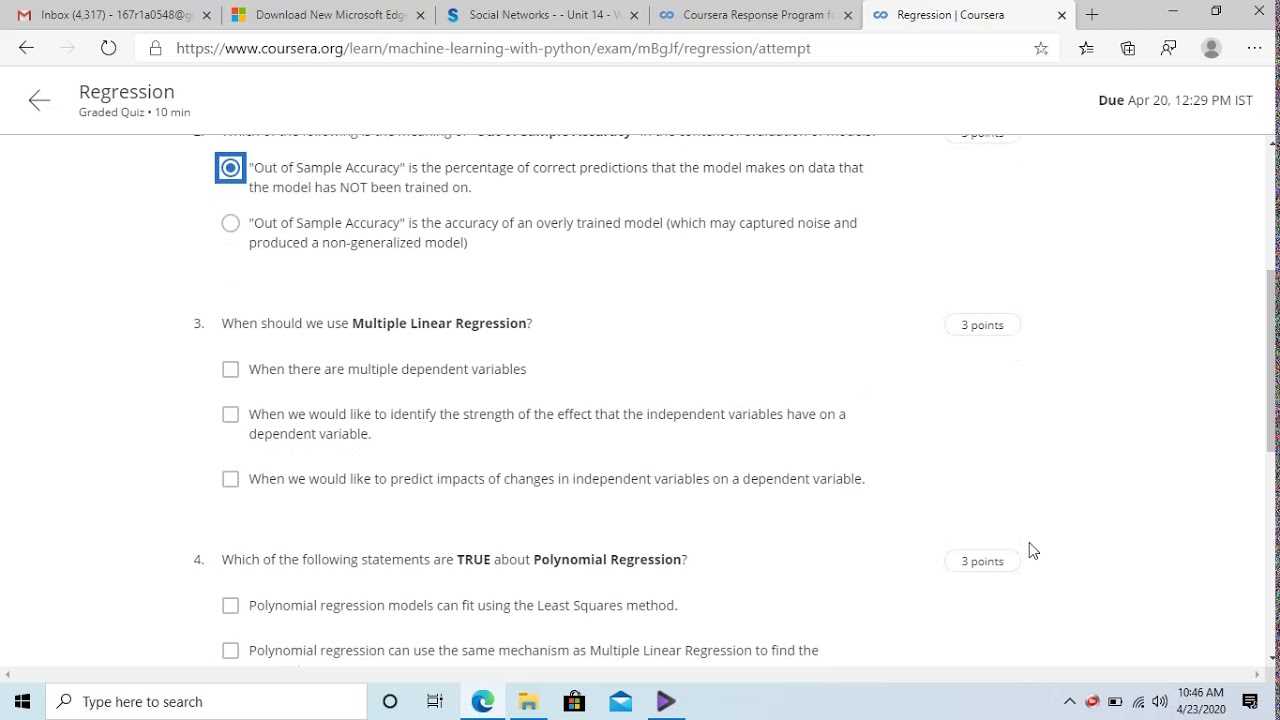

Step 3: Select the Right Model and Algorithm

Choosing the right algorithm is essential for solving the problem effectively. Depending on the nature of the task (classification, regression, etc.), different models will be more appropriate. It’s often helpful to start with simpler models and gradually progress to more complex ones if necessary. Cross-validation techniques and hyperparameter tuning can further help optimize the model’s performance.

- Start with simple algorithms: Test baseline models like linear regression or decision trees.

- Progress to more complex methods: Use ensemble methods or deep learning models if appropriate.

- Optimize performance: Fine-tune hyperparameters and use techniques like regularization to improve results.
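One way to put this progression into practice with scikit-learn is sketched below: a simple baseline scored with cross-validation, followed by a small hyperparameter search. The Iris dataset, the two model choices, and the depth grid are assumptions picked to keep the example short.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)

# Baseline: a simple, regularized linear classifier scored with 5-fold cross-validation.
baseline = LogisticRegression(max_iter=1000)
baseline_scores = cross_val_score(baseline, X, y, cv=5)

# Hyperparameter tuning: search over tree depth, again with 5-fold cross-validation.
search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid={"max_depth": [1, 2, 3, 4, 5]},
    cv=5,
)
search.fit(X, y)

print(f"Baseline mean accuracy: {baseline_scores.mean():.3f}")
print(f"Best tree depth: {search.best_params_['max_depth']}")
print(f"Best tree mean accuracy: {search.best_score_:.3f}")
```

If the tuned model does not clearly beat the baseline, the simpler model is usually the safer choice.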

Step 4: Evaluate and Interpret the Model

After training the model, evaluating its performance is key. Use appropriate evaluation metrics like accuracy, precision, recall, or mean squared error, depending on the task. Interpretation of results is also essential to ensure that the model is not only performing well but also making decisions in a meaningful way. This step includes understanding why certain predictions were made and ensuring that the model is not biased or overfitted.

- Evaluate model performance using relevant metrics.

- Check for overfitting or underfitting and adjust accordingly.

- Interpret the model’s decisions to ensure they align with domain knowledge.
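A simple way to check for overfitting is to compare accuracy on the training data with accuracy on held-out data. The sketch below does this for decision trees of different depths; the dataset, the split ratio, and the depths are illustrative assumptions.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

def train_and_score(max_depth):
    """Fit a tree of the given depth and return (train accuracy, test accuracy)."""
    model = DecisionTreeClassifier(max_depth=max_depth, random_state=0)
    model.fit(X_train, y_train)
    return model.score(X_train, y_train), model.score(X_test, y_test)

# max_depth=None lets the tree grow until it fits the training data as closely as it can.
for depth in (1, 3, None):
    train_acc, test_acc = train_and_score(depth)
    gap = train_acc - test_acc
    print(f"max_depth={depth}: train={train_acc:.3f}, test={test_acc:.3f}, gap={gap:.3f}")
```

A large gap between training and test accuracy suggests overfitting, while low scores on both suggest underfitting.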

By following these steps, you can methodically approach and solve practical challenges in computational tasks, making sure that your solutions are both effective and grounded in sound techniques.

Common Mistakes to Avoid in ML Exams

In any assessment related to computational tasks, it’s easy to fall into certain traps that can negatively impact performance. These common pitfalls often arise from misunderstandings, lack of preparation, or rushing through critical steps. Identifying and avoiding these mistakes can significantly improve your chances of success and help you tackle the questions more effectively.

One of the most frequent mistakes is failing to thoroughly understand the problem before diving into the solution. Without a clear grasp of the task, it’s easy to make incorrect assumptions or apply inappropriate techniques. Another common issue is neglecting proper data preprocessing, which can lead to unreliable results and skewed evaluations. Skipping the exploration of the data and directly jumping into model selection can cause major flaws in your approach.

Additionally, students often underestimate the importance of evaluating models correctly. Blindly assuming that a model is performing well without checking for overfitting or understanding the evaluation metrics can lead to misleading conclusions. It’s also important not to rush through the implementation of algorithms, as small coding errors or overlooked details can affect the outcome.

By being aware of these mistakes, you can take a more cautious and methodical approach, ensuring that your solutions are both accurate and well-thought-out.

Time Management Tips for Final Exam

Effective time management is essential when preparing for any assessment. With the pressure of multiple tasks and limited time, it’s easy to become overwhelmed. By organizing your study schedule and focusing on key areas, you can maximize productivity and ensure that you’re well-prepared. Properly allocating your time allows you to balance learning and revision efficiently, ensuring that no topic is overlooked.

Create a Study Plan

One of the most important steps in managing time effectively is creating a study plan. This allows you to break down complex topics into manageable chunks and allocate specific times for each. Having a clear roadmap for your preparation helps you stay focused and prevents procrastination.

- Start by identifying the key areas that need attention.

- Set realistic time slots for each topic, considering their complexity.

- Prioritize areas of weakness, but don’t neglect stronger topics.

Practice Timed Questions

Simulating real conditions by practicing under time constraints can significantly improve your performance. It’s crucial to get accustomed to the format and time limits of the assessment so you can work efficiently. This will also help you identify areas where you need to speed up or focus more attention.

- Use past papers or mock tests to practice solving questions under time pressure.

- Focus on time management techniques like reading questions quickly and prioritizing tasks.

- Review and assess your performance after each practice session to find areas for improvement.

Stay Organized and Avoid Cramming

Staying organized throughout the preparation period is essential for effective time management. Avoid cramming the night before the assessment, as it can lead to stress and poor retention. Consistent, manageable study sessions allow for better understanding and long-term retention of material.

| Time Management Tips | Benefits |
|---|---|
| Set Specific Goals | Improves focus and clarity on what needs to be done. |
| Take Breaks | Helps avoid burnout and maintains productivity levels. |
| Review Regularly | Enhances retention and reinforces learning. |

By implementing these strategies, you can manage your time effectively, reduce stress, and perform at your best during the assessment.

Best Resources for Study and Practice

When preparing for any assessment, having access to high-quality resources can make a significant difference in your success. The right materials help reinforce concepts, provide practical experience, and allow you to assess your understanding. Whether you’re looking for tutorials, exercises, or interactive platforms, there are numerous resources that cater to different learning styles and needs.

Books, online courses, and forums are great starting points for building a solid foundation of knowledge. Interactive platforms such as coding challenges and quizzes allow for hands-on practice, which is essential in retaining information and gaining real-world experience. Utilizing a combination of these resources will ensure well-rounded preparation and a deeper understanding of the subject matter.

Books and eBooks

Books are a traditional yet invaluable resource for in-depth learning. They provide structured content and explain fundamental principles step-by-step. Some of the best books to consider include:

- Data Science from Scratch by Joel Grus – A comprehensive guide that covers essential programming concepts and their applications.

- Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow by Aurélien Géron – A practical approach to building models and understanding their underlying mechanics.

Online Learning Platforms

Interactive online platforms offer courses that cater to all skill levels, from beginners to advanced learners. These platforms provide video lectures, exercises, and community support, which enhance the learning experience:

- Coursera – Offers in-depth courses, often taught by university professors, with hands-on assignments.

- edX – Provides a wide range of courses from universities around the world, covering both theoretical and practical aspects.

Coding Practice Websites

Practical coding challenges are essential for mastering the techniques and algorithms used in this field. These websites allow you to practice solving real-world problems in an interactive environment:

- LeetCode – Provides coding challenges focused on algorithms, data structures, and system design.

- Kaggle – Offers datasets, competitions, and notebooks where you can practice your skills and learn from others in the community.

Community and Discussion Forums

Engaging with others in the community can offer unique insights and solutions to difficult problems. Forums allow for the exchange of ideas, troubleshooting advice, and the opportunity to ask questions about concepts you may find challenging:

- Stack Overflow – A platform where you can ask technical questions and receive answers from the programming community.

- Reddit (r/learnmachinelearning) – A community-driven forum that provides resources, discussions, and peer support on various topics.

By incorporating these resources into your study plan, you’ll be better prepared to tackle any challenges and gain a strong command of the subject matter.

How to Interpret Machine Learning Results

Understanding the outcomes of predictive models is crucial to assessing their effectiveness and reliability. Interpreting the results allows practitioners to evaluate the model’s performance, identify areas for improvement, and make informed decisions based on the insights gained. Proper interpretation involves analyzing various metrics and visualizations that reflect how well the model generalizes to new, unseen data.

Key Metrics for Evaluation

When interpreting model outcomes, several key metrics are commonly used to assess the quality and accuracy of predictions. These metrics provide valuable insights into how well the model fits the data and whether it is overfitting or underfitting.

- Accuracy – Measures the percentage of correct predictions compared to the total number of predictions. It is a useful general metric but can be misleading in imbalanced datasets.

- Precision and Recall – Precision measures the proportion of positive predictions that are true positives, while recall measures the proportion of actual positives that are correctly identified. These metrics are essential when dealing with imbalanced data.

- F1 Score – The harmonic mean of precision and recall, useful when balancing both is important in a model’s performance.

- Confusion Matrix – A table that summarizes the performance of a classification algorithm by comparing predicted values with actual outcomes.

- Mean Absolute Error (MAE) and Mean Squared Error (MSE) – Common metrics for regression tasks that measure the difference between predicted and actual values. MAE gives the average of absolute errors, while MSE gives more weight to larger errors.
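To make the classification metrics concrete, the sketch below computes them with scikit-learn on a small, hypothetical set of labels in which positives are rare. The labels are invented for illustration.

```python
from sklearn.metrics import (
    accuracy_score,
    confusion_matrix,
    f1_score,
    precision_score,
    recall_score,
)

# Hypothetical imbalanced labels: 8 negatives and 2 positives.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 0, 0, 1, 1, 0]

# Rows are actual classes, columns are predicted classes.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

accuracy = accuracy_score(y_true, y_pred)    # (tp + tn) / total = 8 / 10
precision = precision_score(y_true, y_pred)  # tp / (tp + fp) = 1 / 2
recall = recall_score(y_true, y_pred)        # tp / (tp + fn) = 1 / 2
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall

print(f"TN={tn} FP={fp} FN={fn} TP={tp}")
print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Here accuracy is 0.80 even though only half of the actual positives are found, which shows why accuracy alone can mislead on imbalanced data.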

Visualizing Model Performance

Visualizations are a powerful tool for interpreting model results. They help to clearly highlight where the model excels and where it might be failing. Some common types of visual aids include:

- ROC Curve (Receiver Operating Characteristic) – Plots the true positive rate versus the false positive rate at various thresholds, helping to understand the trade-off between sensitivity and specificity.

- Precision-Recall Curve – Especially useful in imbalanced datasets, this curve shows the trade-off between precision and recall for different threshold values.

- Learning Curves – These plots show how the model’s training and validation performance change as training progresses or as the training set grows, helping to identify whether the model is overfitting or underfitting.
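The points of a ROC curve and the area under it can be computed as sketched below. The labels and scores are a tiny hypothetical example; in practice the scores would come from a fitted model, for instance via its `predict_proba` method.

```python
from sklearn.metrics import roc_auc_score, roc_curve

# Hypothetical true labels and predicted scores for the positive class.
y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]

# Each threshold yields one (false positive rate, true positive rate) point.
fpr, tpr, thresholds = roc_curve(y_true, y_score)

# Area under the ROC curve: 0.5 is chance level, 1.0 is a perfect ranking.
auc = roc_auc_score(y_true, y_score)

for f, t in zip(fpr, tpr):
    print(f"FPR={f:.2f}  TPR={t:.2f}")
print(f"AUC={auc:.2f}")
```

The resulting `fpr` and `tpr` arrays can be passed to a plotting library such as Matplotlib to draw the curve.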

Interpreting the results of any predictive model involves both numerical and visual analysis. By combining these insights, practitioners can identify model strengths and weaknesses, enabling them to make adjustments and improve performance.

Key Algorithms Covered in the Exam

Understanding the key algorithms used in predictive modeling is essential for tackling complex tasks. These algorithms form the foundation for creating models that can analyze data, make predictions, and uncover patterns. A strong grasp of these techniques is crucial for success in assessments and real-world applications alike. In this section, we will explore the most important algorithms that are often featured in tests related to data analysis and prediction tasks.

Supervised Learning Algorithms

Supervised learning algorithms are used when the model is trained on labeled data. These algorithms aim to map inputs to outputs based on past observations, making them essential for classification and regression tasks.

- Linear Regression – A simple algorithm for predicting continuous outcomes based on the relationship between input variables and a target variable.

- Logistic Regression – Used for binary classification problems, this algorithm predicts the probability of an outcome based on one or more input features.

- Decision Trees – A hierarchical model that splits data into smaller subsets to make decisions based on input features. It can be used for both classification and regression tasks.

- Support Vector Machines (SVM) – A powerful classifier that finds the hyperplane that best separates different classes of data in high-dimensional space.

- K-Nearest Neighbors (KNN) – A simple, instance-based algorithm that classifies data points based on the majority class of their nearest neighbors.
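To show how one of these algorithms works internally, here is a minimal from-scratch sketch of K-Nearest Neighbors classification using NumPy. The function name `knn_predict`, the Euclidean distance metric, and the toy data are assumptions for illustration; a library implementation such as scikit-learn's `KNeighborsClassifier` would normally be used instead.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x_new, k=3):
    """Classify x_new by majority vote among its k nearest training points."""
    # Euclidean distance from x_new to every training point.
    distances = np.linalg.norm(X_train - x_new, axis=1)
    # Indices of the k closest training points.
    nearest = np.argsort(distances)[:k]
    # Majority class among those neighbors.
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical training data: two well-separated groups labelled "a" and "b".
X_train = np.array([
    [1.0, 1.0], [1.5, 2.0], [2.0, 1.0],
    [6.0, 6.0], [7.0, 5.5], [6.5, 7.0],
])
y_train = np.array(["a", "a", "a", "b", "b", "b"])

print(knn_predict(X_train, y_train, np.array([1.2, 1.4])))
print(knn_predict(X_train, y_train, np.array([6.4, 6.1])))
```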

Unsupervised Learning Algorithms

Unsupervised learning algorithms are used when the model is trained on data without labeled outcomes. These algorithms identify patterns or structures in the data, making them useful for clustering, dimensionality reduction, and anomaly detection.

- K-Means Clustering – A popular algorithm for grouping data points into clusters based on their similarity. It’s widely used for market segmentation and image compression.

- Hierarchical Clustering – Builds a tree of clusters by recursively merging smaller clusters or splitting larger ones. It’s useful when the number of clusters is not known in advance.

- Principal Component Analysis (PCA) – A dimensionality reduction technique that transforms high-dimensional data into a lower-dimensional space while preserving as much variance as possible.

- Autoencoders – Neural networks used for unsupervised learning tasks, particularly for feature extraction and data compression.
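Clustering and dimensionality reduction are often used together. The sketch below groups synthetic data with K-Means and then projects it to two dimensions with PCA; the data, the number of clusters, and the random seeds are all assumptions chosen so that the structure is easy to recover.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Two hypothetical, well-separated blobs in 5 dimensions.
blob_a = rng.normal(loc=0.0, scale=0.5, size=(50, 5))
blob_b = rng.normal(loc=5.0, scale=0.5, size=(50, 5))
X = np.vstack([blob_a, blob_b])

# K-Means: assign each point to one of two clusters.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = kmeans.fit_predict(X)

# PCA: project the 5-dimensional data onto its 2 directions of greatest variance.
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X)

print(f"Cluster sizes: {np.bincount(labels)}")
print(f"Variance explained by 2 components: {pca.explained_variance_ratio_.sum():.3f}")
```

Real data is rarely this cleanly separated, so the number of clusters usually has to be chosen by inspection or with a criterion such as the silhouette score.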

Mastering these algorithms not only helps in exams but also provides the foundation for solving real-world data challenges. By understanding when and how to apply each algorithm, one can effectively analyze data and build predictive models.

Understanding Data Preprocessing Techniques

Data preprocessing is a critical step in the process of building any predictive model. It involves transforming raw data into a clean, usable format to ensure the best possible results from algorithms. This stage is crucial as the quality of data directly impacts the performance of the model. Various techniques are applied to handle issues such as missing values, data inconsistencies, and scaling discrepancies, among others. By preprocessing data effectively, one can improve the efficiency and accuracy of the model.

Key Preprocessing Techniques

To understand the role of data preparation, it’s important to know the common techniques used to clean and transform data before feeding it into models.

| Technique | Description | Common Use |
|---|---|---|
| Handling Missing Data | Addresses gaps in data by either removing or imputing missing values. | Imputation or deletion when dealing with incomplete datasets. |
| Normalization | Scales features to a common range, typically [0,1], to avoid model bias towards variables with larger scales. | Useful for models sensitive to the scale of input data, such as neural networks or gradient-based algorithms. |
| Standardization | Transforms data to have a mean of 0 and a standard deviation of 1, making it more suitable for certain algorithms. | Commonly used with algorithms like Support Vector Machines or Logistic Regression. |
| Encoding Categorical Data | Converts categorical variables into numerical form, which is necessary for most algorithms. | Techniques like one-hot encoding and label encoding are often used for categorical features. |
| Outlier Detection | Identifies and handles data points that are significantly different from the rest of the dataset. | Removing or adjusting outliers to ensure they do not distort model results. |

Each of these techniques plays a vital role in improving the dataset’s suitability for analysis. Whether you are working with structured or unstructured data, applying the right preprocessing methods ensures the success of your model.
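Several of these techniques can be combined into a single reusable preprocessing step. The following sketch uses scikit-learn's `ColumnTransformer` to impute and standardize a numeric column and one-hot encode a categorical one. The column names and values are hypothetical, and the specific choices (median imputation, one-hot encoding) are assumptions rather than recommendations.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical dataset with a missing numeric value and a categorical column.
df = pd.DataFrame({
    "age": [25.0, np.nan, 47.0, 51.0],
    "city": ["Paris", "Lima", "Paris", "Oslo"],
})

# Numeric columns: fill missing values with the median, then standardize.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# sparse_threshold=0 forces a dense NumPy array as output.
preprocess = ColumnTransformer(
    [
        ("num", numeric, ["age"]),
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
    ],
    sparse_threshold=0,
)

features = preprocess.fit_transform(df)
print(features.shape)  # one scaled numeric column plus one column per city
print(features)
```

Fitting the transformer on training data only and then applying it to test data keeps the evaluation honest.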

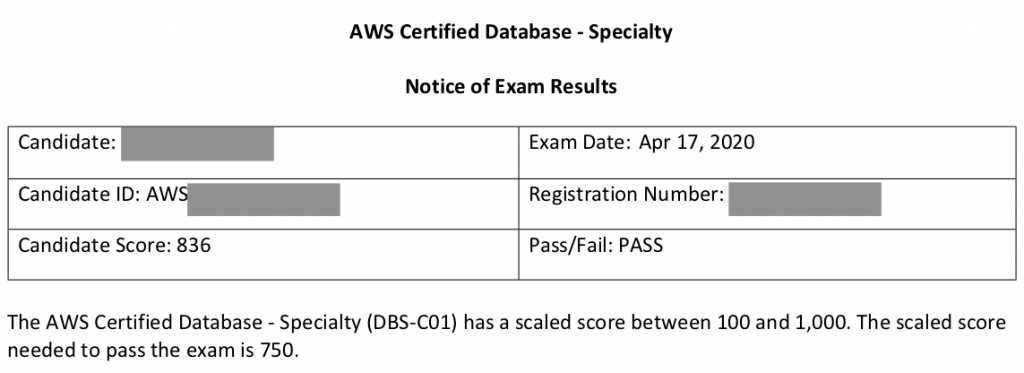

Evaluating Your Model’s Performance

Once a model has been trained, it is essential to assess its performance to ensure it can make accurate predictions on unseen data. Evaluation is not only about checking how well the model fits the training data but also about understanding its generalization capability. By evaluating a model, you can identify areas for improvement and ensure that it is both effective and reliable. The evaluation process involves various metrics and techniques that measure different aspects of performance, depending on the nature of the problem.

Common ways to evaluate a classification model include metrics such as accuracy, precision, recall, and F1 score, along with the confusion matrix from which they are derived. These quantify how well the model performs in different scenarios and allow you to compare multiple models and choose the most appropriate one. For regression tasks, metrics like mean squared error (MSE) and R-squared are frequently used to determine how well predictions match actual outcomes.

Common Performance Metrics

- Accuracy: The proportion of correct predictions among all predictions. Often used in classification tasks, though it can be misleading when one class is much more common than the other.

- Precision: The ratio of true positive results to the total predicted positives. Useful when false positives are costly.

- Recall: The ratio of true positive results to the total actual positives. Important when false negatives are costly.

- F1 Score: The harmonic mean of precision and recall, providing a balance between the two.

- Confusion Matrix: A table used to evaluate the performance of classification models, showing the counts of true positive, false positive, true negative, and false negative predictions.

- Mean Squared Error (MSE): Measures the average squared difference between predicted and actual values, typically used for regression tasks.

- R-squared: A measure of how well the model’s predictions match the actual data, showing the proportion of variance explained by the model.
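To make the definitions above concrete, here is a small sketch that computes each metric from scratch. The helper names (`confusion_counts`, `classification_metrics`, `mean_squared_error`, `r_squared`) and the sample labels are assumptions made for illustration. The sketch also assumes binary classification with `1` as the positive class.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, true negatives, false negatives."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall, and F1 as a dictionary."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def mean_squared_error(y_true, y_pred):
    """Average squared difference between actual and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """Proportion of variance in y_true explained by the predictions."""
    avg = sum(y_true) / len(y_true)
    ss_res = sum((a - p) ** 2 for a, p in zip(y_true, y_pred))
    ss_tot = sum((a - avg) ** 2 for a in y_true)
    return 1 - ss_res / ss_tot


# Illustrative labels and predictions, invented for this example.
labels = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = [1, 0, 0, 1, 0, 1, 1, 0]
print(confusion_counts(labels, predictions))
print(classification_metrics(labels, predictions))
print(mean_squared_error([3.0, 5.0, 7.0], [2.0, 5.0, 8.0]))
print(r_squared([3.0, 5.0, 7.0], [2.0, 5.0, 8.0]))
```

Note that `r_squared` as written assumes the actual values are not all identical, since the total sum of squares would otherwise be zero.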

It is crucial to choose the right evaluation metric depending on the type of task and business objectives. For example, in a scenario where the cost of a false positive is high, precision would be a more valuable metric. On the other hand, recall would be a priority in situations where missing a positive case could have serious consequences. Understanding these metrics will enable you to evaluate your model’s performance effectively and make informed decisions on its suitability for deployment.
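The trade-off between precision and recall described above can be sketched by varying the decision threshold of a classifier that outputs scores. The function `precision_recall_at`, the scores, and the labels below are hypothetical and invented for this illustration. The sketch assumes that a score at or above the threshold counts as a positive prediction.

```python
def precision_recall_at(scores, labels, threshold):
    """Return (precision, recall) when scores >= threshold are predicted positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Illustrative model scores and true labels (1 = positive case).
scores = [0.95, 0.85, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 0, 0]

# A strict threshold favors precision; a lenient one favors recall.
for threshold in (0.8, 0.5, 0.25):
    precision, recall = precision_recall_at(scores, labels, threshold)
    print(f"threshold={threshold:.2f} precision={precision:.2f} recall={recall:.2f}")
```

With this sample data, raising the threshold removes false positives at the cost of missing some positive cases, while lowering it catches every positive case but admits more false alarms. Which end of that range is preferable depends on the relative cost of the two kinds of error.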

Real-World Applications of Machine Learning

In today’s world, advanced computational techniques are transforming industries by providing innovative solutions to complex problems. These methods are now used across various sectors, offering efficiency, automation, and the ability to analyze vast amounts of data. From healthcare to finance, the applications of these techniques are both diverse and impactful. Understanding how these technologies are applied in real-world settings helps to highlight their value and potential for continued growth.

One of the most exciting aspects of these technologies is their ability to learn from data, allowing businesses and organizations to gain insights, make predictions, and optimize processes. These applications span several fields, including customer service, supply chain management, fraud detection, and even creative industries such as music and art generation. Below are some of the key sectors that are benefiting from these powerful tools.

Key Applications in Various Industries

- Healthcare: Machine learning models assist doctors in diagnosing diseases, predicting patient outcomes, and personalizing treatment plans. Image recognition for medical imaging and predictive models for disease outbreaks are just a few examples.

- Finance: Algorithms play a crucial role in detecting fraudulent transactions, automating trading strategies, and assessing credit risks. Predictive models are also used for forecasting market trends and customer behavior.

- Retail: Advanced data processing techniques make it possible to personalize recommendations based on customer behavior, optimize inventory management, and improve supply chain efficiency.

- Transportation: These methods are used to improve traffic management, optimize routes for delivery services, and power self-driving cars. Ride-sharing apps like Uber and Lyft leverage these technologies to predict demand and optimize fares.

- Entertainment: In media and entertainment, content recommendation systems used by platforms like Netflix and Spotify are powered by advanced algorithms that predict what users are likely to enjoy based on their preferences and behaviors.

- Manufacturing: Machine learning is applied to predictive maintenance, quality control, and supply chain optimization. It helps reduce downtime and improve operational efficiency by predicting equipment failures before they occur.

As the world becomes increasingly data-driven, the impact of these techniques on various industries will only continue to grow. The ability to harness data and use it to make informed decisions is revolutionizing business models and improving the quality of services and products across the globe.

What to Do After the Exam

Once you have completed your assessment, it’s important to focus on the next steps. Many students feel a sense of relief, but it’s crucial to take a thoughtful approach to what comes after the test. Whether you are awaiting results or preparing for the future, there are several ways to leverage your experience and ensure continuous growth.

First, take the time to reflect on the process. Evaluate your strengths and areas where you encountered challenges. This reflection can provide valuable insights for future learning and professional development. Additionally, it’s a good time to reinforce your knowledge and skills by applying what you’ve learned in real-world scenarios or side projects.

Here are a few strategies to consider after completing an assessment:

- Review Your Performance: Look back at the topics you found most difficult and identify the mistakes you made. This can help you understand where additional study or practice may be necessary.

- Stay Consistent: Keep up with learning by continuing to explore new topics and concepts. This will help you stay sharp and ready for future challenges.

- Engage with the Community: Join forums, attend meetups, or connect with professionals in your field. Engaging with others will help reinforce what you’ve learned and expose you to different perspectives and approaches.

- Apply Your Knowledge: Start working on personal projects or internships where you can use your skills in real-world situations. This practical experience will deepen your understanding and make you more competitive in your field.

- Prepare for Next Steps: If there are additional certifications, courses, or workshops that can complement your knowledge, consider enrolling. Lifelong learning is essential for growth, and there are always new advancements to explore.

By staying proactive and continuing to challenge yourself, you can turn your assessment experience into a stepping stone for future success. Remember that the journey doesn’t end after the test – it’s just the beginning of a new phase in your learning and career path.