Preparing for an important academic evaluation requires a solid understanding of key concepts and the ability to apply them effectively. Focusing on the areas most likely to appear in your assessment will leave you well-equipped for the challenges ahead, and a structured approach to revision will boost both your confidence and your results.

In this guide, we will highlight the fundamental topics that often appear in assessments, providing you with a thorough overview and practical strategies to master each one. Understanding the underlying principles and practicing problem-solving techniques will help solidify your knowledge, allowing you to approach the evaluation with clarity and precision.

Targeted practice is a key factor in success. By revisiting typical scenarios and honing your ability to interpret data, you will develop the skills needed to answer complex problems with ease. This preparation method will not only improve your accuracy but also save valuable time during the test.

Preparation Guide for Assessment Success

Achieving excellent results in a challenging academic evaluation requires more than just memorizing facts. A strategic approach to preparation helps you develop a deep understanding of the material and improves your problem-solving abilities. By focusing on core principles, practicing key methods, and refining your analytical skills, you can approach your assessment with confidence.

Key Areas to Focus On

Start by reviewing the most important topics that are likely to appear in your assessment. Concentrate on areas such as data analysis techniques, hypothesis testing, and probability calculations. A strong grasp of these concepts is essential for answering complex scenarios accurately. Conceptual understanding is just as crucial as knowing how to perform calculations.

Effective Study Methods

For optimal preparation, create a study plan that includes regular practice sessions and active recall. Solving practice problems under time constraints simulates the actual conditions of the test, helping you build speed and accuracy. Test yourself frequently and identify weak spots to focus on before the assessment, so that few questions catch you off guard.

Key Concepts to Focus On

To excel in any comprehensive assessment, it’s crucial to concentrate on the fundamental principles that form the basis of the subject matter. A solid understanding of these core topics will enable you to navigate complex scenarios with ease and confidence. Prioritizing these areas during your preparation ensures that you can apply your knowledge effectively when faced with various challenges.

Among the key areas to focus on are statistical methods, probability theory, and data interpretation. These topics are essential for analyzing results, making informed decisions, and understanding how different variables interact in research settings. Being able to work with large datasets and judge whether the patterns in them are statistically significant is crucial for success in any assessment.

Additionally, paying attention to sampling techniques and statistical distributions will help you better understand how data is collected, organized, and used to draw conclusions. Gaining proficiency in these concepts will strengthen your overall ability to perform well under test conditions.

Commonly Asked Questions in Assessments

During comprehensive evaluations, certain topics tend to recur frequently. Understanding the types of problems that often appear will give you an advantage, allowing you to focus your efforts on mastering these areas. Familiarizing yourself with these common scenarios prepares you for the range of questions you may encounter.

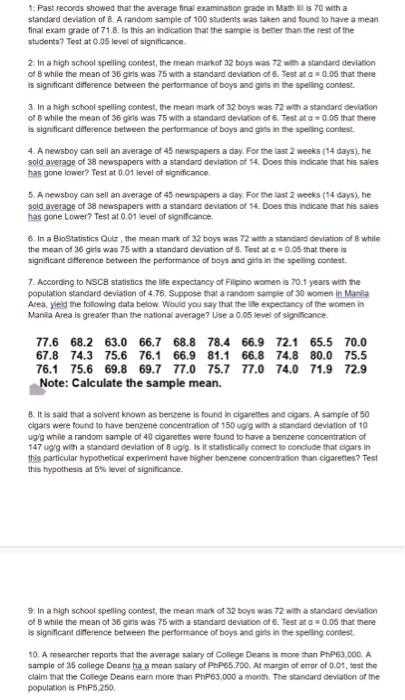

Some of the most commonly tested concepts include data interpretation, hypothesis testing, and the application of statistical models. These areas are fundamental for making accurate predictions and understanding trends within data. Expect to encounter questions that require both theoretical knowledge and practical application of statistical tools.

Another frequent focus is understanding the differences between various types of distributions, such as normal and binomial, as well as how to calculate probabilities and confidence intervals. Strengthening your ability to work with these concepts will increase your efficiency in handling complex problems.

Important Formulas for Assessment Success

Mastering key mathematical formulas is crucial for tackling a variety of problems effectively. Understanding the foundational equations allows you to apply them accurately under time pressure. Whether you’re working with probabilities, distributions, or data analysis techniques, being well-versed in these formulas can make a significant difference in your performance.

Essential Statistical Formulas

Here are some of the most important formulas to remember:

- Mean: x̄ = (Σxᵢ) / n – The average of a data set, calculated by summing all values and dividing by the number of observations.

- Variance: σ² = Σ(xᵢ – μ)² / N for a population, or s² = Σ(xᵢ – x̄)² / (n – 1) for a sample – Measures the spread of data points around the mean.

- Standard Deviation: σ = √(σ²) – The square root of variance, a measure of the typical distance of data points from the mean, expressed in the same units as the data.

- Probability: P(A) = n(A) / n(S) – The likelihood of an event occurring, where n(A) is the number of favorable outcomes and n(S) is the total number of possible outcomes; this formula applies only when all outcomes are equally likely.

- Confidence Interval: CI = x̄ ± Z(σ/√n) – A range of plausible values for the population mean, estimated from a sample; Z is the critical value for the chosen confidence level (about 1.96 for 95%), and this form assumes the population standard deviation σ is known.
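A minimal Python sketch of the probability and confidence-interval formulas above, using only the standard library. It assumes the population standard deviation σ is known (the case the Z-based interval covers) and takes the critical value Z from `statistics.NormalDist`; the function names, the die example, and the sample values are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean


def probability(favorable: int, total: int) -> float:
    """P(A) = n(A) / n(S); valid only when all outcomes are equally likely."""
    return favorable / total


def z_confidence_interval(data, sigma, confidence=0.95):
    """CI = x̄ ± Z·(σ/√n) for a population mean, assuming σ is known."""
    n = len(data)
    x_bar = fmean(data)
    # Two-sided critical value, e.g. Z ≈ 1.96 for 95% confidence
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sigma / sqrt(n)
    return x_bar - margin, x_bar + margin


# Hypothetical event: rolling an even number with a fair six-sided die
print(probability(3, 6))  # 0.5

# Hypothetical measurements with an assumed known σ = 2.0
sample = [10.2, 9.8, 11.1, 10.5, 9.9, 10.4, 10.0, 10.7, 9.6]
low, high = z_confidence_interval(sample, sigma=2.0)
print(f"mean = {fmean(sample):.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```

Raising the confidence level to 0.99 widens the interval, because the critical value Z grows while σ and n stay the same.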

Key Formulas for Hypothesis Testing

In hypothesis testing, these formulas are particularly useful:

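- Z-Score: Z = (x̄ – μ) / (σ/√n) – Measures how far a sample mean lies from the hypothesized population mean in units of the standard error σ/√n; used when the population standard deviation σ is known.

- T-Test: T = (x̄ – μ) / (s/√n) – Used to test whether the sample mean differs significantly from the population mean when σ is unknown and is estimated by the sample standard deviation s, as is typical with small samples; the statistic follows a t distribution with n – 1 degrees of freedom.

- P-Value: P = the probability, assuming the null hypothesis is true, of observing a test statistic at least as extreme as the one observed – Helps in determining the strength of the evidence against the null hypothesis.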
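The Z and T statistics can be sketched the same way. This is an illustration under stated assumptions rather than a full testing routine: the function names and data are hypothetical, σ = 0.4 is an assumed known population standard deviation for the Z-test, and only the Z-test gets a p-value here, because the t distribution needed for the T-test p-value (with n – 1 degrees of freedom) is not in Python's standard library; a package such as SciPy (`scipy.stats.t.sf`) would typically supply it.

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev


def z_statistic(data, mu0, sigma):
    """Z = (x̄ - μ) / (σ/√n): distance from μ in standard errors, σ known."""
    return (fmean(data) - mu0) / (sigma / sqrt(len(data)))


def t_statistic(data, mu0):
    """T = (x̄ - μ) / (s/√n), where s is the sample standard deviation (n - 1 divisor)."""
    return (fmean(data) - mu0) / (stdev(data) / sqrt(len(data)))


def two_sided_p_from_z(z):
    """P(|Z| >= |z|) under H0, using the standard normal distribution."""
    return 2 * NormalDist().cdf(-abs(z))


# Hypothetical sample; H0: μ = 5.0, with an assumed known σ = 0.4 for the Z-test
data = [5.1, 4.9, 5.6, 5.3, 5.8, 5.2, 5.5, 5.0]
z = z_statistic(data, mu0=5.0, sigma=0.4)
t = t_statistic(data, mu0=5.0)
print(f"Z = {z:.3f}, two-sided p = {two_sided_p_from_z(z):.4f}")
print(f"T = {t:.3f} with {len(data) - 1} degrees of freedom")
```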

Understanding Statistical Distributions

Statistical distributions provide a powerful way to describe how data points are spread or arranged across different values. By understanding the different types of distributions, you can gain deeper insights into how data behaves and how to apply statistical models to make informed decisions. Each distribution has unique properties that influence the analysis and interpretation of data in various research fields.

Common Types of Distributions

There are several widely used distributions that are essential to grasp:

- Normal Distribution: Characterized by a symmetric bell curve, this distribution is commonly used in many statistical analyses, where most data points cluster around the mean.

- Binomial Distribution: Describes the number of successes in a fixed number of independent trials, each with two possible outcomes (success or failure) and the same probability of success p.

- Poisson Distribution: Used for modeling the number of events that occur in a fixed interval of time or space when events happen independently at a constant average rate λ; often applied to counts of rare events.

- Uniform Distribution: Every outcome in the range has an equal probability of occurring, often used in simulations or randomized tests.
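To make the binomial and Poisson descriptions concrete, here is a small sketch of their probability mass functions in Python; the coin-toss and weekly-case scenarios are hypothetical illustrations.

```python
from math import comb, exp, factorial


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p): k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poisson(λ): k events in an interval with average rate λ."""
    return exp(-lam) * lam**k / factorial(k)


# Hypothetical: exactly 2 heads in 4 tosses of a fair coin
print(binomial_pmf(2, 4, 0.5))  # 0.375

# Hypothetical: no new cases in a week when the average is 2 cases per week
print(round(poisson_pmf(0, 2.0), 4))  # 0.1353
```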

Key Characteristics to Understand

When working with distributions, it is important to understand several key features:

- Mean: The average value, often considered the center of the distribution.

- Variance: Measures the spread of data points around the mean.

- Skewness: Describes the asymmetry of the distribution. A positively skewed distribution has a longer tail on the right, while a negatively skewed one has a longer tail on the left.

- Kurtosis: Indicates the “tailedness” of the distribution; higher kurtosis means heavier tails, so extreme values (outliers) are more likely.
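Skewness and kurtosis can be estimated from data using central moments. The sketch below uses the simple moment-based (population) formulas and reports excess kurtosis, so a normal distribution scores about 0; statistical packages often apply small-sample bias corrections, so their numbers may differ slightly. The example data sets are hypothetical.

```python
from statistics import fmean


def central_moment(data, order):
    """Average of (x - mean)^order over the data (population-style moment)."""
    m = fmean(data)
    return fmean([(x - m) ** order for x in data])


def skewness(data):
    """m3 / m2^(3/2): positive values indicate a longer right tail."""
    return central_moment(data, 3) / central_moment(data, 2) ** 1.5


def excess_kurtosis(data):
    """m4 / m2^2 - 3: about 0 for normal data, positive for heavier tails."""
    return central_moment(data, 4) / central_moment(data, 2) ** 2 - 3


symmetric = [1, 2, 3, 4, 5]            # hypothetical, symmetric around 3
right_skewed = [1, 1, 1, 2, 2, 3, 10]  # hypothetical, one long right tail
print(skewness(symmetric), skewness(right_skewed))
print(excess_kurtosis(symmetric), excess_kurtosis(right_skewed))
```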

How to Analyze Data Sets

Effectively analyzing a data set involves understanding its structure, identifying patterns, and making meaningful interpretations. The goal is to extract valuable insights that can inform decisions, guide research, or solve problems. A systematic approach to data analysis helps ensure accuracy and reliability in the conclusions drawn from the data.

The first step in analyzing any data set is to organize the data properly. This can be done by creating tables or visual representations that allow for easier manipulation and interpretation. Once the data is organized, the next step is to calculate summary statistics, which provide a quick overview of the key features of the data.

| Statistic | Definition | Formula |
|---|---|---|
| Mean | Average of all values in the data set | x̄ = (Σxᵢ) / n |
| Median | The middle value when the data is sorted | Sort the data and take the middle value (the average of the two middle values when n is even) |
| Mode | The most frequent value | Identify the most common value |
| Range | The difference between the highest and lowest values | Range = max(x) – min(x) |
| Standard Deviation | Measures the spread of values around the mean | s = √(Σ(xᵢ – x̄)² / (n – 1)) for a sample; σ = √(Σ(xᵢ – μ)² / N) for a population |
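Python's `statistics` module covers every row of the table. This sketch, on a hypothetical data set, also shows both standard-deviation conventions: `pstdev` divides by N (population) and `stdev` by n – 1 (sample).

```python
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical data set

summary = {
    "mean": statistics.mean(data),
    "median": statistics.median(data),         # averages the two middle values when n is even
    "mode": statistics.mode(data),
    "range": max(data) - min(data),
    "population SD": statistics.pstdev(data),  # divides by N
    "sample SD": statistics.stdev(data),       # divides by n - 1
}
for name, value in summary.items():
    print(f"{name:>13}: {value:.3f}")
```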

Once the basic statistics are calculated, more advanced techniques such as correlation analysis, regression modeling, or hypothesis testing can be applied to explore relationships between variables or make predictions. Visual tools like histograms, scatter plots, or box plots are also useful for identifying trends or outliers in the data.

Interpreting p-Values and Significance

Understanding the concept of statistical significance is crucial when evaluating research findings. The p-value is a key metric used to determine whether the observed results are likely due to chance or if there is enough evidence to support a particular hypothesis. Interpreting p-values correctly helps you make informed decisions about the validity of your results.

What Does the p-Value Represent?

The p-value is the probability of obtaining results as extreme as, or more extreme than, the observed results under the assumption that the null hypothesis is true. A smaller p-value indicates stronger evidence against the null hypothesis: data this extreme would rarely arise from random variation alone if the null hypothesis were true.

Threshold for Statistical Significance

In most studies, a threshold of 0.05 is commonly used to determine statistical significance. If the p-value is less than 0.05, the result is typically considered statistically significant, meaning there is enough evidence to reject the null hypothesis. If the p-value is 0.05 or greater, the result is not statistically significant and the null hypothesis is not rejected; this does not prove the null hypothesis true, only that the data do not provide strong enough evidence against it.

It’s important to remember that the p-value does not provide a measure of the size or importance of an effect. A small p-value simply indicates that the observed result is unlikely under the assumption of no effect, but it doesn’t imply how large or meaningful the effect is. Therefore, researchers should also consider the effect size and confidence intervals when interpreting results.
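A quick way to see why the p-value is not a measure of effect size: hold a small difference fixed and change only the sample size. The sketch below assumes a one-sample z-test with known σ and uses the standardized difference (Cohen's d) as the effect-size measure; all numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def z_test_p_value(mean_difference, sigma, n):
    """Two-sided p-value for a one-sample z-test with known σ."""
    z = mean_difference / (sigma / sqrt(n))
    return 2 * NormalDist().cdf(-abs(z))


sigma = 1.0                       # assumed known population standard deviation
difference = 0.01                 # the same small observed mean difference in both scenarios
effect_size = difference / sigma  # standardized difference (Cohen's d)

results = {n: z_test_p_value(difference, sigma, n) for n in (100, 100_000)}
for n, p in results.items():
    print(f"n = {n:>7,}: p = {p:.4f}, effect size d = {effect_size}")
```

The effect size is identical in both runs; only the much larger sample pushes the p-value below 0.05.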

Sampling Methods and Techniques

When conducting research or collecting data, selecting an appropriate sampling method is crucial for ensuring that the results are representative and reliable. The method used to select a sample can significantly influence the accuracy of the conclusions drawn from the data. Different techniques offer various advantages depending on the goals and scope of the study.

Types of Sampling Methods

There are several key methods for selecting a sample from a larger population:

- Random Sampling: Every individual in the population has an equal chance of being selected. This method minimizes selection bias and, on average, produces samples that are representative of the population.

- Stratified Sampling: The population is divided into subgroups or strata based on a shared characteristic, and a random sample is then taken from each group. This technique ensures that specific subgroups are adequately represented.

- Systematic Sampling: A starting point is chosen at random, and then every kth individual from an ordered list of the population is selected. This is often simpler than random sampling but can introduce bias if the list has a periodic pattern that coincides with the sampling interval.

- Cluster Sampling: The population is divided into clusters, often based on geographical areas or other natural divisions. Then, entire clusters are randomly selected for study. This method is useful when it’s difficult or costly to sample individuals from the entire population.
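The four methods can be sketched with Python's `random` module. The patient records, the `sex` and `clinic` attributes, and the function names are all hypothetical; the systematic version assumes the sampling interval k = N // n, and the stratified version draws the same number from each stratum, which is only one of several allocation schemes.

```python
import random
from collections import defaultdict


def simple_random_sample(population, n, rng):
    """Every unit has the same chance of being selected."""
    return rng.sample(population, n)


def systematic_sample(population, n, rng):
    """Random start within the first interval, then every k-th unit (k = N // n)."""
    k = len(population) // n
    start = rng.randrange(k)
    return population[start::k][:n]


def stratified_sample(population, stratum_of, n_per_stratum, rng):
    """Simple random sample of the same size drawn within each stratum."""
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    return [unit for members in strata.values()
            for unit in rng.sample(members, n_per_stratum)]


def cluster_sample(clusters, n_clusters, rng):
    """Randomly choose whole clusters and keep every unit inside them."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for key in chosen for unit in clusters[key]]


rng = random.Random(42)  # fixed seed so the example is reproducible
# Hypothetical population of 100 patients spread over 5 clinics
patients = [{"id": i, "sex": "F" if i % 2 else "M", "clinic": i % 5} for i in range(100)]
clinics = defaultdict(list)
for patient in patients:
    clinics[patient["clinic"]].append(patient)

srs = simple_random_sample(patients, 10, rng)
systematic = systematic_sample(patients, 10, rng)
stratified = stratified_sample(patients, lambda u: u["sex"], 5, rng)
clustered = cluster_sample(clinics, 2, rng)
print(len(srs), len(systematic), len(stratified), len(clustered))  # 10 10 10 40
```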

Choosing the Right Sampling Technique

The choice of sampling technique depends on factors such as the population size, available resources, and the level of precision needed. Random sampling is generally ideal for minimizing bias, but it may not always be feasible in large populations. Stratified sampling is useful for ensuring that specific subgroups are represented, cluster sampling reduces cost when the population is spread over many locations, and systematic sampling can be more efficient for large, homogeneous populations.

Probability Theory in Biostatistics

Probability theory plays a fundamental role in understanding uncertainty and variability in data analysis. It provides a framework for making inferences about a population based on sample data, guiding researchers in predicting outcomes and assessing risks. By applying probability concepts, researchers can quantify the likelihood of certain events occurring and make informed decisions in various scientific studies.

The use of probability in research allows for modeling random phenomena, such as disease occurrence or treatment effectiveness, where outcomes cannot be predicted with certainty. This theory helps to estimate the likelihood of different outcomes and interpret the strength of evidence against a hypothesis. Probability distributions, such as the normal distribution or binomial distribution, are commonly used to describe data patterns and assess the significance of findings.

Moreover, probability theory aids in designing studies, analyzing data patterns, and assessing the impact of random variation. It also helps determine the reliability of conclusions drawn from sample data and plays a key role in hypothesis testing and decision-making processes in scientific research.

Hypothesis Testing Strategies

Hypothesis testing is a crucial step in data analysis, allowing researchers to assess whether their findings are statistically significant and whether they support or contradict a proposed hypothesis. By applying various strategies, researchers can determine if the observed results are likely due to chance or if there is strong evidence to support the relationship between variables.

Steps in Hypothesis Testing

There are several key steps in the hypothesis testing process that help ensure accurate results:

- Formulating Hypotheses: The first step is to establish a null hypothesis (H₀) and an alternative hypothesis (H₁). The null hypothesis generally represents the status quo or no effect, while the alternative hypothesis proposes a potential effect or relationship.

- Selecting the Significance Level: The significance level (α) is chosen, typically set at 0.05, which represents a 5% risk of rejecting the null hypothesis when it is actually true.

- Choosing the Appropriate Test: Depending on the data type and research question, various tests, such as t-tests, chi-square tests, or ANOVA, may be used to evaluate the hypotheses.

- Analyzing Data and Calculating p-Value: After conducting the statistical test, the p-value is calculated to determine whether the results are statistically significant. A p-value below the significance level suggests evidence against the null hypothesis.

- Making a Decision: If the p-value is smaller than the chosen significance level, the null hypothesis is rejected, supporting the alternative hypothesis. Otherwise, the null hypothesis is not rejected, and no significant relationship is found.
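The five steps can be walked through end to end with an exact one-sided binomial test, chosen here only because it needs nothing beyond the standard library; the scenario (15 responders out of 20 patients, tested against a 50% response rate) is hypothetical. In practice a library routine such as SciPy's `scipy.stats.binomtest` would usually be used instead.

```python
from math import comb


def binomial_test_greater(successes, trials, p0):
    """One-sided exact binomial p-value: P(X >= successes) when X ~ Binomial(trials, p0)."""
    return sum(comb(trials, k) * p0**k * (1 - p0) ** (trials - k)
               for k in range(successes, trials + 1))


# Step 1: hypotheses (hypothetical scenario)
#   H0: the new treatment's response rate is p = 0.5
#   H1: the response rate is greater than 0.5
responders, patients, p0 = 15, 20, 0.5

# Step 2: significance level
alpha = 0.05

# Step 3: test choice: exact binomial test for a binary outcome in a small sample

# Step 4: compute the p-value
p_value = binomial_test_greater(responders, patients, p0)

# Step 5: decision
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(f"p = {p_value:.4f} -> {decision}")  # p = 0.0207 -> reject H0
```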

Common Strategies for Effective Hypothesis Testing

Researchers often employ a variety of strategies to increase the accuracy and reliability of hypothesis tests:

- Choosing the Right Test: It’s essential to choose the correct statistical test based on data type, distribution, and sample size. For example, a t-test is suitable for comparing the means of two groups, while ANOVA is used for comparing means across three or more groups.

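- Sample Size Considerations: Larger sample sizes generally provide more reliable results and increase the power of the test. Small samples reduce power and so raise the risk of a Type II error (failing to detect a real effect); the risk of a Type I error (rejecting a true null hypothesis) is set by the significance level α rather than by the sample size.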

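- Multiple Testing Corrections: When conducting many tests, the chance of at least one false positive (Type I error) grows with the number of tests, so it’s important to adjust for multiple comparisons. The Bonferroni correction controls the family-wise error rate, while False Discovery Rate (FDR) procedures control the expected proportion of false positives among the rejected hypotheses.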
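Both corrections are short enough to sketch directly. Bonferroni compares each p-value with α/m; for FDR control the sketch assumes the Benjamini-Hochberg step-up procedure, the most widely used FDR method, which the text does not name specifically. The p-values are hypothetical.

```python
def bonferroni(p_values, alpha=0.05):
    """Reject H0_i when p_i <= alpha / m (controls the family-wise error rate)."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    # Largest rank k whose sorted p-value satisfies p_(k) <= (k / m) * alpha
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            cutoff = rank
    rejected = [False] * m
    for i in order[:cutoff]:
        rejected[i] = True
    return rejected


p_values = [0.039, 0.001, 0.20, 0.025, 0.012]  # hypothetical results of 5 tests
print(bonferroni(p_values))          # [False, True, False, False, False]
print(benjamini_hochberg(p_values))  # [True, True, False, True, True]
```

With the same five p-values, Bonferroni rejects one null hypothesis while Benjamini-Hochberg rejects four, reflecting the trade-off between strict family-wise control and greater power.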

Practical Applications of Biostatistics

The application of statistical methods to real-world problems plays a key role in making informed decisions across various fields, especially in the health and life sciences. By analyzing data, researchers and professionals can draw meaningful conclusions that help improve public health, guide policy decisions, and optimize medical treatments. Statistical tools allow for evidence-based insights into areas such as disease prevention, clinical trials, and environmental health.

Health and Medicine

In healthcare, statistical techniques are vital for assessing the effectiveness of treatments, understanding disease patterns, and predicting future health outcomes. For example, statistical models are used to determine the relationship between risk factors and diseases, such as heart disease or cancer. By analyzing patient data, clinicians can identify trends, predict the progression of diseases, and develop personalized treatment plans.

Public Health Research

In public health, statistical methods are applied to study population health trends, evaluate interventions, and assess the distribution of diseases. Epidemiologists use these techniques to identify factors that influence disease outbreaks, such as environmental exposures, lifestyle behaviors, or socioeconomic status. The goal is to prevent illness and promote health by understanding the causes and spread of diseases within communities.

Moreover, these methods are essential for planning and evaluating public health programs, as they help policymakers design strategies based on statistical evidence. By using data to guide decisions, public health officials can implement effective programs that improve the well-being of entire populations.

Data Visualization in Biostatistics

Data visualization plays a crucial role in understanding complex datasets by transforming numerical data into graphical representations. This approach allows researchers and analysts to quickly identify patterns, trends, and outliers in their data, making it easier to communicate findings to others. Visual tools help in simplifying intricate concepts and make statistical results more accessible and interpretable, especially when dealing with large volumes of information.

Common Visualization Techniques

There are several visualization techniques commonly used to represent statistical data effectively:

- Bar Charts: Useful for comparing quantities across different categories. Bar charts are ideal for showing categorical or discrete data and highlighting differences between groups.

- Histograms: These graphs are used to represent the distribution of a dataset, showing how data points are spread across various intervals. They are particularly useful for continuous data.

- Scatter Plots: Scatter plots display the relationship between two variables. They help identify correlations or trends, allowing for a visual assessment of potential associations between variables.

- Box Plots: Box plots are effective in showing the distribution of data based on quartiles. They also highlight outliers and give a clear summary of data spread and central tendency.
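The numbers behind a box plot can be computed with `statistics.quantiles`. This sketch assumes the common 1.5 × IQR rule for flagging outliers (a convention, not something the text specifies); software packages use different quartile methods, so Q1 and Q3 may differ slightly between tools. The data are hypothetical.

```python
from statistics import quantiles


def box_plot_summary(data):
    """Quartiles, IQR, and points outside the 1.5 x IQR fences (Tukey's rule)."""
    q1, q2, q3 = quantiles(data, n=4)  # default 'exclusive' quartile method
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [x for x in data if x < low_fence or x > high_fence]
    return {"Q1": q1, "median": q2, "Q3": q3, "IQR": iqr, "outliers": outliers}


# Hypothetical measurements with one extreme value
summary = box_plot_summary([1, 2, 3, 4, 5, 6, 7, 8, 9, 100])
print(summary)
```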

Benefits of Data Visualization

Visualizing data can enhance the decision-making process by providing insights that might be missed in raw numbers. Here are some key benefits:

- Clear Communication: Graphs and charts make complex data easier to understand and explain to non-experts, ensuring that important findings are communicated effectively.

- Trend Identification: Visualization helps detect patterns or trends that might not be immediately obvious, allowing researchers to make more informed predictions and decisions.

- Quick Data Interpretation: By presenting data visually, it becomes much easier to identify relationships, outliers, and anomalies, speeding up the analysis process.

Ultimately, integrating data visualization into statistical analysis enhances clarity, precision, and the overall impact of the findings, facilitating better decisions in research, policy, and practice.

Common Mistakes in Biostatistics Exams

During assessments in the field of statistical analysis, certain mistakes are frequently made by students, which can significantly impact their performance. These errors often stem from misunderstandings of core concepts or the improper application of statistical methods. Recognizing these common pitfalls can help students avoid them and improve their ability to answer questions accurately and effectively.

Misinterpreting Statistical Concepts

A major source of mistakes is the misunderstanding of key statistical principles. Many students confuse terms such as population vs. sample, or fail to grasp the differences between various statistical tests. It is essential to be clear on concepts like confidence intervals, hypothesis testing, and p-values, as these are foundational to proper analysis.

Incorrect Application of Formulas

Another common mistake occurs when students use formulas inappropriately. This may involve applying the wrong equation for a given data set or miscalculating variables. It is important to remember that each statistical method is suited for specific types of data or research questions, and selecting the wrong one can lead to inaccurate results.

Overlooking Assumptions

Every statistical model comes with a set of assumptions. Whether it’s normality, homoscedasticity, or independence, these assumptions need to be checked before conducting tests. Ignoring these assumptions can lead to biased conclusions and false inferences.

Neglecting Data Visualizations

Data visualizations are powerful tools for interpreting results, but students often overlook them. Properly using graphs and charts can provide deeper insights and help detect errors in calculations or assumptions. Failing to include or properly interpret visual representations of data can reduce the clarity of answers and analyses.

By being mindful of these common mistakes, students can strengthen their understanding and improve their performance in assessments. Careful attention to detail and a solid grasp of statistical principles are key to avoiding these pitfalls.

How to Interpret Confidence Intervals

Confidence intervals provide a range of values that are used to estimate the true value of a population parameter. Understanding how to interpret these intervals is crucial for drawing conclusions from statistical analysis. A properly constructed confidence interval gives insight into the precision and reliability of the estimated parameter, allowing you to assess how well the sample data represents the population.

Understanding the Basics

When you calculate a confidence interval, the confidence level describes the method rather than any single interval: a 95% procedure produces intervals that capture the true value in about 95% of repeated samples. The level is often set at 95%, but it can vary depending on the desired level of confidence. The wider the interval, the less precise the estimate, and vice versa.

Key Points to Consider

- Interval Range: The interval represents a range of plausible values for the population parameter. For example, if the interval is (10, 20), the true value of the parameter is believed to lie between 10 and 20 with a specified level of confidence.

- Confidence Level: The confidence level (commonly 95% or 99%) is the long-run proportion of intervals, built by the same method, that would contain the true parameter. A 95% confidence interval means that if you were to repeat the study many times, about 95% of the intervals calculated would contain the true parameter.

- Interpretation of Results: If a confidence interval for the mean difference between two groups is (3, 7), it suggests that the true difference in means is likely between 3 and 7. Because zero lies outside this interval, you might conclude that there is a statistically significant difference between the groups at the corresponding significance level (α = 0.05 for a 95% interval).

- Interval Width: Narrow intervals indicate high precision, whereas wider intervals suggest more uncertainty. Increasing the sample size narrows the interval, so a wide interval may indicate the need for more data to obtain a clearer result.
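The repeated-sampling interpretation of a confidence level can be checked by simulation. This sketch assumes a normally distributed population with known mean and σ (hypothetical values μ = 50, σ = 10), draws many samples of size 25, builds a 95% Z-interval from each, and counts how often the interval captures μ; the result should land close to 0.95.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def coverage_rate(mu, sigma, n, confidence=0.95, repetitions=4000, seed=1):
    """Share of repeated Z-intervals (σ known) that contain the true mean μ."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sigma / sqrt(n)
    hits = 0
    for _ in range(repetitions):
        x_bar = fmean([rng.gauss(mu, sigma) for _ in range(n)])
        if x_bar - margin <= mu <= x_bar + margin:
            hits += 1
    return hits / repetitions


# Hypothetical population: normal with μ = 50 and σ = 10; samples of size 25
rate = coverage_rate(mu=50, sigma=10, n=25)
print(f"{rate:.3f} of the intervals contained μ")
```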

Practical Application

In practice, interpreting confidence intervals allows you to evaluate the uncertainty in your results. For instance, in a clinical study measuring the effectiveness of a treatment, if the confidence interval for the mean reduction in symptoms does not include zero, it could indicate that the treatment has a statistically significant effect. If the interval includes zero, the data are consistent with no effect, although they do not prove that the treatment is ineffective; a wide interval that includes zero may simply reflect too little data.

By understanding how to interpret confidence intervals, you can make more informed decisions about the reliability of statistical estimates and the strength of the evidence. This is crucial in both research and practical applications, where the interpretation of data can influence important conclusions and decisions.

Calculating and Understanding Variance

Variance is a key measure of how spread out or dispersed a set of data points is. It tells you how much individual values in a dataset deviate from the mean, providing insight into the data’s consistency or variability. A higher variance indicates greater spread, while a lower variance suggests that the data points are closer to the mean. Understanding variance is essential for interpreting statistical results and making informed decisions based on data.

How to Calculate Variance

The calculation of variance involves several key steps. First, the mean of the dataset is found. Then the mean is subtracted from each data point and the difference is squared. These squared differences are summed, and the result is divided by the number of data points (for a population) or by the number of data points minus one (for a sample). The formulas are as follows:

- Population variance: σ² = Σ(xᵢ – μ)² / N

- Sample variance: s² = Σ(xᵢ – x̄)² / (n – 1)

Where:

- σ²: Population variance

- s²: Sample variance

- xᵢ: Each individual data point

- μ: Population mean

- x̄: Sample mean

- N: Total number of data points (population)

- n: Sample size
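The two formulas differ only in the divisor, which this Python sketch makes explicit by comparing hand-written versions against the standard library's `pvariance` and `variance`. The data set is hypothetical.

```python
from statistics import pvariance, variance


def population_variance(values):
    """σ² = Σ(xᵢ - μ)² / N"""
    mu = sum(values) / len(values)
    return sum((x - mu) ** 2 for x in values) / len(values)


def sample_variance(values):
    """s² = Σ(xᵢ - x̄)² / (n - 1)"""
    x_bar = sum(values) / len(values)
    return sum((x - x_bar) ** 2 for x in values) / (len(values) - 1)


data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical data set
print(population_variance(data), pvariance(data))
print(sample_variance(data), variance(data))
# The square root returns to the original units (standard deviation)
print(population_variance(data) ** 0.5, sample_variance(data) ** 0.5)
```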

Interpreting Variance

Once you have calculated the variance, it is important to understand its implications. A higher variance means that the data points are more spread out, indicating greater variability in the dataset. A lower variance suggests that the values are closer to the mean, indicating less variability. Variance alone can be difficult to interpret because it is in squared units, making it hard to compare directly to the original data. To make the results more meaningful, you can take the square root of the variance to obtain the standard deviation, which is in the same units as the original data.

Variance plays a crucial role in many statistical analyses, including hypothesis testing and the construction of confidence intervals. It helps you understand the degree of uncertainty in your estimates and is fundamental for assessing the reliability of statistical conclusions.

Tips for Time Management During Exams

Effective time management is essential when preparing for any type of assessment. It ensures that you allocate sufficient time to each section, avoid rushing through tasks, and ultimately enhance your performance. Proper planning and a clear strategy can help you stay organized, reduce stress, and ensure you complete all the required tasks within the time limit.

Key Time Management Strategies

Below are several proven strategies that can help you manage your time more effectively during a test:

- Prioritize Your Tasks: Start by quickly reviewing the entire assessment and identify sections that are more complex or carry more weight. Tackle the challenging parts first when you’re most focused.

- Set Time Limits: Allocate a specific amount of time for each question or section. Stick to these limits to avoid spending too much time on any one part.

- Practice Under Time Constraints: Before the actual assessment, simulate real exam conditions. Practice solving problems within a set time to become familiar with pacing yourself.

- Stay Calm and Focused: If you get stuck on a difficult question, don’t panic. Move on to the next one and come back to the challenging question later with a fresh mind.

- Review Your Work: If time allows, leave a few minutes at the end to review your answers and make sure you haven’t missed anything important.

Time Allocation Table

The following table provides a sample time allocation guide based on the number of questions or sections in a typical assessment:

| Section | Time Allocation | Strategy |
|---|---|---|
| Introduction or Instructions | 5-10 minutes | Read thoroughly, plan your approach |
| Simple Questions | 1-2 minutes per question | Quickly read and answer |
| Complex Problems | 5-10 minutes per question | Allocate more time, but avoid overthinking |
| Review Time | 5-10 minutes | Check for errors, revisit difficult questions |

By following these strategies, you can effectively manage your time, ensure that you cover all the material, and reduce the likelihood of making careless mistakes. Remember, practice and preparation are key to mastering time management in any type of assessment.

Reviewing Past Papers Effectively

Analyzing previous assessments is one of the best strategies for improving performance. By thoroughly reviewing past materials, you can identify patterns in the types of tasks asked, understand the areas that require more attention, and build confidence in your approach. This method not only helps familiarize you with the format but also allows you to practice under realistic conditions, which can reduce anxiety during the actual assessment.

Steps for Effective Review

Follow these structured steps to make the most of reviewing previous materials:

- Identify Common Themes: Go through past tasks and identify recurring topics. This will help you focus your revision on the most important subjects.

- Understand the Structure: Pay attention to how questions are typically structured, the common instructions, and the format. This will make it easier to approach similar tasks in the future.

- Time Yourself: Try completing past papers under timed conditions. This will help you understand how long to spend on each section and improve your time management skills.

- Analyze Mistakes: When reviewing your past attempts, focus on the mistakes made. Understand why they happened and how you can correct them next time.

- Seek Clarification: If there are any concepts or tasks that you don’t fully understand, make sure to seek help or review relevant materials to reinforce your knowledge.

Sample Review Strategy Table

The following table outlines a basic strategy for reviewing previous papers effectively:

| Step | Time Allocation | Focus Area |
|---|---|---|
| Initial Review | 15-20 minutes | Skim through the paper to get an overview |
| Deep Analysis | 30-45 minutes | Focus on individual tasks, understand structure |
| Practice Under Timed Conditions | 1 hour | Recreate assessment conditions to test speed and accuracy |
| Review Mistakes | 20-30 minutes | Analyze errors, revisit concepts, and apply corrections |

By systematically reviewing previous materials and practicing with real-world tasks, you’ll improve both your understanding and your ability to perform under pressure. Consistency in this approach will lead to greater mastery over the subject matter.

Best Resources for Exam Preparation

When preparing for assessments, selecting the right materials is crucial for success. Resources range from textbooks to online courses, providing a variety of formats to suit different learning styles. By choosing high-quality materials, you can deepen your understanding of the core concepts and apply them effectively during the evaluation. This section highlights the best tools available for effective preparation.

Top Books for Study

Books are a great starting point for building a solid foundation. They offer structured content that helps break down complex topics into digestible sections. Some excellent titles include:

- Introduction to Statistical Methods: This book provides clear explanations of key concepts and is ideal for beginners.

- Advanced Statistical Analysis: For more in-depth study, this resource covers advanced techniques and their real-world applications.

- Statistical Inference and Decision Making: A comprehensive guide focusing on inference and making decisions based on data analysis.

Online Learning Platforms

For those who prefer interactive and flexible learning, online courses are an excellent option. Some popular platforms include:

- Coursera: Offers courses from top universities, featuring expert-led lectures, quizzes, and peer-reviewed assignments.

- edX: Provides courses that can often be audited for free, with optional paid certificates, covering a wide range of materials tailored to various levels of expertise.

- Khan Academy: Known for its accessible tutorials and exercises, this platform breaks down difficult concepts into easy-to-understand segments.

Practice and Review Tools

To improve test-taking skills and apply theoretical knowledge, it’s important to practice regularly. These tools provide a wealth of sample problems and solutions:

- Past Papers: Reviewing previous tasks from past evaluations will give you a sense of the format and question types you can expect.

- Online Problem Solvers: Websites offering problem sets with step-by-step solutions help you practice in a structured way.

- Interactive Quizzes: These allow you to test your knowledge under timed conditions, simulating the pressure of real assessments.

Combining these resources will help you develop a deep understanding of the material and improve both your theoretical knowledge and practical application. By utilizing a mix of books, online platforms, and practice tools, you’ll be well-equipped to tackle any challenge in your upcoming evaluations.