The ability to efficiently manage system resources is essential in modern computing. A core part of this is how the system handles data storage, providing an illusion of vast, continuous space. This concept is fundamental to the functioning of operating systems, allowing them to run multiple tasks simultaneously without running out of space.

When preparing for assessments on this topic, it is crucial to grasp the mechanics behind data division and management strategies. Key components such as address translation, page swapping, and efficient resource allocation are often tested. A strong grasp of these principles will provide the clarity needed to tackle any related questions confidently.

By breaking down complex mechanisms into manageable sections, learners can build a solid foundation for addressing these concepts in both theoretical and practical contexts. Understanding the underlying processes, their benefits, and the potential drawbacks will give you the edge when preparing for your upcoming evaluation.

Virtual Memory Overview for Exams

Effective resource management is a key aspect of modern computing systems. A method exists that allows computers to simulate having more space than physically available, ensuring smooth multitasking and process isolation. Understanding how this system operates is vital for anyone preparing to tackle related topics in assessments.

Core Concepts

The following concepts are central to understanding how data is handled in a managed environment:

- Address translation techniques

- Process isolation and protection

- Storage abstraction methods

- Efficient allocation of system resources

Key Mechanisms

Several mechanisms work together to make this system effective:

- Page tables that map logical to physical addresses

- Swapping processes between storage and active memory

- Segmentation for dividing a program into logical units such as code, data, and stack

By mastering these elements, you will be well-equipped to understand the practical applications and theoretical implications, which will be critical for your studies and future tests on the subject.

Key Concepts in Virtual Memory

When discussing efficient data handling within a computer system, there are several foundational principles that help ensure optimal performance. These principles are designed to improve the ability to run multiple processes simultaneously while maintaining the integrity of each task. Understanding these fundamental ideas is essential for grasping the underlying structure of how computing systems manage resources.

Important Ideas to Grasp

The following concepts are central to this field:

- Address Mapping: How logical addresses are converted to physical addresses.

- Process Isolation: Ensures that each process operates independently and securely.

- Efficient Resource Allocation: Techniques used to distribute resources dynamically.

Key Components

The system’s ability to manage data effectively relies on several core elements. These components work together to simulate more space than physically available, allowing for improved performance:

| Component | Function |
|---|---|
| Page Tables | Map logical addresses to physical locations in storage. |
| Swapping | Move processes between active space and storage when needed. |
| Segmentation | Divide a program into logical, variable-sized sections such as code, data, and stack. |

Mastering these components and concepts will enable a deeper understanding of how data is efficiently managed in a computing environment, which is crucial for succeeding in any related assessments.

Understanding Page Tables and Paging

Efficiently managing the large amounts of data in a computing system requires a structured approach to map tasks and store information. A critical aspect of this management is the method that allows the division of data into smaller chunks, making it easier to handle and access. This process ensures that resources are used efficiently while providing the system with the flexibility to run multiple processes simultaneously.

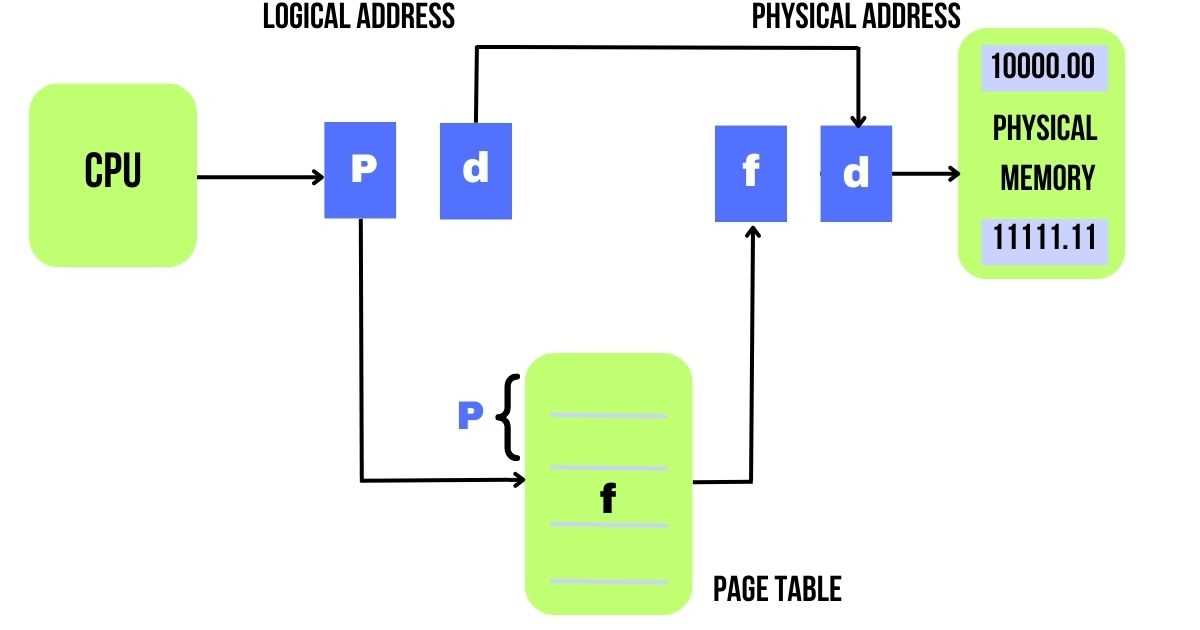

How Page Tables Work

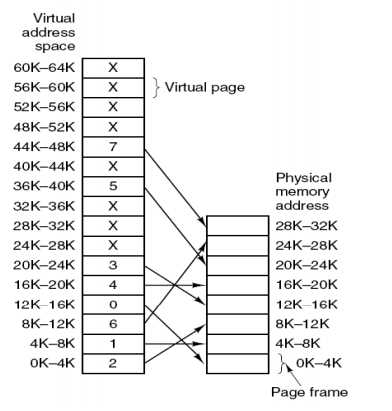

Page tables play a pivotal role in translating logical addresses into physical locations. This method allows the system to handle large amounts of data by breaking it into smaller, manageable pieces known as pages. The translation is done through a system that maps these pages to their corresponding locations in physical storage. Some key points to consider:

- Each entry in a page table corresponds to a single page of data.

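- Pages have a fixed size, commonly 4 KB; some architectures use larger sizes such as 8 KB or 16 KB.

- Each page table entry stores the physical frame that holds the page, usually along with status bits such as whether the page is currently present in memory.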

The Paging Process

Paging is the technique used to divide data into fixed-size blocks, making it easier to store and retrieve from physical storage. When a program accesses data, it uses a logical address, which is then mapped to a physical address through the paging mechanism. The process works as follows:

- The logical address is divided into a page number and an offset.

- The page number is used to find the page’s corresponding entry in the page table.

- The offset specifies the exact location within the page.

By using paging, systems can more effectively allocate storage, manage resources, and run multiple processes without running into issues of space or fragmentation.
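The page number and offset split described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular operating system implements it: the 4 KB page size, the `page_table` contents, and the `translate` function name are all assumptions made for the example.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (4 KB)

# Hypothetical page table for one process: page number -> frame number
page_table = {0: 5, 1: 2, 2: 7}


def translate(logical_address: int) -> int:
    """Translate a logical address to a physical address via the page table."""
    # Split the logical address into a page number and an offset within the page.
    page_number, offset = divmod(logical_address, PAGE_SIZE)
    # Look up the frame that holds this page (KeyError if the page is unmapped).
    frame_number = page_table[page_number]
    # The offset is unchanged; only the page number is replaced by the frame number.
    return frame_number * PAGE_SIZE + offset


print(translate(4100))  # page 1, offset 4 -> frame 2 -> 2 * 4096 + 4 = 8196
```

Exam questions often ask for exactly this arithmetic by hand: divide the address by the page size to get the page number, keep the remainder as the offset, then substitute the frame number.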

Role of the Memory Management Unit

The effective allocation of system resources relies on the presence of a dedicated unit within the hardware. This unit serves as the intermediary between software and physical storage, ensuring that data is accessed efficiently while also maintaining the integrity of each process. It is crucial in enabling a system to manage large volumes of information without overloading the available resources.

Operating as a critical component in modern computing, this unit performs various essential functions that allow for smooth and uninterrupted operation. It translates logical addresses used by programs into actual physical addresses in storage, ensuring that every piece of data can be properly located. Additionally, it signals the operating system when a requested page is not in active memory, so that the operating system can bring the data in from storage, allowing programs to run without running into space limitations.

By handling tasks like address translation, protection, and the coordination of resources, this unit is integral to ensuring that the system functions efficiently. Its role is key in supporting both the performance and security of the overall system.

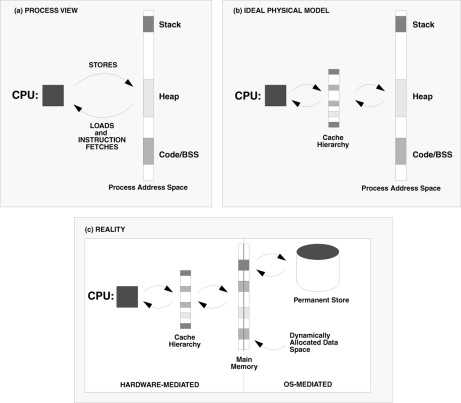

Differences Between Physical and Virtual Memory

In modern computing systems, data is managed through two distinct layers that serve different purposes. One refers to the actual hardware component where data is stored, while the other creates an abstraction, making it appear as though there is more space than physically available. These two layers work together to ensure smooth operation, yet they differ in how they handle data and address management.

The primary distinction between these two types of storage is that one represents the actual physical space available within a system, while the other represents an illusion of continuous, limitless space. The first is limited by the hardware, while the second allows the system to handle more tasks and larger datasets by dividing them into smaller, more manageable segments. Despite their differences, both are essential for the overall efficiency of a system.

Understanding the roles of each is critical for grasping how computing systems balance performance and resource usage. The first is more concerned with speed and direct access, while the second enables flexibility and scalability, allowing multiple processes to share limited resources without interfering with each other.

What is a Page Fault?

When a program attempts to access data that is not currently loaded into the active processing space, it encounters an issue that requires intervention from the system. This event, which occurs when the requested data is not where it is expected to be, triggers a series of steps to resolve the situation and retrieve the missing information.

This occurrence, known as a page fault, is a normal part of a system’s operation. It happens when the software tries to access a portion of data that has been swapped out of active space or has not yet been loaded into the system. Upon detecting this fault, the system pauses the program and retrieves the needed data from storage, bringing it into the active space so that the program can continue its execution.

While page faults are a normal part of managing resources efficiently, an excessive number of them can lead to thrashing, where the system spends more time moving pages in and out of storage than executing programs. Understanding how these faults occur and how they are handled is critical for optimizing system performance.
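The fault-then-load sequence can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: the `backing_store` and `resident` dictionaries stand in for secondary storage and physical memory, there is always a free frame, and `read_page` is a hypothetical name.

```python
# Hypothetical backing store (e.g. disk): page number -> contents
backing_store = {0: "code", 1: "data", 2: "stack"}
resident = {}    # pages currently loaded in physical memory
fault_count = 0  # number of page faults seen so far


def read_page(page: int) -> str:
    """Return a page's contents, loading it from the backing store on a fault."""
    global fault_count
    if page not in resident:
        # Page fault: the page is not in memory, so the system loads it.
        fault_count += 1
        resident[page] = backing_store[page]
    # The access is retried and now succeeds.
    return resident[page]


for page in [0, 1, 0, 2, 1]:
    read_page(page)
print(fault_count)  # 3: only the first touch of each page faults
```

Repeated accesses to a page that is already resident cost nothing extra, which is why a fault on first use is considered normal rather than an error.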

How Segmentation Works in Virtual Memory

In modern systems, efficient data management requires dividing information into smaller, more manageable parts. One technique used to achieve this is segmentation, which organizes data into logical units, making it easier to process and retrieve. This approach helps ensure that tasks and processes are handled independently while also allowing them to share system resources effectively.

Key Concepts of Segmentation

Segmentation works by dividing data into segments, each with a specific purpose. These segments can vary in size and are tailored to meet the needs of the running program. Some of the key segments include:

- Code Segment: Contains executable instructions for the program.

- Data Segment: Holds variables and constants used by the program.

- Stack Segment: Used for function calls, local variables, and control information.

- Heap Segment: Manages dynamically allocated memory.

Benefits of Segmentation

By organizing data into logical sections, segmentation offers several advantages:

- Improved organization and easier management of program data.

- More flexible allocation of system resources, allowing better optimization.

- Increased security by isolating different segments from each other.

While segmentation provides many benefits, segments vary in size, so it also requires careful management to avoid external fragmentation and ensure optimal performance. Understanding how segmentation works is crucial for grasping how modern systems handle complex data efficiently.
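Segment-based translation is commonly described as a base-plus-offset calculation with a bounds check, which is also where the isolation benefit comes from. The sketch below assumes that model; the `segment_table` values, the `SegmentationFault` exception, and the `translate_segment` name are invented for illustration.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    base: int   # starting physical address of the segment
    limit: int  # segment length in bytes


class SegmentationFault(Exception):
    """Raised when an offset falls outside its segment (hypothetical name)."""


# Hypothetical segment table for one process
segment_table = {
    "code": Segment(base=0x1000, limit=0x0400),
    "data": Segment(base=0x2000, limit=0x0200),
    "stack": Segment(base=0x8000, limit=0x0100),
}


def translate_segment(segment: str, offset: int) -> int:
    """Translate (segment, offset) to a physical address with a bounds check."""
    seg = segment_table[segment]
    if not 0 <= offset < seg.limit:
        # The access would leave the segment, so it is rejected.
        raise SegmentationFault(f"offset {offset:#x} outside segment '{segment}'")
    return seg.base + offset


print(hex(translate_segment("data", 0x10)))  # 0x2010
```

Because every access is checked against the segment's limit, a stray offset in one segment cannot reach into another.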

Swapping Mechanism Explained

In a system where resources are limited, efficient data management becomes essential to ensure that multiple processes can run concurrently without overwhelming the available capacity. One key technique used to achieve this is the swapping mechanism. This process allows the system to move data between the active workspace and storage, making room for other tasks while maintaining overall system functionality.

Swapping works by transferring portions of a program or data that are not actively needed from the processing area to a secondary storage device. When these sections are required again, the system retrieves them from storage and places them back into the active area. This dynamic movement of data helps to ensure that the system can run several processes even with limited resources.

Through swapping, a system can balance the active use of resources, providing an illusion of abundant space while minimizing delays. However, if the swapping process becomes excessive, it may lead to performance degradation, a phenomenon known as thrashing. In such cases, the system spends too much time moving data in and out of storage instead of executing tasks, which can slow down the entire system.

Key points to remember about swapping:

- Efficiency: It allows the system to handle more processes than could fit into the active space at one time.

- Performance Trade-offs: Frequent swapping can lead to performance bottlenecks and system delays.

- Resource Management: Ensures that resources are allocated efficiently, maximizing the system’s throughput.
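The performance trade-off can be made concrete with a small simulation. The sketch below treats swapping at page granularity and assumes a first-in, first-out (FIFO) choice of what to move out; both are simplifications chosen for the example, and `count_faults` is a hypothetical helper.

```python
from collections import deque


def count_faults(references, num_frames):
    """Count page faults under FIFO replacement with a fixed number of frames."""
    frames = deque()  # resident pages, oldest on the left
    faults = 0
    for page in references:
        if page not in frames:
            faults += 1
            if len(frames) == num_frames:
                frames.popleft()  # move the oldest resident page out
            frames.append(page)   # bring the requested page in
    return faults


# A process that loops over four pages, five times
references = [0, 1, 2, 3] * 5

print(count_faults(references, 4))  # 4: everything fits, only first touches fault
print(count_faults(references, 3))  # 20: every single access faults
```

Removing just one frame takes this workload from 4 faults to 20, which is the pattern behind thrashing: once the actively used pages no longer fit, nearly every access triggers a transfer.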

Examining the Translation Lookaside Buffer

In modern computing systems, efficient data retrieval is key to maintaining fast processing speeds. One of the methods used to accelerate this process is the use of a specialized cache, designed to hold frequently accessed information. This cache, known as the Translation Lookaside Buffer (TLB), plays a crucial role in optimizing address translation, making it quicker and more efficient for the system to retrieve necessary data.

The TLB functions as a small, fast storage area that temporarily holds recently used address mappings. When a program requests data, the system first checks the TLB to see if the relevant address translation is already cached. If it is, the physical address is available immediately, avoiding the time-consuming process of walking the page table in main memory. If the required mapping is not in the TLB, the system must consult the page table, which takes longer.

This mechanism greatly improves performance by reducing the time needed for address translation, especially in systems where large amounts of data are handled. However, the effectiveness of the TLB depends on how often its cached mappings are reused and how frequently address mappings change. A high miss rate, where the required translation is often missing from the cache, can lead to slower performance and increased system load.

How Address Translation Functions

In computing systems, translating between different types of addresses is a critical process that ensures data is accessed efficiently. When a program runs, it relies on addresses to locate data, but these addresses may need to be mapped from one form to another for proper execution. Address translation enables this mapping, converting logical addresses used by applications into physical addresses that point to the actual locations in hardware.

The address translation process involves multiple steps, where the system checks a mapping structure to find the physical location of the requested data. This conversion helps streamline the execution of programs, allowing for more efficient use of resources and greater flexibility in managing data. By translating logical addresses to physical ones, systems can effectively handle more complex workloads without overloading the hardware.

Key Steps in Address Translation

Here’s a simplified overview of how address translation typically works:

| Step | Action |
|---|---|
| 1 | The program requests access to a specific address. |
| 2 | The system checks the translation table to find the corresponding physical address. |
| 3 | If a valid mapping is found, data is retrieved from the physical location; if not, a page fault is raised and the operating system loads the page before the access is retried. |
| 4 | The data is returned, and the program continues execution. |

Why Address Translation Matters

Without address translation, programs would need to know the exact locations of data in hardware, making them less flexible and more prone to errors. This process not only increases efficiency but also enhances security by isolating applications and ensuring they access only their own data. By allowing programs to work with logical addresses, the system can manage resources more effectively and prevent issues like memory conflicts or corruption.

Virtual Memory Allocation Strategies

Efficient resource management is essential in any computing system, especially when multiple processes require access to limited hardware resources. One way to manage these resources is by employing different allocation strategies that optimize how space is assigned and used. These strategies help ensure that each process receives the necessary resources while maintaining system performance and avoiding conflicts.

Different allocation methods are designed to cater to various needs of a system, depending on the type and frequency of tasks being processed. Some methods allocate space in fixed sizes, while others allow for dynamic adjustments based on the system’s current load. Understanding these strategies is crucial for optimizing performance and ensuring that the system runs smoothly under heavy workloads.

Common Allocation Strategies

Below are some of the most widely used strategies for assigning space in a system:

| Strategy | Description |
|---|---|
| Contiguous Allocation | Allocates a single continuous block of space to a process, simplifying the management of resources but potentially causing external fragmentation. |
| Paged Allocation | Divides data into fixed-size pages, which are then mapped to available physical space, avoiding external fragmentation, though the last page of an allocation may be partly unused (internal fragmentation). |
| Segmented Allocation | Divides memory into variable-sized segments based on the logical divisions of a program, offering flexibility but requiring complex management. |
| Dynamic Allocation | Allocates space dynamically based on demand, allowing the system to adjust as needed, though it can be more complex to manage. |

Each of these strategies has its strengths and weaknesses, depending on the workload, resource availability, and desired performance. The choice of which to use is typically based on the specific requirements of the system or application being supported.
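One trade-off of paged allocation is easy to quantify: a process is rounded up to a whole number of pages, so part of its last page may go unused. The sketch below works through that arithmetic, assuming a 4 KB page size; `paged_allocation` is a hypothetical helper.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def paged_allocation(process_size: int) -> tuple[int, int]:
    """Return (pages needed, bytes left unused in the last page)."""
    pages = (process_size + PAGE_SIZE - 1) // PAGE_SIZE  # round up to whole pages
    wasted = pages * PAGE_SIZE - process_size
    return pages, wasted


print(paged_allocation(10_000))  # (3, 2288)
```

A 10,000-byte process needs three pages (12,288 bytes), leaving 2,288 bytes unused. That waste stays inside the process's own last page, so it never blocks another process from being placed.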

Impact of Virtual Memory on Performance

Efficient use of system resources is essential for achieving optimal performance in any computing environment. The allocation of space and management of processes can greatly influence the speed and responsiveness of a system. By providing the ability to use more space than is physically available, this resource management technique can improve overall efficiency, but it can also introduce delays under certain conditions.

When multiple programs require access to limited resources, using an advanced management technique can help avoid bottlenecks and ensure smooth operation. However, when systems need to frequently swap data between storage and active processes, performance may degrade. Understanding the balance between resource allocation and system demand is key to optimizing performance.

Positive Impacts on Performance

The use of resource management techniques has several benefits when used correctly:

- Increased Flexibility: Allows applications to use more space than is physically available, enabling larger and more complex programs to run efficiently.

- Improved Multitasking: Supports multiple programs running simultaneously without requiring each to fit entirely into physical storage.

- Better Resource Utilization: Ensures that idle or less-used resources are available for other tasks, preventing waste.

Challenges and Performance Drawbacks

However, there are also certain challenges that can reduce system performance:

- Increased Latency: When data must be swapped between active processes and secondary storage, it introduces delay, which can impact overall speed.

- Fragmentation Issues: Over time, the allocation of space can become fragmented, which can reduce efficiency and cause unnecessary delays in accessing data.

- Overhead from Management: Complex systems of managing allocated resources may require significant overhead, particularly under high-demand conditions.

Understanding these effects and carefully tuning the system’s resource management can help strike the right balance between flexibility and performance. By optimizing these strategies, a system can maintain high throughput without compromising responsiveness or stability.
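The latency cost is often estimated with a weighted average: with fault rate p, the effective access time is (1 - p) times the memory access time plus p times the fault service time. The sketch below applies that formula; the 100 ns and 8 ms figures are assumed round numbers for illustration, not measurements.

```python
def effective_access_time(fault_rate: float, memory_ns: float,
                          fault_service_ns: float) -> float:
    """Average access time in nanoseconds for a given page fault rate."""
    return (1 - fault_rate) * memory_ns + fault_rate * fault_service_ns


# Assumed figures: 100 ns per memory access, 8 ms (8,000,000 ns) to service a fault
no_faults = effective_access_time(0.0, 100, 8_000_000)
one_in_thousand = effective_access_time(0.001, 100, 8_000_000)

print(no_faults)               # 100.0
print(round(one_in_thousand))  # 8100
```

Under these assumptions, one fault per thousand accesses makes the average access roughly 80 times slower, which is why keeping the fault rate low matters so much.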

Common Virtual Memory Exam Mistakes

When preparing for assessments on system resource management, students often encounter several common pitfalls that can affect their performance. These errors usually stem from misunderstandings or misinterpretations of how the system handles resources like space allocation, data swapping, and process isolation. Recognizing these mistakes can help prevent them and improve understanding of the core concepts.

One of the most frequent issues involves confusing key terms and their roles in a system’s functioning. Another mistake occurs when students overlook the practical aspects of how different components work together to manage tasks and handle memory-related operations effectively. By understanding these common mistakes, learners can better grasp the importance of efficient resource management.

Confusing System Components

Many learners mix up important components and their responsibilities. Some common confusions include:

- Physical vs. Logical Resources: Misunderstanding the difference between actual hardware resources and the abstracted resources used by processes.

- Misinterpreting Process Isolation: Failing to fully grasp how the system isolates one process from another, which could lead to mistakes when explaining security and process management.

- Confusing Data Caching and Swapping: Mixing up these techniques and their impact on performance can lead to a lack of clarity in how data is moved between storage and active processes.

Overlooking Key System Operations

Another common mistake is neglecting to study essential operational processes involved in system management:

- Underestimating Fragmentation: Failing to account for how space allocation can lead to fragmentation, which negatively affects performance over time.

- Ignoring Latency Issues: Not recognizing how delays from swapping data between active memory and secondary storage can impact overall system responsiveness.

- Overlooking Optimization Techniques: Forgetting to focus on system optimizations such as page replacement algorithms, which can influence overall efficiency.

By recognizing and addressing these common mistakes, students can improve their comprehension of the topic and approach assessments with greater confidence and accuracy.

Preparing for Virtual Memory Exam Questions

Effective preparation for assessments on system resource management requires a deep understanding of how processes interact with underlying hardware and the techniques used to optimize resource usage. A key part of this preparation is familiarizing yourself with the concepts, structures, and operations that govern the allocation and handling of resources. By understanding these principles, you can approach questions with clarity and confidence.

To excel in these assessments, it’s important to focus on both the theoretical knowledge and practical applications of the topics. Understanding how different components like paging, segmentation, and the memory management unit work together is crucial for answering complex questions. Additionally, practicing problem-solving techniques and scenario-based questions can further prepare you for any challenges that may arise during the assessment.

Mastering Core Concepts

Start by focusing on the fundamental principles that will form the basis of most questions. Some key areas to concentrate on include:

- Resource Allocation Strategies: Understand how resources are allocated, swapped, and managed across different processes.

- Address Translation: Learn how logical addresses are mapped to physical addresses and the importance of this process in system performance.

- Memory Protection Mechanisms: Study how the system ensures that one process does not interfere with the memory of another, providing security and stability.

Practical Applications and Problem Solving

Alongside theoretical knowledge, it is essential to practice solving practical problems related to resource management. Some strategies for practice include:

- Solving Scenario-Based Problems: Work through real-world scenarios to understand how theory applies to actual system behavior.

- Revisiting Mistakes: Learn from past errors or challenges you’ve encountered to prevent similar issues in the future.

- Mock Assessments: Take timed practice tests to improve your speed, accuracy, and ability to handle pressure during the actual assessment.

By mastering the core concepts and practicing with real-world problems, you’ll be well-equipped to tackle any challenge that may come your way during the evaluation process.

Practical Examples of Virtual Memory Use

Understanding the practical applications of resource management is key to grasping how modern systems efficiently allocate and utilize resources. Various techniques and processes rely heavily on this concept to ensure smooth and efficient operation, especially when handling multiple tasks or large applications. By studying real-world examples, one can gain a deeper understanding of how these mechanisms function in practice.

Here are a few examples where these techniques are commonly applied:

- Multitasking Environments: In systems where multiple processes are running simultaneously, resources are dynamically allocated to ensure each process has access to the necessary resources. This approach helps in optimizing system performance while maintaining stability.

- Running Large Applications: When handling large programs or software that require significant amounts of resources, such as video editing or 3D rendering, this mechanism ensures that the system can efficiently allocate space as needed, even if the physical resources are limited.

- Web Servers and Databases: Server-side applications often require efficient memory management to process hundreds or thousands of user requests simultaneously. This technique allows servers to handle larger loads without running out of available space, ensuring continued performance.

Common Techniques Used in Practice

- Paging: This technique divides data into smaller, manageable blocks that can be moved to and from the primary storage as needed. This allows applications to access data without overloading the system’s capacity.

- Segmentation: This approach is used to divide a program’s code or data into segments, each of which can be handled independently. This enables more flexible management of larger applications.

- Swapping: When the system reaches its capacity, less active processes are moved to secondary storage, allowing active ones to continue running without interruption.

By understanding these examples, it becomes clear how efficient resource management plays a critical role in maintaining optimal performance and ensuring that systems can handle a variety of tasks without crashing or slowing down.