As technology continues to evolve, the need for understanding the core principles of computer environments has never been greater. Whether you’re preparing for a technical certification or reviewing essential topics for an academic assessment, a solid grasp of fundamental concepts is crucial. This guide aims to provide clarity on various subjects you will encounter in your preparation journey.

In-depth comprehension of concepts such as resource management, data storage, and network communication forms the backbone of your success. With a focus on practical applications and key theoretical knowledge, this section will help you build a strong foundation, addressing the most important aspects of the field. From memory allocation to process control, each topic offers insights into how devices and software interact to provide the functionalities we rely on daily.

By breaking down complex topics into digestible parts, this resource will guide you through critical areas, giving you the tools needed to approach each subject with confidence. Prepare yourself to tackle the challenges that may arise, and boost your ability to demonstrate expertise in any related setting.

Operating Systems Exam Questions and Answers

When preparing for an assessment in the field of computing, it’s essential to focus on understanding key concepts and their practical applications. This section will help you familiarize yourself with common topics you may encounter, covering everything from resource management to data processing. Mastering these principles will allow you to tackle any challenge with confidence, regardless of the specific format or difficulty of the task.

Critical Topics to Focus On

Understanding the core principles behind memory management, process scheduling, and storage organization is vital for success. These areas often feature prominently in assessments, requiring a clear grasp of how each component functions independently and in relation to one another. Additionally, network communication and security measures are commonly tested, as these are integral to how devices interact and maintain data integrity across platforms.

Practical Insights for Success

To excel in your review, it’s important to focus not only on theoretical knowledge but also on real-world scenarios that reflect how these principles are applied in various settings. By working through different scenarios and examples, you’ll develop a deeper understanding of the material, making it easier to address both basic and advanced challenges. Understanding how to balance theory with practical execution will set you up for success in any evaluation.

Key Concepts in Operating Systems

Understanding the essential principles of how computers manage resources, processes, and data is fundamental to excelling in any technical evaluation. These core concepts form the foundation for addressing more complex topics and real-world applications. By mastering these ideas, you’ll be well-equipped to tackle challenges related to system efficiency, performance, and security.

Resource Management and Control

Resource management involves overseeing the allocation and optimization of critical assets, such as memory, processing power, and storage. These resources must be managed effectively to ensure smooth operation and avoid conflicts or inefficiencies. The ability to handle various processes concurrently, while maintaining the integrity of data, is essential for any advanced computer operation.

Security and Protection Mechanisms

Protection measures are essential for ensuring the integrity of both the system and the data it handles. Security techniques help prevent unauthorized access, protect against malware, and maintain confidentiality. By focusing on encryption, authentication, and user access control, it’s possible to create secure environments for users and applications alike.

| Concept | Description | Importance |
|---|---|---|
| Resource Allocation | Managing memory, processing, and storage efficiently | Ensures system performance and stability |
| Concurrency | Running multiple tasks at the same time | Improves efficiency and resource usage |
| Security | Protecting the system from unauthorized access and threats | Maintains data integrity and user privacy |

Understanding Process Management

Managing tasks effectively is a crucial part of any computing environment. The coordination of multiple activities, including their execution, prioritization, and synchronization, plays a key role in maintaining smooth operation. This process requires a structured approach to allocate resources, handle conflicts, and ensure efficient multitasking.

Key Aspects of Task Coordination

To understand how processes are managed, it’s important to focus on the following fundamental components:

- Process Creation – Initiating tasks and allocating necessary resources.

- Scheduling – Determining the order in which tasks are executed.

- Execution – Carrying out the actual operations of a task.

- Termination – Properly closing processes once completed.

Managing Task Prioritization

Effective prioritization ensures that critical tasks receive the necessary attention while less important ones are deferred or executed when resources allow. The methods used to assign priority can vary depending on the system’s needs, but they all aim to optimize resource use and minimize delays.

- First Come, First Served (FCFS) – Tasks are handled in the order they are requested.

- Shortest Job Next (SJN) – Tasks with the least execution time are prioritized.

- Round Robin – Tasks are assigned a fixed time slice and executed in cycles.

Efficient task management not only improves system performance but also ensures fair resource distribution across different operations. Understanding the core concepts of task handling will significantly enhance your ability to troubleshoot and optimize computing environments.
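
To make the round-robin idea concrete, here is a minimal sketch of how a fixed time slice cycles through a ready queue. The function name `round_robin`, the task names, and the burst times are illustrative assumptions, and every task is assumed to arrive at time 0.

```python
from collections import deque

def round_robin(burst_times, quantum):
    """Return the completion time of each task under round-robin scheduling.

    burst_times: dict mapping a task name to its required CPU time.
    quantum: the fixed time slice given to a task on each turn.
    All tasks are assumed to arrive at time 0.
    """
    remaining = dict(burst_times)
    queue = deque(burst_times)          # ready queue in arrival order
    clock = 0
    completion = {}
    while queue:
        task = queue.popleft()
        run = min(quantum, remaining[task])
        clock += run
        remaining[task] -= run
        if remaining[task] == 0:
            completion[task] = clock
        else:
            queue.append(task)          # not finished: back of the queue
    return completion

print(round_robin({"A": 5, "B": 3, "C": 1}, quantum=2))
# {'C': 5, 'B': 8, 'A': 9}
```

The short task C finishes early even though it arrived last, which is the fairness property round robin is chosen for.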

Memory Allocation Techniques Explained

Efficient distribution of available memory is critical to ensuring the optimal performance of any computing environment. Proper allocation ensures that each process or task has the necessary resources to execute without interfering with others. The method of assigning memory space can significantly impact the speed and reliability of operations, making it essential to understand the different approaches used in managing memory.

Common Allocation Strategies

There are several techniques used to allocate memory, each with its own advantages and limitations. These strategies determine how memory is divided and allocated to processes, as well as how unused space is handled.

- Contiguous Allocation – Allocates a single contiguous block of memory to a process. Simple but can lead to fragmentation.

- Paged Allocation – Divides memory into fixed-size pages and assigns them as needed. This eliminates external fragmentation, though the last page of a process may be only partly used (internal fragmentation).

- Segmented Allocation – Divides memory into segments based on logical divisions, such as code, data, and stack.
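
As a rough illustration of paged allocation, the sketch below translates a virtual address into a physical one through a page table. The 4096-byte page size and the page-to-frame mapping are assumptions chosen for the example, not values from any particular system.

```python
PAGE_SIZE = 4096  # bytes per page; assumed for this example

def translate(virtual_address, page_table):
    """Translate a virtual address to a physical one using a page table.

    page_table maps a virtual page number to a physical frame number.
    Raises KeyError when the page is not mapped (a real system would
    treat this as a page fault).
    """
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame_number = page_table[page_number]
    return frame_number * PAGE_SIZE + offset

# Hypothetical mapping: page 0 -> frame 5, page 1 -> frame 2
page_table = {0: 5, 1: 2}
print(translate(4100, page_table))  # 8196 (page 1, offset 4 -> frame 2)
```

Because any free frame can back any page, a process never needs one large contiguous region of physical memory.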

Fragmentation and Optimization

Over time, memory allocation can lead to fragmentation, where unused spaces are scattered across memory, reducing efficiency. Optimizing memory allocation techniques is crucial to prevent wasted space and ensure smooth operation.

| Allocation Method | Advantages | Disadvantages |
|---|---|---|
| Contiguous | Simple, fast access | Prone to external fragmentation, inflexible |
| Paged | Eliminates external fragmentation, flexible | Page-table overhead, some internal fragmentation |
| Segmented | Logical grouping of code and data | Complex management, external fragmentation between variable-size segments |

Understanding these methods allows for more effective troubleshooting and optimization, ensuring that memory resources are used to their fullest potential. Each technique has its place depending on the needs of the environment and the tasks at hand.
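
The fragmentation problem with contiguous allocation can be shown with a small first-fit allocator. This is a sketch under simple assumptions: free memory is a list of hypothetical `(start, size)` holes, and first-fit is only one of several placement policies.

```python
def first_fit(free_blocks, request):
    """Allocate `request` units from the first free block large enough.

    free_blocks: list of (start, size) tuples, modified in place.
    Returns the start address, or None if no single block fits.
    """
    for i, (start, size) in enumerate(free_blocks):
        if size >= request:
            if size == request:
                free_blocks.pop(i)                       # hole used up entirely
            else:
                free_blocks[i] = (start + request, size - request)
            return start
    return None

free_blocks = [(0, 30), (50, 20), (100, 40)]   # 90 units free in total
print(first_fit(free_blocks, 60))   # None: no single hole holds 60 units
print(first_fit(free_blocks, 25))   # 0: carved from the first hole
```

The first request fails even though 90 units are free overall, because the free space is split into holes that are each too small. That is external fragmentation.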

File System Fundamentals and Structures

A well-structured way of storing, organizing, and accessing data is fundamental to ensuring smooth operation in any computing environment. How files are arranged, named, and retrieved is a key factor in system performance, as well as data integrity. Understanding the underlying organization of data is essential for efficient management and troubleshooting of file storage.

File structures define how data is stored on storage devices and how it can be accessed. The method chosen influences speed, security, and the ability to handle large volumes of data. It is crucial to be familiar with different types of file structures and their characteristics in order to optimize data handling and retrieval.

Common File Structures

The following are some widely used methods for organizing data:

- Flat File Structure – Data is stored in a single, simple file without any hierarchy. This approach is easy to implement but lacks flexibility for large or complex datasets.

- Hierarchical File Structure – Data is organized in a tree-like structure with directories and subdirectories. This structure offers greater organization and scalability but can be complex to manage.

- Relational File Structure – Data is stored in tables with relationships between them, providing flexibility and efficiency for querying and handling large datasets.
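
The hierarchical structure above can be modeled as a tree in which each directory maps names to children. The following sketch uses nested dictionaries as a stand-in for directories; the tree contents and the `resolve` helper are hypothetical.

```python
def resolve(tree, path):
    """Walk a nested-dict directory tree and return the node at `path`.

    Directories are dicts; files are strings holding their contents.
    Raises KeyError if a path component does not exist.
    """
    node = tree
    for part in path.strip("/").split("/"):
        if part:                      # skip the empty component produced by "/"
            node = node[part]
    return node

tree = {"home": {"alice": {"notes.txt": "hello"}}, "etc": {}}
print(resolve(tree, "/home/alice/notes.txt"))   # hello
```

Each lookup descends one level per path component, which is why deep hierarchies stay manageable where a flat namespace would not.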

Each structure serves specific purposes depending on the use case, ranging from simple storage needs to complex data manipulation and retrieval operations. Knowing when to apply each method can significantly enhance performance and make data management more effective.

Types of Operating Systems Overview

Different environments require specialized management of resources, tasks, and interactions between hardware and software. The type of operating system chosen for a machine determines how efficiently it can perform its functions, balance tasks, and allocate resources. Understanding the various types of operating systems is key to selecting the right solution for any given task.

Single-user vs Multi-user Control

Systems can be classified based on the number of users they are designed to support. A single-user environment focuses on providing resources to one user at a time, while multi-user setups allow simultaneous access for multiple individuals or processes.

- Single-user Control – Designed for use by a single person, offering simplicity and focus on personal tasks.

- Multi-user Control – Supports multiple users accessing the system at the same time, requiring more complex management of resources and security.

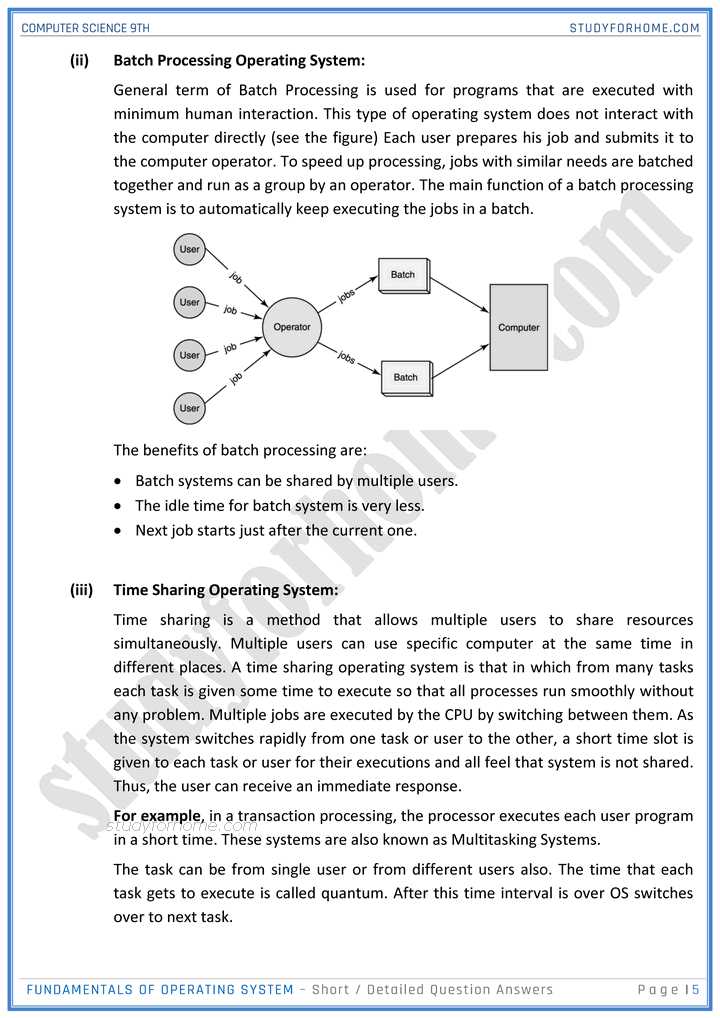

Real-time vs Batch Processing

Another distinction lies in how tasks are processed within the system. Real-time setups respond to inputs within guaranteed time bounds, which is essential for critical applications, while batch environments handle tasks in groups, typically when system load is lower.

- Real-time – Guarantees that processing completes within a defined deadline, ensuring timely completion of high-priority tasks.

- Batch Processing – Processes tasks in groups, making it ideal for non-urgent, scheduled tasks.

Each type of environment offers its own strengths and is best suited for particular applications and requirements. Knowing which system to implement based on workload and user needs can vastly improve efficiency and resource allocation.

Virtualization and Its Importance

In modern computing, the ability to simulate multiple environments or platforms on a single hardware setup has revolutionized resource management, scalability, and efficiency. By creating virtual instances, a single physical device can operate as if it were many, improving flexibility and reducing overhead costs. This method enables organizations to make the most of their infrastructure and optimize workloads.

Virtualization allows for the isolation of different processes or environments, making it easier to test software, run applications in varied configurations, and ensure better utilization of resources. It provides a high level of control over computing environments, improving security, fault tolerance, and ease of management.

Benefits of Virtualization

The following are some key advantages of virtualization:

- Resource Optimization – Virtualization allows for the efficient use of hardware resources by running multiple virtual machines on a single physical device.

- Scalability – It becomes easier to scale up or scale down operations based on demand without the need for additional hardware.

- Isolation – Virtual environments are isolated from one another, enhancing security and ensuring that one application’s issues do not affect others.

- Cost Reduction – By maximizing resource use and reducing hardware needs, organizations can significantly lower infrastructure costs.

Virtualization in Practice

From cloud computing to development environments, virtualization is a critical tool for IT infrastructure. It enables rapid deployment of new services, quicker recovery from failures, and efficient resource allocation, making it an essential component of modern technological environments.

Inter-Process Communication Methods

In any environment where multiple tasks or processes are running concurrently, efficient communication between them is essential. Whether it’s sharing data, synchronizing actions, or passing signals, the methods of interaction between tasks ensure smooth operation and prevent conflicts. These communication techniques are vital for coordinating actions, managing resources, and maintaining system stability.

Common Communication Approaches

There are several methods by which processes can exchange information, each suited to different use cases and environments. These methods are designed to ensure that data is transferred securely and efficiently without compromising performance or reliability.

- Message Passing – A communication method where data is sent between processes in the form of messages. This approach is commonly used in distributed systems where processes are running on separate devices.

- Shared Memory – This method allows multiple processes to access a common region of memory, enabling them to exchange data directly. It’s fast and efficient but requires synchronization mechanisms to prevent conflicts.

- Sockets – Often used for network communication, sockets allow processes on different machines to communicate over a network. This method is particularly useful in client-server architectures.
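
A small sketch of socket-based message passing follows. It assumes a connected pair from `socket.socketpair()` and uses a thread to stand in for the second process so the example fits in one file; real processes on separate machines would connect over a network address instead.

```python
import socket
import threading

def echo_upper(conn):
    """Receive one message, reply with its upper-case form, then close."""
    with conn:
        data = conn.recv(1024)
        conn.sendall(data.upper())

# socketpair() returns two already-connected sockets.
client, server = socket.socketpair()
worker = threading.Thread(target=echo_upper, args=(server,))
worker.start()

client.sendall(b"ping")        # send a message to the other endpoint
reply = client.recv(1024)      # block until the reply arrives
worker.join()
client.close()
print(reply)                   # b'PING'
```

Neither side touches the other's memory; all data crosses the boundary as explicit messages, which is the defining trait of message passing.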

Synchronization Techniques

When multiple processes interact, managing access to shared resources is crucial to prevent conflicts and ensure consistency. The following methods are used to synchronize processes:

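- Semaphores – A synchronization tool that controls access to shared resources using an integer counter. A process that wants the resource decrements the counter and blocks if it is already zero; a process that releases the resource increments it, waking a waiter.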

- Mutexes – Short for mutual exclusion, this method ensures that only one process can access a shared resource at a time, preventing race conditions.
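
The difference between the two tools can be sketched with Python's `threading` module. Here a counting semaphore admits at most two workers at once, while a lock (mutex) guards the bookkeeping variables. Threads stand in for processes, and the limit of two and the sleep duration are arbitrary choices for the example.

```python
import threading
import time

MAX_CONCURRENT = 2
slots = threading.Semaphore(MAX_CONCURRENT)   # counter starts at 2
state_lock = threading.Lock()                 # mutex guarding the counters below
active = 0
peak = 0

def use_resource():
    global active, peak
    with slots:                    # decrement; block while the counter is 0
        with state_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.01)           # simulate work while holding a slot
        with state_lock:
            active -= 1
    # leaving the outer `with` increments the semaphore again

threads = [threading.Thread(target=use_resource) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(peak)                        # never exceeds MAX_CONCURRENT
```

A mutex behaves like a semaphore whose counter can only be 0 or 1, with the extra expectation that the holder is the one who releases it.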

Choosing the right communication method depends on the specific requirements of the environment, including factors like performance, security, and scalability. By understanding the various techniques, system administrators and developers can optimize inter-process communication to improve efficiency and reliability.

Multithreading and Concurrency in OS

In modern computing, the ability to handle multiple tasks simultaneously is essential for improving efficiency and resource utilization. Multithreading and concurrency are two techniques that allow systems to perform various operations at once, providing better performance, responsiveness, and throughput. These methods enable complex applications to run faster and more smoothly by dividing tasks into smaller, manageable chunks that can be processed concurrently.

Multithreading: Managing Parallel Tasks

Multithreading allows a single process to execute multiple threads in parallel, each performing a part of the task. This approach helps maximize the utilization of available resources, especially in multi-core environments, where each thread can run on a separate processor core.

- Thread Creation – Threads are lightweight units of execution within a process. Creating and managing threads efficiently can significantly improve the performance of an application.

- Synchronization – While threads within a process may share data, it’s essential to manage access to shared resources to avoid conflicts. Synchronization tools like mutexes and semaphores are used to control access to these shared resources.

- Context Switching – Saving the state of the running thread (registers, program counter, stack pointer) and restoring the state of another is known as context switching. Doing this rapidly lets the CPU alternate between threads in a way that gives the illusion of simultaneous execution.
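
Thread creation and synchronization can be sketched in a few lines. The example below starts four threads that update one shared counter and uses a lock so that no increment is lost; the thread count and iteration count are arbitrary.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def add_many(n):
    global counter
    for _ in range(n):
        with counter_lock:     # only one thread updates the counter at a time
            counter += 1

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()                   # wait for every thread to finish
print(counter)                 # 40000
```

Without the lock, the read-modify-write in `counter += 1` could interleave between threads and lose updates, which is the race condition that synchronization exists to prevent.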

Concurrency: Efficient Resource Management

Concurrency involves executing multiple tasks or processes in overlapping time periods, not necessarily simultaneously. It’s a way to structure programs so that independent tasks can make progress while others wait, optimizing the overall workflow and reducing idle times.

- Non-blocking I/O – In a concurrent system, tasks like input/output operations can be performed without blocking the execution of other tasks, improving responsiveness.

- Parallelism vs. Concurrency – Parallelism means executing tasks at the same instant, for example on separate cores. Concurrency means several tasks are in progress over the same period, possibly by interleaving on a single core.

- Deadlock Avoidance – In concurrent environments, managing resources and processes is crucial to avoid deadlocks, where tasks are stuck waiting on each other. Proper synchronization is key to preventing such issues.
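
To illustrate concurrency without parallelism, the sketch below runs two tasks on a single thread with `asyncio`. Each task yields control after every step, so their work interleaves. The worker names and step counts are made up for the example.

```python
import asyncio

async def worker(name, steps, log):
    for i in range(steps):
        log.append(f"{name}:{i}")
        await asyncio.sleep(0)      # yield control to the event loop

async def main():
    log = []
    # Both workers are in progress at once, but only one runs at any instant.
    await asyncio.gather(worker("A", 2, log), worker("B", 2, log))
    return log

log = asyncio.run(main())
print(log)   # ['A:0', 'B:0', 'A:1', 'B:1']
```

The same structure is what makes non-blocking I/O useful: while one task waits on a device or network, the event loop runs another.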

By implementing multithreading and concurrency, applications can take full advantage of hardware capabilities, enhancing performance and ensuring more efficient processing of complex tasks. These techniques are fundamental in modern computing, from real-time applications to large-scale distributed systems.

Disk Management and Storage Solutions

Efficient handling of data storage and retrieval is essential in ensuring the smooth operation of any computing environment. Effective management of storage resources enables users to optimize disk space, improve performance, and ensure data reliability. A robust strategy for organizing and maintaining storage devices can help prevent data loss and ensure quick access to critical information when needed.

Key Disk Management Practices

Proper disk management involves organizing, maintaining, and ensuring efficient access to data across various storage devices. The following are key practices that are essential for managing storage effectively:

- Partitioning – Dividing a physical storage device into separate logical sections, known as partitions, helps optimize space usage and allows for more efficient data management.

- Formatting – Formatting a disk prepares it for use by organizing it into a file system that enables data storage and retrieval.

- Disk Optimization – On hard disk drives, regular defragmentation ensures faster access times by storing each file’s blocks contiguously. Solid-state drives have no seek penalty and generally do not benefit from it.

- Backup Solutions – Implementing a regular backup strategy ensures that critical data is stored securely and can be recovered in case of hardware failure or accidental deletion.

Types of Storage Solutions

In addition to disk management practices, choosing the right type of storage solution is crucial for maintaining data integrity and availability. Different types of storage solutions offer varying benefits depending on the use case, including performance, scalability, and redundancy. Below are some common options:

- Local Storage – Devices like hard drives (HDDs) and solid-state drives (SSDs) provide direct access to data stored on a physical device. While cost-effective, they can have limited capacity compared to networked solutions.

- Network-Attached Storage (NAS) – This solution allows multiple devices to access a centralized storage unit over a network, providing shared access to files and data.

- Cloud Storage – Cloud services offer scalable storage solutions that allow data to be accessed remotely from any location. They provide flexibility, redundancy, and offsite backup for critical data.

- RAID (Redundant Array of Independent Disks) – RAID configurations combine multiple drives into a single logical unit to provide redundancy, improved performance, or both. Different RAID levels offer varying trade-offs: RAID 0 stripes data for speed with no redundancy, RAID 1 mirrors data, and RAID 5 stripes data with distributed parity.
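
The redundancy in parity-based levels such as RAID 5 rests on XOR: the parity block is the XOR of the data blocks, so any single lost block can be rebuilt from the others. The sketch below shows only that arithmetic, with three made-up 4-byte blocks; it does not model striping or parity rotation.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte position by byte position."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

data = [b"disk", b"data", b"here"]     # three hypothetical data blocks
parity = xor_blocks(data)              # what a parity block would hold

# Simulate losing the second block, then rebuild it from the rest plus parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)                         # b'data'
```

Mirroring (RAID 1) needs no such calculation because it keeps a full copy, at the cost of using half the raw capacity.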

By selecting the right storage strategy and management practices, organizations can ensure data remains accessible, secure, and efficiently managed, supporting business continuity and performance optimization.

Network Communication in Operating Systems

In today’s interconnected world, the ability for different devices to exchange information efficiently is essential. Communication across networks is fundamental for ensuring that data flows smoothly between multiple machines, enabling remote services, cloud-based applications, and collaborative processes. The underlying mechanisms that govern this communication process are critical for system functionality and performance.

Core Communication Protocols

For devices to communicate over a network, they rely on standardized protocols that define how data is transmitted, received, and managed. These protocols ensure that systems can understand each other and maintain effective interactions, regardless of their location or configuration.

- TCP/IP (Transmission Control Protocol/Internet Protocol) – A fundamental suite of protocols that governs how data is broken into packets, transmitted over the network, and reassembled at the destination.

- UDP (User Datagram Protocol) – A connectionless protocol that allows for faster data transmission but does not guarantee delivery, often used for real-time applications.

- HTTP/HTTPS (Hypertext Transfer Protocol / HTTP Secure) – The foundation of web communication, enabling web browsers and servers to exchange web pages and resources. HTTPS adds TLS encryption to protect the exchange.

- FTP (File Transfer Protocol) – A protocol used for transferring files between systems over a network, providing mechanisms for authentication and file access.

Data Transmission Techniques

Efficient data transmission is key to ensuring smooth and reliable communication between devices. Different techniques are used depending on the requirements of the communication, such as reliability, speed, and error correction.

| Technique | Description | Use Case |
|---|---|---|
| Packet Switching | Data is broken into smaller packets that are transmitted independently and reassembled at the destination. | Used in most modern networks, including the internet. |
| Circuit Switching | A dedicated communication path is established between devices for the duration of the exchange. | Common in traditional telephone systems. |
| Message Switching | Messages are stored temporarily at intermediate points and forwarded when the next point is available. | Used in some legacy systems and email protocols. |

These protocols and techniques form the backbone of network communication, ensuring that devices can share information efficiently and securely. The development of more advanced protocols and methods continues to improve the speed, reliability, and scope of modern networked systems.
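
Packet switching, as described in the table above, can be sketched by splitting a message into numbered packets and reassembling them regardless of arrival order. The packet format here, a `(sequence_number, chunk)` tuple, is a simplification invented for the example rather than any real protocol's layout.

```python
import random

def to_packets(message, size):
    """Split a message into (sequence_number, chunk) packets."""
    return [(seq, message[i:i + size])
            for seq, i in enumerate(range(0, len(message), size))]

def reassemble(packets):
    """Rebuild the message by ordering packets on their sequence number."""
    return b"".join(chunk for _, chunk in sorted(packets))

message = b"packets may arrive out of order"
packets = to_packets(message, size=8)
random.shuffle(packets)                 # simulate independent routing
print(reassemble(packets) == message)   # True
```

TCP performs this kind of reordering for the application, while UDP leaves ordering and loss handling to the program using it.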

Security Measures and OS Protection

As technology evolves, the importance of securing digital environments grows. Ensuring that critical resources are protected from unauthorized access, manipulation, or damage is essential for maintaining the integrity and confidentiality of data. Security measures within an environment are designed to defend against a wide range of threats, from external hackers to internal errors, creating a barrier that prevents compromise.

Common Protection Mechanisms

There are several core approaches implemented to safeguard an environment from potential security breaches. These mechanisms are essential for controlling access, detecting threats, and ensuring safe operations in multi-user and networked contexts.

- Authentication – Verifying the identity of users or devices to ensure only authorized entities can access critical resources.

- Authorization – Defining the level of access granted to a user or device after authentication, ensuring they can only perform permitted actions.

- Encryption – Converting data into a secure format that can only be read by authorized users, protecting it during transmission or storage.

- Firewalls – Systems that monitor and control incoming and outgoing network traffic based on predetermined security rules.
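
As one hedged illustration of authentication, the sketch below stores a salted, slow hash of a password instead of the password itself and verifies a login attempt against it. The iteration count is illustrative only and the function names are hypothetical; production systems should follow current guidance for parameters.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000   # illustrative work factor, not a recommendation

def hash_password(password, salt=None):
    """Return (salt, derived_key) to store instead of the plain password."""
    salt = salt or os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, key

def verify_password(password, salt, expected_key):
    """Re-derive the key and compare it in constant time."""
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, expected_key)

salt, key = hash_password("correct horse")
print(verify_password("correct horse", salt, key))   # True
print(verify_password("wrong guess", salt, key))     # False
```

The random salt means two users with the same password get different stored values, and the constant-time comparison avoids leaking how many bytes matched.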

Advanced Security Strategies

While basic security measures are crucial, more advanced strategies are necessary for defending against evolving threats. These strategies are designed to detect, respond to, and mitigate sophisticated attacks.

- Intrusion Detection Systems (IDS) – Tools designed to monitor network traffic and identify suspicious activities or potential security breaches.

- Access Control Lists (ACLs) – Detailed rules that restrict or allow access to specific resources based on user roles and permissions.

- Sandboxing – Isolating applications or processes from the main environment to limit their potential to affect the entire system if compromised.

- Regular Patching – Updating software components to close vulnerabilities and fix known issues that could be exploited by malicious entities.
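
An access control list reduces to a lookup that denies anything not explicitly granted. The sketch below assumes a hypothetical in-memory ACL keyed by resource and role; real systems store these rules in the file system or a policy service.

```python
# Hypothetical ACL: resource -> role -> set of permitted actions.
ACL = {
    "/var/log/app.log": {"admin": {"read", "write"}, "auditor": {"read"}},
    "/etc/app.conf":    {"admin": {"read", "write"}},
}

def is_allowed(role, action, resource):
    """Return True only if the ACL explicitly grants the action (default deny)."""
    return action in ACL.get(resource, {}).get(role, set())

print(is_allowed("auditor", "read", "/var/log/app.log"))    # True
print(is_allowed("auditor", "write", "/var/log/app.log"))   # False
print(is_allowed("guest", "read", "/etc/app.conf"))         # False
```

Default deny is the important design choice: an unknown role or resource falls through to an empty set rather than to accidental access.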

Together, these measures form a layered approach to security, often referred to as “defense in depth,” where multiple barriers work in tandem to create a more resilient system. The combination of authentication, encryption, monitoring, and proactive updates ensures that environments remain secure against both known and emerging threats.

Scheduling Algorithms and Their Uses

Efficiently managing tasks and ensuring that computing resources are used optimally is a critical aspect of system management. Task allocation must prioritize certain jobs, minimize delays, and maximize the overall performance of the environment. Various strategies, referred to as scheduling algorithms, are employed to decide the order in which tasks should be executed, ensuring that resources are distributed effectively and fairly among all processes.

Types of Scheduling Strategies

Different methods of scheduling address unique challenges depending on system goals, such as fairness, efficiency, or responsiveness. Each approach is designed to manage task execution in a way that aligns with specific performance requirements.

- First Come First Serve (FCFS) – A simple approach where tasks are executed in the order they arrive, without preemption. It is easy to implement but can lead to inefficiencies in certain situations, like when a long task delays shorter ones.

- Shortest Job Next (SJN) – This method prioritizes tasks with the shortest execution time, aiming to reduce the average waiting time. It’s ideal for environments where jobs’ lengths are predictable.

- Round Robin (RR) – This strategy assigns each task a fixed time slice and rotates through them. It is particularly effective in multi-user environments, where fairness is important.

- Priority Scheduling – Tasks are assigned priorities, and those with the highest priority are executed first. This method is useful in systems where some processes need to be given precedence over others.
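
The effect of job order on waiting time can be worked through numerically. This sketch assumes three jobs that all arrive at time 0 and run without preemption; the burst times are a made-up workload in which one long job arrives first.

```python
def average_wait(burst_times):
    """Average waiting time when jobs run back to back in the given order.

    All jobs are assumed to arrive at time 0 and run without preemption.
    """
    waited, clock = 0, 0
    for burst in burst_times:
        waited += clock        # this job waited for everything before it
        clock += burst
    return waited / len(burst_times)

jobs = [24, 3, 3]                       # burst times in arrival order
print(average_wait(jobs))               # FCFS: 17.0
print(average_wait(sorted(jobs)))       # SJN:  3.0
```

Running the short jobs first cuts the average wait from 17 to 3 time units, which is the long-task delay that FCFS is prone to. The trade-off is that under SJN a long job can be postponed repeatedly if short jobs keep arriving.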

Applications of Scheduling Algorithms

Depending on the needs of the system, these algorithms are chosen to ensure smooth operation. Each algorithm has its advantages and is suited for particular types of workloads or environments.

- Real-Time Systems – For systems that require strict timing constraints, such as embedded or control systems, priority scheduling or real-time variants of other algorithms are used to guarantee timely execution.

- Time-Sharing Systems – In environments where multiple users share system resources, round robin is often used to ensure that each user receives a fair amount of processing time.

- Batch Processing – Systems designed for executing large volumes of similar jobs often use FCFS or SJN. Jobs run to completion without user interaction, so simplicity and low average waiting time matter more than responsiveness.

- Interactive Systems – Scheduling algorithms are optimized to ensure that user commands and input are handled with minimal delay, typically using algorithms that balance responsiveness with efficiency.

The choice of scheduling algorithm plays a crucial role in determining system performance, influencing how effectively tasks are managed under varying workloads and conditions. Each method is designed to achieve specific objectives, such as fairness, reduced latency, or optimal throughput, contributing to the overall efficiency of the environment.

Understanding Deadlocks in Systems

In any complex environment where multiple processes or tasks compete for limited resources, a scenario known as a deadlock can occur. This situation arises when two or more tasks are unable to proceed because each one is waiting for resources held by the others, creating a cycle of dependency that leads to a standstill. Understanding how these situations develop, as well as how they can be detected and managed, is essential for maintaining smooth operation in such environments.

Conditions for Deadlock

Deadlocks typically emerge when a set of specific conditions are met, all of which contribute to a situation where tasks become blocked. The four main conditions that must exist simultaneously for a deadlock to occur are:

- Mutual Exclusion – At least one resource must be held in a non-shareable mode, meaning that only one task can use the resource at a time.

- Hold and Wait – A task holding at least one resource is waiting to acquire additional resources that are currently held by other tasks.

- No Preemption – Resources cannot be forcibly taken from tasks holding them; they must be released voluntarily.

- Circular Wait – A circular chain of tasks exists, where each task is waiting for a resource held by the next task in the chain.

Strategies for Dealing with Deadlocks

While deadlocks can severely hinder system performance, several strategies can be employed to detect, prevent, or recover from these situations:

- Prevention – By ensuring that at least one of the four necessary conditions can never hold, deadlocks are ruled out by design. For example, requiring every task to acquire resources in one fixed global order eliminates circular wait.

- Avoidance – The system examines each resource request and grants it only if the resulting state is safe, meaning some order still exists in which every task can finish. The Banker’s algorithm is the classic example. These algorithms require each task to declare its maximum resource needs in advance, which is not always feasible.

- Detection – Systems can periodically check for cycles or other patterns of dependency that may indicate the presence of a deadlock. Once detected, resources can be reclaimed or processes can be terminated to resolve the issue.

- Recovery – When a deadlock is detected, recovery strategies may involve terminating one or more tasks or forcibly taking resources from a process to break the cycle.
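
Detection by checking for cycles can be sketched with a depth-first search over a wait-for graph. The sketch assumes each resource has a single instance, so a cycle implies a deadlock; the task names and the `has_deadlock` helper are hypothetical.

```python
def has_deadlock(wait_for):
    """Return True if the wait-for graph contains a cycle.

    wait_for maps each task to the tasks it is waiting on.
    """
    WHITE, GRAY, BLACK = 0, 1, 2          # unvisited, on current path, finished
    color = {}

    def visit(task):
        color[task] = GRAY
        for other in wait_for.get(task, ()):
            state = color.get(other, WHITE)
            if state == GRAY:             # back edge: a circular wait
                return True
            if state == WHITE and visit(other):
                return True
        color[task] = BLACK
        return False

    return any(color.get(t, WHITE) == WHITE and visit(t) for t in wait_for)

print(has_deadlock({"T1": ["T2"], "T2": ["T3"], "T3": ["T1"]}))   # True
print(has_deadlock({"T1": ["T2"], "T2": ["T3"], "T3": []}))       # False
```

Once a cycle is found, recovery amounts to removing one edge from it, either by terminating a task on the cycle or by taking a resource away from it.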

Efficient management of resource allocation, combined with proactive deadlock detection and resolution strategies, is critical to ensuring uninterrupted operation in environments where multiple tasks or processes are running concurrently. Without these measures, performance can degrade, or even come to a halt, resulting in significant delays and inefficiencies.

Input/Output System Management

Efficient management of data transfer between a computing device and external components is essential for smooth operation. The process involves coordinating both input and output devices, ensuring that data flows correctly to and from memory and other subsystems. A well-structured management approach is critical to avoid bottlenecks, improve performance, and maintain system stability during interactions with peripherals.

Key Functions of Input/Output Management

The role of I/O management includes several important tasks, each designed to facilitate smooth communication between the internal and external components of a system:

- Device Scheduling – Ensures that resources are allocated efficiently, especially when multiple processes request access to I/O devices at the same time.

- Data Buffering – Temporarily holds data in memory to manage differences in speed between the producer and consumer of data, preventing data loss and enhancing performance.

- Data Caching – Involves storing frequently accessed data in high-speed memory to reduce access time and improve the overall speed of data retrieval.

- Device Drivers – Serve as interfaces between the system software and hardware devices, allowing higher-level software to interact with peripherals without needing to know their details.

- Error Handling – Detects and manages errors that occur during I/O operations, for example by retrying a failed transfer or reporting the failure, so that the system can recover gracefully and limit data loss.
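To make the buffering function above concrete, here is a minimal sketch of a fixed-capacity buffer sitting between a fast producer and a slower consumer. The class name `BoundedBuffer` and its methods are hypothetical, and a real kernel buffer would also need locking and blocking behavior that this sketch leaves out.

```python
from collections import deque


class BoundedBuffer:
    """Fixed-capacity FIFO buffer between a producer and a consumer."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = deque()

    def put(self, item):
        """Store item; return False when full so the producer can back off."""
        if len(self._items) >= self.capacity:
            return False
        self._items.append(item)
        return True

    def get(self):
        """Return the oldest item, or None when the buffer is empty."""
        return self._items.popleft() if self._items else None


buf = BoundedBuffer(capacity=2)
print(buf.put("block-1"), buf.put("block-2"), buf.put("block-3"))
print(buf.get())
```

Rejecting a write when the buffer is full, rather than overwriting old data, is one way the speed difference between the two sides is absorbed without losing data.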

Types of Input/Output Techniques

There are several techniques employed to manage I/O processes effectively. These techniques are designed to optimize resource use and minimize waiting times:

- Polling – The system repeatedly checks the status of a device to see if it’s ready for data transfer. While simple, it wastes CPU cycles whenever the device is not ready, so it suits only fast devices or very short waits.

- Interrupts – Instead of repeatedly checking devices, the CPU is signaled by the hardware when a device is ready for data exchange. This frees the CPU for other work while it waits, which is usually more efficient than polling, although each interrupt carries its own handling overhead.

- Direct Memory Access (DMA) – Allows a dedicated controller to transfer data directly between memory and I/O devices. The CPU only sets up the transfer and is notified, typically by an interrupt, when it completes, which speeds up large data transfers and frees the processor for other tasks.

By implementing these strategies, systems can optimize the performance of I/O operations, reduce latencies, and ensure that data handling remains smooth and responsive, even in complex or high-demand environments.
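The difference between polling and interrupts can be illustrated with a small simulation. This is only a sketch: `SimulatedDevice` is a hypothetical stand-in for real hardware, time is counted in abstract ticks, and the callback plays the role of an interrupt handler.

```python
class SimulatedDevice:
    """Hypothetical device that becomes ready after a fixed number of ticks."""

    def __init__(self, ready_after):
        if ready_after < 1:
            raise ValueError("ready_after must be at least 1")
        self.ticks_left = ready_after
        self.on_ready = None  # callback standing in for an interrupt handler

    def is_ready(self):
        return self.ticks_left <= 0

    def tick(self):
        """Advance simulated time; fire the callback when the device turns ready."""
        if self.ticks_left > 0:
            self.ticks_left -= 1
            if self.ticks_left == 0 and self.on_ready is not None:
                self.on_ready()


def poll_until_ready(device):
    """Polling: check the status on every tick. Returns the number of checks."""
    checks = 0
    while True:
        checks += 1
        if device.is_ready():
            return checks
        device.tick()


def wait_for_interrupt(device):
    """Interrupt style: register a handler, then do other work until it fires.

    Returns (status_checks, units_of_other_work).
    """
    fired = []
    device.on_ready = lambda: fired.append(True)
    other_work = 0
    while not fired:
        other_work += 1  # the CPU is free for useful work during this tick
        device.tick()
    return 0, other_work


print(poll_until_ready(SimulatedDevice(5)))
print(wait_for_interrupt(SimulatedDevice(5)))
```

In the polling version every tick is spent asking the device for its status, while in the interrupt version the same ticks are available for other work.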

Real-Time Operating Systems Explained

Real-time environments are designed to prioritize time-sensitive tasks, ensuring that they are completed within a defined deadline. This type of environment is crucial where delays or inconsistencies in processing could lead to failure or even danger. Unlike general-purpose environments, which focus on maximizing overall throughput, real-time platforms guarantee prompt execution and system stability under strict time constraints.

Key Features of Real-Time Environments

These platforms provide several unique features tailored to meet the needs of time-critical applications:

- Deterministic Behavior – Tasks are executed within a bounded, known timeframe, so system responses are consistent across the operating conditions the system was designed for.

- Priority Scheduling – Tasks are assigned priorities based on their urgency, and a high-priority task that becomes ready typically preempts lower-priority work, minimizing delays.

- Minimal Latency – Real-time environments are designed to respond to events quickly, minimizing the delay between an input event and the corresponding output action.

- Predictability – Worst-case timing can be analyzed in advance, so designers can verify that all time-critical tasks will meet their deadlines under the heaviest workload the system is specified to handle.

- Resource Management – Efficient allocation of system resources ensures that critical tasks are given precedence, preventing system overloads.
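One way to see how deadline guarantees are checked in advance is the classic utilization test for rate-monotonic scheduling, in which tasks with shorter periods get higher priority. The sketch below assumes independent periodic tasks whose deadlines equal their periods; the function name `rm_schedulable` and the task figures are illustrative. The test is sufficient but not necessary, so a task set that fails it may still be schedulable.

```python
def rm_schedulable(tasks):
    """Utilization-bound test for rate-monotonic scheduling.

    tasks: list of (worst_case_execution_time, period) pairs in one time unit.
    Returns (utilization, bound, passes).
    """
    n = len(tasks)
    if n == 0:
        raise ValueError("need at least one task")
    utilization = sum(c / t for c, t in tasks)
    bound = n * (2 ** (1 / n) - 1)  # the bound shrinks toward ln 2 as n grows
    return utilization, bound, utilization <= bound


# Three periodic tasks: (execution time, period) in milliseconds.
u, bound, ok = rm_schedulable([(1, 4), (1, 5), (2, 10)])
print(f"utilization={u:.3f} bound={bound:.3f} passes={ok}")
```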

Types of Real-Time Platforms

There are two primary categories of real-time systems, each with distinct operational characteristics:

- Hard Real-Time – In these systems, missing a deadline is unacceptable, as it can lead to catastrophic consequences. Examples include embedded systems in medical devices or aerospace applications.

- Soft Real-Time – While timely task execution is important, missing a deadline here may lead to degraded performance, but not complete failure. Examples include multimedia applications or online transaction processing.

By guaranteeing strict control over task scheduling and resource management, real-time platforms ensure that critical applications operate reliably within specified time constraints, making them indispensable in fields like automotive, healthcare, and telecommunications.

System Performance Optimization Techniques

Enhancing the overall performance of a computing environment involves a variety of techniques that focus on maximizing resource utilization, reducing bottlenecks, and improving efficiency. Optimizing performance is crucial for ensuring fast response times, efficient task execution, and a smooth user experience. Whether you’re managing a server, a workstation, or embedded hardware, implementing the right strategies can result in significant improvements in processing speed, memory management, and energy consumption.

Key Techniques for Improving System Efficiency

The following are some of the most effective strategies to optimize performance:

- Load Balancing – Distributing workloads evenly across multiple resources, such as processors or servers, helps avoid overloading a single component, ensuring smooth and efficient task handling.

- Memory Management – Effective memory allocation and deallocation are vital for avoiding fragmentation and ensuring that programs can access the necessary resources without delays.

- Task Scheduling – Scheduling algorithms help prioritize tasks based on urgency, ensuring that critical operations receive resources promptly while preventing idle time and system overload.

- Disk Optimization – Techniques such as defragmentation (on rotating hard disks) and better file system management can improve disk read/write speeds, leading to faster data access and retrieval.

- Network Optimization – Improving network throughput, reducing latency, and enhancing error correction can speed up data transfer and communication between distributed components.
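Load balancing, the first technique in the list above, can be sketched with a simple greedy policy that hands each task to the worker with the smallest current load. The function name `assign_least_loaded` and the task costs are hypothetical, and real balancers weigh many more factors than a single cost number.

```python
import heapq


def assign_least_loaded(task_costs, worker_count):
    """Give each task to the currently least-loaded worker.

    Returns (loads, assignment): the final load per worker, and the worker
    index chosen for each task, in task order.
    """
    if worker_count <= 0:
        raise ValueError("worker_count must be positive")
    heap = [(0, w) for w in range(worker_count)]  # (load, worker) pairs
    heapq.heapify(heap)
    loads = [0] * worker_count
    assignment = []
    for cost in task_costs:
        load, worker = heapq.heappop(heap)  # worker with the smallest load
        load += cost
        loads[worker] = load
        assignment.append(worker)
        heapq.heappush(heap, (load, worker))
    return loads, assignment


print(assign_least_loaded([5, 3, 2, 4], worker_count=2))
```

The min-heap keeps the least-loaded worker at the front, so each assignment costs logarithmic time in the number of workers.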

Performance Monitoring and Evaluation

Constant monitoring of system performance allows for the identification of potential issues and bottlenecks. Key metrics to track include CPU usage, memory consumption, disk activity, and network bandwidth. Below is a table summarizing common performance metrics and their impact:

| Metric | Impact |
|---|---|
| CPU Usage | High CPU usage can indicate that the processor is under heavy load, leading to slowdowns or delays in task processing. |
| Memory Utilization | Excessive memory consumption may result in swapping, where data is moved between RAM and disk, leading to slower performance. |
| Disk Throughput | Slow disk read/write speeds can significantly impact system performance, particularly in applications with high data processing needs. |
| Network Latency | High network latency can cause delays in communication between distributed systems, affecting real-time operations. |

By regularly monitoring these key performance indicators and implementing optimization techniques, you can ensure that your system operates at peak efficiency and handles workloads effectively, even under heavy demand.
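A monitoring check over these four metrics might look like the sketch below. The threshold values, the metric key names, and the function `flag_metrics` are all assumptions made for illustration; suitable limits depend on the workload and hardware, and collecting the samples themselves is left out.

```python
# Hypothetical thresholds, chosen only for illustration.
THRESHOLDS = {
    "cpu_percent": 90.0,          # above this, the processor is saturated
    "memory_percent": 85.0,       # above this, swapping becomes likely
    "disk_mb_per_s_min": 50.0,    # throughput: lower values are worse
    "network_latency_ms": 100.0,  # above this, communication is delayed
}


def flag_metrics(sample, thresholds=THRESHOLDS):
    """Return the names of the metrics in sample that cross their threshold."""
    warnings = []
    if sample["cpu_percent"] > thresholds["cpu_percent"]:
        warnings.append("cpu")
    if sample["memory_percent"] > thresholds["memory_percent"]:
        warnings.append("memory")
    if sample["disk_mb_per_s"] < thresholds["disk_mb_per_s_min"]:
        warnings.append("disk")
    if sample["network_latency_ms"] > thresholds["network_latency_ms"]:
        warnings.append("network")
    return warnings


sample = {
    "cpu_percent": 95.0,
    "memory_percent": 40.0,
    "disk_mb_per_s": 120.0,
    "network_latency_ms": 250.0,
}
print(flag_metrics(sample))
```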

Common Pitfalls in OS Exams

Many students face challenges when tackling assessments related to the inner workings of computing platforms. The complexity of underlying principles, as well as the intricacies of how various components interact, often leads to common mistakes. Understanding where these pitfalls lie and how to avoid them is key to achieving success in evaluations focused on this area.

Frequent Mistakes in Assessments

- Misunderstanding Key Concepts – A frequent issue is failing to fully grasp fundamental principles such as process management, memory handling, or scheduling mechanisms. Without a solid understanding, even straightforward questions can become confusing.

- Overlooking Details – Many learners focus too much on high-level concepts and neglect essential details like specific algorithms, data structures, or configuration nuances that can be critical to providing complete answers.

- Inaccurate Terminology – Using incorrect terminology or mixing up concepts can lead to confusion and incorrect responses. It is vital to use precise language to describe processes, protocols, and structures.

- Rushing Through Problem Solving – Hastily jumping to conclusions can result in missed steps or errors in calculations. It is important to methodically approach problems, working through them step-by-step.

- Failing to Connect Theory with Practice – Not relating theoretical knowledge to practical scenarios is another common issue. Real-world examples often clarify concepts, making it easier to apply knowledge effectively in assessments.

Tips to Avoid Common Mistakes

- Study Core Topics Thoroughly – Make sure to master the fundamental concepts and their real-world applications, as this will help you tackle both theoretical and practical problems more confidently.

- Pay Attention to Specifics – Don’t skim over critical details. Take time to review the instructions carefully and ensure you understand every part of the problem.

- Practice Problem-Solving – Regularly work on practice problems and mock scenarios to build familiarity with typical questions and how to approach them methodically.

- Ask for Clarification – If you encounter any confusion during the assessment, don’t hesitate to ask for clarification, especially if the question seems ambiguous or difficult to interpret.

By addressing these pitfalls and using these strategies, students can improve their chances of performing well in assessments related to the structure and functionality of computing platforms.