Understanding the core principles of data mining is crucial for excelling in this field. Whether you are just beginning or preparing for an important evaluation, mastering the key concepts will help you perform confidently. The ability to navigate complex scenarios and solve real-world problems efficiently is at the heart of this discipline.

In this section, we will cover essential topics commonly tested in assessments, offering insights into the concepts, methods, and techniques you should focus on. By familiarizing yourself with a range of challenges and refining your approach to problem-solving, you will gain the skills needed to succeed in rigorous evaluations.

Preparation is key, and knowing which areas to focus on, how to approach different types of tasks, and what tools to use will give you an edge. From foundational theories to advanced methods, this guide provides a structured overview of what to expect.

Data Mining Exam Questions and Answers

When preparing for assessments in data mining, it is essential to understand the concepts, techniques, and methods typically tested. Mastering these topics will allow you to approach challenges with confidence and provide well-thought-out solutions. By practicing with realistic scenarios, you will be better equipped to handle the complexities of the subject.

The following table outlines common topics and problem types often featured in evaluations. Reviewing these areas will give you insight into the skills you should develop and the key concepts you need to be familiar with.

| Topic | Description |
|---|---|
| Classification Algorithms | Techniques for categorizing data into predefined classes, including decision trees, support vector machines, and nearest neighbor methods. |
| Clustering Methods | Approaches for grouping similar items together based on patterns, such as K-means or hierarchical clustering. |
| Model Evaluation | Understanding how to assess the performance of models using metrics like accuracy, precision, recall, and F1-score. |
| Feature Selection | Techniques for identifying the most important features that contribute to the predictive power of models. |
| Dimensionality Reduction | Methods like PCA and t-SNE to reduce the number of variables while retaining key patterns in the data. |

By focusing on these areas, you will be well-prepared to tackle challenges and demonstrate your understanding in assessments.

Key Concepts in Data Mining

In the field of information analysis, there are several fundamental principles that guide practitioners in extracting valuable insights. Understanding these core ideas is essential for effectively solving problems and making informed decisions. These concepts form the foundation for building models, analyzing patterns, and interpreting results, all of which are critical for success in this domain.

From preprocessing methods to advanced algorithms, each concept plays a vital role in transforming raw information into meaningful knowledge. Familiarity with these ideas ensures a deeper understanding of how techniques work together to reveal patterns, trends, and predictions that drive decision-making processes.

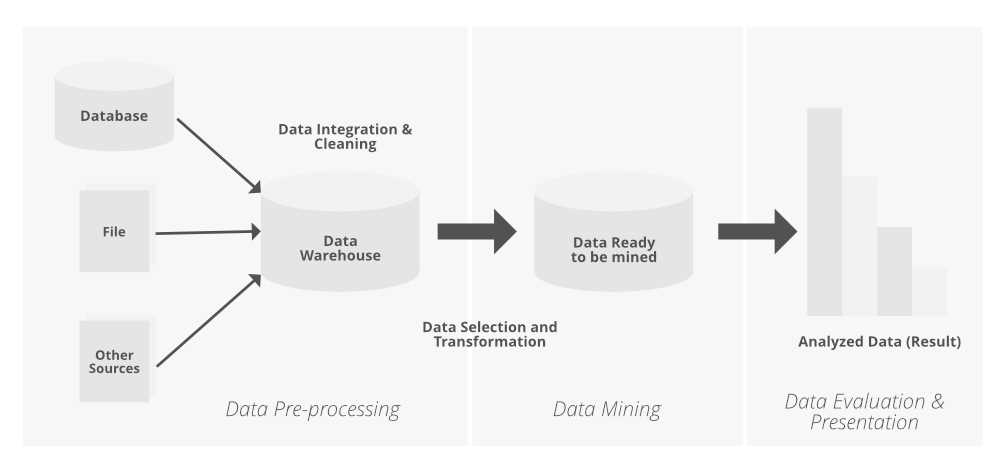

Understanding Data Preprocessing Techniques

Preparing raw information for analysis is a crucial step in any project. Before meaningful patterns can be extracted, the information needs to be cleaned, transformed, and structured in a way that enables accurate interpretation. These steps ensure that any subsequent models or algorithms work efficiently and produce reliable results.

Proper preparation involves several processes aimed at improving the quality of the dataset. This can include removing inconsistencies, dealing with missing values, and normalizing the scale of the features to ensure a balanced and clean dataset for analysis.

Key Preprocessing Steps

| Technique | Description |
|---|---|
| Data Cleaning | Involves identifying and correcting errors or inconsistencies in the dataset, such as duplicate entries or incorrect values. |
| Handling Missing Values | Addresses gaps in the dataset by either removing the missing entries or filling them with appropriate values like mean or median. |
| Normalization | Standardizes the range of values for each feature, ensuring that no particular variable dominates due to differing scales. |
| Encoding Categorical Variables | Transforms categorical data into numerical formats, enabling algorithms to process these types of features. |
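The techniques in the table can be sketched in a few lines of plain Python. This is a minimal illustration, not a production pipeline: the function names (`drop_duplicates`, `min_max_scale`, `one_hot`) and the sample rows are hypothetical, and min-max scaling is only one of several common normalization schemes.

```python
def drop_duplicates(rows):
    """Data cleaning: keep the first occurrence of each row, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique


def min_max_scale(values):
    """Normalization: rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature carries no scale information.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(categories):
    """Encoding: one 0/1 indicator per category level (levels sorted by name)."""
    levels = sorted(set(categories))
    return [[1 if c == level else 0 for level in levels] for c in categories]


# Hypothetical (price, color) records; the third row repeats the first.
rows = [(10, "red"), (20, "blue"), (10, "red"), (30, "red")]
unique_rows = drop_duplicates(rows)
scaled_prices = min_max_scale([price for price, _ in unique_rows])
encoded_colors = one_hot([color for _, color in unique_rows])
```

After deduplication three rows remain, the prices are mapped onto the interval from 0 to 1, and each color becomes a pair of indicator columns ordered as `blue`, `red`.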

Why Preprocessing Matters

Effective preparation ensures that the data is consistent, reliable, and ready for analysis. It improves model accuracy and helps avoid biases that can arise from unprocessed or poorly prepared information. Mastery of preprocessing techniques is key to successful outcomes in any information analysis task.

Popular Algorithms in Data Mining

In the field of information analysis, algorithms are the tools that drive the discovery of patterns, trends, and relationships within large datasets. These techniques allow practitioners to classify, cluster, and predict outcomes, offering powerful insights that can influence decision-making. By understanding the most commonly used algorithms, one can better approach problems and apply the right methods to various tasks.

Several algorithms have gained prominence due to their effectiveness in solving a wide range of problems. These methods are widely used in practical applications, from market segmentation to fraud detection, and are essential in extracting meaningful knowledge from complex datasets.

Commonly Used Techniques

| Algorithm | Description |
|---|---|
| Decision Trees | Used for classification tasks, decision trees break down data into smaller groups, offering an intuitive way to make predictions based on feature values. |
| K-Means Clustering | A popular clustering method that groups data points into a specified number of clusters based on similarity, widely used in segmentation tasks. |
| Support Vector Machines | A powerful technique for classification, SVM finds the optimal boundary between different classes of data by maximizing the margin between them. |
| Neural Networks | Inspired by the human brain, neural networks are used for both classification and regression tasks, particularly in complex pattern recognition. |

Why These Algorithms Matter

Mastering these algorithms is essential for anyone working in this field, as they form the backbone of many analytical and predictive tasks. Understanding when and how to apply each method ensures that one can derive the most accurate and relevant results from the available information.

Importance of Data Cleaning for Accuracy

In any analysis process, ensuring the quality of the underlying information is essential. Without proper cleaning, inconsistencies, errors, and irrelevant data can distort the results, leading to inaccurate or misleading conclusions. Therefore, cleaning the dataset is a critical step to guarantee that the outcomes of any analysis or model are reliable and valid.

Effective cleaning involves identifying and addressing several common issues that can affect the accuracy of the results. These issues can arise from missing values, outliers, duplicates, or incorrect formatting, all of which need to be handled appropriately to avoid biases in the analysis.

Key Cleaning Tasks

- Identifying and correcting errors or inconsistencies in the dataset

- Handling missing or incomplete values by imputation or removal

- Removing duplicate entries that could lead to overrepresentation of certain data points

- Ensuring proper formatting of values, such as standardizing date formats or numerical scales

Impact of Proper Cleaning

By thoroughly cleaning the information, you ensure that the analysis reflects the true nature of the data. Accurate results can only be derived from clean, well-organized information, which improves the performance of models and decision-making processes. Without this essential step, even the most advanced algorithms will struggle to provide useful insights.

Handling Missing Data in Analysis

In any analytical process, the presence of missing information can significantly affect the results. Incomplete datasets, whether due to errors in collection, data entry issues, or other factors, can introduce bias or distort conclusions. Properly addressing these gaps is vital to ensure that the analysis remains accurate and meaningful.

There are various techniques for handling missing values, each suited to different types of tasks and datasets. Choosing the appropriate approach depends on the nature of the missing information and the impact it could have on the overall results. Failing to handle missing entries appropriately can lead to skewed outcomes or unreliable models.

Common Approaches for Managing Missing Entries

- Imputation: Filling in missing values using statistical methods, such as the mean, median, or mode, based on available data points.

- Deletion: Removing entries with missing values, either by deleting the entire row or excluding specific variables.

- Prediction Models: Using machine learning algorithms to predict and fill in missing values based on patterns observed in the rest of the dataset.

- Flagging: Marking missing entries as a separate category or flag, which may be useful when missingness itself carries significant information.

Choosing the Right Technique

Each method has its own advantages and limitations. For instance, imputation can introduce bias if the missing values are not randomly distributed, while deletion may lead to a loss of valuable information. The key is to understand the cause of missingness and to apply the most suitable strategy to minimize its impact on the analysis.
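As a rough sketch of three of the approaches listed above (deletion, imputation, and flagging), the following uses only the Python standard library. The helper names and the income values are hypothetical. The median is used here instead of the mean because the sample contains one extreme value.

```python
from statistics import median


def delete_missing(rows):
    """Deletion: drop any row that contains a missing (None) value."""
    return [row for row in rows if None not in row]


def impute_median(values):
    """Imputation: fill missing entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def missing_flags(values):
    """Flagging: 1 where the value was missing, 0 otherwise."""
    return [1 if v is None else 0 for v in values]


# Hypothetical incomes with two gaps and one extreme value.
incomes = [30, None, 50, 1000, None]
imputed = impute_median(incomes)   # gaps filled with the median of 30, 50, 1000
flags = missing_flags(incomes)     # keeps a record of where the gaps were
complete_rows = delete_missing([(1, 30), (2, None), (3, 50)])
```

Keeping the flag column alongside the imputed values preserves the fact that a value was missing, which matters when missingness itself is informative.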

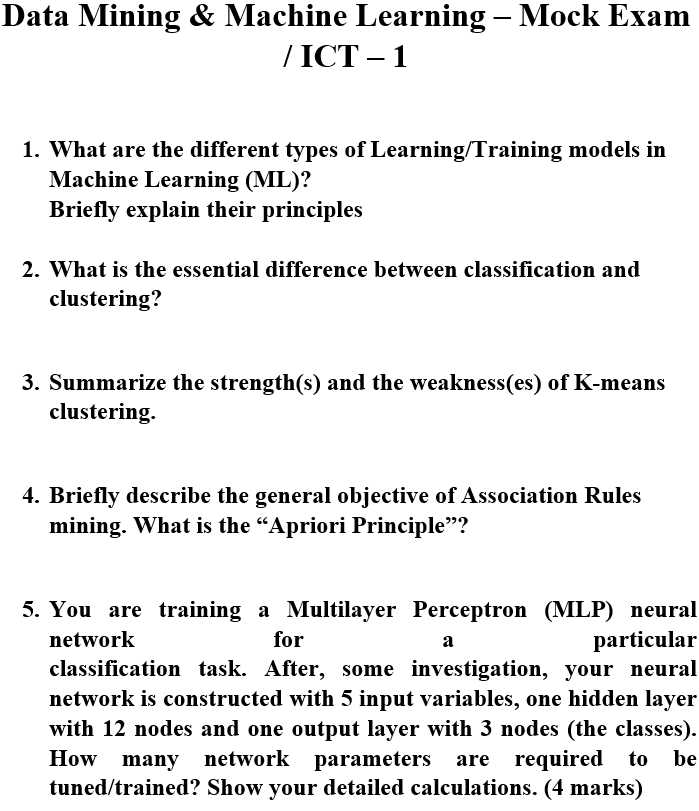

Classification vs Clustering in Mining

In the process of extracting meaningful insights from information, two of the most widely used approaches are classification and clustering. These methods allow analysts to identify patterns and relationships within complex datasets. While they share the goal of uncovering hidden structures, they differ in their techniques and applications. Understanding the distinctions between these approaches is crucial for selecting the most appropriate method for specific tasks.

Classification assigns items to predefined categories based on their characteristics, while clustering identifies groups of similar items without prior knowledge of the categories. Each method plays a significant role in solving different types of problems and is applicable in various industries, from marketing to healthcare.

Key Differences Between Classification and Clustering

| Aspect | Classification | Clustering |
|---|---|---|
| Definition | Assigning items to predefined labels or classes. | Grouping items based on similarities without predefined labels. |
| Type of Task | Supervised learning. | Unsupervised learning. |
| Output | Categories or classes. | Clusters or groups. |
| Example Use | Email spam detection, disease diagnosis. | Customer segmentation, image organization. |

When to Use Classification vs Clustering

Classification is ideal when you have labeled data and want to predict the class of new instances based on past information. In contrast, clustering is more effective when the goal is to explore the data and discover hidden patterns without predefined labels. Both methods have their advantages, depending on the nature of the problem and the dataset at hand.
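The contrast between classification and clustering can be made concrete with a small sketch on one-dimensional data. The first function needs labeled examples, while the second receives only raw points and initial center guesses. The function names, the data, and the starting centers are assumptions chosen for illustration. The clustering routine follows the standard k-means (Lloyd's) iteration.

```python
def nearest_neighbor_predict(train, x):
    """Classification: return the label of the closest labeled training point."""
    _, label = min(train, key=lambda pair: abs(pair[0] - x))
    return label


def k_means_1d(points, centers, iterations=10):
    """Clustering: alternate point assignment and center updates (k-means)."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            idx = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[idx].append(p)
        # Keep the previous center if a cluster received no points.
        centers = [sum(c) / len(c) if c else centers[i]
                   for i, c in enumerate(clusters)]
    return centers, clusters


# Supervised: labels are known in advance.
labeled = [(1.0, "low"), (2.0, "low"), (9.0, "high"), (10.0, "high")]
predicted = nearest_neighbor_predict(labeled, 8.5)

# Unsupervised: only the values are given; the groups are discovered.
centers, clusters = k_means_1d([1.0, 2.0, 9.0, 10.0], centers=[0.0, 5.0])
```

The classifier returns one of the labels it was given, whereas the clustering routine returns unnamed groups that an analyst still has to interpret.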

Evaluating Model Performance Metrics

Once a model is built and predictions are made, it’s essential to assess its effectiveness. Evaluating how well the model performs helps in understanding its strengths and weaknesses. This process is crucial to ensure that the model is not only accurate but also reliable across different data sets and real-world applications.

Various metrics can be used to measure a model’s performance, each suited to different types of tasks, such as classification or regression. Choosing the right metric is key to obtaining meaningful insights about the model’s capabilities and limitations. The goal is to provide a comprehensive view of its predictive power, highlighting areas where improvements may be needed.

Common Performance Metrics

| Metric | Type of Task | Description |
|---|---|---|
| Accuracy | Classification | Measures the proportion of correct predictions out of all predictions. |
| Precision | Classification | Calculates the ratio of true positive predictions to all positive predictions made. |
| Recall | Classification | Measures the ratio of true positive predictions to all actual positives. |
| F1 Score | Classification | Combines precision and recall into a single metric by calculating their harmonic mean. |
| Mean Absolute Error (MAE) | Regression | Measures the average of the absolute differences between predicted and actual values. |
| R-Squared | Regression | Assesses the proportion of variance in the target variable explained by the model. |

Choosing the Right Metric

The choice of evaluation metric depends on the specific goals of the analysis. For example, if false positives are critical to avoid, precision may be prioritized. Alternatively, when it is more important to capture as many true positives as possible, recall would be more relevant. Understanding the trade-offs between these metrics is essential to accurately evaluate a model’s performance.
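The definitions in the metrics table translate directly into code. The sketch below computes them from scratch for a binary classifier and a small regression example. The function names and the sample predictions are hypothetical, and returning 0.0 when a denominator is zero is one convention among several.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Guard against division by zero (assumed convention: report 0.0).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def r_squared(actual, predicted):
    """1 minus the ratio of residual to total sum of squares."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


# Two true positives, one false positive, one false negative, four true negatives.
metrics = classification_metrics([1, 1, 1, 0, 0, 0, 0, 0],
                                 [1, 1, 0, 1, 0, 0, 0, 0])
mae = mean_absolute_error([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
r2 = r_squared([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
```

In this example accuracy is 0.75 while precision and recall are both 2/3, which shows how accuracy alone can look better than the model's performance on the positive class.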

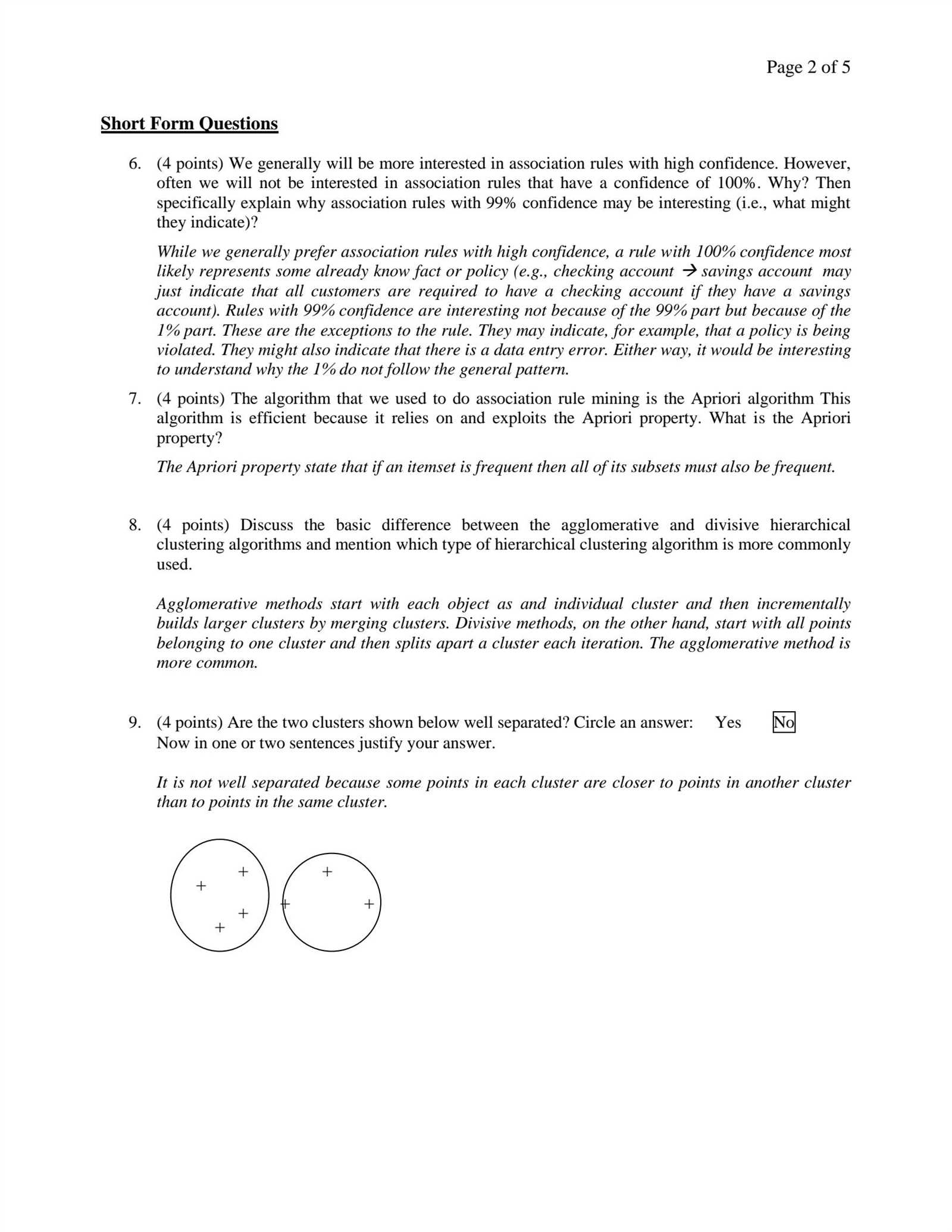

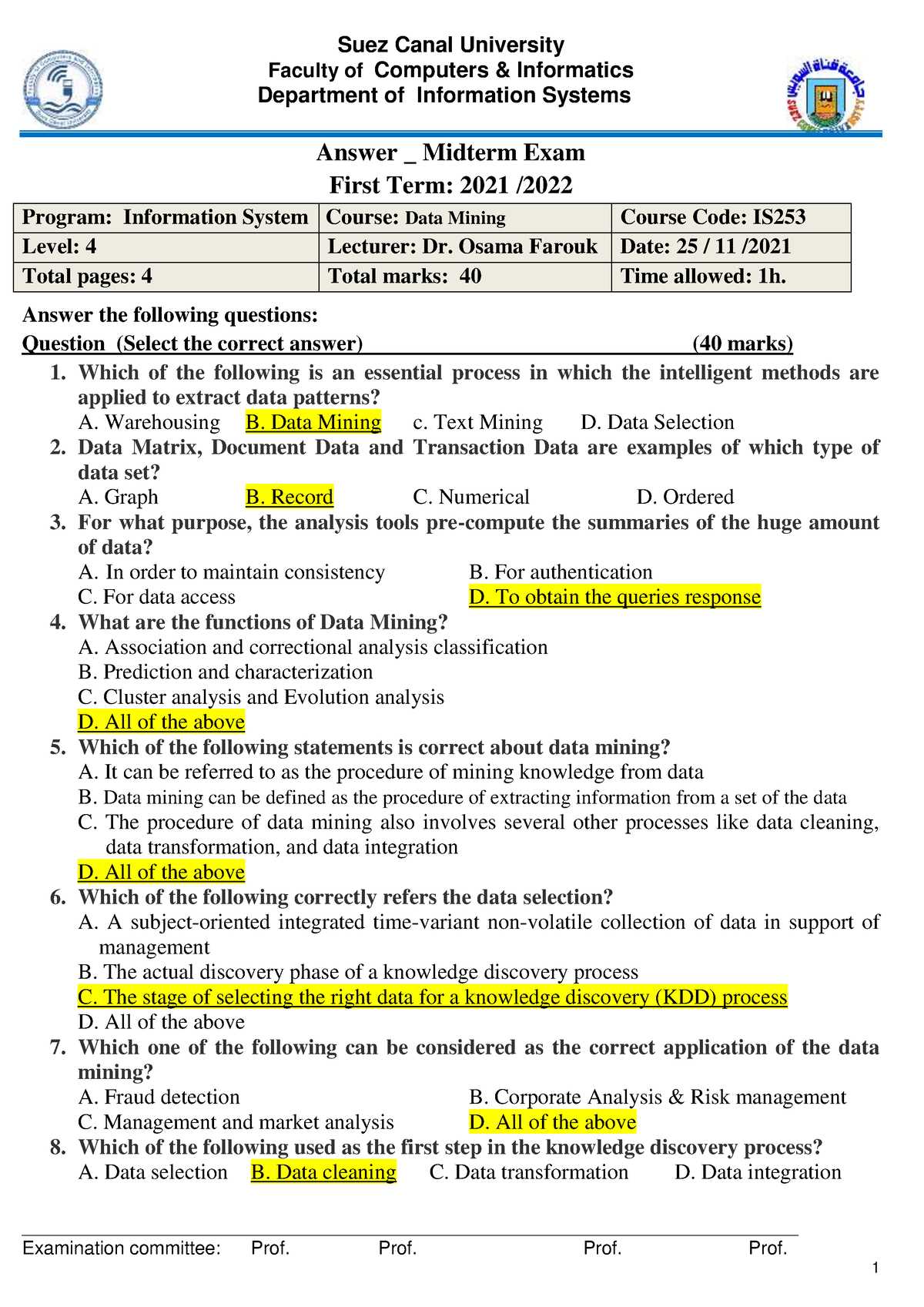

Commonly Asked Questions in Exams

In any assessment, certain topics tend to appear more frequently, helping both students and instructors focus on key concepts. These recurring topics often reflect fundamental ideas and techniques that are essential for mastering the subject. By preparing for these frequently addressed areas, individuals can strengthen their understanding and improve their performance.

The types of inquiries encountered in assessments vary in complexity, ranging from basic definitions to more advanced problem-solving scenarios. Understanding the structure of these queries is crucial for efficient study and successful completion of any task. Below, we highlight some of the most common topics that frequently arise, offering insights into how to approach them effectively.

Key Topics Frequently Covered

- Conceptual Understanding: Inquiries often test the grasp of core theories, terminology, and definitions.

- Problem Solving: Many assessments require students to apply learned concepts to real-world scenarios or hypothetical cases.

- Methodology: Understanding the steps involved in specific techniques or procedures is often a focal point of evaluation.

- Interpretation of Results: A frequent question type involves interpreting data, outputs, or graphs, assessing how well conclusions are drawn from provided information.

How to Approach These Topics

Effective preparation involves not only memorizing key concepts but also developing a deeper understanding of how to apply them. Practicing with past examples or mock assessments can help solidify the knowledge and improve performance. Additionally, focusing on the areas most commonly tested allows individuals to approach the task with confidence and clarity.

Frequently Tested Algorithms

In the study of extracting patterns and insights from large sets of information, several algorithms are commonly tested due to their wide applicability and importance. These techniques form the foundation for solving complex problems across various industries, from predicting trends to grouping similar items. Understanding how these algorithms work, along with their strengths and limitations, is essential for mastering the subject.

Throughout assessments, these algorithms are often examined for their practical applications, as well as for the underlying principles that drive their operation. Below are some of the most frequently tested algorithms that every student should familiarize themselves with to ensure a comprehensive understanding of the field.

Popular Algorithms

- Decision Trees: A hierarchical model used for classification tasks, where data is split into branches based on decision rules.

- Support Vector Machines: A supervised learning model used for classification and regression, particularly effective in high-dimensional spaces.

- K-Means Clustering: A popular unsupervised learning algorithm used to partition a set of data points into distinct groups based on similarity.

- Naive Bayes: A probabilistic classifier based on Bayes’ theorem, commonly used for classification tasks involving text data.

- Random Forest: An ensemble method that combines multiple decision trees, each trained on a random sample of the data and features, to improve classification accuracy and reduce overfitting.

- Neural Networks: A computational model inspired by the human brain, used for both classification and regression tasks, especially in deep learning applications.

When to Use Each Algorithm

Choosing the right algorithm depends on the type of problem at hand and the characteristics of the data. For instance, decision trees are often preferred when interpretability is key, while support vector machines excel in scenarios with a clear margin of separation. Understanding these nuances helps in selecting the most suitable approach for solving specific tasks effectively.
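To illustrate why decision trees are considered interpretable, the sketch below finds a single decision rule of the form "value <= threshold" by minimizing Gini impurity. Gini is one common splitting criterion and is assumed here. A full tree applies the same search recursively to each branch. The function names and the study-hours data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(values, labels):
    """Return (threshold, weighted impurity) of the best 'value <= threshold' rule."""
    n = len(values)
    distinct = sorted(set(values))
    best = (None, gini(labels))  # baseline: no split at all
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical data: hours studied and exam outcome.
hours = [1, 2, 3, 6, 7, 8]
outcome = ["fail", "fail", "fail", "pass", "pass", "pass"]
threshold, impurity = best_split(hours, outcome)
```

The resulting rule can be read aloud ("if hours <= 4.5 then fail, otherwise pass"), which is the kind of transparency that makes trees attractive when interpretability is key.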

Key Theories Behind Data Mining

Understanding the core theories that drive the extraction of meaningful insights from large datasets is essential for grasping the broader principles behind the field. These theories provide the foundation for developing various techniques used to identify patterns, make predictions, and support decision-making processes. By exploring these key concepts, one can better comprehend how complex systems work to uncover hidden knowledge from raw information.

Several foundational theories play a crucial role in shaping modern approaches to pattern discovery. These theories guide the development of algorithms and tools that aim to make sense of the vast amounts of data generated in today’s world. Below are some of the key theoretical frameworks that underpin this domain.

Core Theories in Pattern Recognition

- Information Theory: Focuses on quantifying the information content in datasets, providing a basis for compression, communication, and discovery of significant patterns.

- Statistical Learning Theory: Relies on probability theory and statistical methods to make predictions based on observed data, aiming to generalize findings to unseen examples.

- Bayesian Theory: A probabilistic framework, built on Bayes’ theorem, for updating beliefs as new evidence arrives and for drawing conclusions from uncertain or incomplete information, often used in classification and regression tasks.

- Cluster Analysis Theory: Focuses on partitioning datasets into groups based on similarity, helping to identify patterns without prior knowledge of the group labels.
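As a small illustration of the information-theoretic idea in the list above, Shannon entropy quantifies how mixed a set of labels is, measured in bits. This is a minimal sketch. The function name and sample labels are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy in bits: the sum of -p * log2(p) over the observed classes."""
    n = len(labels)
    return sum(-(count / n) * log2(count / n)
               for count in Counter(labels).values())


balanced = entropy(["spam", "ham", "spam", "ham"])  # evenly mixed labels
pure = entropy(["ham", "ham", "ham", "ham"])        # a single class only
```

An evenly split two-class set has an entropy of one bit, while a set with a single class has zero entropy. Decision tree learners commonly use this kind of measure to choose splits that make the resulting groups purer.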

Mathematical Foundations and Their Application

- Linear Algebra: Key to understanding dimensionality reduction techniques, such as principal component analysis (PCA), used to simplify complex datasets.

- Optimization Theory: Plays a critical role in improving algorithm performance, particularly in tuning models to minimize error or maximize accuracy.

- Graph Theory: Used in network analysis, where relationships between entities are modeled as graphs to find patterns and connections in social networks or other systems.

Challenges in Assessments

While evaluating knowledge in the field of pattern recognition and predictive modeling, various obstacles can arise due to the complexity and diversity of the subject matter. These difficulties are often tied to the dynamic nature of the field, which continues to evolve with the development of new techniques and technologies. As such, assessments must adapt to the changing landscape, presenting unique challenges for both instructors and students alike.

The evaluation process must not only assess theoretical understanding but also practical application, where the real challenge lies. Ensuring that learners can apply the right tools to analyze vast amounts of information, recognize patterns, and derive actionable insights is a key hurdle in designing effective assessments. Below are some of the main challenges that are often encountered in these types of evaluations.

Common Challenges in Assessing Practical Skills

- Complexity of Real-World Problems: Tasks often involve large, unstructured datasets, making it difficult to accurately evaluate students’ ability to extract meaningful results under time constraints.

- Choosing the Right Algorithm: With a vast array of algorithms available, selecting the most appropriate one for a specific problem can be daunting, requiring a deep understanding of the theoretical concepts behind them.

- Model Evaluation: Assessing the performance of predictive models requires the ability to properly validate results, handle overfitting, and account for errors, which can be difficult to quantify in a test environment.

- Overfitting and Underfitting: Balancing model complexity to avoid overfitting (too specific) or underfitting (too general) is a key challenge, especially when evaluating students’ ability to tune models correctly.

Assessing Theoretical Understanding

- Abstract Concepts: Theoretical concepts such as probability, optimization, and information theory can be difficult to test comprehensively, as they require both deep understanding and the ability to apply them to practical scenarios.

- Interpretation of Results: It is important for assessments to evaluate students’ ability to interpret results from models and techniques. Misinterpretation can lead to wrong conclusions, which can be detrimental to problem-solving in real-world contexts.

Time Management Tips for Data Mining Exams

Efficiently managing time during assessments in this field can be crucial to success. The complex nature of tasks, combined with the pressure of limited time, can lead to unnecessary stress and missed opportunities to showcase one’s knowledge. By applying strategic planning and smart prioritization, students can maximize their performance and navigate through the challenges more effectively.

Proper time allocation ensures that each section of the assessment receives the attention it deserves, while also preventing time from being wasted on less critical aspects. Here are some key strategies to consider when preparing for these types of evaluations.

Prioritize Tasks Wisely

- Understand the Format: Before diving into the test, familiarize yourself with the structure to allocate time efficiently across different sections.

- Focus on Easy Wins: Tackle simpler tasks first to secure quick points and boost confidence before moving on to more complex challenges.

- Leave Room for Review: Always reserve time at the end to review your work and make adjustments where needed.

Practice with Timed Simulations

- Replicate Exam Conditions: Set aside specific times for mock assessments to get used to working under pressure, simulating the exact environment of the actual test.

- Identify Bottlenecks: Recognize areas where you tend to spend too much time, so you can adjust strategies or focus on improving those areas before the real test.

Best Study Resources for Data Mining

When preparing for assessments in this field, having access to high-quality study materials is essential. A variety of resources can significantly enhance your understanding and retention of key concepts, making it easier to tackle difficult topics and improve your performance. Choosing the right materials helps streamline your preparation and ensures a well-rounded approach to mastering the subject.

Below are some of the most valuable tools and resources to help you excel in your studies. These resources will aid in building both theoretical knowledge and practical skills necessary for tackling complex challenges in this area.

Books and Textbooks

- Introduction to Machine Learning with Python: A solid choice for learning the fundamentals of algorithms and their applications, offering hands-on examples with Python.

- Pattern Recognition and Machine Learning: This book provides a deeper dive into advanced techniques, perfect for those seeking to understand the mathematical foundations of the subject.

- Data Science for Business: Focuses on real-world applications of algorithms and models, helping learners connect theory with practice.

Online Courses and Tutorials

- Coursera – Machine Learning by Stanford University: An in-depth, structured course covering the key techniques and methods widely used in the field.

- edX – Data Science MicroMasters: A series of courses designed to provide a comprehensive understanding of data-driven decision-making and analysis.

- Udemy – Python for Data Analysis: A practical course that helps learners apply Python programming skills to data analysis and problem-solving.

Forums and Community Groups

- Stack Overflow: A go-to forum for asking specific questions and learning from others’ experiences, especially when dealing with programming-related issues.

- Reddit – r/MachineLearning: A community-driven subreddit where you can discuss recent trends, share resources, and ask for advice from fellow learners and professionals.

Utilizing a combination of these resources can significantly enhance your understanding and problem-solving abilities, ensuring that you’re well-prepared for any challenge that comes your way.

Real-World Applications of Data Mining

The ability to uncover patterns, trends, and associations in large datasets has transformed various industries. This process is used to drive decision-making, predict future outcomes, and optimize business operations. Across fields like healthcare, finance, retail, and technology, these techniques are continuously proving to be invaluable in tackling complex challenges and improving efficiency.

From personalized recommendations to fraud detection, the applications of these methods are vast and impactful. Below are some key areas where this approach has demonstrated real-world significance:

Healthcare

Predictive Analytics: In healthcare, predictive models are used to forecast patient outcomes, such as the likelihood of disease progression or the success of treatment plans. By analyzing historical medical data, these models help doctors make informed decisions and improve patient care.

Medical Diagnosis: Techniques are applied to identify patterns in patient symptoms, medical histories, and test results, aiding in early detection of diseases and improving diagnostic accuracy.

Finance

Fraud Detection: Financial institutions rely on these techniques to detect fraudulent activities. By analyzing transaction patterns, unusual behaviors are flagged, enabling quicker responses to potential security threats.

Credit Scoring: This approach is used by banks and lenders to assess the creditworthiness of individuals and businesses. By examining past financial behaviors, institutions can predict future risk and make more accurate lending decisions.

Retail

Customer Segmentation: Retailers use these techniques to segment customers based on purchasing behavior, preferences, and demographics. This allows for targeted marketing strategies, improving sales and customer satisfaction.

Recommendation Systems: E-commerce platforms use algorithms to suggest products based on past behaviors, helping drive sales by offering personalized shopping experiences for each customer.

Technology

Social Media Analysis: Online platforms use these methods to understand user behavior, identify trends, and predict future content engagement. This data helps in developing targeted advertising strategies and enhancing user experiences.

Search Ranking: Search engines employ algorithms that analyze search patterns to improve the ranking of results, ensuring that users find the most relevant content quickly and efficiently.

These examples illustrate how techniques that analyze large volumes of information are transforming industries, providing valuable insights that lead to smarter decisions, cost reductions, and better outcomes in many sectors.

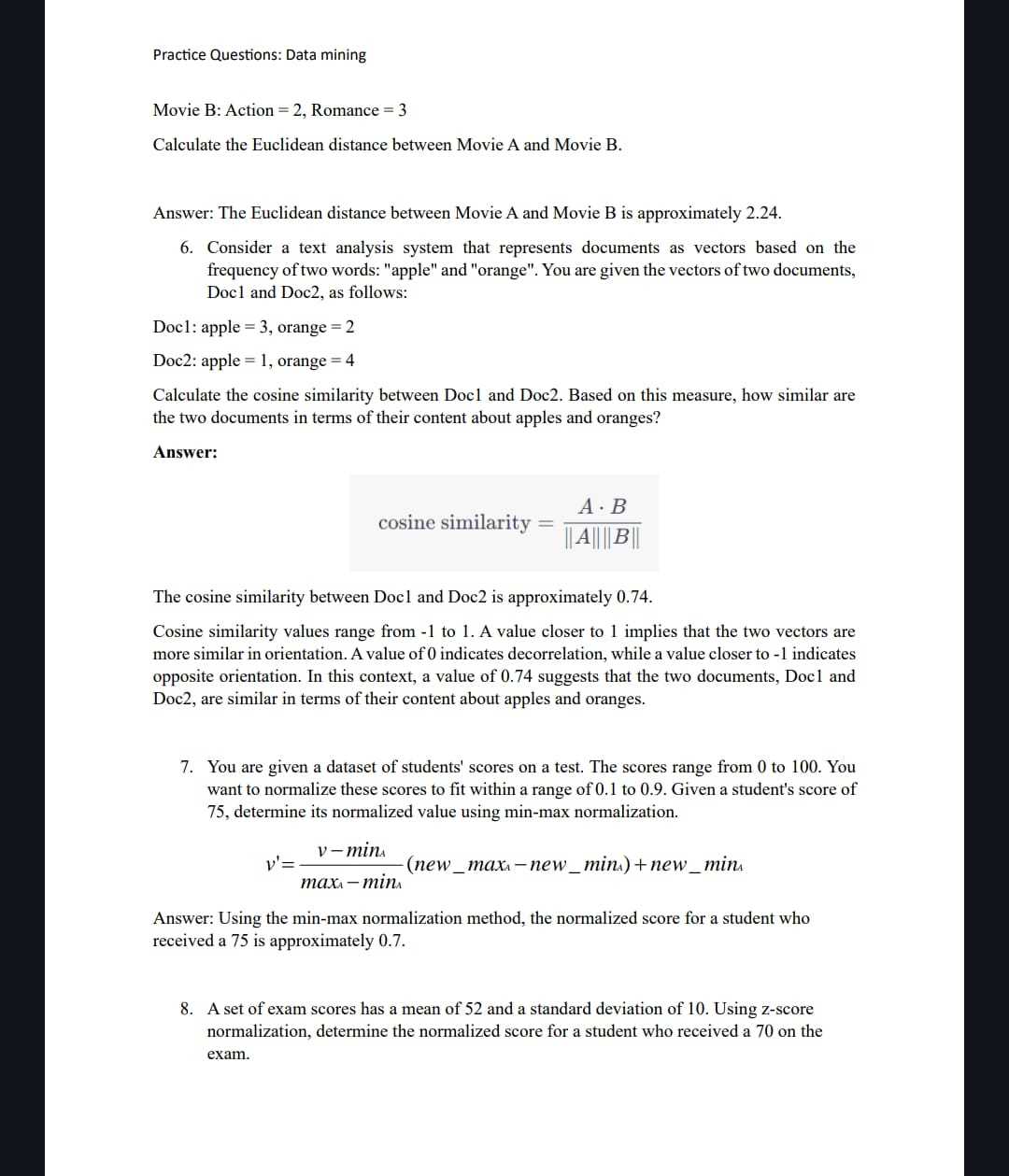

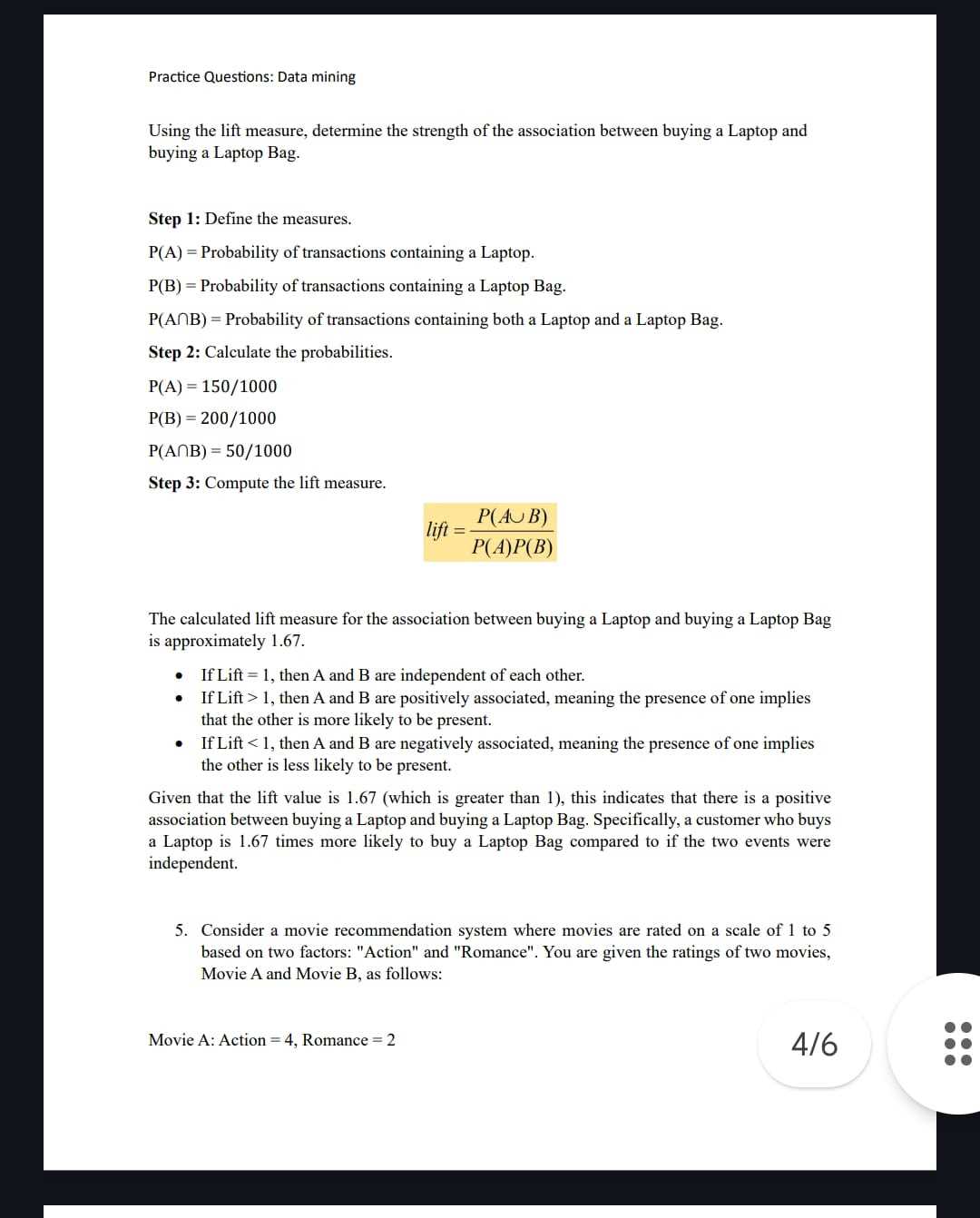

Practical Example: Solving Data Mining Problems

In this section, we will walk through a practical example of how to approach solving complex problems involving large datasets. The process involves identifying patterns, trends, or useful information that can help in making informed decisions. The goal is to demonstrate how these methods can be applied effectively to real-world issues.

Step 1: Problem Understanding

The first step in any analysis process is understanding the problem. This includes clarifying the objectives and deciding what type of insights are required. For example, a retail company may want to predict future sales or customer behavior patterns. In this case, the goal is to predict which products customers are likely to buy next based on their previous purchases.

Step 2: Data Collection

The next step involves gathering the relevant information. Data can come from multiple sources, such as sales records, customer feedback, or transactional logs. In our example, we would gather information on past customer purchases, including product types, frequency, and price points. This data will form the basis for identifying patterns.

Step 3: Preprocessing the Information

Once the data is collected, it is important to clean and prepare it for analysis. This includes handling missing values, removing duplicates, and ensuring the data is consistent. For instance, if some records have missing customer information or incorrect product codes, they must be corrected or removed to maintain the integrity of the analysis.

Step 4: Model Building

Next, a model is built to analyze the data. In our case, we may use a classification algorithm to predict which products a customer is likely to purchase based on their past behavior. The model is trained using historical data, allowing it to learn the relationships between customer characteristics and product preferences.

Step 5: Evaluation

After building the model, it is crucial to evaluate its performance. This can be done by comparing predicted outcomes to actual results. In this case, the model would predict future purchases, and then we would compare these predictions with real sales data. Common evaluation metrics include accuracy, precision, recall, and F1 score.

Step 6: Insights and Implementation

Once the model is evaluated, the final step is to draw actionable insights and implement them in decision-making. In our example, if the model identifies that customers who buy certain products together tend to make repeat purchases, this information could be used to create targeted marketing campaigns or suggest related products to customers on an e-commerce platform.

Common Techniques Used

- Classification: Assigning customers to predefined categories, such as likely or unlikely to buy a given product, based on their purchasing patterns.

- Clustering: Grouping similar customers together to identify common characteristics.

- Association Rule Mining: Identifying relationships between different products frequently bought together.

By following these steps, complex problems can be solved effectively, turning raw data into actionable insights that lead to improved decision-making and business outcomes.
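For the retail example, the association rule mining technique listed above can be sketched with two standard measures, support and confidence. The basket contents and function names are hypothetical. Real projects typically use an algorithm such as Apriori to search many candidate rules efficiently instead of scoring one rule by hand.

```python
def support(transactions, items):
    """Fraction of transactions that contain every item in `items`."""
    items = set(items)
    return sum(1 for t in transactions if items <= set(t)) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Share of transactions containing the antecedent that also contain the consequent."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)


# Hypothetical purchase histories, one set of products per transaction.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]
bread_milk_support = support(baskets, {"bread", "milk"})
bread_to_milk = confidence(baskets, {"bread"}, {"milk"})
```

Here bread and milk appear together in half of the baskets, and two of the three baskets containing bread also contain milk, so the rule "bread implies milk" has a confidence of about 0.67.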

Preparing for Complex Data Analysis Scenarios

In this section, we will discuss the best practices for handling intricate situations that require advanced analytical techniques. These challenges often arise when working with large, unstructured, or highly complex datasets. The ability to approach these scenarios effectively is crucial for deriving meaningful insights and making data-driven decisions. Preparation for these tasks involves a combination of domain knowledge, statistical expertise, and familiarity with a variety of algorithms and tools.

One of the most important aspects of tackling these problems is understanding the specific objectives and requirements of the task. Whether the goal is to identify patterns, predict future trends, or cluster related items, having a clear vision of what needs to be accomplished ensures that the right methods and techniques are applied. Additionally, knowing the limitations and assumptions of various approaches helps avoid common pitfalls and guides the overall strategy.

Key Strategies for Tackling Complex Problems

To effectively prepare for challenging analytical tasks, consider the following strategies:

- Understand the Context: Familiarize yourself with the subject matter and the context in which the analysis will be applied. This can help you tailor the approach to the specific needs of the task.

- Clean and Preprocess the Data: High-quality data is essential for accurate results. This step includes identifying and handling outliers, filling in or removing missing values, and ensuring that the dataset is consistent and reliable.

- Choose the Right Model: Selecting the appropriate model or algorithm is crucial for the success of the analysis. Factors like dataset size, type of problem, and performance requirements should all be considered when choosing a technique.

- Use Cross-Validation: To detect overfitting and estimate how well the model generalizes to unseen data, apply cross-validation. Training and testing on several different splits of the data gives a more reliable picture of the model’s performance than a single split.
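The cross-validation strategy above can be sketched in plain Python as follows. This is a simplified k-fold scheme under stated assumptions: the names `k_fold_indices`, `cross_val_score`, `fit_majority`, and `accuracy` are hypothetical helpers, and the majority-class "model" is only a stand-in for a real learner.

```python
import random
from collections import Counter


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) for each of k folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def cross_val_score(fit, score, X, y, k=5, seed=0):
    """Train on k-1 folds, score on the held-out fold, and average."""
    scores = []
    for train, test in k_fold_indices(len(X), k, seed):
        model = fit([X[i] for i in train], [y[i] for i in train])
        scores.append(score(model, [X[i] for i in test],
                            [y[i] for i in test]))
    return sum(scores) / len(scores), scores


def fit_majority(X_train, y_train):
    """Stand-in learner: always predict the most common training label."""
    return Counter(y_train).most_common(1)[0][0]


def accuracy(model, X_test, y_test):
    """Fraction of held-out labels matching the constant prediction."""
    return sum(1 for label in y_test if label == model) / len(y_test)


# Hypothetical dataset: 10 samples, 7 of class 0 and 3 of class 1.
X = list(range(10))
y = [0, 0, 0, 0, 0, 0, 0, 1, 1, 1]
mean_score, fold_scores = cross_val_score(fit_majority, accuracy, X, y, k=5)
print(mean_score, fold_scores)
```

Each sample is held out exactly once, so the averaged score reflects performance on data the model did not see during training. In practice, a library routine with stratified splitting is often preferable for imbalanced classes.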

Tools and Techniques to Consider

For complex scenarios, a range of analytical tools and methods may be necessary. Some commonly used techniques include:

- Machine Learning Algorithms: Decision trees, neural networks, and support vector machines are frequently employed for classification and regression tasks.

- Dimensionality Reduction: Methods like PCA (Principal Component Analysis) reduce the number of features in high-dimensional data while retaining most of its variance.

- Ensemble Methods: Combining multiple models, as in random forests or gradient boosting, to improve the robustness and accuracy of predictions.

- Clustering: Techniques like k-means or hierarchical clustering are useful when grouping similar items together without predefined labels.
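To show how one of these techniques works internally, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. It assumes a naive initialization that uses the first `k` points as starting centroids. Real implementations typically use smarter seeding, and the sample points are made up for illustration.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def k_means(points, k, max_iter=100):
    """Alternate assignment and centroid updates until labels stop changing."""
    centroids = [tuple(p) for p in points[:k]]  # naive initialization (assumption)
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = mean_point(members)
    return labels, centroids


# Hypothetical 2-D points forming two well-separated groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
          (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
labels, centroids = k_means(points, k=2)
print(labels, centroids)
```

On this sample the algorithm separates the first three points from the last three after two update rounds. Because results depend on the starting centroids, it is common to run k-means several times and keep the best clustering.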

By mastering these strategies and tools, you can confidently approach complex analysis tasks, whether they involve large volumes of unstructured data or intricate relationships between variables. Proper preparation and understanding of the techniques involved are the key to delivering actionable insights and making data-driven decisions.

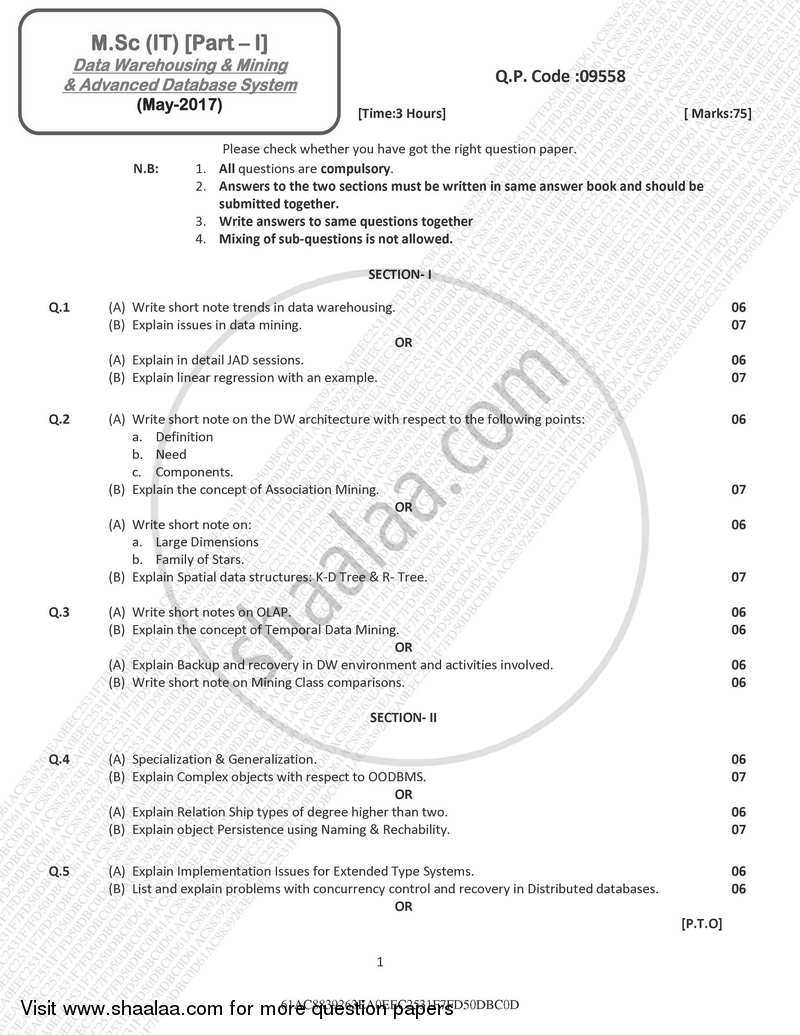

How to Review Past Assessment Papers

Reviewing previous assessment materials is a crucial step in preparing for future challenges. It provides insight into the format, style, and common topics covered, enabling more efficient preparation. By carefully analyzing past papers, you can identify recurring themes, frequently tested concepts, and areas where deeper understanding may be needed. This practice not only helps reinforce knowledge but also boosts confidence during assessments.

To make the most of this review process, it’s important to approach the task with a strategic mindset. Simply reading through past papers isn’t enough; active engagement with the material is key to extracting meaningful insights. Below are some steps that can help you review past assessments effectively:

Effective Strategies for Reviewing Past Papers

Follow these guidelines to ensure a thorough and productive review of previous materials:

- Identify Key Topics: Go through the papers and highlight recurring subjects or concepts. These are likely areas of focus for future assessments and should be studied in more depth.

- Analyze the Question Structure: Pay attention to the types of questions asked. Understanding the common formats (such as multiple choice, short answer, or essay) will help you prepare accordingly.

- Practice Answering: Try answering the questions without looking at the solutions. This will test your understanding and help identify gaps in knowledge.

- Understand Mistakes: When you review your past responses, focus on why certain answers were incorrect. Recognizing the cause of each mistake will help you avoid it in future assessments.

- Time Yourself: Set a time limit when practicing with past papers. This simulates real conditions and helps you manage your time effectively during the actual assessment.

How to Organize Your Review Process

Organizing your review sessions can increase efficiency and ensure comprehensive coverage of all topics. Consider the following steps:

- Set Clear Goals: Before you start reviewing, outline your objectives. For example, focus on mastering specific topics or improving your speed in answering questions.

- Break Down the Papers: Don’t attempt to review everything at once. Break down each past paper into manageable sections, focusing on one question or topic at a time.

- Track Your Progress: Keep track of your performance on each past paper. This will help you identify areas of improvement and monitor your progress over time.

- Review Solutions Thoroughly: After attempting the questions, compare your answers with the provided solutions. Understand the reasoning behind correct answers, even if you got the question right.

By following these strategies, reviewing past assessment materials becomes a more effective and rewarding process. Not only does it reinforce what you already know, but it also helps you identify weaknesses and refine your approach for future success.