Large-scale computing systems, and the frameworks and methods built around them, now underpin many industries. Understanding these topics is essential for anyone pursuing a career in technology or trying to master its core techniques. This section focuses on the areas most frequently tested in assessments, emphasizing the subjects most relevant to this fast-moving field.

Mastering these concepts requires both theoretical knowledge and practical application. Reviewing key practices, tools, and methodologies deepens understanding and builds the skills needed for real-world work. Whether you are preparing for a formal evaluation or refining your expertise, this resource is intended as a guide for efficient study.

The topics covered here test your grasp of fundamental principles, advanced techniques, and current tools. With an emphasis on critical thinking and problem-solving, they provide a solid foundation for anyone working toward proficiency in the field.

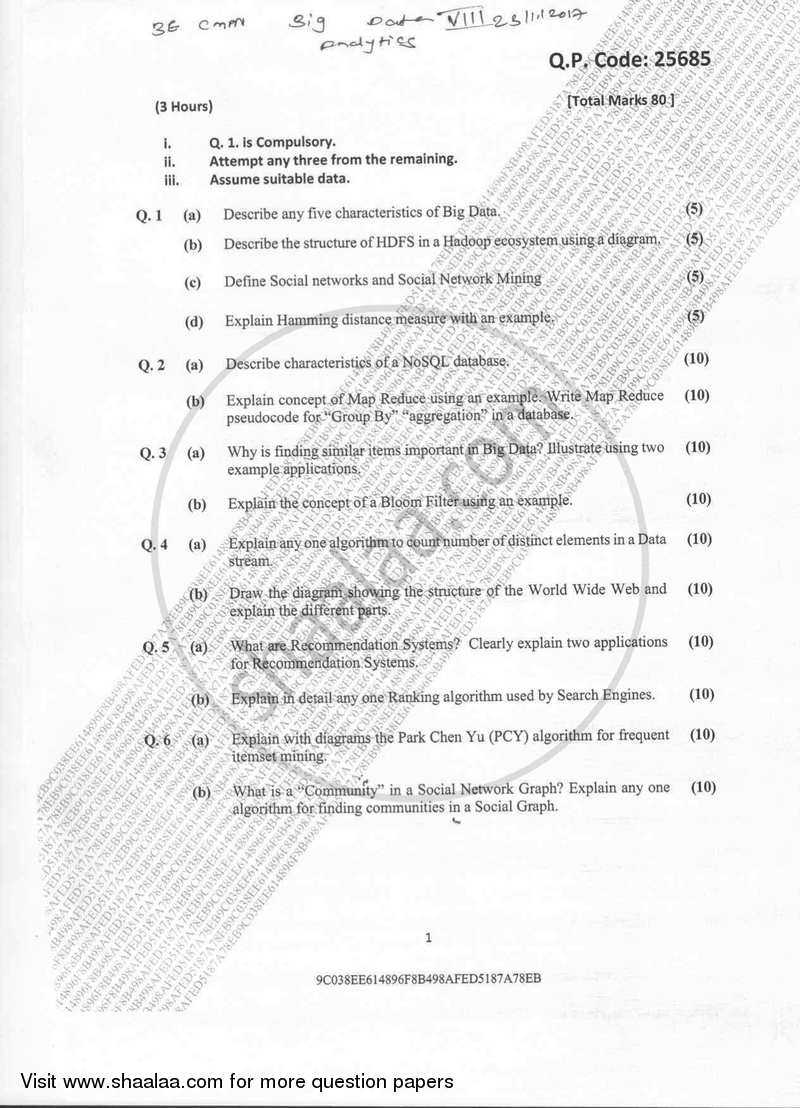

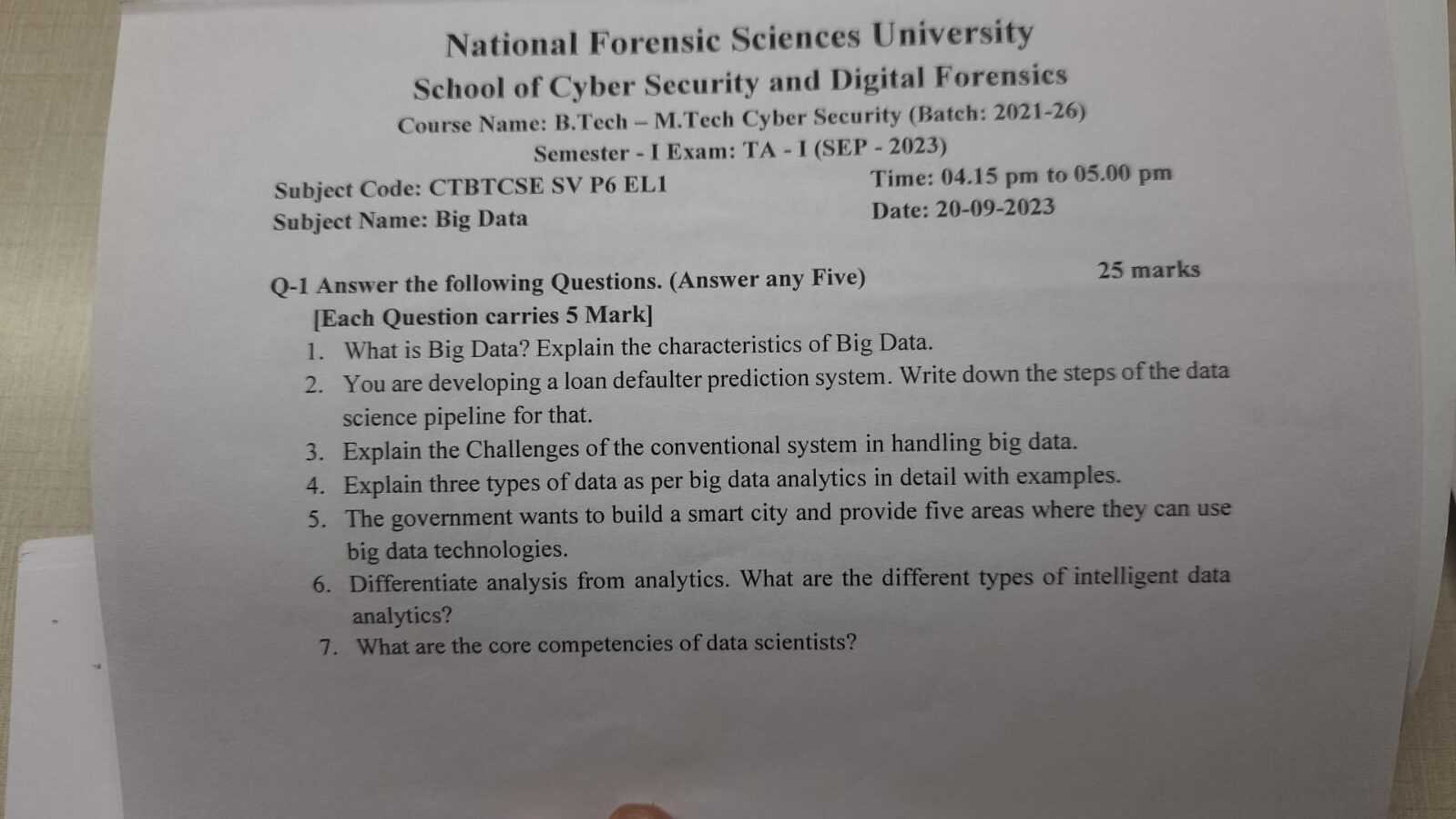

Big Data Exam Questions and Answers

Excelling in this rapidly evolving field requires a solid grasp of its core concepts and techniques. This section surveys the subjects most often encountered in assessments and pairs each with its practical applications, so you can strengthen your expertise and prepare for real-world work.

The guide works through examples that clarify important principles and methodologies and show how these concepts are typically tested. Whether you are reviewing for a formal evaluation or improving your overall proficiency, the material is organized to build your knowledge step by step.

Working through the practice material will familiarize you with the most commonly tested themes, sharpen your analytical skills, and reinforce your ability to apply theory to practical situations, both in your studies and in your professional work.

Overview of Big Data Concepts

Anyone working with modern technology benefits from understanding the principles behind large-scale information systems. These systems process vast amounts of data, often in real time, to uncover patterns and support decision-making. This section introduces the core ideas behind these environments as a foundation for the topics that follow.

Key components include storage solutions, processing techniques, and tools built to cope with immense data volumes. Managing, analyzing, and interpreting this data efficiently is central to the success of these technologies, so a thorough understanding of these fundamentals is crucial for anyone aiming to work in the field.

This overview is brief, but it covers the main building blocks of modern processing systems and how they interact to form a cohesive whole. Mastering these concepts is the first step toward working proficiently with complex data technologies and contributing to advances in the area.

Key Techniques in Data Processing

In large-scale information handling, mastering a range of processing methods is crucial for effective analysis and decision-making. These techniques extract useful insights from vast quantities of data accurately and efficiently. Whether the data is structured or unstructured, a professional in the field must be able to choose and apply the right method.

Real-Time Processing

Real-time processing handles data as soon as it is generated, which makes it essential for applications where speed is a priority. Incoming data is analyzed and acted on within moments of arrival, supporting industries such as finance, healthcare, and telecommunications, where decisions depend on current information.

Batch Processing

Batch processing, by contrast, collects data and processes it in large volumes at scheduled intervals, for example in a nightly job. It suits scenarios where immediate results are unnecessary but large datasets must be handled efficiently. Because each run processes many records together, well-tuned batch jobs can reduce overall processing time and make better use of computing resources.
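The contrast between the two models can be sketched in a few lines of Python. This is a minimal illustration, not a framework: `batch_total` and `streaming_totals` are hypothetical helper names, and a plain list stands in for both a stored dataset and an incoming stream.

```python
from typing import Iterable, Iterator


def batch_total(records: list[float]) -> float:
    """Batch style: wait until the whole dataset is collected, then compute once."""
    return sum(records)


def streaming_totals(records: Iterable[float]) -> Iterator[float]:
    """Streaming style: update the result as each record arrives."""
    running = 0.0
    for value in records:
        running += value
        yield running  # an up-to-date result is available after every event


if __name__ == "__main__":
    amounts = [12.5, 7.5, 30.0]
    print(batch_total(amounts))             # one answer, after all data is in
    print(list(streaming_totals(amounts)))  # one answer per incoming record
```

Both approaches reach the same final total; the difference is when results become available and how much data must be held before computing.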

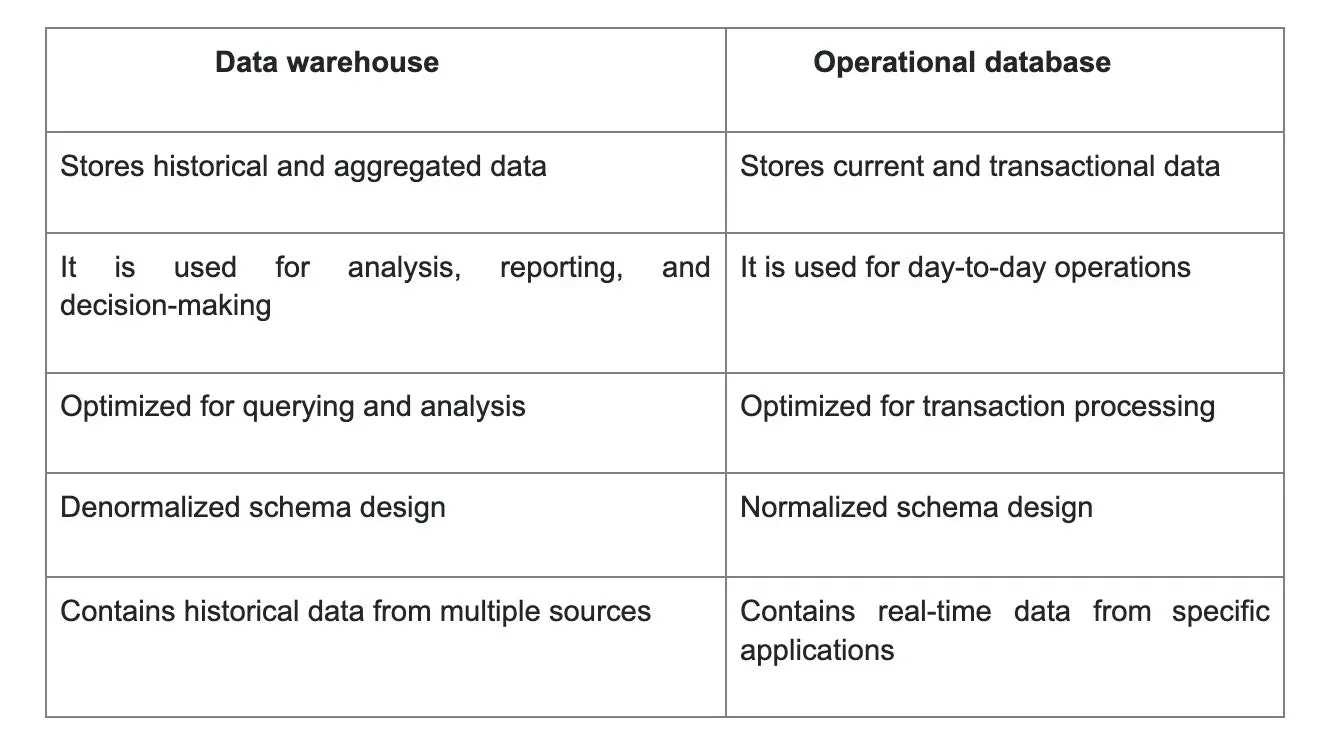

Understanding Data Warehousing

The concept of organizing vast amounts of information for efficient querying and analysis is central to modern business intelligence. By consolidating information from various sources into a unified repository, this approach ensures that data is accessible, reliable, and ready for in-depth analysis. Understanding the architecture and components involved is essential for maximizing the potential of these systems.

In a typical setup, data is extracted from source systems, transformed (cleaned, standardized, and reshaped), and loaded into the warehouse, a process known as ETL (extract, transform, load). Once loaded, it is readily available for reporting and analysis, so analysts do not have to sift through raw, unstructured source data. Managing this pipeline well is what keeps decision-makers supplied with accurate, up-to-date information.

While data storage is an essential aspect, the true power of a warehouse lies in its ability to integrate diverse datasets and present them in a way that supports meaningful analysis. Optimizing this infrastructure can significantly enhance an organization’s ability to make informed, data-driven decisions across various departments.
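A minimal sketch of the extract, transform, and load steps, using Python's built-in `csv` and `sqlite3` modules. The in-memory SQLite database stands in for a warehouse, and the CSV text, the `sales` table, and the cleaning rules (trim and lower-case region names, skip rows with no amount) are illustrative assumptions rather than a standard.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system: inconsistent casing, stray spaces, a missing amount.
RAW_CSV = """order_id,region,amount
1, north ,100.0
2,NORTH,50.5
3,south,
4,South,20.0
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[tuple[int, str, float]]:
    """Transform: normalize region names and drop rows with a missing amount."""
    clean = []
    for row in rows:
        if not row["amount"].strip():
            continue  # incomplete record: skip it rather than load a bad value
        clean.append((int(row["order_id"]), row["region"].strip().lower(), float(row["amount"])))
    return clean


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Load: write the cleaned rows into the warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(conn, transform(extract(RAW_CSV)))
    query = "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    for region, total in conn.execute(query):
        print(region, total)
```

The final query groups sales by region, which only gives correct totals because the transform step unified the spelling of region names before loading.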

Challenges in Big Data Management

Handling large-scale information systems presents numerous obstacles, particularly as the volume, variety, and velocity of incoming data continue to grow. Managing such environments means addressing several key issues that can undermine efficiency and accuracy. Overcoming them is essential if these systems are to support effective decision-making and day-to-day operations.

- Data Quality – Keeping data accurate, consistent, and reliable is difficult because of errors, incomplete records, and inconsistencies across sources.

- Storage Capacity – Data volumes often outpace available storage, so capacity must be used efficiently while keeping data easy to retrieve and manage.

- Scalability – As data volumes increase, systems must scale to meet growing demand without sacrificing performance or reliability.

- Security – Protecting sensitive information from unauthorized access and breaches is an ongoing challenge that requires robust encryption and access control.

- Real-Time Processing – Analyzing data as it arrives places heavy demands on processing systems, which need efficient algorithms and specialized tools to deliver insights with low latency.

Addressing these challenges often involves adopting new technologies, refining workflows, and maintaining a flexible infrastructure that can evolve with emerging needs. Successful management hinges on the ability to balance efficiency, security, and adaptability while keeping systems running smoothly.

Data Analytics in Real-World Scenarios

The ability to analyze large sets of information and draw actionable insights is fundamental to solving complex problems in various industries. In real-world applications, this capability is crucial for improving business operations, enhancing customer experiences, and making informed decisions. By applying analytics techniques to real-time data, organizations can uncover patterns, forecast trends, and optimize processes for greater efficiency.

Healthcare Applications

In healthcare, analysis tools are used to track patient outcomes, optimize treatment plans, and predict disease outbreaks. By drawing on large volumes of patient data, professionals can identify potential risks, suggest personalized interventions, and improve overall care. Real-time analysis lets doctors and researchers act quickly on current information, which can improve outcomes and reduce costs.

Retail and Customer Insights

In the retail industry, understanding consumer behavior is key to driving sales and improving customer satisfaction. By analyzing purchasing patterns, feedback, and demographic data, companies can tailor their offerings to meet customer needs more effectively. Predictive models help retailers adjust inventory, pricing strategies, and marketing efforts to stay competitive and maximize revenue.

Common Algorithms for Data Analysis

Various techniques are used to extract insights from vast amounts of information, each serving a specific purpose based on the type of analysis required. These methods range from simple statistical models to advanced machine learning algorithms, designed to uncover patterns, predict future trends, and optimize decision-making processes. Understanding the most commonly used algorithms is crucial for anyone working with complex datasets.

Supervised Learning Algorithms

Supervised learning trains models on labeled data, that is, examples whose correct output is already known, and then uses those models to make predictions or classifications. It applies when historical data with known outcomes is available for training. Common algorithms include:

- Linear Regression – A basic algorithm for predicting a continuous output as a linear combination of the input variables.

- Logistic Regression – Used for binary classification; it estimates the probability that an input belongs to one of two classes.

- Support Vector Machines (SVM) – A classification technique that finds the hyperplane separating the classes with the largest possible margin.

- Decision Trees – A model that makes predictions by splitting data into branches based on feature values, creating a tree-like structure.
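As an illustration of supervised learning, the sketch below fits a one-variable linear regression with the closed-form ordinary least squares formulas (slope equals the covariance of x and y divided by the variance of x). It uses only the standard library; the function name is hypothetical, and in practice a library such as scikit-learn would handle multiple features and numerical details.

```python
def fit_simple_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) minimizing the squared error of y = slope * x + intercept."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Closed-form ordinary least squares: slope = cov(x, y) / var(x).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("x values must not all be equal")
    slope = cov_xy / var_x
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept
```

For example, fitting the labeled points (1, 3), (2, 5), (3, 7), (4, 9) returns a slope of 2.0 and an intercept of 1.0, so the model predicts 11.0 for x = 5.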

Unsupervised Learning Algorithms

Unsupervised learning identifies patterns in datasets without labeled outcomes. These algorithms are used for clustering (grouping similar data points) and for reducing the complexity of large datasets. Key algorithms include:

- K-Means Clustering – Groups data into k clusters by assigning each point to the nearest cluster center (centroid), helping to reveal patterns or segments.

- Principal Component Analysis (PCA) – A dimensionality reduction technique that transforms a large set of variables into a smaller one while retaining key information.

- Hierarchical Clustering – Builds a tree of clusters by progressively merging or splitting them based on distance metrics.
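The k-means procedure described above (Lloyd's algorithm) alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points, until the centroids stop changing. The sketch below is a plain-Python version for small inputs; the random initialization from input points and the `max_iter` cap are simplifying assumptions, and results can depend on the starting centroids.

```python
import math
import random


def kmeans(points: list[tuple[float, ...]], k: int, max_iter: int = 100, seed: int = 0):
    """Lloyd's algorithm: alternate between assigning points and recomputing centroids."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen input points

    def assign(cents):
        # Index of the nearest centroid (Euclidean distance) for every point.
        return [min(range(k), key=lambda i: math.dist(p, cents[i])) for p in points]

    for _ in range(max_iter):
        labels = assign(centroids)
        new_centroids = []
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                # New centroid = coordinate-wise mean of the cluster's members.
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's old centroid
        if new_centroids == centroids:
            break  # centroids did not move: converged
        centroids = new_centroids
    return centroids, assign(centroids)
```

On two well-separated groups of points, the returned labels place each group in its own cluster; on less clearly separated data, running with several seeds and keeping the best result is a common precaution.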

Big Data Tools and Technologies

To manage and process vast volumes of information efficiently, specialized tools and technologies are required. These solutions are designed to handle high-scale environments, ensuring that complex tasks like storage, processing, and analysis can be performed with speed and accuracy. Understanding the key platforms and tools available is crucial for anyone involved in advanced information management.

Among the most widely used technologies are distributed computing frameworks, which process large datasets in parallel across many machines. Storage systems have evolved alongside them to hold growing volumes, keeping data securely stored and available when needed. Together, these technologies form the backbone of modern analysis systems and let organizations turn raw data into useful insights.

Tools range from open-source platforms to proprietary software, and the right choice depends on the needs of the project. Mastering them is essential for tuning performance and ensuring that systems scale as requirements change.
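The core idea behind parallel processing across machines is partitioning: records are split by key so that each worker can process its share independently, and the partial results are then combined. In this sketch, threads in a single process stand in for separate machines (they illustrate the structure, not a speedup), and the function names and the choice of `zlib.crc32` as a stable hash are assumptions for illustration, not how any particular framework is implemented.

```python
import zlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition_for(key: str, num_partitions: int) -> int:
    # crc32 gives the same result on every run, unlike Python's salted hash() for strings.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition(records: list[tuple[str, int]], num_partitions: int) -> list[list[tuple[str, int]]]:
    parts: list[list[tuple[str, int]]] = [[] for _ in range(num_partitions)]
    for key, value in records:
        parts[partition_for(key, num_partitions)].append((key, value))
    return parts


def sum_by_key(part: list[tuple[str, int]]) -> Counter:
    # Work done independently by one "node" on its own partition.
    totals: Counter = Counter()
    for key, value in part:
        totals[key] += value
    return totals


def parallel_sum_by_key(records: list[tuple[str, int]], num_partitions: int = 4) -> dict[str, int]:
    parts = partition(records, num_partitions)
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(sum_by_key, parts))
    # Each key lives in exactly one partition, so merging partial results never double-counts.
    merged: Counter = Counter()
    for partial in partials:
        merged.update(partial)
    return dict(merged)
```

Because all records with the same key land in the same partition, no worker needs to see another worker's data, which is what allows this pattern to scale out across machines.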

Preparing for Data Science Exams

Successfully preparing for assessments in the field of information analysis requires a combination of theory, practical skills, and a deep understanding of various analytical techniques. It’s essential to not only review key concepts but also to practice applying these skills to real-world scenarios. This approach ensures that you are well-equipped to tackle complex problems and demonstrate your proficiency in the subject.

To be fully prepared, consider following a structured approach to study, focusing on key areas such as statistical methods, machine learning algorithms, and data visualization techniques. Here are some tips for effective preparation:

- Understand Core Concepts – Review foundational theories and methodologies used in the field, including regression models, classification techniques, and clustering algorithms.

- Practice Coding – Many assessments require practical problem-solving using programming languages like Python or R. Practice coding exercises regularly to strengthen your programming skills.

- Work on Case Studies – Analyze real-world datasets and work through case studies to apply theoretical knowledge to practical situations.

- Review Past Problems – Going through previous assessments or sample problems will help you familiarize yourself with the types of challenges you may encounter.

- Time Management – Develop strategies to manage time effectively during the assessment, ensuring that you can answer all questions within the allotted time.

A structured study plan will help you approach your assessments with confidence. Remember that consistency and regular practice are key to mastering the material.

Important Big Data Frameworks

In advanced information processing, a variety of frameworks handle large-scale computing tasks. They provide the infrastructure to process, store, and analyze vast amounts of data efficiently, allowing organizations to scale their systems, improve performance, and manage large datasets across distributed environments with less complexity.

Each framework has its strengths and specific use cases, catering to different aspects of processing and analysis. From distributed computing environments to real-time processing systems, these frameworks are designed to meet the growing demands of handling complex operations. Familiarity with these tools is essential for anyone involved in high-performance computing and advanced analytics.

Here are some of the most commonly used frameworks:

- Apache Hadoop – A popular open-source framework for the distributed storage and processing of large datasets. It scales out across clusters of commodity hardware.

- Apache Spark – Known for its speed and ease of use, Spark is a unified analytics engine for large-scale data processing that supports batch jobs, stream processing, SQL queries, and machine learning.

- Apache Flink – A framework designed for stream processing, Flink excels at handling continuous data streams with low latency and high throughput.

- Cassandra – A highly scalable, distributed NoSQL (wide-column) database that provides high availability and fault tolerance, commonly used to store large volumes of data across many servers.

- Google BigQuery – A serverless, highly scalable data warehouse solution designed for analyzing massive datasets with ease and efficiency.

These frameworks are central to modern information management systems, enabling rapid processing, flexibility, and scalability across various industries. Mastery of these tools enhances the ability to manage large volumes of information and extract actionable insights efficiently.

Real-Time Data Processing Essentials

Real-time processing refers to the continuous handling of incoming information, where insights and decisions must be made promptly, often within milliseconds or seconds. This capability is crucial for applications that rely on the immediate analysis of streaming information, such as fraud detection systems, online recommendations, and IoT (Internet of Things) applications. Efficient real-time systems must be capable of managing large volumes of continuously changing data without compromising performance or accuracy.

Key components of real-time processing include ingestion, processing, and output stages. Ingestion involves collecting data from various sources, processing applies algorithms to extract insights, and output involves delivering results to end users or systems in near real-time.
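The three stages can be illustrated with a tumbling-window count, a common streaming computation that groups events into fixed, non-overlapping time windows. This is a simplified sketch: it assumes events arrive in timestamp order with integer timestamps in seconds, and the function name is hypothetical. Production engines such as Flink also handle late and out-of-order events, which this code does not.

```python
from collections import Counter
from typing import Iterable, Iterator


def tumbling_window_counts(
    events: Iterable[tuple[int, str]], window_seconds: int
) -> Iterator[tuple[int, dict[str, int]]]:
    """Emit (window_start, counts per key) for each fixed, non-overlapping window.

    Assumes events arrive in timestamp order.
    """
    current_window = None
    counts: Counter = Counter()
    for ts, key in events:                        # ingestion: consume one event at a time
        window_start = ts - (ts % window_seconds)
        if current_window is not None and window_start != current_window:
            yield current_window, dict(counts)   # output: the previous window is closed
            counts = Counter()
        current_window = window_start
        counts[key] += 1                         # processing: update this window's state
    if current_window is not None:
        yield current_window, dict(counts)       # flush the last open window
```

With a 5-second window, events at seconds 0, 1, and 4 are reported together for the window starting at 0, and events at seconds 5 and 7 for the window starting at 5.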

Here is a comparison of some essential technologies used in real-time processing:

| Technology | Purpose | Key Features |
|---|---|---|
| Apache Kafka | Distributed event streaming platform | High throughput, fault tolerance, scalability |
| Apache Flink | Stream processing engine | Low latency, exactly-once state consistency, real-time analytics |
| Apache Storm | Real-time computation system | Distributed, real-time computation of data streams |
| Google Cloud Dataflow | Unified stream and batch processing | Scalable, serverless, integrates with other Google Cloud services |

Real-time processing tools allow organizations to handle complex and dynamic data streams, enabling faster decision-making and more responsive systems. These technologies are foundational in industries where time-sensitive information is crucial for success.

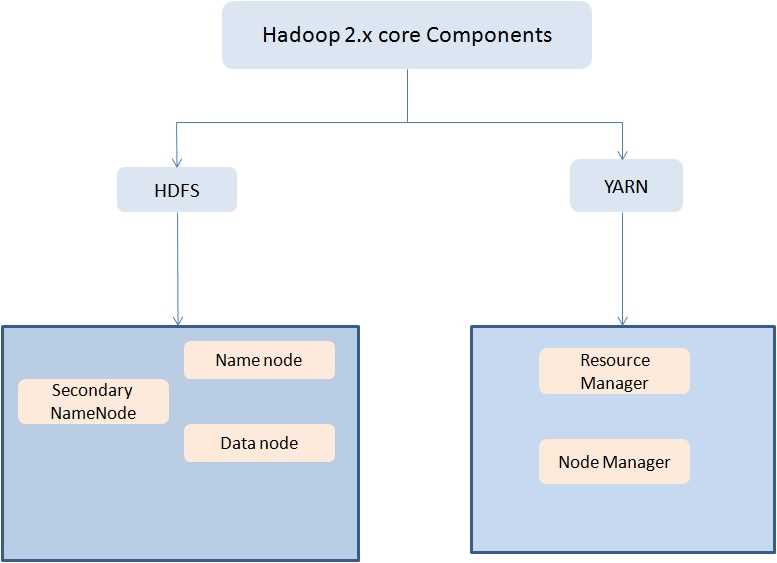

Understanding Hadoop Ecosystem Components

The Hadoop ecosystem is a suite of tools and frameworks designed to manage, process, and analyze vast amounts of unstructured or semi-structured information. This open-source platform enables distributed computing, allowing organizations to store and process enormous volumes of data across clusters of machines. The ecosystem includes various components that work together to provide scalability, fault tolerance, and high availability in a distributed environment.

The core of this ecosystem consists of multiple modules, each serving a unique purpose in managing the lifecycle of information processing. From storage systems to processing engines, these components collectively enhance the performance and efficiency of handling large-scale operations. Understanding how each part of the ecosystem interacts is essential for anyone looking to work with this technology.

Here are some key components of the Hadoop ecosystem:

- Hadoop Distributed File System (HDFS) – The primary storage system that enables reliable and scalable storage of large files across multiple nodes in a cluster.

- MapReduce – A programming model and framework for processing large datasets in a distributed fashion. A job is split into map tasks that run in parallel on separate chunks of the input, followed by reduce tasks that aggregate the intermediate results.

- YARN (Yet Another Resource Negotiator) – A resource management layer that handles resource allocation and scheduling for applications running on a Hadoop cluster.

- Hive – A data warehousing tool built on top of Hadoop that allows users to query large datasets using a SQL-like language.

- HBase – A column-oriented NoSQL database that runs on top of HDFS and provides random, real-time read and write access to large datasets.

- Pig – A high-level platform whose scripting language, Pig Latin, is translated into MapReduce jobs, so data flows can be expressed without writing MapReduce code directly.

- ZooKeeper – A coordination service that maintains configuration information and provides synchronization and a naming registry for distributed systems.

Each component in the ecosystem is designed to address specific challenges faced when processing vast amounts of information in a distributed setting. By combining these tools, organizations can efficiently manage large-scale applications while maintaining high performance, reliability, and scalability.
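The MapReduce model listed above is easiest to see in the classic word-count example. The sketch below runs the map, shuffle, and reduce phases sequentially in one Python process; in Hadoop the same phases are distributed across the cluster, and the function names here are illustrative rather than Hadoop's Java API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str) -> list[tuple[str, int]]:
    # Mapper: emit (word, 1) for every word in one input record.
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs) -> dict[str, list[int]]:
    # Shuffle: group all values by key, as the framework does between map and reduce.
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Reducer: combine all values collected for one key.
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())
```

Each mapper call depends only on its own line and each reducer call only on one key's values, which is exactly what lets the framework run them in parallel on different nodes.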

Data Security in Big Data Systems

As the volume of information increases, securing sensitive and critical data becomes a central concern for organizations managing large-scale systems. Protecting the integrity, confidentiality, and availability of stored information is essential, particularly when dealing with distributed environments. In such systems, safeguarding against unauthorized access, data breaches, and vulnerabilities is a complex but necessary task.

Building security mechanisms into every stage of the data lifecycle makes it far harder for unauthorized users to compromise the system. These measures should include encryption, access controls, monitoring, and secure communication protocols to protect privacy and integrity. Growing reliance on cloud services and distributed computing complicates the task further, so organizations need a comprehensive security strategy.

Key security practices for managing large-scale systems include:

| Security Mechanism | Purpose | Key Benefits |
|---|---|---|
| Encryption | Converts data into ciphertext that can be read only with the correct key | Prevents unauthorized reading, ensures confidentiality |
| Access Control | Limits access to information based on user roles | Ensures that only authorized personnel can interact with sensitive data |
| Authentication | Verifies the identity of users and systems | Prevents unauthorized users from gaining access to critical resources |
| Audit Trails | Record user activity and access to systems | Help detect and respond to potential security threats |
| Firewalls | Monitor and control incoming and outgoing network traffic | Protect systems from unauthorized access and external threats |

By employing a multi-layered security approach, organizations can better protect themselves from potential threats while ensuring the safe handling of sensitive information. A focus on continuous monitoring, compliance with security standards, and proactive threat mitigation can significantly reduce the risks associated with managing large-scale systems.
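Two of the mechanisms in the table, access control and audit trails, can be combined in a small role-based sketch. The roles, permissions, and in-memory `audit_log` list are hypothetical; a real deployment would keep policies in a central store and write audit records to tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real systems load this from a policy store.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "delete"},
}

audit_log: list[dict] = []


def is_allowed(user_roles: set[str], action: str) -> bool:
    # Access is granted if any of the user's roles includes the requested action.
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


def access(user: str, user_roles: set[str], action: str, resource: str) -> bool:
    allowed = is_allowed(user_roles, action)
    # Record every attempt, allowed or denied, so it can be reviewed later.
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed
```

Logging denied attempts as well as successful ones matters: repeated denials are often the first visible sign of a probing attacker.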

Data Quality Management Practices

Ensuring that information is accurate, consistent, and reliable across systems is crucial for any organization managing large numbers of records. Effective data quality management identifies and eliminates issues that would compromise stored or processed records, so that business decisions rest on trustworthy data and operations run efficiently.

Key practices in managing the quality of information include implementing thorough validation methods, conducting regular audits, and integrating automated processes that ensure continual improvement. These practices help mitigate errors, reduce inconsistencies, and maintain a high standard of record integrity, which is essential for analytics, reporting, and strategic planning.

Common Approaches to Data Quality

Different methods are employed to maintain high-quality records, each addressing specific challenges and stages of information management. These strategies include:

- Data Validation: This involves cross-checking information to ensure its accuracy before it is entered into the system. Validating incoming records prevents incorrect or incomplete entries that could lead to inaccurate analyses.

- Data Cleansing: Involves identifying and rectifying or removing erroneous, duplicated, or outdated information from systems, ensuring that only reliable data remains for decision-making.

- Consistency Checks: Ensures that data across multiple systems or platforms is aligned and consistent, preventing discrepancies that could arise from different formats or terminology.

- Data Profiling: Involves analyzing records to understand their structure, content, and quality. Profiling helps uncover potential issues in the data, such as gaps or patterns that might require attention.
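The validation, cleansing, and profiling approaches above can be sketched in plain Python. The required fields, the simple `@` check for e-mail addresses, and the rule that the first record per `customer_id` wins are illustrative assumptions; real pipelines usually express such rules in an ETL or data-quality tool.

```python
from collections import Counter

REQUIRED_FIELDS = ("customer_id", "email")  # hypothetical schema for this example


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS if not record.get(field)]
    email = record.get("email") or ""
    if email and "@" not in email:
        problems.append("malformed email")  # deliberately simple format check
    return problems


def cleanse(records: list[dict]) -> list[dict]:
    """Keep valid records and drop duplicates by customer_id (first occurrence wins)."""
    seen: set[str] = set()
    clean = []
    for record in records:
        if validate(record) or record["customer_id"] in seen:
            continue
        seen.add(record["customer_id"])
        clean.append(record)
    return clean


def profile(records: list[dict]) -> dict[str, int]:
    """Count missing values per required field: a minimal form of data profiling."""
    missing: Counter = Counter()
    for record in records:
        for field in REQUIRED_FIELDS:
            if not record.get(field):
                missing[field] += 1
    return dict(missing)
```

Running `profile` before `cleanse` shows where problems come from, which helps fix them at the source instead of repeatedly filtering them out downstream.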

Tools and Techniques for Data Quality Improvement

To successfully implement these practices, organizations often turn to a range of tools and techniques that automate tasks, provide insights into data health, and integrate quality measures throughout data pipelines. These tools can help improve accuracy, speed, and consistency across large datasets. Some common tools include:

- ETL Tools: These tools extract, transform, and load data, ensuring that it is cleansed, validated, and formatted before it is entered into the target system.

- Data Governance Platforms: These platforms provide guidelines and frameworks for managing information quality, ensuring compliance with regulations and internal standards.

- Master Data Management (MDM): MDM systems consolidate information from various sources into a single, trusted version, reducing redundancies and ensuring consistency.

By implementing these practices and using the right tools, organizations can ensure that their information remains accurate, reliable, and useful for strategic decision-making.

Key Architectures for Managing Large-Scale Systems

Managing large volumes of records efficiently requires a carefully designed architecture that can store, process, and retrieve information at scale while supporting both operational and analytical needs. Well-chosen architectures optimize performance, scalability, and flexibility when handling massive amounts of information across distributed environments.

There are several approaches to structuring these systems, each designed to address specific business needs and technical challenges. These architectures are built to support real-time processing, batch processing, and hybrid models, depending on the requirements of the use case.

Distributed Computing Architectures

Distributed computing frameworks enable the processing of records across multiple servers or machines, providing enhanced scalability and fault tolerance. Some of the most commonly used architectures in this category include:

- Hadoop Ecosystem: Built around a distributed file system (HDFS), this architecture processes vast amounts of data in parallel across a cluster. It can handle both structured and unstructured information.

- Apache Spark: Spark is often much faster than Hadoop MapReduce for iterative and interactive workloads because it keeps intermediate data in memory. It supports near-real-time stream processing and scales horizontally across a cluster of machines.

- NoSQL Databases: These systems, such as MongoDB or Cassandra, are specifically designed to handle large-scale, distributed records across clusters of machines, offering flexibility in data modeling and high availability.

Cloud-Based Architectures

Cloud platforms provide scalable, on-demand resources for managing large quantities of information. Cloud-based frameworks offer flexibility in terms of storage and computing power, reducing the need for organizations to maintain their own physical infrastructure. Popular cloud-based architectures include:

- Amazon Web Services (AWS): AWS provides an extensive set of tools and services to manage and process vast amounts of information, such as S3 for storage and Redshift for data warehousing.

- Google Cloud Platform (GCP): GCP offers a range of services for distributed computing, such as BigQuery for fast analysis and Cloud Storage for large-scale information storage.

- Microsoft Azure: Azure provides services like Azure Blob Storage and Azure Data Lake for storing and processing vast quantities of data, enabling scalable analytics and machine learning.

Event-Driven Architectures

For systems that require real-time processing of incoming records, event-driven architectures are essential. These architectures are designed to handle continuous streams of information in a highly responsive manner. Key components of event-driven frameworks include:

- Apache Kafka: A distributed event streaming platform that enables real-time processing and integration of large-scale data streams across systems.

- Apache Flink: A framework designed for real-time stream processing, providing low-latency analytics and handling large-scale data flows.
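Kafka's central abstraction is an append-only log that consumers read at their own pace by tracking an offset. The toy class below imitates that idea in memory with a single partition and per-consumer-group offsets; it is not Kafka's client API, and it omits partitioning, persistence, and replication.

```python
from collections import defaultdict


class InMemoryLog:
    """Toy append-only log with per-consumer-group offsets (not the Kafka API)."""

    def __init__(self) -> None:
        self._events: list[str] = []
        self._offsets: dict[str, int] = defaultdict(int)  # next offset to read, per group

    def publish(self, event: str) -> int:
        # Producers only ever append; existing events are never modified.
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def poll(self, group: str, max_events: int = 10) -> list[str]:
        start = self._offsets[group]
        batch = self._events[start:start + max_events]
        self._offsets[group] = start + len(batch)  # commit: the next poll resumes here
        return batch
```

Because each consumer group keeps its own offset, a billing service and an analytics service can read the same stream independently, and a new group can replay the log from the beginning.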

By leveraging these architectures, organizations can build flexible, scalable, and efficient systems for processing vast amounts of information, ensuring they can handle complex tasks like predictive analytics, machine learning, and large-scale reporting.

Cloud Computing Integration for Managing Large-Scale Systems

The integration of cloud computing with large-scale processing systems provides organizations with enhanced flexibility, scalability, and cost efficiency. By leveraging the cloud, companies can seamlessly handle vast volumes of information while ensuring high availability, easy data access, and improved computational power. This combination of cloud and distributed processing technologies enables businesses to innovate, scale rapidly, and reduce infrastructure costs.

Cloud platforms offer on-demand storage, computing resources, and advanced analytics capabilities, which are ideal for systems requiring massive data processing. Through integration, these systems can offload resource-intensive tasks to the cloud, while keeping data accessible and secure. Whether it’s about processing, storage, or real-time analysis, cloud services play a crucial role in enabling businesses to optimize their workflow.

Cloud Platforms for Large-Scale Management

Several cloud service providers offer specialized tools to manage and process massive amounts of records, which help improve performance, speed, and reliability. Some of the most popular platforms include:

| Cloud Provider | Key Services | Benefits |
|---|---|---|
| Amazon Web Services (AWS) | S3, EC2, Redshift | Scalable storage, fast processing, integrated analytics |
| Google Cloud Platform (GCP) | BigQuery, Cloud Storage, Dataflow | Real-time analysis, flexible storage, ease of scaling |
| Microsoft Azure | Azure Blob Storage, Azure Data Lake | Comprehensive analytics, secure storage, integrated services |

Benefits of Cloud Integration

Integrating large-scale systems with cloud computing platforms offers numerous advantages that enhance business efficiency and technical capability:

- Scalability: Cloud platforms provide the ability to scale up or down based on the volume of processing and storage requirements, ensuring optimal performance without unnecessary overhead.

- Cost-Efficiency: With pay-as-you-go models, businesses can avoid the upfront costs associated with maintaining their own infrastructure, while only paying for the resources they use.

- High Availability: Cloud providers offer infrastructure that ensures high availability and fault tolerance, keeping critical systems online even in the event of hardware failures.

- Flexibility: Cloud-based systems can be easily reconfigured and adapted to new needs, enabling businesses to quickly incorporate new technologies and strategies.

By adopting cloud-based solutions, companies can overcome the limitations of traditional systems and gain access to a suite of powerful tools that enable better performance, enhanced security, and streamlined data management.

Data Visualization Techniques for Exam Success

Effective visual representation of information can significantly enhance understanding and retention, particularly when preparing for assessments. Visual aids such as charts, graphs, and diagrams not only simplify complex concepts but also help identify patterns, trends, and relationships within large sets of information. By mastering the art of data visualization, learners can better organize and present their knowledge, which aids in both comprehension and recall.

Key Visualization Methods for Learning

Several visualization techniques can be used to clarify and streamline study material, making it easier to understand and remember. These methods provide clarity and offer different ways of interpreting the same set of information, helping to reinforce key concepts. Some common techniques include:

- Bar Charts: Ideal for comparing quantities across categories, bar charts make differences and disparities between categories easy to spot at a glance.

- Line Graphs: These are useful for illustrating changes over time, allowing you to track progress and identify trends in chronological order.

- Pie Charts: Useful for showing how individual parts contribute to a whole, pie charts work best for simple breakdowns with a small number of categories.

- Flow Diagrams: Excellent for showing processes and workflows, these diagrams help visualize the sequence of steps and their connections.
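
The arithmetic behind bar and pie charts can be sketched without a plotting library. The example below is an illustrative sketch, not a recommended charting tool: the functions `proportions` and `text_bar_chart` and the sample counts are hypothetical. A bar's length is scaled relative to the largest value, while a pie slice represents each value's share of the total. In practice a library such as matplotlib would draw the actual charts.

```python
def proportions(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of the total for each category, as a pie chart shows it."""
    total = sum(counts.values())  # assumes at least one positive count
    return {label: 100 * value / total for label, value in counts.items()}


def text_bar_chart(counts: dict[str, int], width: int = 20) -> str:
    """Draw horizontal bars whose lengths are proportional to each value."""
    largest = max(counts.values())
    label_width = max(len(label) for label in counts)
    lines = []
    for label, value in counts.items():
        # The largest value gets the full width; others are scaled to it.
        bar = "#" * round(width * value / largest)
        lines.append(f"{label:<{label_width}} | {bar} {value}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical counts of practice questions answered per topic.
    answered = {"Storage": 12, "Processing": 8, "Security": 4}
    print(text_bar_chart(answered))
    print(proportions(answered))
```

The bar chart answers "which topic is largest?", while the proportions answer "what fraction of the whole does each topic make up?", the same distinction drawn in the list above.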

Benefits of Visual Aids in Studying

Incorporating visual aids into your study sessions offers numerous benefits that go beyond just presenting information. By using these tools effectively, students can:

- Enhance Memory Retention: Pairing key concepts with a visual representation gives you an additional cue to remember them by, which can improve recall during assessments.

- Identify Patterns and Trends: Visual tools help students spot recurring themes, allowing them to make connections between related concepts and anticipate questions that may arise.

- Clarify Complex Ideas: Visual aids break down complicated material into easily digestible parts, making it simpler to understand difficult or abstract topics.

- Boost Engagement: Engaging with visual representations keeps students focused, encouraging active participation in the learning process.

Incorporating visualization techniques into study routines not only makes learning more interactive but also provides an efficient way to organize information, ultimately contributing to exam success.

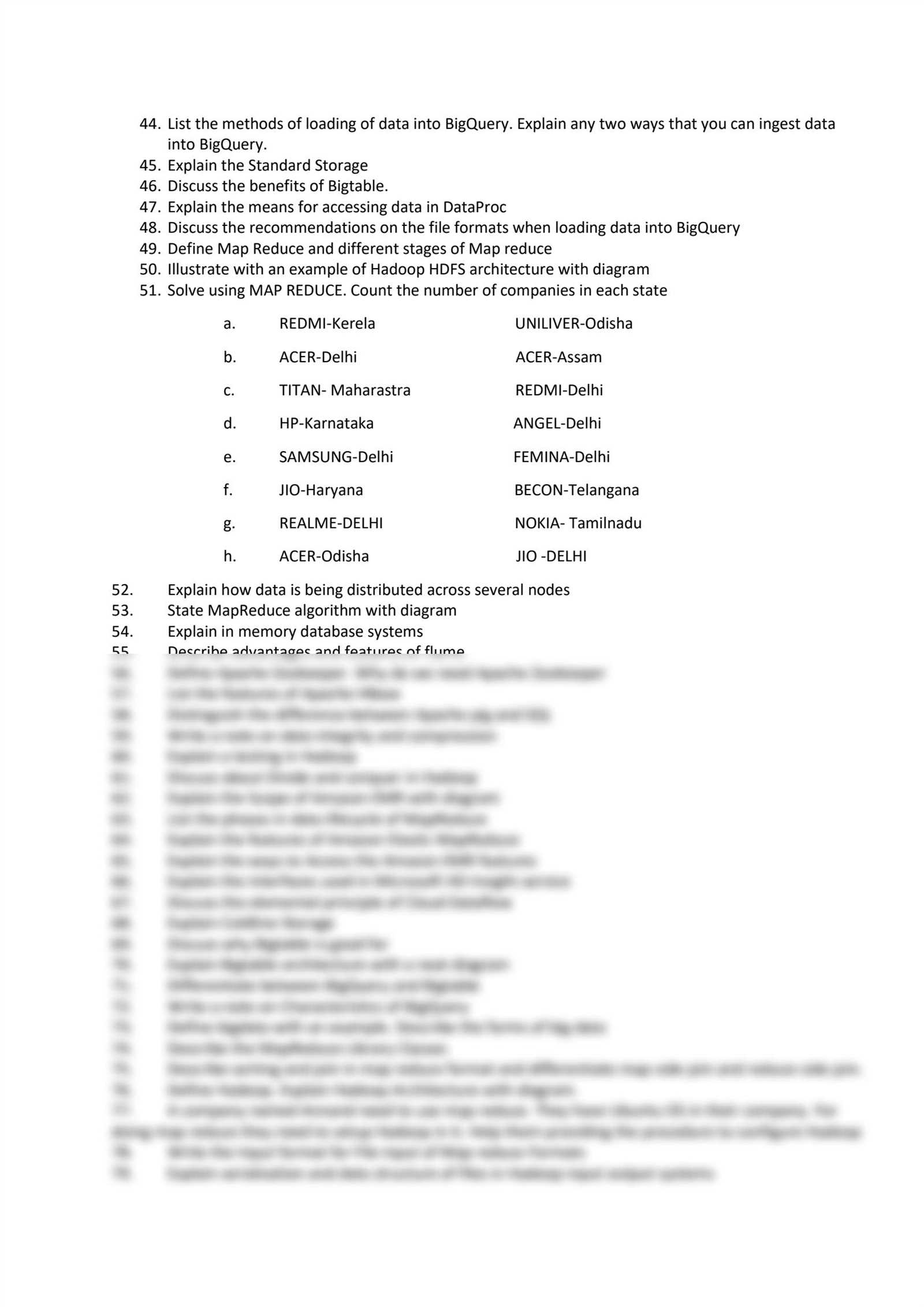

Sample Questions for Practice

Practicing with carefully crafted questions is a crucial part of mastering any subject. It helps solidify understanding, identify areas that need further attention, and sharpen problem-solving skills. By working through practice material regularly, learners become familiar with the format, structure, and range of topics that might be covered, which builds confidence and preparedness.

Practice Topics

Below are examples of practice scenarios designed to test your knowledge and abilities in the subject matter. These exercises focus on various concepts, techniques, and tools commonly encountered, allowing for well-rounded preparation.

- Define the concept of distributed computing. What are its advantages over traditional methods?

- Explain the difference between structured, semi-structured, and unstructured information.

- Describe how machine learning algorithms can be applied to identify patterns in large volumes of information.

- What are the key components of a cloud-based architecture for managing vast amounts of information?

- Provide an example of how a relational database differs from a NoSQL system in handling large-scale information storage.
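
For the first question, on distributed computing, the MapReduce pattern used by frameworks such as Apache Hadoop is a common illustration. The sketch below is a single-process simulation, not Hadoop code: the function names are hypothetical, and the phases run sequentially here, whereas a real cluster would run the map step on many nodes in parallel and move intermediate pairs between machines during the shuffle.

```python
from collections import defaultdict


def map_phase(chunk: str) -> list[tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input chunk."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(pairs) -> dict[str, list[int]]:
    """Shuffle: group intermediate values by key before reducing."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(grouped: dict[str, list[int]]) -> dict[str, int]:
    """Reduce: combine all values for a key into one result."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(chunks: list[str]) -> dict[str, int]:
    # In a real cluster each chunk would be mapped on a different node;
    # here the phases simply run one after another in a single process.
    mapped = [pair for chunk in chunks for pair in map_phase(chunk)]
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    print(word_count(["big data big ideas", "data at scale", "Big clusters"]))
```

The key design point for an answer is that map and reduce operate independently on separate keys and chunks, which is what allows the work to be spread across machines.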

Further Practice Exercises

Engaging with additional questions helps ensure thorough preparation for any assessment. Below are some more practice exercises that test critical thinking and application of various methodologies:

- How would you approach ensuring the accuracy and quality of information in a large system?

- What are the key factors to consider when selecting a framework for processing large-scale information?

- Discuss the role of real-time processing in applications such as social media or financial transactions.

- What challenges are associated with managing information privacy and security in large systems?

- Explain how data visualization can be used to improve the interpretability of complex data sets.
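
For the first of these exercises, on accuracy and quality, one common starting point is a set of automated checks for completeness, uniqueness, and validity. The sketch below assumes a hypothetical record schema with `id` and `amount` fields and arbitrary bounds on `amount`; the function name `check_quality` is illustrative. In a large system, the same rules would typically run inside the processing framework rather than as a standalone loop.

```python
# Hypothetical schema: every record should carry an "id" and an "amount".
REQUIRED_FIELDS = ("id", "amount")


def check_quality(records, max_amount: float = 1_000_000) -> dict[str, int]:
    """Count common data-quality problems in one pass over the records."""
    report = {"missing": 0, "duplicate": 0, "out_of_range": 0}
    seen_ids = set()
    for record in records:
        # Completeness: every required field must be present and non-null.
        if any(record.get(field) is None for field in REQUIRED_FIELDS):
            report["missing"] += 1
            continue
        # Uniqueness: the same ID must not appear twice.
        if record["id"] in seen_ids:
            report["duplicate"] += 1
            continue
        seen_ids.add(record["id"])
        # Validity: amounts must fall inside a plausible range (assumed bounds).
        if not 0 <= record["amount"] <= max_amount:
            report["out_of_range"] += 1
    return report


if __name__ == "__main__":
    sample = [
        {"id": 1, "amount": 250.0},
        {"id": 2, "amount": None},
        {"id": 1, "amount": 250.0},
        {"id": 3, "amount": -40.0},
    ]
    print(check_quality(sample))
```

Counting problems by category, rather than rejecting the whole batch, makes it easier to track quality over time and to decide which issues need fixing at the source.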

By practicing with these exercises, learners can reinforce their understanding and prepare for any challenges they may encounter. Regular practice is an essential part of the learning process, making it easier to recall key concepts when needed.