Understanding how widely values in a set differ from the average is a fundamental concept in statistics. This process helps identify the degree of variability within a collection of measurements, offering insights into the spread of numbers and their consistency.

By examining this measure, you can assess whether most numbers are clustered near the mean or if there are significant deviations. Knowing how to calculate this measure correctly is essential for analyzing trends, making decisions, and interpreting results in various fields such as finance, science, and quality control.

In this article, we will explore the steps for calculating this key statistical value and discuss how to round the result to one decimal place for reporting. Mastering this skill will enhance your ability to interpret data and draw meaningful conclusions.

Understanding Variance and Its Importance

In statistics, assessing how much individual values differ from a central measure provides crucial insight into the nature of a dataset. This concept plays a significant role in determining whether numbers in a group are tightly clustered or scattered. A deeper understanding of this measure is essential for interpreting not only raw data but also for making informed predictions and decisions based on statistical analysis.

Why It Matters in Data Analysis

By calculating this measure, you can identify patterns that highlight trends or inconsistencies. High values indicate a larger spread, suggesting a greater level of unpredictability, while lower values point to more consistent outcomes. In various applications, such as quality control or financial analysis, recognizing these variations can help detect underlying issues and improve decision-making processes.

Practical Applications in Different Fields

This concept is widely used across industries. For instance, in finance, it helps assess the risk of investments, while in manufacturing, it measures product quality. Understanding how these fluctuations affect outcomes is essential for managing uncertainty and optimizing performance.

| Context | Application |
|---|---|
| Finance | Assessing market risk and volatility |
| Quality Control | Evaluating product consistency and defect rates |
| Scientific Research | Understanding measurement reliability and experimental accuracy |

What Is Variance in Statistics

In statistical analysis, understanding how widely individual values spread around a central value is key to interpreting any dataset. This measure quantifies the degree of dispersion, providing a numerical representation of how much values deviate from their mean. The higher this measure, the more spread out the values are; conversely, a lower value suggests a tighter clustering of numbers.

How It Is Calculated

To determine this measure, first calculate the mean of the values in the set. Then subtract the mean from each individual value, square the result, and average these squared differences. The result is the average squared deviation from the mean: the larger it is, the farther the values tend to sit from the mean, giving a clearer picture of the dataset's distribution.
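As an illustration, here is the calculation on a small made-up dataset chosen so the arithmetic stays simple (it is not data from any study):

```latex
\begin{aligned}
x &= \{2, 4, 4, 4, 5, 5, 7, 9\}, \qquad N = 8 \\
\mu &= \frac{2 + 4 + 4 + 4 + 5 + 5 + 7 + 9}{8} = \frac{40}{8} = 5 \\
(x_i - \mu)^2 &: \; 9,\ 1,\ 1,\ 1,\ 0,\ 0,\ 4,\ 16 \\
\sigma^2 &= \frac{9 + 1 + 1 + 1 + 0 + 0 + 4 + 16}{8} = \frac{32}{8} = 4
\end{aligned}
```

Dividing the same sum of 32 by n − 1 = 7 instead gives 32/7 ≈ 4.57, the sample variance discussed later in this article.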

Significance in Data Analysis

This measure is crucial for making data-driven decisions. It helps to understand the reliability of an estimate, identify trends, and measure the risk or uncertainty associated with a set of values. In fields ranging from finance to engineering, understanding this statistical property is essential for effective analysis and prediction.

Why Variance Matters in Data Analysis

In any form of statistical evaluation, understanding the spread of values within a set is essential for interpreting the results accurately. This measure reveals the level of consistency or unpredictability in a dataset, offering key insights into its reliability and potential risks. Whether analyzing financial performance, scientific results, or operational efficiency, grasping the significance of this metric helps make informed decisions.

Here are a few reasons why understanding this measure is critical in data analysis:

- Assessing Consistency: A low measure indicates uniformity, while a high value suggests variability, which can signal problems or highlight areas for improvement.

- Identifying Trends: By analyzing fluctuations, you can detect patterns and correlations that may not be immediately obvious, aiding in forecasting and strategic planning.

- Risk Management: In fields like finance or manufacturing, understanding the degree of variation helps manage risks, assess volatility, and set realistic expectations.

- Data Reliability: This measure helps determine the accuracy of data collection methods and the consistency of measurement processes.

Without understanding the spread of values, it is difficult to draw meaningful conclusions or make reliable predictions. By incorporating this measure into your analysis, you gain a fuller understanding of underlying trends and potential uncertainties, allowing for more robust decision-making.

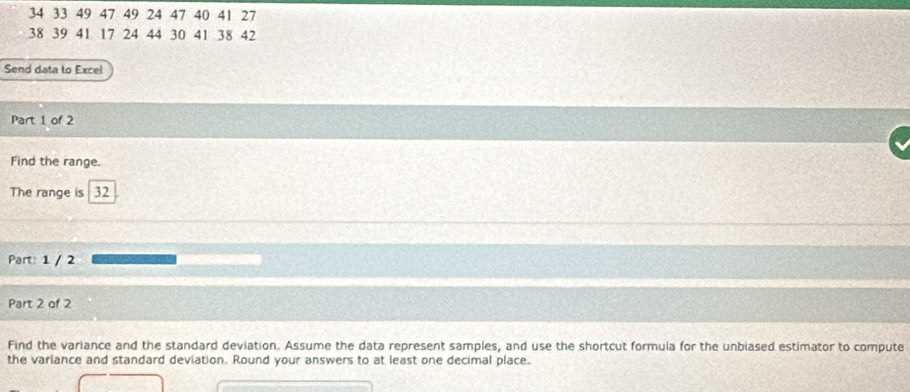

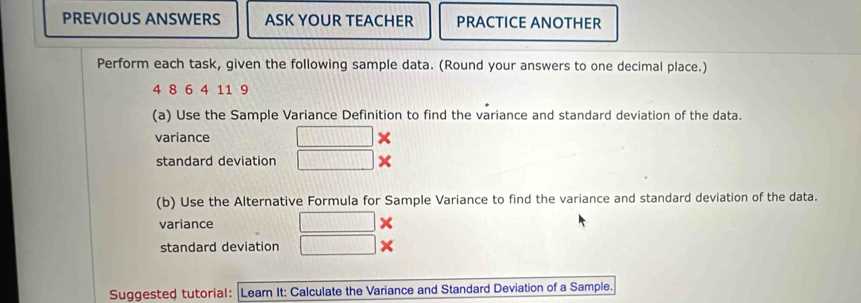

How to Calculate Variance Step by Step

To accurately assess the level of dispersion in a set of numbers, you must follow a specific process. This involves comparing each individual value to the average and quantifying the differences. By calculating this measure, you gain a deeper understanding of how values deviate from the mean, which is crucial for data analysis.

Step 1: Calculate the Mean

The first step is to determine the average of the values. Add all the numbers together and divide the sum by the total number of elements. This will give you the central value around which the other numbers are distributed.

Step 2: Subtract the Mean and Square the Differences

Next, subtract the mean from each individual value to find its deviation. Then square each deviation. Squaring makes every term non-negative, so positive and negative deviations cannot cancel each other out, and it gives proportionally more weight to large deviations than to small ones.

Step 3: Calculate the Average of the Squared Differences

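Now add up the squared differences and divide the sum by the number of values. The result is the average squared deviation from the mean, which reflects how widely the values are spread around the average. This divide-by-n version is the population variance; if your data are a sample drawn from a larger population, divide by n − 1 instead, as discussed in the section on sample and population variance.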

By following these steps, you will have calculated the dispersion of values, providing essential insight into how consistent or variable the set is. This process is vital for making reliable predictions and decisions based on statistical analysis.
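The three steps translate almost line for line into code. The sketch below is a minimal Python version, assuming the values fit in a plain list and that you want the divide-by-n (population) form described in Step 3; the function name `population_variance` is our own illustrative choice, not a library API.

```python
def population_variance(values: list[float]) -> float:
    """Follow Steps 1-3: mean, squared deviations, average of the squares."""
    if not values:
        raise ValueError("need at least one value")
    n = len(values)
    # Step 1: the mean is the sum divided by the number of values.
    mean = sum(values) / n
    # Step 2: subtract the mean from each value and square the difference.
    squared_diffs = [(x - mean) ** 2 for x in values]
    # Step 3: average the squared differences (divide by n).
    return sum(squared_diffs) / n


if __name__ == "__main__":
    data = [2, 4, 4, 4, 5, 5, 7, 9]
    print(population_variance(data))  # 4.0
```

A list comprehension keeps the squared differences visible for inspection; for very large datasets a generator expression would avoid storing them.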

Understanding Data Spread and Variance

When analyzing any group of values, it’s important to understand how they are distributed in relation to the average. The spread of numbers can indicate whether the values are tightly grouped around the mean or if there is significant variation across the dataset. This understanding helps provide insights into the stability, consistency, or unpredictability of the values.

Measuring this spread is crucial for making informed decisions in various fields, from business and finance to science and engineering. By examining how far individual numbers deviate from the average, you can assess the overall variability, which in turn affects predictions, risk management, and optimization strategies.

One way to quantify this spread is by calculating a statistical measure that highlights how much each value differs from the central value. A higher value indicates greater variation, suggesting that the numbers are more dispersed, while a smaller result implies that the numbers are closer to the mean and more predictable.

Ultimately, understanding the distribution of values allows you to assess the reliability of the dataset, identify potential outliers, and gain a deeper understanding of underlying trends or patterns. This measure plays a key role in interpreting and drawing conclusions from statistical analyses.

Formula for Variance Calculation

To calculate the dispersion of values in a set, a specific mathematical formula is used. This formula helps quantify how much individual elements deviate from the mean, providing a numerical value that represents the spread of the numbers. By following this structured approach, you can calculate the degree of variability in any dataset.

The general steps to calculate this measure can be broken down as follows:

- Step 1: Calculate the mean (average) of all values in the set.

- Step 2: Subtract the mean from each value to determine the difference (deviation) from the average.

- Step 3: Square each of the differences to eliminate negative values and emphasize larger deviations.

- Step 4: Sum the squared differences and divide by the number of values (or by one less than the number of values for a sample, as in the formula below), which gives the final result.

For a sample of values, the formula can be written as:

Sample Variance:

s² = Σ(xᵢ − x̄)² / (n − 1)

Where:

- xᵢ represents each individual value in the sample.

- x̄ is the mean of the sample values.

- n is the number of values in the sample.

This formula is essential for understanding how much variation exists in the dataset and provides a deeper insight into its overall structure. Whether analyzing financial data, scientific results, or any other type of numerical information, this calculation is a fundamental part of statistical analysis.
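A direct Python translation of the sample formula, checked against the standard library's `statistics.variance` (which also divides by n − 1), might look like this; `sample_variance` is a hypothetical helper name used only for illustration:

```python
import math
import statistics


def sample_variance(values: list[float]) -> float:
    """s² = Σ(xᵢ − x̄)² / (n − 1)."""
    n = len(values)
    if n < 2:
        raise ValueError("sample variance needs at least two values")
    x_bar = sum(values) / n  # sample mean
    sum_sq = sum((x - x_bar) ** 2 for x in values)  # sum of squared deviations
    return sum_sq / (n - 1)  # Bessel's correction: divide by n − 1


if __name__ == "__main__":
    data = [2, 4, 4, 4, 5, 5, 7, 9]
    s2 = sample_variance(data)
    # The standard library's statistics.variance uses the same n − 1 divisor.
    assert math.isclose(s2, statistics.variance(data))
    print(round(s2, 3))  # 4.571
```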

What Does a Low Variance Indicate

A low value for this statistical measure suggests that the numbers in a set are closely grouped around the mean. In other words, individual values show little deviation from the average, indicating a high level of consistency and predictability. When the spread of values is small, the dataset is considered more stable, with less fluctuation between individual numbers.

This characteristic is particularly important in various fields, such as quality control and finance, where stability and uniformity are desired. For example, in manufacturing, a low value would suggest that the production process is reliable, with products consistently meeting the expected specifications. Similarly, in investment analysis, low variation in returns may signal a stable, low-risk asset.

However, a low measure of spread is not automatically good news. It is also worth checking that the data are not simply lacking in diversity, for example because they were collected under too narrow a range of conditions, since that could lead to an incomplete understanding of the underlying trends.

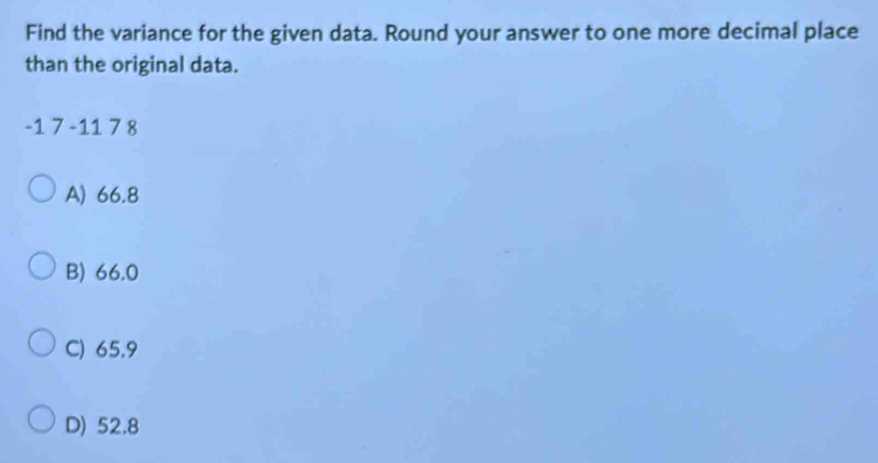

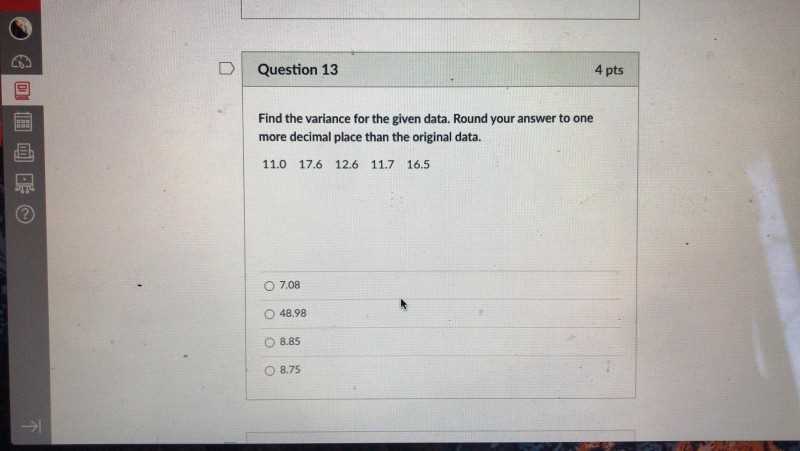

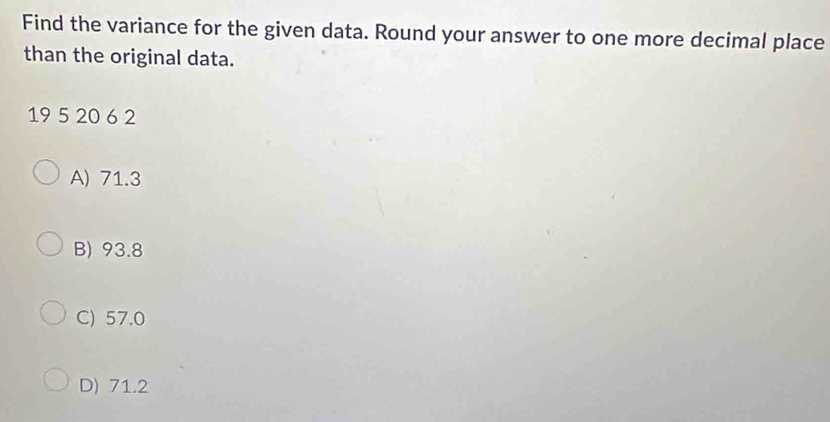

How to Round Variance to One Decimal Place

When calculating this measure, it’s often necessary to present the result in a simplified form, especially for reporting or practical use. Rounding helps provide a more readable and manageable figure, making it easier to interpret and compare across different datasets. This step is particularly useful when the precision of the calculation exceeds what is necessary for decision-making.

Step-by-Step Process

To properly round this measure to one decimal place, follow these simple steps:

- Identify the tenths place: This is the first digit after the decimal point. In a number like 12.345, the tenths place is 3.

- Check the next digit: If the digit in the hundredths place (second digit after the decimal point) is 5 or greater, round up the tenths place. If it’s less than 5, keep the tenths place unchanged.

- Make the adjustment: After rounding, remove any digits that follow the tenths place.

Example of Rounding

Consider the result of a calculation: 8.763. To round this value to one decimal place:

| Original Value | Step | Rounded Value |
|---|---|---|
| 8.763 | Check the hundredths digit (6). Since it is 5 or greater, round the tenths digit up from 7 to 8. | 8.8 |

After rounding, the result is 8.8, which simplifies the data while maintaining enough precision for most practical applications.
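In code, one pitfall is that Python's built-in `round()` does not follow the rule above for exact ties: it rounds halfway cases to the even digit, and binary floating point can shift values that look like ties. A minimal sketch using the standard `decimal` module's `ROUND_HALF_UP` mode, assuming the input is a non-negative float such as a variance, reproduces the hand rule; `round_one_decimal` is an illustrative name.

```python
from decimal import Decimal, ROUND_HALF_UP


def round_one_decimal(value: float) -> float:
    """Round to one decimal place, rounding up when the hundredths digit is 5 or more."""
    # Convert via str() so the Decimal holds the digits as written (e.g. 8.763),
    # not the float's exact binary approximation.
    exact = Decimal(str(value))
    return float(exact.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP))


if __name__ == "__main__":
    print(round_one_decimal(8.763))  # 8.8
    # Built-in round() sends the exact tie 0.25 to the even digit instead.
    print(round(0.25, 1), round_one_decimal(0.25))  # 0.2 0.3
```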

Difference Between Variance and Standard Deviation

Both of these measures provide insight into the spread or dispersion of values in a dataset, but they represent this information in different ways. While they are closely related, understanding the distinction between them is crucial for proper interpretation in statistical analysis. The main difference lies in how the results are expressed and their mathematical relationship.

Conceptual Differences

One key difference lies in the units. Both metrics describe how far values deviate from the mean, but variance is built from squared deviations, so it is expressed in squared units (for example, cm² for lengths measured in cm). The standard deviation is the square root of the variance, which brings it back to the original unit of measurement. This makes the standard deviation more intuitive and easier to interpret in many practical situations.

Mathematical Relationship

Mathematically, the standard deviation is simply the square root of the variance. For example, a variance of 16 corresponds to a standard deviation of 4. Because variance is in squared units, it grows with the square of the spread: doubling every deviation doubles the standard deviation but quadruples the variance. The standard deviation's more understandable scale makes it the more common choice for reporting and presenting results.

Ultimately, both measurements serve important roles in statistics: variance is the raw, squared-unit measure of spread that many formulas work with directly, while standard deviation provides a more practical and interpretable figure for analysis.
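Python's standard `statistics` module exposes both measures (`pvariance` and `pstdev` treat the data as a full population), which makes the square-root relationship and the scaling behavior easy to check on a small illustrative dataset:

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]
scaled = [2 * x for x in data]  # every deviation from the mean doubles

for values in (data, scaled):
    var = float(statistics.pvariance(values))  # squared units
    sd = statistics.pstdev(values)             # original units
    # The standard deviation is the square root of the variance.
    assert math.isclose(sd, math.sqrt(var))
    print(var, sd)
# 4.0 2.0
# 16.0 4.0
```

Doubling the data doubles the standard deviation (2.0 to 4.0) but multiplies the variance by four (4.0 to 16.0).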

Common Mistakes When Calculating Variance

Accurately calculating this measure can be challenging, and there are several common errors that people make during the process. These mistakes can lead to incorrect conclusions, especially when analyzing large datasets. Understanding these pitfalls can help improve accuracy and ensure that calculations reflect true data trends.

Frequent Errors in Calculation

One of the most common mistakes is skipping the subtraction step: squaring the raw data values themselves rather than their deviations from the mean, which skews the results significantly. Another error is forgetting to divide the sum of squared differences at all, or using the wrong denominator. Dividing a sample's sum of squares by n instead of n − 1 systematically underestimates the population variance, so it is crucial to use the divisor that matches your data.

Misinterpreting the Final Value

Another common mistake occurs after the calculation: misinterpreting the final value. Because the deviations are squared, the result is in squared units. Not recognizing this can lead to confusion when presenting results. The variance cannot be compared directly with the original data values; taking its square root (the standard deviation) returns the spread to the original units.

Example of Common Mistakes

Let’s consider an example calculation to highlight some common errors:

| Step | Correct Method | Common Mistake |
|---|---|---|
| Step 1: Subtract the mean | Subtract the mean from each value in the set | Square the raw values instead of their deviations from the mean |
| Step 2: Square the differences | Square each difference | Forget to square, so positive and negative deviations cancel to zero |
| Step 3: Divide | Divide by n for a population or n − 1 for a sample | Use the wrong divisor, such as n for a sample |

By avoiding these common mistakes, you can ensure that your results are more reliable and reflect the true spread of values in your dataset.
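The sketch below runs the correct method alongside the first two mistakes from the table on a small illustrative dataset, so the effect of each error is visible in the numbers:

```python
data = [2, 4, 4, 4, 5, 5, 7, 9]
n = len(data)
mean = sum(data) / n  # 5.0

# Mistake: forgetting to square. Deviations from the mean always sum to zero
# (up to floating-point rounding), so this "spread" is 0 however varied the data.
unsquared = sum(x - mean for x in data) / n

# Mistake: squaring the raw values instead of their deviations from the mean.
raw_squares = sum(x ** 2 for x in data) / n

# Correct: square the deviations, then choose the divisor for the context.
sum_sq = sum((x - mean) ** 2 for x in data)
population = sum_sq / n    # data are the whole population
sample = sum_sq / (n - 1)  # data are a sample from a larger population

print(unsquared, raw_squares, population, round(sample, 3))
# 0.0 29.0 4.0 4.571
```

The unsquared deviations cancel to zero, squaring raw values gives 29.0 instead of 4.0, and the choice of divisor separates 4.0 from about 4.57.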

Using Variance to Interpret Data Trends

Understanding how values in a dataset are spread around the central point is essential for interpreting underlying patterns and trends. By measuring the degree of fluctuation, one can assess whether values are consistently clustered together or if they show significant variability. This insight can be useful in various fields such as business, economics, and research, providing a clearer picture of consistency or unpredictability.

A high degree of variability in a dataset suggests that values are more spread out, which could indicate a diverse set of outcomes or greater uncertainty in predictions. In contrast, a low degree of fluctuation indicates that most values are tightly clustered, implying stability and predictability. These trends help to assess risk, forecast future occurrences, and make informed decisions based on observed data patterns.

For example, in financial markets, high variability could signal instability or potential for larger price movements, while low variability might indicate steady growth or limited fluctuations. In quality control, consistently low variability means that a product’s performance is reliable, while high variability could suggest manufacturing issues or inconsistent quality.

By interpreting the spread of values in this way, one gains a deeper understanding of how consistent or unpredictable a particular set of measurements is. This perspective can significantly influence decision-making processes and future strategies. Understanding the trend in terms of data dispersion can reveal important patterns that raw numbers alone may not convey.

How Variance Affects Statistical Models

Understanding the dispersion of values within a dataset is crucial for building accurate statistical models. The degree to which data points vary from the central value has a significant impact on the behavior and predictions of these models. A higher level of fluctuation can lead to less predictable outcomes, while a smaller spread might indicate more reliable and consistent patterns.

In statistical modeling, data variability can influence both the complexity and accuracy of the model. When variability is high, models may struggle to capture the underlying relationships between variables, often requiring more sophisticated techniques to account for the noise in the data. On the other hand, low variability typically makes it easier to identify trends, leading to simpler models with fewer potential errors.

Impact on Predictive Accuracy

Predictive models, such as regression or machine learning algorithms, rely heavily on the assumption that data patterns can be captured and generalized. When there is significant variability in the dataset, these models may overfit or underfit, failing to generalize well to new or unseen data. In cases of overfitting, the model becomes too tailored to the existing data, capturing even the random noise, which leads to poor performance in real-world applications.

Implications for Risk Assessment

In fields like finance and risk management, understanding how values deviate from the mean is vital for making decisions under uncertainty. A model built on data with high variability might predict a broader range of possible outcomes, leading to greater risk exposure. Conversely, a low-variance dataset may offer more stable predictions, reducing risk but potentially overlooking extreme but plausible scenarios.

Therefore, the role of data dispersion in statistical models cannot be overstated. It directly influences how well a model can fit data, predict future trends, and assess risk. Inaccurate assumptions about variability can distort conclusions, highlighting the need for careful consideration of how spread influences model performance.

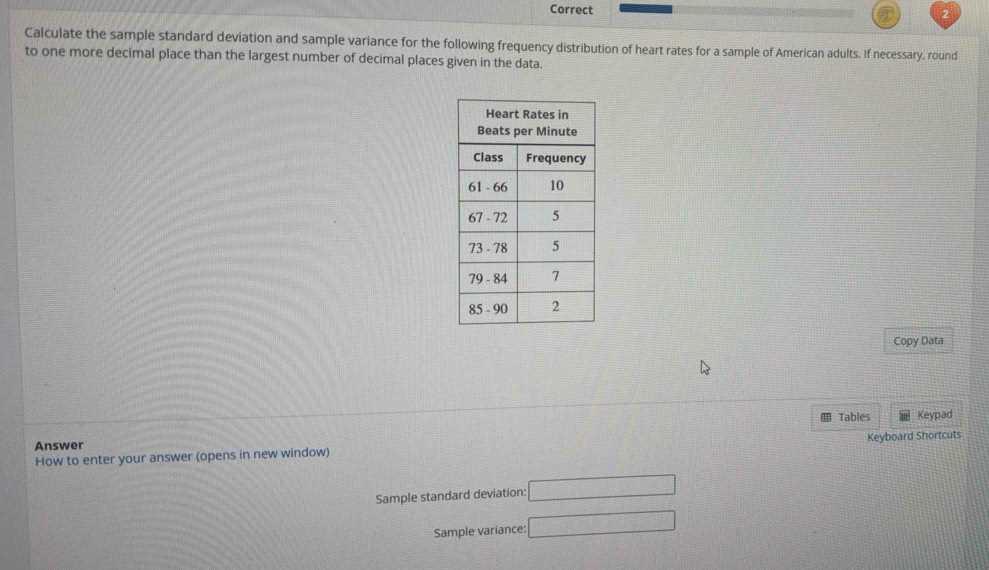

Calculating Sample Variance vs Population Variance

When measuring the spread or dispersion of a dataset, it is important to distinguish between calculating the spread for a sample versus an entire population. While both approaches aim to capture the degree of variation in the data, the formulas used to calculate each are different to account for the scope of the dataset being analyzed.

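For a sample, we use a formula that adjusts for the fact that we are working with only a subset of the entire population. The adjustment is to divide by the sample size minus one, known as "Bessel's correction." Because the sample mean is computed from the same data, the squared deviations measured from it are, on average, slightly smaller than those measured from the true population mean; dividing by n − 1 rather than n corrects for this and makes the sample variance an unbiased estimate of the population variance. The correction matters most for small samples.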

In contrast, when calculating for an entire population, there is no need for this adjustment. Since we are working with complete information, we divide by the total number of data points in the population. This provides an accurate measure of variability without the need for bias correction.

Formula Differences

To illustrate the difference in calculation, here are the key formulas:

| Type of Variance | Formula |
|---|---|
| Sample Variance | s² = Σ(xᵢ − x̄)² / (n − 1) |
| Population Variance | σ² = Σ(xᵢ − μ)² / N |

Where:

- s² = sample variance

- σ² = population variance

- xᵢ = individual data points

- x̄ = sample mean

- μ = population mean

- n = number of sample data points

- N = total number of population data points
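In Python's standard library, `statistics.pvariance` implements the population formula and `statistics.variance` the sample formula. On a small illustrative dataset, the two results differ by exactly the factor n/(n − 1):

```python
import math
import statistics

data = [2, 4, 4, 4, 5, 5, 7, 9]
n = len(data)

pop_var = statistics.pvariance(data)  # σ²: divides by N
samp_var = statistics.variance(data)  # s²: divides by n − 1 (Bessel's correction)

# The two differ only by the factor n / (n − 1), which approaches 1 as n grows.
assert math.isclose(samp_var, pop_var * n / (n - 1))
print(float(pop_var), round(samp_var, 3))  # 4.0 4.571
```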

When to Use Each Calculation

It is crucial to choose the correct formula depending on whether you’re working with a sample or a population. For example, in many research scenarios, you might only have a sample of the entire population, in which case you should calculate the sample dispersion to make unbiased estimates about the larger group. On the other hand, if you have access to the entire population, then you can directly calculate the population spread.

In summary, the key difference lies in the correction factor used when working with a sample. This adjustment makes the sample variance an unbiased estimate of the population variance when only a subset of the population is available for analysis.

Real-World Applications of Variance

Understanding how spread or variability manifests in different types of information is crucial in many fields. Whether you’re assessing the consistency of a product, the risk of an investment, or the effectiveness of a teaching method, quantifying variation helps make informed decisions. This concept is applied widely in real-world scenarios to drive improvements and manage uncertainties.

Finance and Investment

In financial markets, understanding variability is essential for assessing risk. By analyzing how much the returns on a particular asset deviate from its average, investors can gauge the stability of an investment. A high degree of variation may indicate a volatile asset, while low variability often suggests a more stable, predictable investment. This helps investors to diversify their portfolios and choose assets that align with their risk tolerance.

- Portfolio management: Helping investors balance risk and return by understanding how individual investments behave in comparison to one another.

- Risk assessment: Determining the level of risk associated with different financial instruments based on past performance variability.

Quality Control in Manufacturing

In production environments, assessing how much product characteristics deviate from the desired specifications is critical for maintaining quality standards. High variability in measurements, such as product weight or size, can lead to defects and customer dissatisfaction. By understanding how spread out measurements are, manufacturers can fine-tune processes to reduce errors and improve consistency.

- Process optimization: Identifying sources of variability to make adjustments in production processes, leading to more consistent outcomes.

- Product quality assessment: Ensuring that products meet required specifications consistently and that deviations are kept within acceptable limits.

Healthcare and Medicine

Healthcare professionals use variability measurements to assess the effectiveness of treatments and medications. For instance, when analyzing how a group of patients responds to a specific treatment, understanding the variation in outcomes can help doctors choose the best course of action. Similarly, monitoring the variability in vital signs like blood pressure can alert healthcare providers to potential risks.

- Clinical trials: Analyzing patient responses to treatment and evaluating the consistency of outcomes to determine the effectiveness of new drugs or therapies.

- Patient monitoring: Detecting abnormal variations in vital signs to predict and prevent health complications.

Across these fields and beyond, understanding how much values deviate from their average helps professionals make more informed, data-driven decisions. Whether in finance, healthcare, or manufacturing, measuring variability plays a key role in optimizing processes, improving outcomes, and managing risks.

How to Use Variance in Quality Control

In quality control, measuring consistency and identifying irregularities are essential for maintaining product standards. The ability to assess how much a set of measurements deviates from its expected value is a crucial tool in ensuring that manufacturing processes remain efficient and that products meet customer expectations. By understanding variability, companies can proactively address issues before they lead to defects or customer dissatisfaction.

Identifying Process Stability

To ensure product quality, it’s essential to determine whether a production process is stable. High levels of variation in product specifications, such as size, weight, or performance, often indicate that the process is not controlled and may need adjustments. Conversely, low variation suggests that the process is running smoothly and products are being produced consistently.

- Process Monitoring: Regularly analyzing variation helps track production consistency over time.

- Threshold Setting: Setting acceptable levels of variability ensures that any deviation outside of this range can be flagged for further investigation.
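A threshold check like the one described above can be sketched in a few lines. Everything specific here is an assumption for illustration: the variance limit, the batch IDs, and the gram weights are made up, and the helper name `flag_batches` is hypothetical. It uses the sample variance because each batch is treated as a sample of the process output.

```python
import statistics

# Hypothetical limit on sample variance (g²). A real limit would come from the
# product specification or from historical data on a stable process.
MAX_VARIANCE_G2 = 0.25


def flag_batches(batches: dict[str, list[float]], limit: float = MAX_VARIANCE_G2) -> list[str]:
    """Return the IDs of batches whose sample variance exceeds the limit."""
    flagged = []
    for batch_id, weights in batches.items():
        if len(weights) < 2:
            continue  # sample variance needs at least two measurements
        if statistics.variance(weights) > limit:
            flagged.append(batch_id)
    return flagged


if __name__ == "__main__":
    # Illustrative weights in grams, not real production data.
    batches = {
        "A": [100.1, 99.9, 100.0, 100.2, 99.8],
        "B": [100.9, 99.1, 100.6, 98.8, 101.2],
    }
    print(flag_batches(batches))  # ['B']
```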

Detecting Defects Early

By tracking how much individual units deviate from the target values, manufacturers can identify potential defects early in the production line. This early detection helps reduce waste, lower costs, and improve overall quality by addressing issues before they escalate.

- Preventive Measures: Identifying patterns of variation allows quality control teams to take corrective actions before significant defects occur.

- Inspection Frequency: Using variance metrics helps in determining when to conduct inspections or audits to identify defects early in the production cycle.

Improving Operational Efficiency

Reducing variability in production can lead to more efficient processes. By consistently measuring variation, manufacturers can pinpoint areas where the process can be improved. This can lead to fewer reworks, lower costs, and faster production times, ultimately increasing profitability.

- Process Optimization: Continuous analysis of variability enables the identification of bottlenecks and inefficiencies in production.

- Waste Reduction: Lowering variability helps reduce the amount of scrap or reworked items in production, leading to significant cost savings.

Incorporating variability analysis into quality control not only helps maintain high standards but also fosters continuous improvement. By using these insights, companies can enhance their processes, deliver consistent products, and improve customer satisfaction.

Impact of Outliers on Variance

Outliers are extreme values that deviate significantly from the majority of other observations in a set. These values can distort statistical analyses and lead to misleading interpretations, especially when measuring spread or dispersion. In many cases, outliers can disproportionately increase variability measures, affecting conclusions drawn from the data.

How Outliers Influence Statistical Spread

When outliers are present, they can inflate variability metrics, creating an impression of greater diversity in the data than actually exists. This effect occurs because outliers are further from the mean, resulting in larger squared differences when calculating measures of dispersion.

- Greater Influence on Mean: Outliers can pull the mean away from the center of the distribution, leading to a distorted measurement of average behavior.

- Exaggerated Spread: Because each deviation is squared, a single value far from the majority of data points adds a very large term to the sum, sharply increasing the computed variance.
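To see the size of the effect, the sketch below computes the mean and population variance of a small illustrative dataset with and without one extreme value:

```python
import statistics

# Illustrative values only: one dataset without, then with, a single extreme value.
base = [10, 11, 9, 10, 12, 10, 9, 11]
with_outlier = base + [40]

for label, values in (("without outlier", base), ("with outlier", with_outlier)):
    mean = statistics.mean(values)
    variance = statistics.pvariance(values)  # divides by N (population form)
    print(f"{label}: mean={mean:.2f}, variance={variance:.2f}")
```

With these made-up numbers, the single value of 40 shifts the mean from 10.25 to about 13.56 and raises the variance from about 0.94 to about 88.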

Addressing the Effect of Outliers

While outliers can provide valuable insight into rare events or anomalies, they often skew the results of analyses. In many cases, it’s important to identify and assess outliers to determine whether they should be excluded or addressed separately to obtain more accurate results.

- Identifying Outliers: Statistical tests or visualizations such as box plots can help pinpoint outliers and assess their influence on overall results.

- Decision Making: If outliers are determined to be data errors, they can be removed or corrected. If they are valid, alternative approaches such as robust methods can be employed to minimize their impact on conclusions.

Overall, while outliers are an important part of any dataset, their influence on variability measures should be carefully considered to avoid misleading interpretations. Recognizing when outliers are unduly affecting analysis is key to ensuring more reliable and meaningful results.

How to Interpret Variance Results

Understanding how variability measures affect interpretation is crucial when analyzing any dataset. While it’s helpful to calculate these metrics, correctly interpreting their meaning is key to making informed decisions. A higher or lower variability can provide insights into the stability and consistency of the observed values, helping to identify patterns or anomalies.

Low Variability Interpretation

When variability is low, it suggests that the values are tightly grouped around the mean. This often indicates consistency and reliability in the observed values, with little fluctuation over time.

- Consistent Results: A small spread implies that data points do not deviate much from the mean, indicating predictability and stability.

- Reduced Risk: With low variability, there is less uncertainty in predicting future observations or outcomes, which is often a sign of well-controlled processes or uniform behavior.

High Variability Interpretation

A high degree of variability means the values are spread out widely from the mean, indicating more fluctuation or inconsistency within the dataset. This can be indicative of diverse factors affecting the outcome.

- Increased Uncertainty: A large spread suggests more uncertainty and makes future predictions less reliable. Higher variability often points to complex factors at play or unstable conditions.

- Presence of Outliers: Significant deviations in the data may signal the presence of outliers, which should be examined further to determine their impact on the overall analysis.

Contextualizing Variability

It’s essential to consider the context in which the data was collected when interpreting variability results. In some cases, high variability may be acceptable or even expected, such as in certain types of financial markets or complex systems, while in other scenarios, it might be a cause for concern.

- Expected Fluctuations: In certain industries, high variability is typical, like in stock market returns or product performance testing, where fluctuations are inherent.

- Unwanted Inconsistencies: In manufacturing or quality control, high variability can signal issues with processes, leading to defects or failure to meet standards.

In summary, interpreting variability requires careful consideration of the data context and the implications of the observed spread. Low variability often signals stability, while high variability may indicate uncertainty or complexity in the underlying factors.

Practical Examples of Variance Calculation

Understanding how to apply statistical measures in real-world situations can provide deeper insights into various processes and help in decision-making. Variability measurement plays a crucial role in identifying patterns, risks, and trends. Below are some examples of how these calculations are applied across different fields.

Example 1: Analyzing Exam Scores

Imagine a class of students where you want to measure how consistently students perform on an exam. By calculating variability, you can determine whether most students scored similarly or if there are large differences in performance.

- Step 1: Gather the scores of all students.

- Step 2: Find the mean score.

- Step 3: Subtract the mean from each score and square the result.

- Step 4: Calculate the average of these squared differences to obtain the spread of scores.

This method helps to assess whether most students understood the material similarly or if there were significant performance gaps that might require attention.
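Applied to two hypothetical classes (the scores are invented for illustration) that share the same mean of 74, the steps show how variance separates a consistent class from one with wide performance gaps. Because every student in each class is included, the sketch divides by n; `score_variance` is an illustrative helper name.

```python
def score_variance(scores: list[float]) -> float:
    """Steps 2-4: mean, squared deviations, average (whole class, so divide by n)."""
    mean = sum(scores) / len(scores)             # Step 2: mean score
    squared = [(s - mean) ** 2 for s in scores]  # Step 3: squared deviations
    return sum(squared) / len(scores)            # Step 4: average of the squares


# Step 1: hypothetical scores for two classes that share a mean of 74.
class_a = [72, 75, 74, 76, 73, 75, 74, 73]
class_b = [55, 95, 60, 90, 65, 85, 70, 72]

for name, scores in (("Class A", class_a), ("Class B", class_b)):
    print(name, round(score_variance(scores), 1))
# Class A 1.5
# Class B 184.5
```

Identical means hide very different spreads: Class A's scores cluster within two points of 74, while Class B's variance is more than a hundred times larger.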

Example 2: Evaluating Manufacturing Process Stability

In a factory, you may want to evaluate the consistency of products being manufactured, such as measuring the weight of items produced. A high degree of variation may indicate problems with equipment or production methods.

- Step 1: Record the weights of products over a specific period.

- Step 2: Compute the mean weight of all items.

- Step 3: Calculate the differences between each product’s weight and the mean, then square the differences.

- Step 4: Find the average of these squared differences to quantify how much the weights deviate from the mean.

This calculation can highlight process instability and areas where improvements are necessary to ensure products meet quality standards.

Example 3: Stock Price Volatility

In finance, understanding the fluctuation in stock prices is essential for evaluating risk. A higher degree of variation indicates more volatility, which is crucial for investors seeking to understand potential returns and risks.

- Step 1: Collect the daily closing prices of a particular stock over a set period.

- Step 2: Calculate the mean of these prices.

- Step 3: Find the difference between each closing price and the mean, and square each difference.

- Step 4: Compute the average of these squared differences to determine the degree of fluctuation.

By analyzing stock price variability, investors can better assess the risk of investment and make informed decisions based on price stability or volatility.

These practical examples demonstrate how variability measurements can be useful in a variety of fields, from education to manufacturing and finance. Understanding and calculating this metric can aid in improving processes, evaluating risks, and making data-driven decisions.