In the world of IT certification, mastering the core concepts and practical skills is essential for achieving success. This section covers the fundamental areas required to understand system management, user handling, and networking. The goal is to build a strong foundation that will enable you to tackle real-world challenges with confidence.

Through focused study, you’ll be introduced to important tools and techniques that are crucial for everyday system administration. These concepts play a key role in managing processes, permissions, and system performance. With an emphasis on practical application, the knowledge gained will prove valuable for both exam preparation and professional development.

Mastering the basics of system commands, security, and file structures will set the stage for more advanced topics. By practicing these key areas, you’ll not only improve your theoretical knowledge but also gain hands-on experience that is essential for troubleshooting and problem-solving in various environments.

Mastering Key Concepts for Certification Success

For those preparing for a certification focused on system management and networking, understanding the critical areas related to system operations, user management, and security is crucial. This section covers essential topics that test your ability to effectively handle file systems, permissions, and processes, along with the application of network fundamentals. Success in this area requires both theoretical knowledge and practical experience to ensure you’re ready for both the assessment and real-world tasks.

Key Skills for System Administration

One of the primary areas tested is the ability to manage users and groups within a system. This includes knowing how to configure user permissions, file access, and ensuring proper directory structures. Proficiency in handling these tasks allows you to maintain a secure and well-organized system environment.

Understanding Networking and System Performance

In addition to user management, another critical area of focus is networking and system performance. Knowing how to configure network settings, troubleshoot connectivity issues, and monitor system health is fundamental. These skills will not only help you excel in assessments but will also be invaluable in day-to-day system administration.

Practical knowledge in managing these core functions will directly influence your success. While studying for the certification, focus on mastering commands, configurations, and troubleshooting techniques. Regular practice and hands-on experience are the best ways to ensure your readiness for both the test and real-world scenarios.

Key Concepts in System Administration

Understanding the foundational principles of system management is critical for anyone seeking to excel in IT certifications. This section delves into the core areas that form the basis of system operations, including file structures, user management, and system processes. Gaining proficiency in these topics is essential for ensuring smooth system functionality and efficient problem resolution.

System Structure and File Management

A deep understanding of the file structure is necessary for managing data and files efficiently. The file system hierarchy defines how data is organized and accessed. Familiarity with directories, file types, and permissions is key to performing basic tasks such as creating, modifying, and deleting files. Some key concepts include:

- Directory structure and navigation

- File permissions and access control

- File ownership and management

- Understanding symbolic and hard links

Managing Users and Groups

Proper user and group management ensures that only authorized individuals can access specific resources. This area focuses on creating, modifying, and deleting user accounts, as well as assigning appropriate permissions to protect system integrity. Key points to consider include:

- Creating and managing user accounts

- Group membership and role assignments

- Setting file and directory permissions for users

- Security considerations in user management

Mastering these concepts not only prepares you for certification assessments but also provides the necessary skills to manage systems effectively and securely in a professional environment.

Preparing for Chapter 7 Exam

Success in certification assessments requires a strategic approach to study and practice. The preparation process for this section focuses on mastering key concepts such as file management, user configurations, and system processes. Understanding the core elements and practicing them in real-world scenarios is essential to perform well and feel confident during the test.

In order to maximize your readiness, it’s important to focus on both theoretical knowledge and practical application. Regularly reviewing core topics and testing your understanding with hands-on exercises will reinforce your skills. The following table outlines the most important areas to focus on during your preparation:

| Topic | Key Focus Areas |
|---|---|
| File Systems | Structure, permissions, creating, modifying files |
| User Management | Creating accounts, managing groups, assigning roles |
| System Processes | Understanding process management, scheduling tasks |
| Security | Configuring permissions, protecting system integrity |
| Networking | Basic configuration, connectivity issues, system monitoring |

Consistent practice and reviewing key areas will significantly improve your chances of success. In addition to theoretical study, it’s beneficial to replicate real-world situations and troubleshoot potential issues to further solidify your knowledge.

Understanding File Systems

Mastering the organization and management of files is a key part of system administration. A file system dictates how data is stored, accessed, and managed within the system. Understanding the structure and operations of file systems is essential for performing routine tasks like file manipulation, data storage, and securing access. In this section, we will explore the most important aspects of file systems, from basic directory structures to advanced configurations.

File System Hierarchy

The file system hierarchy is the blueprint for how data is stored and organized. It consists of a series of directories and subdirectories, starting from the root directory. Navigating and managing this structure efficiently is crucial for maintaining a well-organized system.

- Root directory – The starting point of the file system, denoted by “/”

- Home directory – User-specific files are stored here, typically under “/home/username”

- System directories – Important system files are housed in directories like “/etc”, “/bin”, and “/lib”

- Temporary files – Stored in “/tmp”, used for short-term storage by applications

File Permissions and Access Control

File permissions are critical for maintaining the security and integrity of the system. Permissions dictate who can read, write, or execute files, ensuring that sensitive data is protected and that unauthorized users cannot modify system files.

- Read (r) – Permission to view the contents of a file

- Write (w) – Permission to modify the contents of a file; deleting or renaming a file depends on write permission for the directory that contains it

- Execute (x) – Permission to run a file as a program or script

- Owner and group – Assigning permissions to specific users or groups for better control

File System Types

There are various types of file systems, each with unique features and benefits. Some common types include:

- ext4 – The default file system on many Linux distributions, offering good performance and stability

- NTFS – Used primarily by Windows systems but also supported on other platforms for compatibility

- Btrfs – A newer file system designed to address scalability and advanced data management needs

- FAT32 – A simple, lightweight file system often used for external drives and portable media

Understanding these file systems and how they operate within the system is essential for efficient data management, troubleshooting, and security practices.

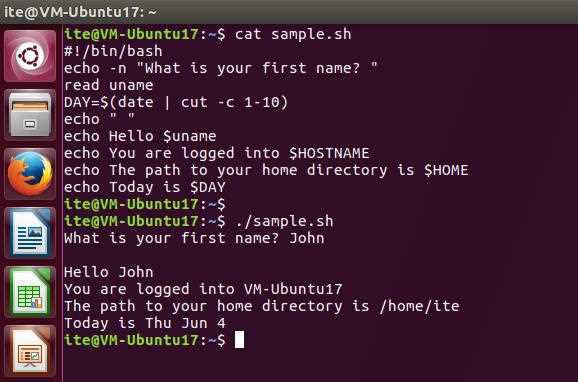

Important Commands for Chapter 7

Proficiency with key system commands is fundamental for managing tasks such as file manipulation, user management, and system monitoring. Mastering these essential commands will not only help you navigate the system effectively but also allow you to perform critical operations with efficiency and precision. This section highlights the most frequently used commands in system administration, covering everything from basic file handling to advanced process management.

Here are some of the most important commands to focus on for this section:

- ls – Lists the contents of a directory, including files and subdirectories.

- cd – Changes the current working directory, allowing for navigation through the system’s file structure.

- cp – Copies files or directories from one location to another, preserving the original.

- mv – Moves or renames files and directories within the system.

- rm – Removes files or directories. Caution is advised as this operation is permanent unless safeguards are used.

- chmod – Modifies file permissions, allowing administrators to control who can read, write, or execute files.

- chown – Changes the ownership of files or directories, typically used to assign the correct user and group permissions.

- ps – Displays a list of currently running processes, which is essential for monitoring system activity.

- top – Provides a real-time overview of system performance, displaying resource usage for processes.

- df – Shows disk space usage for file systems, helping to identify storage capacity and potential issues.

- du – Estimates file space usage within directories, which helps manage disk space effectively.

- grep – Searches for specific text patterns within files, useful for finding information quickly.

- tar – Bundles files and directories into a single archive; compression is optional and is enabled with flags such as -z (gzip), making it easier to manage large volumes of data.

Mastering these commands will significantly enhance your ability to manage system resources, troubleshoot issues, and perform necessary maintenance tasks. Practicing these regularly will ensure you are ready for both theoretical assessments and real-world scenarios.
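A short practice run can make several of these commands concrete. The sketch below is only an illustration: it works inside a throwaway directory created with `mktemp -d`, and the file names (`notes.txt`, `archive.txt`) are made up for the example.

```shell
# Work in a throwaway directory so nothing outside it is touched.
work=$(mktemp -d)
cd "$work" || exit 1

printf 'alpha\nbeta\ngamma\n' > notes.txt   # create a small sample file
cp notes.txt notes.bak                      # copy; the original stays in place
mv notes.bak archive.txt                    # rename the copy
ls -l                                       # list what is there now

# grep prints matching lines; -c prints only the number of matching lines.
matches=$(grep -c 'ta' notes.txt)
echo "lines containing 'ta': $matches"

rm archive.txt                              # permanent: there is no recycle bin
remaining=$(ls | wc -l | tr -d ' ')
echo "files left: $remaining"

cd / && rm -r "$work"                       # clean up the sandbox
```

Running destructive commands such as `rm` only inside a scratch directory like this is a reasonable habit while you are still learning their options.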

Managing Users and Groups

Effective user and group management is crucial for maintaining a secure and organized system. This involves creating, modifying, and deleting user accounts, as well as assigning appropriate permissions to ensure that only authorized individuals can access sensitive resources. Additionally, grouping users together allows for more efficient management of permissions and system resources. Mastering these tasks is essential for keeping the system running smoothly and securely.

The following tasks are key components of user and group management:

- Creating Users – New users can be added to the system with specific credentials, such as usernames and passwords, using commands like `useradd`.

- Modifying User Information – User details, such as passwords or login shells, can be updated using commands like `usermod`.

- Deleting Users – When a user no longer needs access to the system, their account can be removed with `userdel`.

- Managing Groups – Users can be organized into groups to simplify the process of assigning permissions. The `groupadd` command creates new groups, while `groupdel` removes them.

- Assigning Permissions – Permissions can be granted to users or groups for accessing specific files and directories, usually through the `chmod` and `chown` commands.

- Setting User Roles – By setting user roles and group memberships, it becomes easier to control access to system resources and manage users’ level of authority.

Properly configuring user accounts and group memberships helps improve both security and efficiency. Regular review of user access rights is essential for minimizing unauthorized access and ensuring that each user has only the necessary permissions for their tasks.
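The account commands described in this section change system files and need root privileges, so the sketch below only prints them through a small dry-run wrapper instead of executing them. The wrapper `run`, the account name `alice`, and the group name `developers` are all hypothetical and exist only for this illustration.

```shell
# Dry-run wrapper: print each command instead of executing it.
# Replace the echo with real execution only on a disposable test system, as root.
run() { echo "+ $*"; }

user=alice        # hypothetical account name
group=developers  # hypothetical group name

run groupadd "$group"                  # create the group
run useradd -m -s /bin/bash "$user"    # create the user with a home directory (-m) and login shell (-s)
run usermod -aG "$group" "$user"       # append (-a) the user to a supplementary group (-G)
run userdel -r "$user"                 # delete the user and remove the home directory (-r)
run groupdel "$group"                  # remove the group

# Read-only checks are safe to run for real: show the current user's IDs and groups.
id
```

Note that `usermod -G` without `-a` replaces the user's supplementary groups rather than adding to them, which is a common source of mistakes.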

File Permissions Overview

Managing file access is a fundamental part of system administration. Properly configured permissions ensure that only authorized users can access, modify, or execute specific files or directories. These permissions are essential for maintaining security and privacy, preventing unauthorized actions, and organizing system resources effectively. Understanding how permissions work and how to modify them is crucial for anyone managing a multi-user environment.

File permissions are typically divided into three categories: read, write, and execute. Each category controls a specific type of access and is granted to different users or groups based on their roles and responsibilities within the system.

- Read (r) – Allows the user to view the contents of a file or directory.

- Write (w) – Grants the user the ability to modify a file's contents. On a directory, it allows creating, renaming, and deleting the entries inside it.

- Execute (x) – Permits the user to run the file as a program or access a directory.

Permissions can be applied to three different entities:

- Owner – The user who owns the file or directory. Typically, this is the person who created it.

- Group – A set of users who are assigned specific permissions for a particular file or directory.

- Others – All users who do not belong to the owner or group category.

These permissions are usually displayed as a nine-character string, such as rwxr-xr--, made up of three groups of three characters that describe what the owner, the group, and others may do, in that order. Permissions are changed with chmod, while chown changes the owning user and group, allowing administrators to fine-tune access control and maintain a secure system environment.
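As a minimal sketch of how this looks in practice, the example below sets permissions on a scratch file in both octal and symbolic notation and reads the result back with `ls -l`. The file name `report.txt` is an assumption for illustration, and `chown` is left out because changing ownership normally requires root.

```shell
work=$(mktemp -d)
file="$work/report.txt"
: > "$file"                      # create an empty file

chmod 640 "$file"                # octal: owner rw- (6), group r-- (4), others --- (0)
ls -l "$file"
mode_octal=$(ls -l "$file" | cut -c1-10)      # file type character plus nine permission characters

chmod u+x,g+w,o+r "$file"        # symbolic: add x for owner, w for group, r for others
ls -l "$file"
mode_symbolic=$(ls -l "$file" | cut -c1-10)

echo "after chmod 640:           $mode_octal"
echo "after chmod u+x,g+w,o+r:   $mode_symbolic"

rm -r "$work"
```

Each octal digit is the sum of read (4), write (2), and execute (1), which is why 6 means read plus write and 7 means all three.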

Working with File Directories

Directories are fundamental for organizing and managing files within any system. They provide a structure that helps users and administrators store, access, and manipulate files efficiently. Understanding how to navigate and manage directories is a key skill for anyone working with a file system. From creating new directories to moving and removing them, mastering these operations is essential for system organization and maintaining a clean and efficient workspace.

Here are some important operations for working with directories:

- Creating Directories – New directories can be created using the `mkdir` command. This allows users to organize their files in a structured way.

- Listing Directory Contents – To view the contents of a directory, the `ls` command can be used. It displays files and subdirectories within the specified directory.

- Changing Directories – The `cd` command is used to change the current working directory, allowing users to navigate through the file system.

- Removing Directories – The `rmdir` command is used to remove empty directories. If a directory contains files, it must first be emptied before removal.

- Moving Directories – The `mv` command allows users to move directories from one location to another or rename them.

- Copying Directories – Directories can be copied using the `cp -r` command. The `-r` flag ensures that all files and subdirectories are copied recursively.

- Viewing Directory Structure – The `tree` command can display the directory structure in a tree-like format, making it easier to visualize the organization of files and subdirectories.

Efficient directory management is crucial for maintaining an organized and functional system. Proper use of directory-related commands ensures that users can access, modify, and delete files as needed, while keeping the file system tidy and easy to navigate.
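The directory operations above can be tried out safely in a scratch area. This is a sketch under assumptions: the directory names (`projects`, `site`, `assets`, `website`) are invented for the example, and `tree` is omitted because it is not installed on every system.

```shell
work=$(mktemp -d)
cd "$work" || exit 1

mkdir -p projects/site/assets        # -p also creates missing parent directories
: > projects/site/index.html         # put one file inside

# rmdir only removes empty directories, so this attempt is expected to fail.
if rmdir projects/site 2>/dev/null; then
    rmdir_result=removed
else
    rmdir_result=refused
fi
echo "rmdir on a non-empty directory: $rmdir_result"

rmdir projects/site/assets           # this one is empty, so it succeeds
mv projects/site projects/website    # rename (or move) a directory
listing=$(ls projects)
echo "contents of projects: $listing"

cd / && rm -r "$work"                # clean up
```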

Essential Networking Concepts in Linux

Networking is a critical aspect of system administration, enabling communication between devices and sharing resources across a network. Understanding key networking concepts is essential for managing connections, troubleshooting network issues, and ensuring smooth data transfer. This includes concepts such as IP addressing, routing, and various network protocols, which form the foundation for establishing and maintaining reliable connections.

Some fundamental networking concepts include:

- IP Addressing – Every device on a network is identified by an IP address. These addresses can be either IPv4 or IPv6, and understanding how to configure and manage them is vital for proper communication between devices.

- Subnetting – Subnetting divides a large network into smaller, more manageable sub-networks. This allows for better organization, improved performance, and more efficient use of IP addresses.

- Routing – Routers direct network traffic between different networks. Understanding how routing tables work and how to configure static and dynamic routes is essential for managing traffic efficiently.

- Network Interfaces – Each physical or virtual network connection on a device is handled through network interfaces. Admins need to configure and manage these interfaces to ensure proper connectivity.

- DNS – The Domain Name System (DNS) resolves domain names to IP addresses, enabling users to access websites and services using human-readable names instead of numerical addresses.

- Firewalls and Security – Firewalls control the flow of network traffic based on predefined security rules. Understanding how to configure firewalls is essential for protecting systems from unauthorized access and attacks.

- Network Troubleshooting – Common tools like `ping`, `netstat`, and `traceroute` help diagnose connectivity issues and identify network problems.

By mastering these networking concepts, administrators can ensure that their systems are properly configured, secure, and able to communicate effectively across networks. Understanding how to troubleshoot and maintain network connections is a vital skill for any system administrator.
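Subnetting in particular is easier to follow with a worked calculation. The sketch below uses plain shell arithmetic to derive the network address, broadcast address, and usable host count for one IPv4 address. The address `192.168.10.77`, the prefix length `/26`, and the helper `to_dotted` are assumptions chosen for illustration.

```shell
ip=192.168.10.77   # example address (an assumption for illustration)
prefix=26          # example prefix length

# Split the dotted quad into four octets and pack them into one 32-bit number.
old_ifs=$IFS; IFS=.
set -- $ip
IFS=$old_ifs
addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))

# The mask has `prefix` leading one-bits; the network address keeps only those bits.
mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
net=$(( addr & mask ))
bcast=$(( net | (~mask & 0xFFFFFFFF) ))   # broadcast: all host bits set to one

# Convert a 32-bit number back to dotted-quad notation.
to_dotted() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

network=$(to_dotted "$net")
broadcast=$(to_dotted "$bcast")
hosts=$(( (1 << (32 - prefix)) - 2 ))     # all addresses minus network and broadcast
echo "$ip/$prefix -> network $network, broadcast $broadcast, $hosts usable hosts"
```

A /26 prefix leaves 6 host bits, so each subnet spans 64 addresses, and the address ending in 77 falls into the block that starts at 64.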

System Management Tools Explained

System management tools are essential for maintaining the health and performance of a computer system. They provide administrators with the ability to monitor, configure, and troubleshoot various system components, from hardware to software. These tools help ensure that the system operates smoothly, securely, and efficiently, allowing for quick resolution of issues and the optimization of resources.

Monitoring and Performance Tools

Monitoring tools are designed to track system performance in real time. They provide valuable information about CPU usage, memory utilization, disk space, and network activity. These tools allow administrators to identify potential bottlenecks or performance issues that may impact system efficiency.

- top – Displays real-time information about system processes, CPU usage, memory usage, and more.

- htop – An enhanced version of `top`, providing a more user-friendly interface and additional features for monitoring processes.

- free – Displays information about available and used memory, helping administrators monitor system resources.

Configuration and Maintenance Tools

Configuration tools help manage system settings, software packages, and services. These tools allow administrators to configure network settings, install software, manage user accounts, and automate routine tasks. Proper configuration ensures that the system functions according to user requirements and security best practices.

- systemctl – A command-line tool for managing system services and processes, including starting, stopping, and enabling services.

- ufw – A simple command-line firewall tool used to configure network security settings and restrict unauthorized access to system resources.

- crontab – Used for scheduling repetitive tasks, allowing administrators to automate system maintenance tasks.

These management tools are critical for day-to-day administration, helping to streamline processes and ensure a smooth user experience. Whether it’s monitoring system health, configuring services, or automating tasks, understanding and using the right tools is essential for effective system management.

Common Pitfalls in Linux Essentials Exam

When preparing for a certification or proficiency test in system administration, many candidates face similar challenges. These obstacles often arise from misunderstanding key concepts, skipping important details, or misapplying commands and procedures. Recognizing these common pitfalls can help you better prepare and avoid mistakes that might hinder your progress during the assessment.

Misunderstanding Command Syntax

One of the most frequent mistakes is not fully grasping the correct syntax for commands. Even small errors in spelling, missing arguments, or incorrect switches can lead to unexpected outcomes. It’s crucial to practice commands and understand their specific usage to avoid this issue. Testing commands in a safe environment and reviewing man pages can prevent syntax-related errors during the assessment.

- Example: Forgetting to include the `-r` flag when using the `cp` command on a directory causes `cp` to skip that directory with an error, so nothing inside it is copied.

- Tip: Always double-check the command structure and any required flags before execution.
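The effect of a missing flag is easy to observe for yourself. The following sketch, which uses made-up paths under a temporary directory, runs `cp` on a directory with and without `-r` and records what happened.

```shell
work=$(mktemp -d)
mkdir -p "$work/src/sub"
echo data > "$work/src/sub/file.txt"

# Without -r, cp declines to copy a directory and exits with a non-zero status.
if cp "$work/src" "$work/copy_plain" 2>/dev/null; then
    plain=copied
else
    plain=skipped
fi

# With -r, the directory and everything below it is copied.
cp -r "$work/src" "$work/copy_recursive"
if [ -f "$work/copy_recursive/sub/file.txt" ]; then
    recursive=complete
else
    recursive=incomplete
fi

echo "cp without -r: $plain; cp -r: $recursive"
rm -r "$work"
```

Checking the exit status, as the `if` statements do here, is a quick way to confirm whether a command did what you expected.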

Neglecting Permissions and Ownership

Another common pitfall involves neglecting file permissions and ownership. In a test environment, not paying attention to who owns a file or the permissions granted to it can lead to errors when trying to access or modify files. Properly understanding how to manage permissions is essential for effective system management.

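- Example: Failing to set the correct file permissions may prevent you from reading or editing a file. Belonging to an administrative group does not bypass file permissions by itself; the command still has to run with elevated privileges, for example through `sudo`.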

- Tip: Review the `chmod`, `chown`, and `chgrp` commands to manage access control effectively.

Being aware of these common mistakes can help you prepare more effectively and avoid losing valuable time during an assessment. By familiarizing yourself with the tools, syntax, and best practices, you can enhance your performance and gain confidence in your abilities.

Exam Tips for Linux Certification

Preparing for a certification test can be a challenging but rewarding experience. Success in such assessments often requires more than just memorizing commands and concepts; it demands strategic planning, practice, and a clear understanding of what to expect on test day. To help improve your chances of success, consider these practical tips that will guide you through your preparation and testing process.

Focus on Core Concepts

Before diving into specific commands and procedures, it’s essential to have a solid grasp of the foundational principles that underpin the system. Understanding core concepts will not only help you navigate the test more confidently but also enhance your long-term skills as a system administrator.

- Networking fundamentals: Understand key concepts such as IP addressing, subnetting, and basic networking commands.

- File systems: Be comfortable with different types of file systems, managing files, and understanding permissions.

- User management: Familiarize yourself with commands for managing users, groups, and access control.

Practical Hands-On Practice

While theoretical knowledge is important, hands-on experience is equally crucial. Spend as much time as possible practicing commands, configurations, and troubleshooting on a real system or a virtual machine. Simulated environments will help reinforce your learning and ensure that you are comfortable executing tasks under pressure.

- Set up a test environment: Use virtual machines or containers to create a safe space for practice. This will allow you to experiment without worrying about system stability.

- Follow structured practice exams: Utilize mock tests to simulate the exam experience and assess your readiness. These will also help you identify areas that need improvement.

- Use man pages: Be sure you are comfortable navigating man pages (manuals) to look up syntax and options for commands.

Time Management and Strategy

During the actual test, time management is crucial. Don’t spend too much time on any single question or task. If you get stuck, move on and come back to it later. Here are some strategies to help you manage your time effectively:

- Read questions carefully: Ensure that you fully understand what is being asked before you begin. Rushed decisions can lead to avoidable mistakes.

- Prioritize tasks: Complete easier questions first to build confidence, then tackle the more challenging ones.

- Review answers: If time permits, go back and double-check your responses to ensure that you’ve answered everything correctly.

By following these tips, you can approach your certification test with confidence, improving both your preparation and performance. Combining knowledge, hands-on practice, and strategic thinking will help you succeed in any certification assessment.

Understanding Processes and Services

In any computing environment, the smooth functioning of a system depends heavily on how various tasks and applications are managed and executed. A process refers to an instance of a running program, while services are long-running background processes that provide essential functions for the system. Understanding how processes are initiated, managed, and monitored is key to maintaining a system’s performance and stability.

Every time a program is executed, a new process is created. Each process has its own set of resources and identifiers, such as a process ID (PID), and may run in the foreground or in the background. Similarly, services, which are typically programs designed to run continuously, provide crucial system functionalities like network communication or scheduled tasks. Properly managing these processes and services ensures that the system runs efficiently and securely.

- Process Management: Understanding how processes are launched, monitored, and terminated is vital for system administration. Tools like `ps` and `top` allow users to view the processes currently running, while commands like `kill` can be used to stop processes that are no longer necessary or are consuming excessive resources.

- Service Control: Services are usually managed by a system’s service manager (like `systemd`). Administrators must understand how to start, stop, and restart services, as well as how to configure them to start automatically when the system boots.

- Resource Allocation: Every running process and service uses system resources such as memory and CPU time. Being able to monitor and optimize resource usage is essential for maintaining system performance, especially in multi-user or server environments.

Effective management of processes and services requires familiarity with the relevant tools and commands. In addition to basic process management, it’s important to understand how to configure services to run at startup and how to troubleshoot any issues that may arise. By mastering these concepts, you can ensure that the system remains responsive and secure under various workloads.
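The process lifecycle described above can be sketched with a harmless background job. Here `sleep 300` stands in for any long-running program; service management with `systemd` is not shown because it depends on the system and usually needs elevated privileges.

```shell
sleep 300 &                 # start a long-running process in the background
pid=$!                      # $! holds the PID of the most recent background job
ps -p "$pid"                # show just that process

kill "$pid"                 # send SIGTERM, the default termination signal

# wait reaps the process and returns its exit status;
# for a process ended by a signal this is greater than 128 (typically 143 for SIGTERM).
status=0
wait "$pid" 2>/dev/null || status=$?
echo "exit status after kill: $status"

# kill -0 sends no signal; it only checks whether the process still exists.
if kill -0 "$pid" 2>/dev/null; then state=running; else state=gone; fi
echo "process $pid is $state"
```

If a process ignores SIGTERM, `kill -9` (SIGKILL) forces termination, but it gives the program no chance to clean up, so it is best kept as a last resort.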

Handling System Logs

System logs play a critical role in monitoring and troubleshooting any computing environment. These logs record various activities, including system events, user actions, errors, and other important events that occur while the system is running. By effectively managing and analyzing these logs, administrators can ensure the smooth operation of the system, identify potential issues, and enhance security.

Understanding where logs are stored, how to access them, and how to interpret them is essential for maintaining a system. Logs are typically stored in plain text files and are categorized based on their content, such as authentication logs, system activity logs, or application logs. Regular monitoring of these logs can help in early detection of problems, system misuse, or security breaches.

Accessing System Logs

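Most system logs are stored in the `/var/log/` directory. Exact file names vary by distribution; the examples below follow Debian and Ubuntu conventions, while Red Hat-based systems use names such as `/var/log/messages` and `/var/log/secure` instead. Some of the most common log files include: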

- /var/log/syslog – Contains general system information and messages from various system components.

- /var/log/auth.log – Records authentication events, such as login attempts and sudo usage.

- /var/log/dmesg – Stores kernel ring buffer messages, providing a snapshot of system hardware events.

- /var/log/boot.log – Records the system’s boot process, which can be helpful for troubleshooting startup issues.

Analyzing and Managing Logs

Once the logs are accessed, administrators can use various tools to analyze and manage them effectively. Commands like `cat`, `less`, `grep`, and `tail` are commonly used for reading and filtering log files. For example, using `grep` to search for specific keywords in a log file can help identify issues quickly. Additionally, tools like `logrotate` are often used to manage log file sizes by archiving or compressing old logs, ensuring the system doesn’t run out of disk space.

By regularly reviewing system logs, administrators can ensure the system operates smoothly, detect potential issues early, and maintain security and integrity. Proper log management is a key skill for anyone working in system administration or security.
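To practice the filtering commands without needing read access to real logs, the sketch below builds a small sample file and searches it. The log lines, host names, and user names are invented for illustration and only imitate the general shape of an authentication log.

```shell
work=$(mktemp -d)
log="$work/auth.log"

# Made-up sample entries, standing in for a real file such as /var/log/auth.log.
cat > "$log" <<'EOF'
Jan 10 09:15:01 host sshd[101]: Accepted password for alice from 10.0.0.5
Jan 10 09:16:12 host sshd[102]: Failed password for bob from 10.0.0.9
Jan 10 09:16:20 host sshd[103]: Failed password for bob from 10.0.0.9
Jan 10 09:20:44 host sudo: alice : COMMAND=/usr/bin/apt update
EOF

grep 'Failed password' "$log"                 # show only the failed logins
failed=$(grep -c 'Failed password' "$log")    # count them instead of printing them
last=$(tail -n 1 "$log")                      # the most recent entry
echo "failed logins: $failed"
echo "latest entry:  $last"

rm -r "$work"
```

On a live system, `tail -f` on a log file follows new entries as they are written, which is useful while reproducing a problem.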

Filesystems and Disk Management

Effective management of storage is a vital component of any operating system. The way data is organized, stored, and accessed on a system’s disks can significantly impact performance, security, and ease of use. A disk is typically divided into partitions, and each partition is formatted with a filesystem that determines how data is stored and retrieved. Understanding how filesystems work and how to manage disk space is crucial for maintaining an efficient and organized system.

Disk management involves tasks such as creating, resizing, or deleting partitions, as well as formatting disks with appropriate filesystems. Administrators must be familiar with the various filesystem types, how to mount and unmount partitions, and how to monitor disk usage. Efficient disk space utilization is key to preventing system slowdowns and ensuring that critical data is available when needed.

Filesystem Types and Mounting

There are several types of filesystems, each with its own features and advantages. Some of the most common filesystems include:

- ext4 – One of the most widely used filesystems, known for its stability and performance. It is often the default choice for many systems.

- xfs – A high-performance filesystem, particularly useful for handling large files and databases.

- btrfs – A newer filesystem that offers advanced features such as snapshotting and data integrity checks.

- FAT32 – Commonly used for external drives or removable media, as it is widely compatible with many systems.

When working with filesystems, it’s essential to understand how to mount and unmount partitions. Mounting is the process of attaching a partition or disk to the file hierarchy, making it accessible to the system. The `mount` command is used to mount a filesystem, while the `umount` command is used to safely detach it. Understanding mount points and how to configure them is essential for efficient disk management.

Disk Partitioning and Monitoring

Partitioning divides a physical disk into multiple logical sections, each of which can be managed independently. Command-line tools like `fdisk` and `parted`, or the graphical `gparted`, can be used to partition disks. Proper partitioning helps in organizing data and isolating critical system files from user data, so that one area filling up does not exhaust the space the rest of the system needs.

Once the disk is partitioned, it’s important to monitor disk usage regularly to ensure optimal performance. Commands like `df` and `du` provide valuable information about disk space usage, helping administrators identify large files or partitions that may need to be cleaned up or expanded. Tools such as `lsblk` and `blkid` can be used to view disk and partition information, while `smartctl` helps monitor the health of hard drives, providing early warnings for potential hardware failures.

By understanding the intricacies of filesystems and disk management, system administrators can optimize storage performance, ensure data integrity, and prevent potential issues related to disk space or system crashes.
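The monitoring commands can be tried without any special privileges. This sketch creates a small file in a temporary directory and then inspects usage with `df` and `du`; the file name `blob.bin` is an assumption, and the reported numbers will differ from system to system.

```shell
work=$(mktemp -d)
# Create a 64 KiB file so du has something to measure.
dd if=/dev/zero of="$work/blob.bin" bs=1024 count=64 2>/dev/null

df -P "$work"                         # -P: portable output, one line per filesystem
# Column 5 of the data row is the percentage of the filesystem in use.
used_pct=$(df -P "$work" | awk 'NR==2 {print $5}')
echo "filesystem holding $work is $used_pct full"

du -sk "$work"                        # -s: one total, -k: in 1024-byte units
dir_kb=$(du -sk "$work" | awk '{print $1}')
echo "directory uses about ${dir_kb} KiB"

rm -r "$work"
```

In short, `df` reports on whole filesystems while `du` adds up the space used under a specific path, so the two are usually used together when tracking down what is filling a disk.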

Backup and Recovery Techniques

Data loss can occur at any time due to hardware failures, software issues, or human errors. Therefore, it is essential to implement a robust backup and recovery strategy to ensure that critical information is not lost and can be restored when needed. Backup refers to creating copies of important data to secure it against unforeseen events, while recovery involves restoring that data when required. Understanding various techniques for backing up and recovering data is fundamental for system administrators to maintain data integrity and minimize downtime.

There are different types of backups, each serving a specific purpose, ranging from full backups that copy everything to incremental backups that capture only changes made since the last backup. Choosing the right strategy depends on the organization’s needs, the criticality of the data, and available resources. Moreover, having a clear recovery process in place ensures that the data can be quickly restored in case of an emergency, minimizing disruption to operations.

Backup Types and Strategies

Several backup strategies can be employed depending on the frequency of changes and the importance of data:

- Full Backup – A complete copy of all files and data, providing the most comprehensive backup solution. It is often used as the base for incremental backups.

- Incremental Backup – This type of backup saves only the changes made since the last backup, making it more efficient in terms of storage space and time.

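- Differential Backup – Similar to incremental, but it saves all changes made since the last full backup, rather than the last backup of any kind. It requires more storage space than incremental backups but offers faster recovery, because a restore needs only the last full backup and the most recent differential rather than every incremental in the chain.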

Implementing a combination of these strategies, such as a full backup followed by incremental backups, can provide both efficiency and a strong safety net for recovering data.
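The difference between incremental and differential selection comes down to which reference time is used. The following is a simplified sketch that selects files by modification time; the timestamps, file names, and the helper `changed_since` are hypothetical, and real backup tools generally track more than modification time (for example, this approach cannot detect deleted files).

```python
import os
import tempfile


def changed_since(root: str, since: float) -> list[str]:
    """Return files under `root` whose modification time is later than `since`."""
    changed = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.getmtime(path) > since:
                changed.append(path)
    return sorted(changed)


def demo() -> tuple[list[str], list[str]]:
    """Build a throwaway tree with fixed modification times and select files."""
    last_full, last_backup = 150.0, 250.0  # hypothetical times, seconds since epoch
    with tempfile.TemporaryDirectory() as root:
        for name, mtime in (("a.txt", 100), ("b.txt", 200), ("c.txt", 300)):
            path = os.path.join(root, name)
            open(path, "w").close()
            os.utime(path, (mtime, mtime))
        # Differential: everything changed since the last FULL backup.
        differential = [os.path.basename(p) for p in changed_since(root, last_full)]
        # Incremental: everything changed since the last backup of ANY kind.
        incremental = [os.path.basename(p) for p in changed_since(root, last_backup)]
    return differential, incremental


if __name__ == "__main__":
    print(demo())
```

In this example the differential set contains `b.txt` and `c.txt`, while the incremental set contains only `c.txt`, which shows why differentials grow over time and incrementals stay small.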

Recovery Planning and Tools

Having a detailed recovery plan is just as important as the backup itself. The recovery plan should define the steps required to restore data, including which tools and processes will be used. It should also specify a recovery time objective (RTO) and a recovery point objective (RPO) that align with business continuity requirements. The RTO is the maximum acceptable time to restore operations after a failure, while the RPO is the maximum amount of data, measured as a span of time before the failure, that the organization can afford to lose.
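One way to reason about these objectives is to compare them with the backup schedule. The sketch below assumes the simplest model, in which a failure just before the next backup loses one full backup interval of data; the function names and the figures used are hypothetical.

```python
from datetime import timedelta


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """Worst case, one full backup interval of data is lost, so it must fit in the RPO."""
    return backup_interval <= rpo


def meets_rto(estimated_restore_time: timedelta, rto: timedelta) -> bool:
    """The time a restore is expected to take must fit within the RTO."""
    return estimated_restore_time <= rto


if __name__ == "__main__":
    # Hypothetical figures: nightly backups against a 4-hour RPO,
    # and a 2-hour restore against an 8-hour RTO.
    print(meets_rpo(timedelta(hours=24), rpo=timedelta(hours=4)))
    print(meets_rto(timedelta(hours=2), rto=timedelta(hours=8)))
```

Under this model, nightly backups cannot satisfy a 4-hour RPO, which would point toward more frequent incremental backups.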

Common tools for backup and recovery include:

- rsync – A powerful command-line tool that allows efficient data transfer and backup between systems. It is often used for creating incremental backups.

- tar – A widely used archiving tool that bundles files and directories into a single archive, which can optionally be compressed with tools such as gzip, bzip2, or xz.

- Backup software solutions – Many commercial and open-source software tools are available for automating backups and managing recovery processes, such as Bacula, Amanda, and Duplicity.
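For illustration, the archive format that `tar` produces can also be written from Python's standard `tarfile` module. This is a minimal sketch, not a replacement for the tools listed above; the directory and file names are made up for the example.

```python
import os
import tarfile
import tempfile


def create_archive(source_dir: str, archive_path: str) -> list[str]:
    """Write a gzip-compressed tar archive of source_dir and return its member names."""
    top_level = os.path.basename(os.path.normpath(source_dir))
    with tarfile.open(archive_path, "w:gz") as archive:
        archive.add(source_dir, arcname=top_level)
    # Re-open the archive to confirm it is readable and list what it contains.
    with tarfile.open(archive_path, "r:gz") as archive:
        return archive.getnames()


def demo() -> list[str]:
    """Archive a throwaway directory holding one file."""
    with tempfile.TemporaryDirectory() as tmp:
        source = os.path.join(tmp, "data")
        os.mkdir(source)
        with open(os.path.join(source, "notes.txt"), "w") as handle:
            handle.write("hello")
        return create_archive(source, os.path.join(tmp, "backup.tar.gz"))


if __name__ == "__main__":
    print(demo())
```

Listing the members immediately after writing is a cheap sanity check that the archive was created and can be opened.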

Regularly testing the recovery process is essential to ensure that backups are functioning as intended and that the recovery process can be completed within the desired time frame.
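A restore test can be as simple as comparing checksums of the original and restored files. The sketch below assumes both trees are available on the same machine and uses `shutil.copytree` as a stand-in for a real restore; the helper names are illustrative.

```python
import hashlib
import os
import shutil
import tempfile


def checksums(root: str) -> dict[str, str]:
    """Map each file's path relative to root to its SHA-256 digest."""
    result = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Reads the whole file at once; very large files would be read in chunks.
            with open(path, "rb") as handle:
                digest = hashlib.sha256(handle.read()).hexdigest()
            result[os.path.relpath(path, root)] = digest
    return result


def restore_matches(original: str, restored: str) -> bool:
    """True if both trees contain the same files with identical contents."""
    return checksums(original) == checksums(restored)


def demo() -> tuple[bool, bool]:
    """Copy a tree (standing in for a restore), then corrupt the copy."""
    with tempfile.TemporaryDirectory() as tmp:
        original = os.path.join(tmp, "original")
        restored = os.path.join(tmp, "restored")
        os.mkdir(original)
        with open(os.path.join(original, "db.txt"), "w") as handle:
            handle.write("records")
        shutil.copytree(original, restored)
        intact = restore_matches(original, restored)
        with open(os.path.join(restored, "db.txt"), "w") as handle:
            handle.write("corrupted")
        damaged = restore_matches(original, restored)
    return intact, damaged


if __name__ == "__main__":
    print(demo())
```

The comparison succeeds for the untouched copy and fails once a restored file differs, which is the signal a recovery test is meant to surface.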

Security Fundamentals for Assessments

Securing a system is one of the most critical aspects of managing any computing environment. It involves implementing measures to protect the integrity, confidentiality, and availability of both data and resources. Understanding the core principles of security is essential for preparing for assessments, as it forms the foundation for defending systems against potential threats and vulnerabilities. Effective security requires a layered approach, including preventive, detective, and corrective controls to minimize risks and protect the system from unauthorized access, data breaches, and attacks.

In preparation for assessments, a strong grasp of security concepts is crucial. Familiarity with user authentication, permission settings, firewalls, and encryption will help ensure a system is properly protected. Additionally, knowing how to manage security updates and monitor logs for suspicious activities is essential for maintaining a secure environment.

Key Security Concepts

The following concepts are fundamental when studying for security-related assessments:

- Access Control – Ensures that only authorized users and systems can access specific resources.

- Encryption – Protects data by converting it into ciphertext that is unreadable without the correct key, so that only authorized parties can decrypt it.

- Firewalls – Act as barriers between a trusted network and untrusted networks, controlling incoming and outgoing traffic based on security rules.

- Intrusion Detection Systems (IDS) – Monitor systems and networks for suspicious activities and provide alerts when potential threats are detected.

- System Updates and Patch Management – Regularly updating software to fix vulnerabilities and keep the system secure from known exploits.
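Access control on a POSIX system is often audited by inspecting file permission bits. The following sketch, which assumes a POSIX permission model, reports a file's permission string and checks whether anyone on the system may write to it; the function names and the file used are hypothetical.

```python
import os
import stat
import tempfile


def permission_string(path: str) -> str:
    """Return the mode in `ls -l` style, for example '-rw-r--r--'."""
    return stat.filemode(os.stat(path).st_mode)


def is_world_writable(path: str) -> bool:
    """True if the write bit for 'other' users is set on path."""
    return bool(os.stat(path).st_mode & stat.S_IWOTH)


def demo() -> tuple[str, bool, bool]:
    """Check a throwaway file before and after loosening its permissions."""
    with tempfile.TemporaryDirectory() as root:
        path = os.path.join(root, "report.txt")
        open(path, "w").close()
        os.chmod(path, 0o644)  # owner read/write, everyone else read-only
        listing, before = permission_string(path), is_world_writable(path)
        os.chmod(path, 0o666)  # read/write for everyone
        after = is_world_writable(path)
    return listing, before, after


if __name__ == "__main__":
    print(demo())
```

World-writable files are a common finding in permission audits because any local user can modify them.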

Security Tools and Techniques

Several tools and techniques are commonly used to enhance security. These tools can help identify vulnerabilities, monitor systems, and secure sensitive data. Below is a table of important tools and their functions:

| Tool | Purpose |
|---|---|
| iptables | A command-line firewall utility used to configure network packet filtering rules. |
| OpenSSL | A toolkit for implementing secure communication protocols, including SSL/TLS encryption. |
| Fail2Ban | An intrusion prevention software framework that helps protect against brute-force attacks by banning IPs with multiple failed login attempts. |
| SELinux | A security extension for enforcing access control policies and isolating system resources to prevent unauthorized access. |
| Auditd | Used for auditing system events to track suspicious activities and maintain logs for security analysis. |

Mastering these tools, along with understanding the core principles of access control and encryption, will equip you with the knowledge necessary to secure a system effectively and perform well in security-focused assessments.
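To show the idea behind banning addresses after repeated failed logins, here is a simplified sketch that counts failures per IP address in log lines. It is not how Fail2Ban itself is implemented: the log format is an assumption modelled on typical OpenSSH messages, the sample lines and addresses are invented, and there is no time window or actual firewall action.

```python
import re
from collections import Counter

# Assumed log format, modelled on typical OpenSSH "Failed password" lines.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?\S+ from (\d{1,3}(?:\.\d{1,3}){3})"
)

# Hypothetical sample using documentation-reserved IP addresses.
SAMPLE_LOG = [
    "Jan 10 10:00:01 host sshd[101]: Failed password for root from 203.0.113.5 port 50022 ssh2",
    "Jan 10 10:00:03 host sshd[101]: Failed password for invalid user admin from 203.0.113.5 port 50023 ssh2",
    "Jan 10 10:00:05 host sshd[101]: Failed password for root from 203.0.113.5 port 50024 ssh2",
    "Jan 10 10:01:00 host sshd[102]: Failed password for alice from 198.51.100.7 port 40000 ssh2",
    "Jan 10 10:02:00 host sshd[103]: Accepted password for alice from 198.51.100.7 port 40001 ssh2",
]


def ips_to_ban(log_lines: list[str], max_retries: int = 3) -> list[str]:
    """Return the addresses with at least `max_retries` failed logins, sorted."""
    failures = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            failures[match.group(1)] += 1
    return sorted(ip for ip, count in failures.items() if count >= max_retries)


if __name__ == "__main__":
    print(ips_to_ban(SAMPLE_LOG))
```

In the sample, only the address with three failures crosses the threshold; the address with a single failure followed by a successful login is left alone.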